Image foreground and background segmentation method, image foreground and background segmentation network model training method, and image processing method and device

A background segmentation and network model technology, applied in image data processing, image analysis, image enhancement, and related fields, that addresses problems such as high training cost, a complicated training process, and long training time.


Examples

Embodiment 1

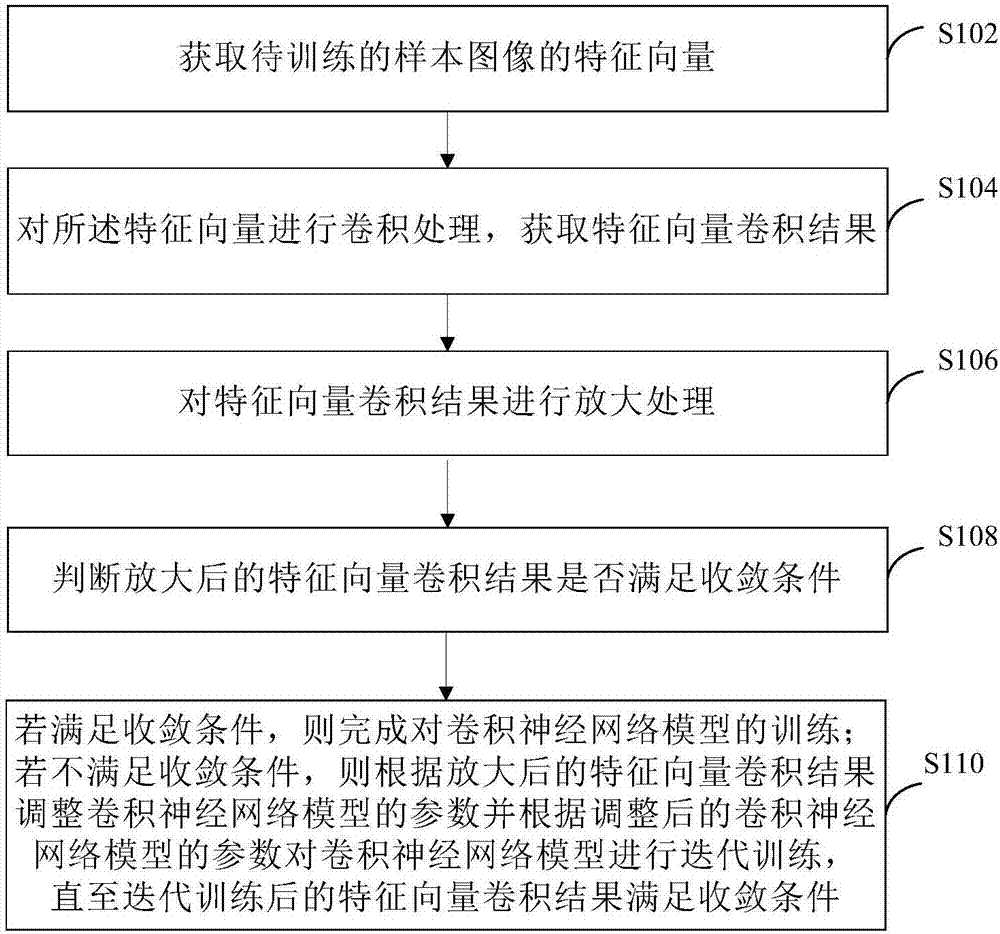

[0061] Referring to Figure 1, a flowchart of the steps of a method for training an image foreground and background segmentation network model according to Embodiment 1 of the present invention is shown.

[0062] The training method for the image foreground and background segmentation network model of this embodiment comprises the following steps:

[0063] Step S102: Obtain feature vectors of sample images to be trained.

[0064] The sample image includes foreground annotation information and background annotation information. That is, the sample image to be trained has been marked with a foreground area and a background area. In the embodiment of the present invention, the foreground area may be the area where the subject of the image is located, such as the area occupied by a person; the background area may be all or part of the remaining area outside the subject.
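The annotation scheme described in [0064] can be illustrated with a minimal sketch. The excerpt does not specify how annotations are stored, so the per-pixel label grid, the `AnnotatedSample` class, the `label_counts` helper, and the `UNLABELED` value are all hypothetical names chosen for illustration. The `UNLABELED` value is one assumed way to model the statement that the background area may cover only part of the non-subject region.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical label values; the source does not define an encoding.
FOREGROUND = 1   # pixel belongs to the subject (e.g. a person)
BACKGROUND = 0   # pixel is annotated as background
UNLABELED = -1   # pixel outside the subject that was not annotated as background

VALID_LABELS = {FOREGROUND, BACKGROUND, UNLABELED}


@dataclass
class AnnotatedSample:
    """A sample image plus a per-pixel foreground/background annotation."""
    pixels: List[List[int]]   # grayscale intensities, one row per list
    labels: List[List[int]]   # same shape as pixels, values from VALID_LABELS

    def __post_init__(self) -> None:
        # The annotation grid must match the image shape row by row.
        if len(self.pixels) != len(self.labels):
            raise ValueError("pixels and labels must have the same number of rows")
        for pixel_row, label_row in zip(self.pixels, self.labels):
            if len(pixel_row) != len(label_row):
                raise ValueError("pixels and labels must have the same row lengths")
            if any(value not in VALID_LABELS for value in label_row):
                raise ValueError("labels must be FOREGROUND, BACKGROUND or UNLABELED")


def label_counts(sample: AnnotatedSample) -> Dict[int, int]:
    """Count how many pixels carry each annotation value."""
    counts = {FOREGROUND: 0, BACKGROUND: 0, UNLABELED: 0}
    for row in sample.labels:
        for value in row:
            counts[value] += 1
    return counts


# A 2x3 sample: two subject pixels, two annotated background pixels,
# and two pixels left unannotated.
sample = AnnotatedSample(
    pixels=[[10, 20, 30], [40, 50, 60]],
    labels=[[FOREGROUND, FOREGROUND, BACKGROUND],
            [BACKGROUND, UNLABELED, UNLABELED]],
)
counts = label_counts(sample)
```

Here `counts` maps each label value to its pixel count, which is a quick way to check that a sample actually carries both kinds of annotation before it is used for training.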

[0065] In a ...

Embodiment 2

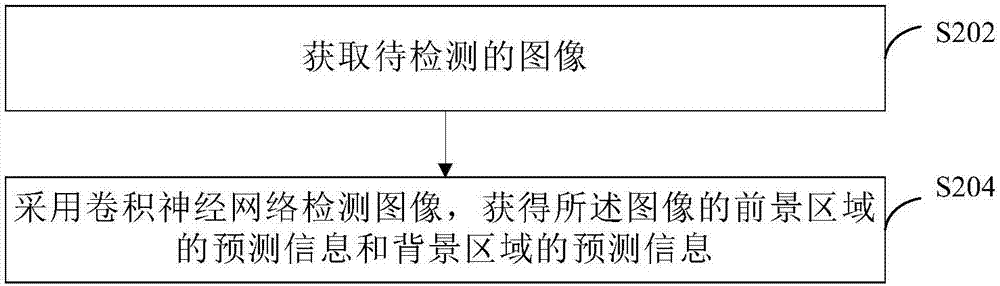

[0147] Referring to Figure 2, a flowchart of the steps of a method for image foreground and background segmentation according to Embodiment 2 of the present invention is shown.

[0148] In this embodiment, the trained image foreground and background segmentation network model described in Embodiment 1 is used to detect the image and segment its foreground and background. The image foreground and background segmentation method of this embodiment comprises the following steps:

[0149] Step S202: Obtain an image to be detected.

[0150] The image may be a still image or an image in a video. In one optional solution, the image in the video is an image in a live video. In another optional solution, the images in the video include multi-frame images in the video stream, because there are more contextual associations in the multi-frame images in the video stream, and the image segmentation method shown in Embodiment 1 is used to The convolutional ne...
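The input options in Step S202 (a still image, or one or more frames from a video stream) can be sketched as a small normalization step that always hands the segmentation stage a list of images. This is only an illustration under stated assumptions: `StillImage`, `VideoStream`, `images_to_detect`, and the `max_frames` parameter are hypothetical names, and the excerpt does not describe how the source actually reads or buffers frames.

```python
from dataclasses import dataclass
from typing import List, Optional, Union

Image = List[List[int]]  # hypothetical representation: rows of grayscale pixels


@dataclass
class StillImage:
    """A single image to be detected."""
    pixels: Image


@dataclass
class VideoStream:
    """Consecutive frames taken from a video, e.g. a live video."""
    frames: List[Image]


def images_to_detect(source: Union[StillImage, VideoStream],
                     max_frames: Optional[int] = None) -> List[Image]:
    """Return the images that a segmentation step would receive.

    A still image yields one image. A video stream yields its frames in order,
    optionally limited to the first `max_frames` frames.
    """
    if isinstance(source, StillImage):
        return [source.pixels]
    if max_frames is None:
        return list(source.frames)
    return list(source.frames[:max_frames])


frame_a = [[0, 1], [2, 3]]
frame_b = [[4, 5], [6, 7]]
frame_c = [[8, 9], [10, 11]]

single = images_to_detect(StillImage(frame_a))
first_two = images_to_detect(VideoStream([frame_a, frame_b, frame_c]), max_frames=2)
```

Keeping the frames in order preserves the contextual association between consecutive frames that the paragraph mentions for the multi-frame case.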

Embodiment 3

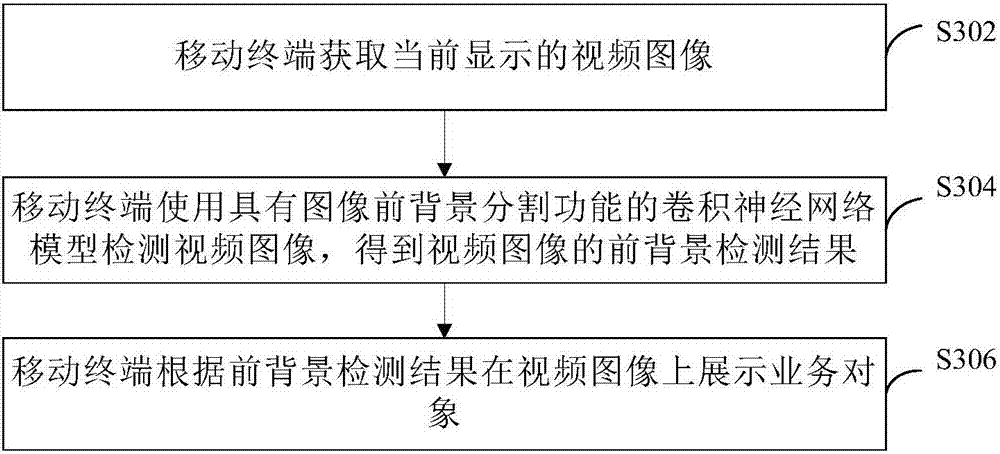

[0156] Referring to Figure 3, a flowchart of the steps of a video image processing method according to Embodiment 3 of the present invention is shown.

[0157] The video image processing method in this embodiment can be executed by any device with data collection, processing, and transmission functions, including but not limited to mobile terminals and PCs. This embodiment takes a mobile terminal as an example to describe the method for processing a service object in a video image provided by the embodiment of the present invention; other devices may be implemented with reference to this embodiment.

[0158] The video image processing method of the present embodiment comprises the following steps:

[0159] Step S302: the mobile terminal acquires the currently displayed video image.

[0160] This embodiment takes as an example obtaining the video image of the currently playing video from a live application, and takes the processing of a single video image as an example, but those s...
