Dense optical flow estimation method and device

A dense optical flow estimation technique, applied in the field of computer vision, that addresses problems such as poor accuracy, time-consuming search and query operations, and difficulty in obtaining dense optical flow, and that produces results quickly.

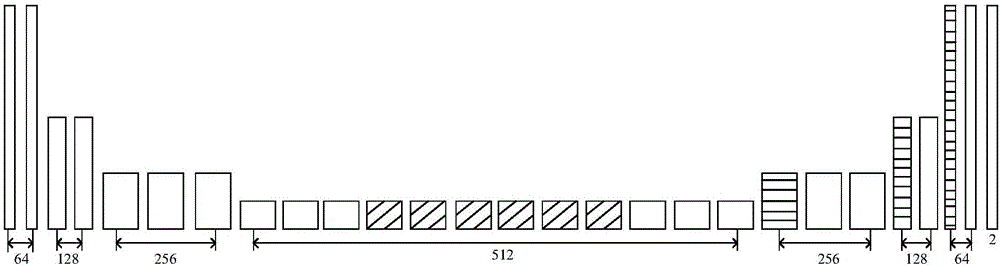

Examples

Embodiment 1

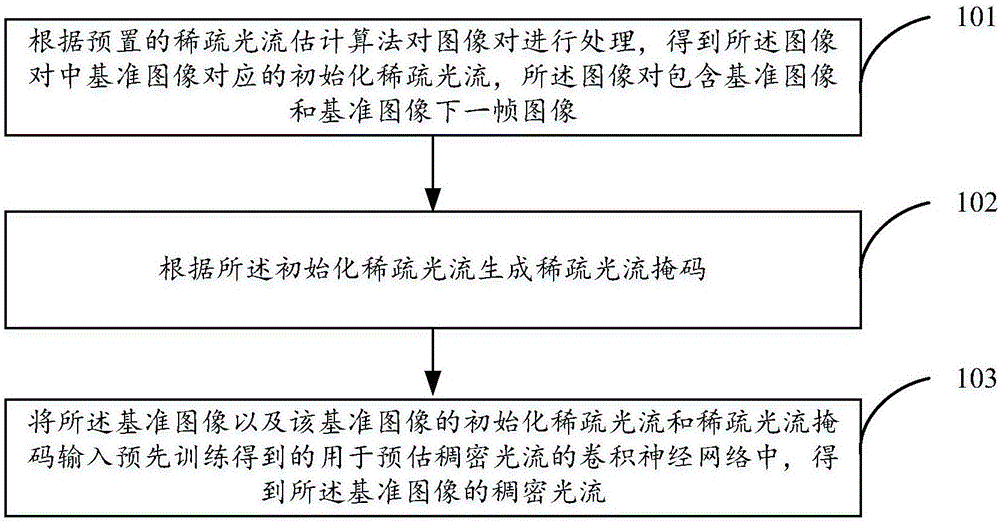

[0025] Referring to Figure 1, which is a flow chart of the dense optical flow estimation method in the embodiment of the present invention, the method includes:

[0026] Step 101. Process the image pair according to the preset sparse optical flow estimation algorithm to obtain the initialized sparse optical flow corresponding to the reference image in the image pair. The image pair includes the reference image and the next frame image of the reference image.

[0027] Preferably, in the embodiment of the present invention, the sparse optical flow estimation algorithm may be the Lucas-Kanade algorithm. Processing the image pair with the Lucas-Kanade algorithm yields an initialized sparse optical flow that is accurate and quick to compute.
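As an illustration of how a Lucas-Kanade step can produce an initialized sparse optical flow for a reference image, the following is a minimal sketch. It is a single-scale, single-iteration variant written in plain Python; the source does not state which variant, window size, or feature points are used, so the function names `lucas_kanade_at` and `initial_sparse_flow`, the `half_win` parameter, and the list-of-rows image layout are all assumptions made for this example.

```python
def lucas_kanade_at(ref, nxt, x, y, half_win=2):
    """Estimate the flow (u, v) at pixel (x, y) of the reference image.

    ref, nxt: grayscale images as lists of rows (hypothetical layout).
    Returns None when the window has too little texture to solve for flow.
    The caller must keep (x, y) at least half_win + 1 pixels from the border.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for j in range(y - half_win, y + half_win + 1):
        for i in range(x - half_win, x + half_win + 1):
            ix = (ref[j][i + 1] - ref[j][i - 1]) / 2.0  # spatial gradient in x
            iy = (ref[j + 1][i] - ref[j - 1][i]) / 2.0  # spatial gradient in y
            it = nxt[j][i] - ref[j][i]                  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt] in closed form.
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


def initial_sparse_flow(ref, nxt, points, half_win=2):
    """Return {(x, y): (u, v)} for the points where the flow could be solved."""
    flow = {}
    for (x, y) in points:
        result = lucas_kanade_at(ref, nxt, x, y, half_win)
        if result is not None:
            flow[(x, y)] = result
    return flow
```

Points whose window has no usable gradient are left out of the result, which is what makes the flow sparse: only some pixels of the reference image end up carrying a flow vector.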

[0028] In the embodiment of the present invention, the image pair consists of the image whose dense optical flow is to be estimated and the next frame image of that image. The image whose dense optical flow is to be estimated is called the ref...

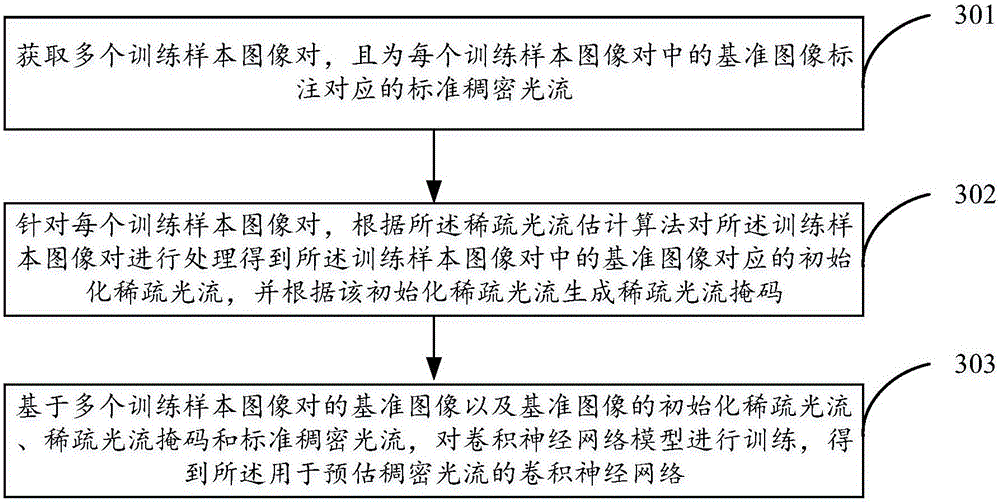

Embodiment 2

[0049] Based on the same idea as the dense optical flow estimation method provided in Embodiment 1, Embodiment 2 of the present invention also provides a dense optical flow estimation device. Its structure is shown in Figure 4 and includes:

[0050] A processing unit 41, configured to process the image pair according to the preset sparse optical flow estimation algorithm to obtain the initialized sparse optical flow corresponding to the reference image in the image pair, the image pair including the reference image and the next frame image of the reference image.

[0051] Preferably, the sparse optical flow estimation algorithm is the Lucas-Kanade algorithm.

[0052] A generating unit 42, configured to generate a sparse optical flow mask according to the initialized sparse optical flow.

[0053] The generating unit 42 is specifically configured to: mark the pixels containing sparse optical flow in the reference image as 1, and mark the pixel...
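The mask generation described for generating unit 42 can be sketched as follows. The source text is truncated after the rule for pixels that contain sparse optical flow, so marking every other pixel as 0 is an assumption of this example, not a statement from the source. The function name `sparse_flow_mask` and the `{(x, y): (u, v)}` dictionary layout for the sparse flow are likewise hypothetical.

```python
def sparse_flow_mask(height, width, sparse_flow):
    """Build a binary mask with the same size as the reference image.

    sparse_flow: dict mapping (x, y) pixel coordinates to (u, v) vectors
    (hypothetical layout). Pixels that carry a flow vector are marked 1.
    ASSUMPTION: all remaining pixels are marked 0; the source is truncated
    before it states this value.
    """
    mask = [[0] * width for _ in range(height)]
    for (x, y) in sparse_flow:
        if 0 <= x < width and 0 <= y < height:
            mask[y][x] = 1
    return mask
```

For a 3-row, 4-column image with a single flow vector at `(x=1, y=2)`, calling `sparse_flow_mask(3, 4, {(1, 2): (0.5, 0.0)})` sets only `mask[2][1]` to 1.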
