Emotional speech recognition system based on PCNN spectrogram feature fusion

A speech recognition and feature fusion technology, applied in speech analysis, character and pattern recognition, instruments, and related fields. It addresses a gap in prior work: few studies combine the time-domain and frequency-domain correlations of speech signals.


Detailed Description of the Embodiments

[0087] The present invention uses Windows 7 as the software development environment and MATLAB R2010a as the programming platform, and adopts the Berlin emotional speech database (German) as the experimental data. The database was recorded by 10 speakers (5 men and 5 women) and covers 7 emotions: calm, fear, disgust, joy, boredom, sadness, and anger, for a total of 800 sentences. In this invention, 494 sentences are selected to form the experimental database. The sentences of 5 speakers are used as the training set; from the remaining sentences, 30 sentences of each emotion are selected, giving a test set of 7 × 30 = 210 sentences.
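The speaker-based split described above can be sketched as follows. This is a minimal illustration, not the patent's procedure: the `Utterance` record, the `split_corpus` helper, the emotion label strings, and the random sampling with a fixed seed are all hypothetical, and "remaining sentences" is read here as the utterances of the five speakers not used for training.

```python
import random
from collections import defaultdict
from typing import NamedTuple

# Hypothetical label set: the seven emotion categories named in [0087].
EMOTIONS = ("calm", "fear", "disgust", "joy", "boredom", "sadness", "anger")


class Utterance(NamedTuple):
    speaker: str   # speaker identifier (hypothetical field)
    emotion: str   # one of EMOTIONS
    path: str      # audio file path (hypothetical field)


def split_corpus(utterances, train_speakers, per_emotion=30, seed=0):
    """Training set: every utterance of train_speakers.
    Test set: per_emotion utterances of each emotion, sampled from the other speakers."""
    train = [u for u in utterances if u.speaker in train_speakers]
    remaining = defaultdict(list)
    for u in utterances:
        if u.speaker not in train_speakers:
            remaining[u.emotion].append(u)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    test = []
    for emo in EMOTIONS:
        pool = remaining[emo]
        if len(pool) < per_emotion:
            raise ValueError(f"only {len(pool)} '{emo}' utterances left, need {per_emotion}")
        test.extend(rng.sample(pool, per_emotion))
    return train, test


# Usage with a synthetic corpus: 10 speakers x 7 emotions x 7 utterances each.
corpus = [Utterance(f"spk{s:02d}", e, f"spk{s:02d}_{e}_{i}.wav")
          for s in range(10) for e in EMOTIONS for i in range(7)]
train, test = split_corpus(corpus, train_speakers={f"spk{s:02d}" for s in range(5)})
print(len(train), len(test))  # 245 210
```

With 30 test sentences per emotion and 7 emotions, the test set always has 210 sentences, matching the count stated in [0087].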

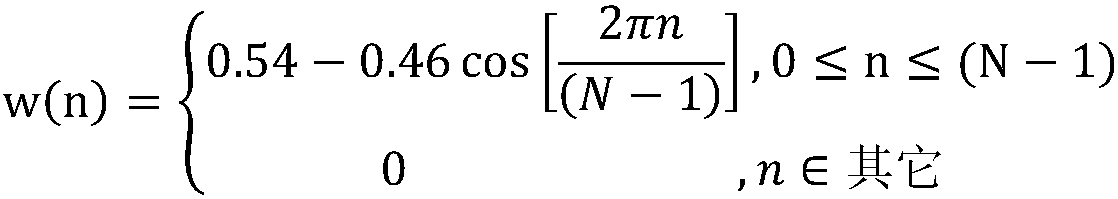

[0088] Apply windowing to the speech signal s(n). The window function adopted in the present invention is the Hamming window w(n):

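[0089]

$$w(n)=\begin{cases}0.54-0.46\cos\left(\dfrac{2\pi n}{N-1}\right), & 0\le n\le N-1\\ 0, & \text{otherwise}\end{cases}$$

where N is the frame length.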
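A minimal sketch of the Hamming window, assuming the standard definition w(n) = 0.54 − 0.46 cos(2πn/(N−1)) for 0 ≤ n ≤ N−1. The helper name `hamming_window` and the frame length N = 256 are illustrative assumptions; the patent text available here does not state a frame length. The result is checked against NumPy's `np.hamming`, which uses the same formula.

```python
import numpy as np


def hamming_window(N: int) -> np.ndarray:
    """Hamming window w(n) = 0.54 - 0.46*cos(2*pi*n/(N-1)) for n = 0..N-1."""
    if N < 1:
        raise ValueError("window length must be positive")
    if N == 1:
        return np.ones(1)  # degenerate case, same convention as np.hamming
    n = np.arange(N)
    return 0.54 - 0.46 * np.cos(2 * np.pi * n / (N - 1))


w = hamming_window(256)              # N = 256 is an assumed frame length
print(np.allclose(w, np.hamming(256)))  # True
```

The window tapers to 0.08 at both ends and peaks near 1 in the middle, which reduces spectral leakage when each frame is later transformed to the frequency domain.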

[0090] Multiply the speech signal s(n) by the window function w(n) to form a windowed speech signal x(n):

[0091]

$$x(n) = s(n)\cdot w(n)$$
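In practice the product x(n) = s(n)·w(n) is an element-wise multiplication applied frame by frame (it is not a convolution). The sketch below splits a signal into overlapping frames and multiplies each frame by a Hamming window. The helper `frame_and_window`, the frame length of 256 samples, and the hop of 128 samples are hypothetical choices for illustration; the patent's own framing parameters are not given in the text shown here, and the random signal merely stands in for speech.

```python
import numpy as np


def frame_and_window(s: np.ndarray, frame_len: int = 256, hop: int = 128) -> np.ndarray:
    """Split s(n) into overlapping frames and multiply each by a Hamming window.

    Returns an array of shape (num_frames, frame_len) whose row k is
    x_k(n) = s(k*hop + n) * w(n) for n = 0..frame_len-1.
    """
    if len(s) < frame_len:
        raise ValueError("signal shorter than one frame")
    w = np.hamming(frame_len)
    num_frames = 1 + (len(s) - frame_len) // hop  # trailing partial frame is dropped
    # Index matrix: row k holds the sample indices of frame k.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(num_frames)[:, None]
    return s[idx] * w  # element-wise product, broadcast across all frames


rng = np.random.default_rng(0)
s = rng.standard_normal(16000)  # placeholder signal, not real speech
x = frame_and_window(s)
print(x.shape)  # (124, 256)
```

A 50% overlap between frames is a common choice because a Hamming-windowed frame attenuates its edges; overlapping keeps every sample well represented in at least one frame.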

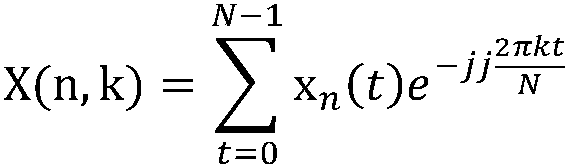

[0092] The win...
