Positioning device and method based on depth vision and robot

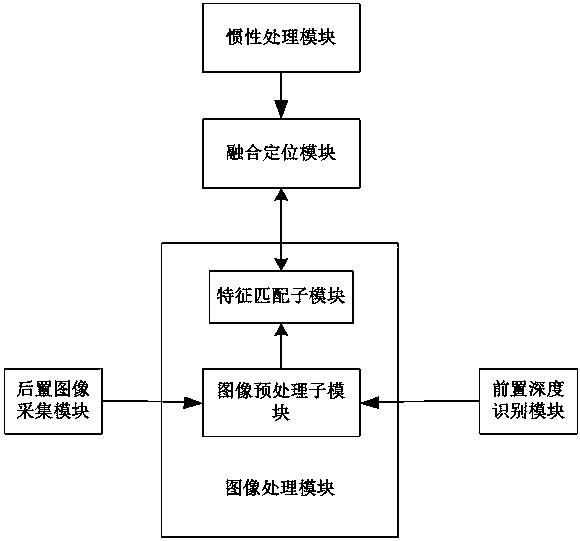

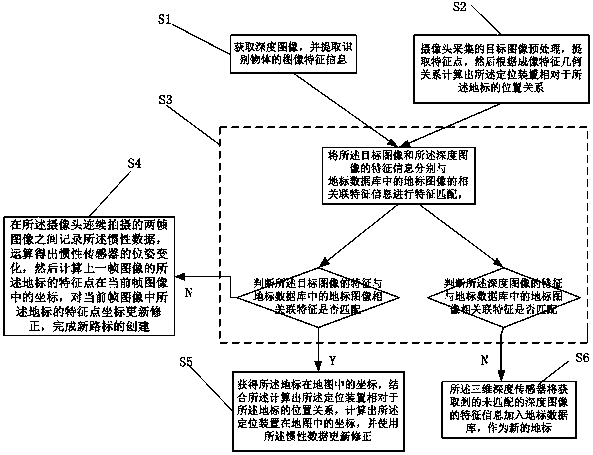

A positioning device based on depth sensor technology, applied in two-dimensional position/course control, instruments, manipulators, and similar fields. It addresses two problems of visual simultaneous localization and mapping (vSLAM): the complexity of the algorithm and its heavy consumption of processor computing resources. The effect is improved navigation efficiency and reduced use of computing resources.


Embodiment Construction

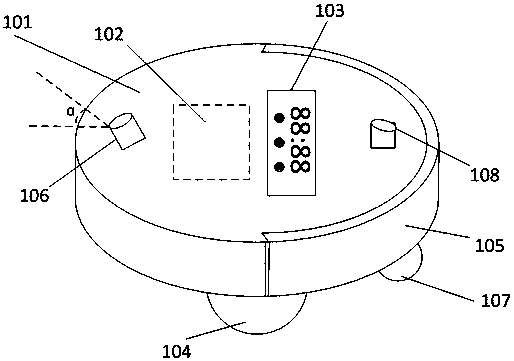

[0032] Specific embodiments of the present invention are further described below with reference to the accompanying drawings:

[0033] A positioning device based on depth vision in an embodiment of the present invention is implemented in the form of a robot, including mobile robots such as sweeping robots and AGVs. In the following, it is assumed that the positioning device is installed on a sweeping robot. However, those skilled in the art will appreciate that the configurations according to the embodiments of the present invention are not limited to mobile robots and can also be applied to mobile terminals.

[0034] In implementing the present invention, those skilled in the art will readily understand that, when executing vSLAM, a small map is buffered from the feature points of the input images, and the positional relationship between the current frame and that map is then calculated. The map here is only a temporar...
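To illustrate the step of calculating the positional relationship between the current frame and a buffered map, the following is a minimal sketch and not the method claimed in the patent. It assumes a planar robot, feature points already matched by a hypothetical feature ID, and 2D point positions recovered from depth data. The function name `estimate_pose` and the dictionary layout are assumptions made for illustration. The sketch uses a standard closed-form least-squares rigid alignment.

```python
import math


def estimate_pose(local_map, frame_obs):
    """Estimate (theta, tx, ty) that maps current-frame points into map coordinates.

    local_map: {feature_id: (x, y)} in map coordinates (hypothetical layout)
    frame_obs: {feature_id: (x, y)} in the robot's current-frame coordinates
    """
    # Use only features seen in both the buffered map and the current frame.
    ids = sorted(set(local_map) & set(frame_obs))
    if len(ids) < 2:
        raise ValueError("need at least two shared feature points")
    n = len(ids)

    # Centroids of the matched points in each coordinate system.
    cfx = sum(frame_obs[i][0] for i in ids) / n
    cfy = sum(frame_obs[i][1] for i in ids) / n
    cmx = sum(local_map[i][0] for i in ids) / n
    cmy = sum(local_map[i][1] for i in ids) / n

    # Accumulate dot and cross terms of the centered point pairs.
    s_dot = 0.0
    s_cross = 0.0
    for i in ids:
        ax, ay = frame_obs[i][0] - cfx, frame_obs[i][1] - cfy
        bx, by = local_map[i][0] - cmx, local_map[i][1] - cmy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    # Least-squares rotation angle, then the translation that aligns centroids.
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = cmx - (c * cfx - s * cfy)
    ty = cmy - (s * cfx + c * cfy)
    return theta, tx, ty
```

Under these assumptions, the returned `(theta, tx, ty)` can be read as the robot's heading and position in the buffered map's coordinate system. A practical system would also need feature extraction, outlier rejection, and map maintenance, none of which are shown here.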
