Multivariate feature fusion Chinese text classification method based on attention neural network

A technology combining feature fusion and neural networks, applied to neural learning methods, biological neural network models, and text database clustering/classification. It addresses the problem that existing approaches do not fully combine the advantages of the three algorithms, and improves both classification accuracy and recognition ability.

Embodiment Construction

[0046] Exemplary embodiments of the present invention are described below with reference to the accompanying drawings. It should be understood that the specific embodiments described here serve only to explain the embodiments of the present invention and do not limit them. In addition, for convenience of description, the drawings show only some, not all, of the structures related to the embodiments of the present invention; some parts are omitted, enlarged, or reduced, and the drawings do not represent the dimensions of the actual product.

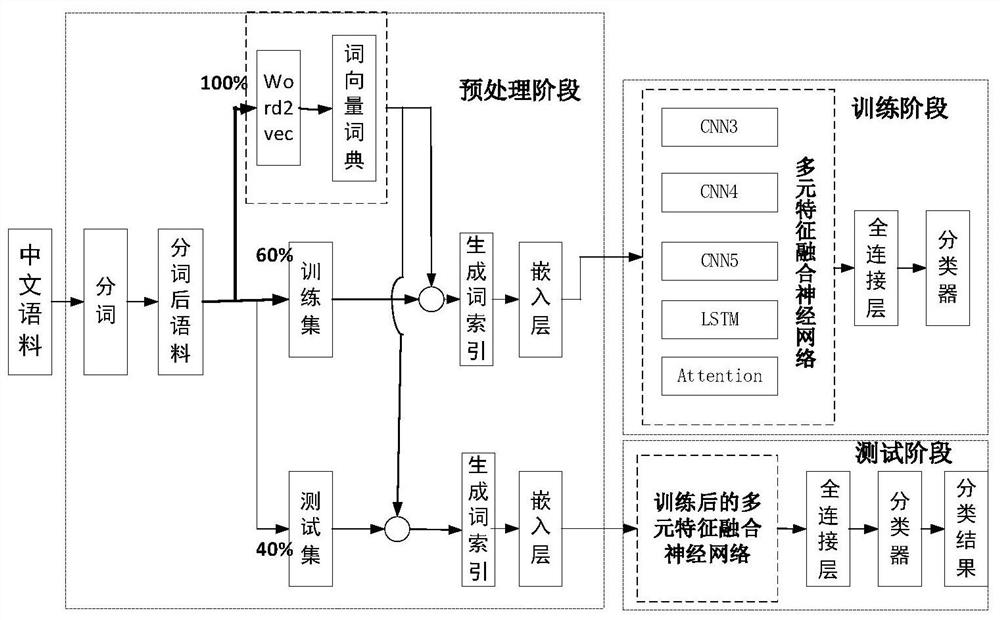

[0047] The corpus used in this example was compiled by the Natural Language Processing Group of the International Database Center, Department of Computer and Technology, Fudan University. The main preprocessing flow is shown in Figure 1. The corpus contains 9833 Chinese documents divided into 20 categories. Use 60% of the corp...
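The sentence describing how the corpus is divided is truncated in the source, so only the 60% figure is known. The following is a minimal Python sketch under stated assumptions: it draws 60% of the documents separately from each of the 20 categories (a stratified split) and returns the remaining documents as a second group. Whether the source splits per category, and what the 60% portion and the remainder are used for, are not stated here. The function name `stratified_split`, the fixed `seed`, and the per-category rounding are hypothetical choices made for illustration.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[str], fraction: float = 0.6, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: split document indices so that `fraction` of
    each category lands in the first group and the rest in the second.

    Stratifying by category is an assumption; the source only states "60%".
    """
    if not 0.0 < fraction < 1.0:
        raise ValueError("fraction must lie strictly between 0 and 1")

    # Group document indices by their category label.
    by_label: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    selected: List[int] = []
    rest: List[int] = []
    for label in sorted(by_label):  # sorted for deterministic iteration
        idxs = list(by_label[label])
        rng.shuffle(idxs)
        k = round(len(idxs) * fraction)  # per-category share, rounded
        selected.extend(idxs[:k])
        rest.extend(idxs[k:])
    return sorted(selected), sorted(rest)


if __name__ == "__main__":
    # Toy labels standing in for the corpus categories.
    toy_labels = ["economy"] * 10 + ["sports"] * 5
    first, second = stratified_split(toy_labels, fraction=0.6)
    print(len(first), len(second))
```

Splitting within each category keeps the class proportions of the 60% portion close to those of the full corpus, which matters when the 20 categories are unevenly sized; a plain random split over all 9833 documents would not guarantee this.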
