Human behavior recognition method based on spatio-temporal skeletal features and a deep belief network

A recognition method built on a deep belief network, applied in the field of human behavior recognition. It addresses three problems of existing human behavior recognition: complex feature extraction, sensitivity to changes in lighting conditions and to complex backgrounds, and high computational cost.

Embodiment 1

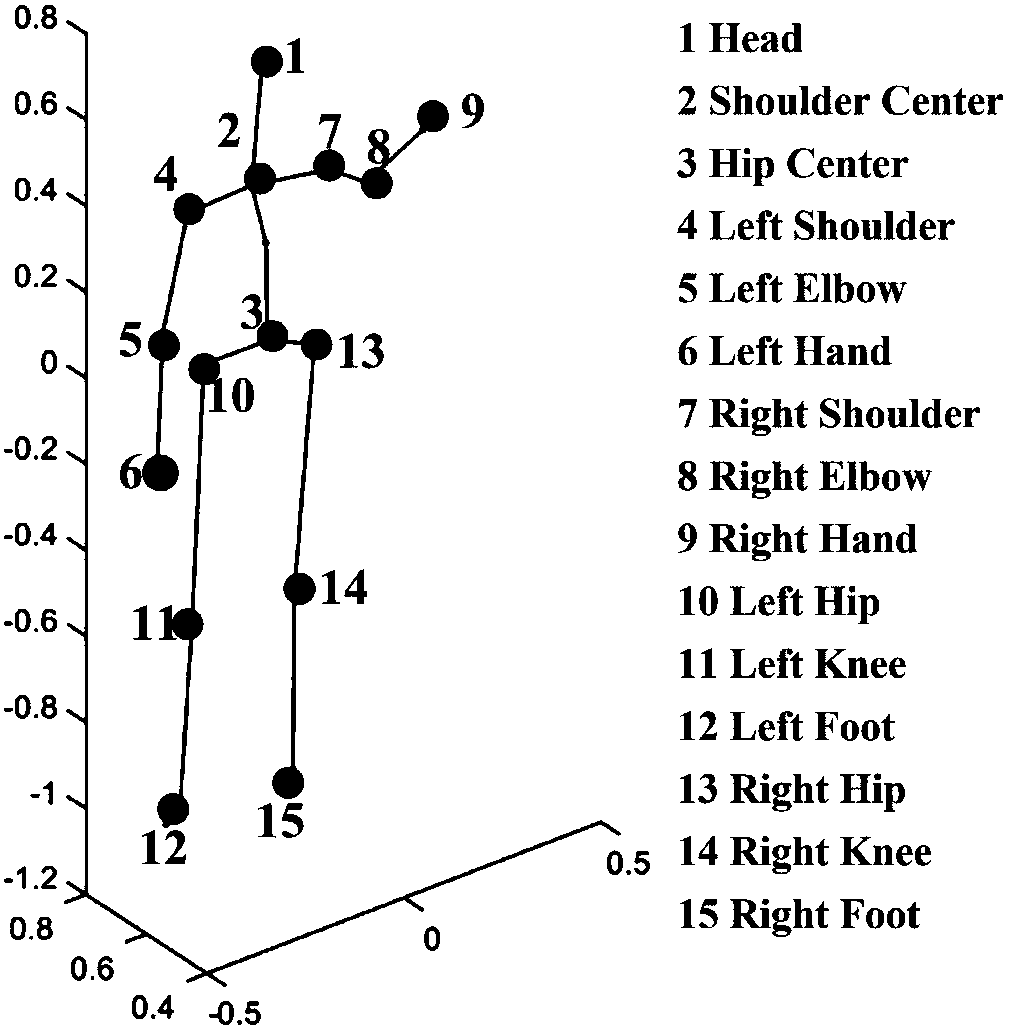

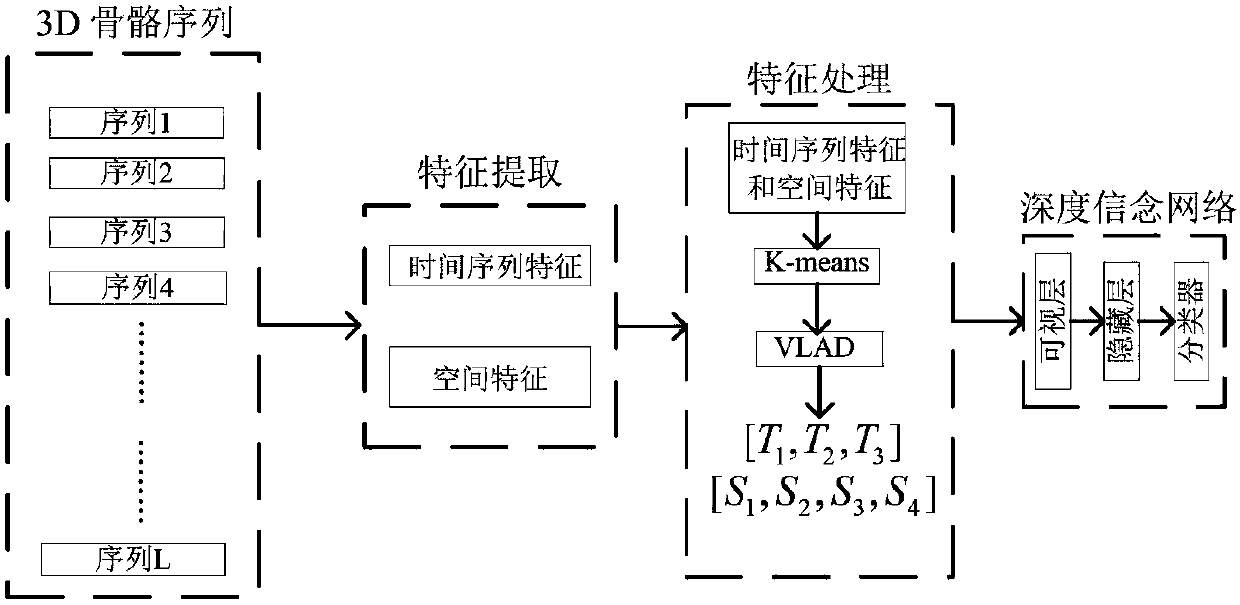

[0062] This embodiment provides a human behavior recognition method based on spatio-temporal skeletal features and a deep belief network. The method uses 15 skeletal points: the head (Head), the shoulder center (Shoulder Center), the hip center (Hip Center), the left shoulder (Left Shoulder), the left elbow (Left Elbow), the left hand (Left Hand), the right shoulder (Right Shoulder), the right elbow (Right Elbow), the right hand (Right Hand), the left hip (Left Hip), the left knee (Left Knee), the left foot (Left Foot), the right hip (Right Hip), the right knee (Right Knee), and the right foot (Right Foot).
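As an illustration only, the 15 skeletal points listed above could be held in a fixed order so that every frame yields a vector of the same layout. The sketch below is not taken from the patent: the index order (simply the order in which the points are listed), the `order_frame` helper, and the use of 3D `(x, y, z)` tuples per point are all assumptions made for the example.

```python
from typing import Dict, List, Tuple

# The 15 skeletal point labels, in the order they are listed in the text.
# Using this order as an index is an assumption, not part of the source.
JOINT_NAMES: Tuple[str, ...] = (
    "Head", "Shoulder Center", "Hip Center",
    "Left Shoulder", "Left Elbow", "Left Hand",
    "Right Shoulder", "Right Elbow", "Right Hand",
    "Left Hip", "Left Knee", "Left Foot",
    "Right Hip", "Right Knee", "Right Foot",
)

# Assumed representation: one (x, y, z) tuple per skeletal point.
Point3D = Tuple[float, float, float]


def order_frame(raw_frame: Dict[str, Point3D]) -> List[Point3D]:
    """Pick the 15 skeletal points out of one frame, in JOINT_NAMES order.

    Extra points reported by the sensor are ignored; a missing point
    raises KeyError so incomplete frames are not silently accepted.
    """
    return [raw_frame[name] for name in JOINT_NAMES]


if __name__ == "__main__":
    # Hypothetical frame: dummy coordinates, plus one point the method does not use.
    frame = {name: (float(i), 0.0, 0.0) for i, name in enumerate(JOINT_NAMES)}
    frame["Neck"] = (99.0, 99.0, 99.0)
    ordered = order_frame(frame)
    print(len(ordered), ordered[JOINT_NAMES.index("Right Foot")])
```

Keeping the order in one tuple means any later per-frame feature computation can address points by index without repeating the label strings.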

[0063] The specific steps of the method are as follows:

[0064] Step 1: Acquire the skeleton sequence;

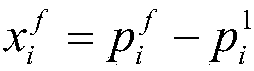

[0065] For each action, a depth camera is used (the Kinect v2 SDK can be used); with the camera coordinates taken as the origin, obtain the name and ...
