Chinese text classification method based on attention mechanism and feature enhancement fusion

A text classification technique using an attention mechanism, applicable to computer components, character and pattern recognition, special-purpose data processing, and related fields. It addresses the difficulty of assigning appropriate weights, single processing granularity, and uneven positional distribution, with the effect of improving recognition ability and effectiveness.

Examples

Specific Embodiment 1

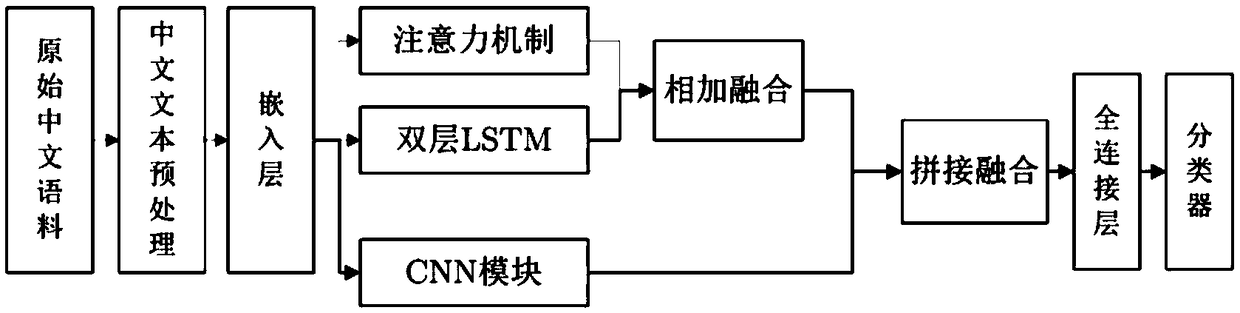

[0029] This embodiment is a specific embodiment of the Chinese text classification method based on attention mechanism and feature enhancement fusion.

[0030] The Chinese text classification method based on attention mechanism and feature enhancement fusion comprises the following steps:

[0031] Step a: collate the original Chinese text corpus, perform word segmentation on it, pre-train the word vector dictionary, and perform text preprocessing;

[0032] Step b: preprocess the Chinese text corpus into term-based N-dimensional vectors, then perform feature selection on the preprocessed text to form the feature space of the text data set;

[0033] Step c: after preprocessing, and before entering the neural network module for training and testing, the original Chinese text corpus is stored in the embedding matrix of the embedding layer, where each row is the vector representation of one text document;

[0034...
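Steps b and c can be illustrated with a minimal sketch. It assumes the corpus has already been segmented into terms (step a), uses a document-frequency threshold as the feature-selection rule (the source does not name one), and substitutes random vectors for the pre-trained word vector dictionary. The names `build_vocab`, `encode`, `init_embeddings`, `PAD`, `UNK`, and `min_df` are hypothetical and do not come from the patent text.

```python
from collections import Counter
import random

PAD, UNK = "<pad>", "<unk>"  # hypothetical reserved tokens

def build_vocab(docs, min_df=2):
    """Keep terms that occur in at least min_df documents (assumed selection rule)."""
    df = Counter(term for doc in docs for term in set(doc))
    kept = sorted(t for t, c in df.items() if c >= min_df)
    return {tok: i for i, tok in enumerate([PAD, UNK] + kept)}

def encode(doc, vocab, n):
    """Map a segmented document to exactly n term indices (truncate or pad)."""
    ids = [vocab.get(t, vocab[UNK]) for t in doc[:n]]
    return ids + [vocab[PAD]] * (n - len(ids))

def init_embeddings(vocab, dim, seed=0):
    """Random stand-in for a pre-trained word vector dictionary; PAD row is zero."""
    rng = random.Random(seed)
    table = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in vocab]
    table[vocab[PAD]] = [0.0] * dim
    return table

# Toy corpus, already word-segmented (step a is assumed done elsewhere).
docs = [
    ["股市", "今天", "上涨"],
    ["股市", "今天", "下跌", "明显"],
    ["球队", "今天", "获胜"],
]
vocab = build_vocab(docs, min_df=2)
ids = encode(docs[1], vocab, n=5)      # N-dimensional index vector
table = init_embeddings(vocab, dim=4)  # embedding matrix
embedded = [table[i] for i in ids]     # n x dim input for the network
```

Here each document becomes a fixed-length index vector, and the embedding lookup turns it into an n × dim matrix. This is one plausible reading of how the embedding layer is used, not a confirmed detail of the patented method.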

Specific Embodiment 2

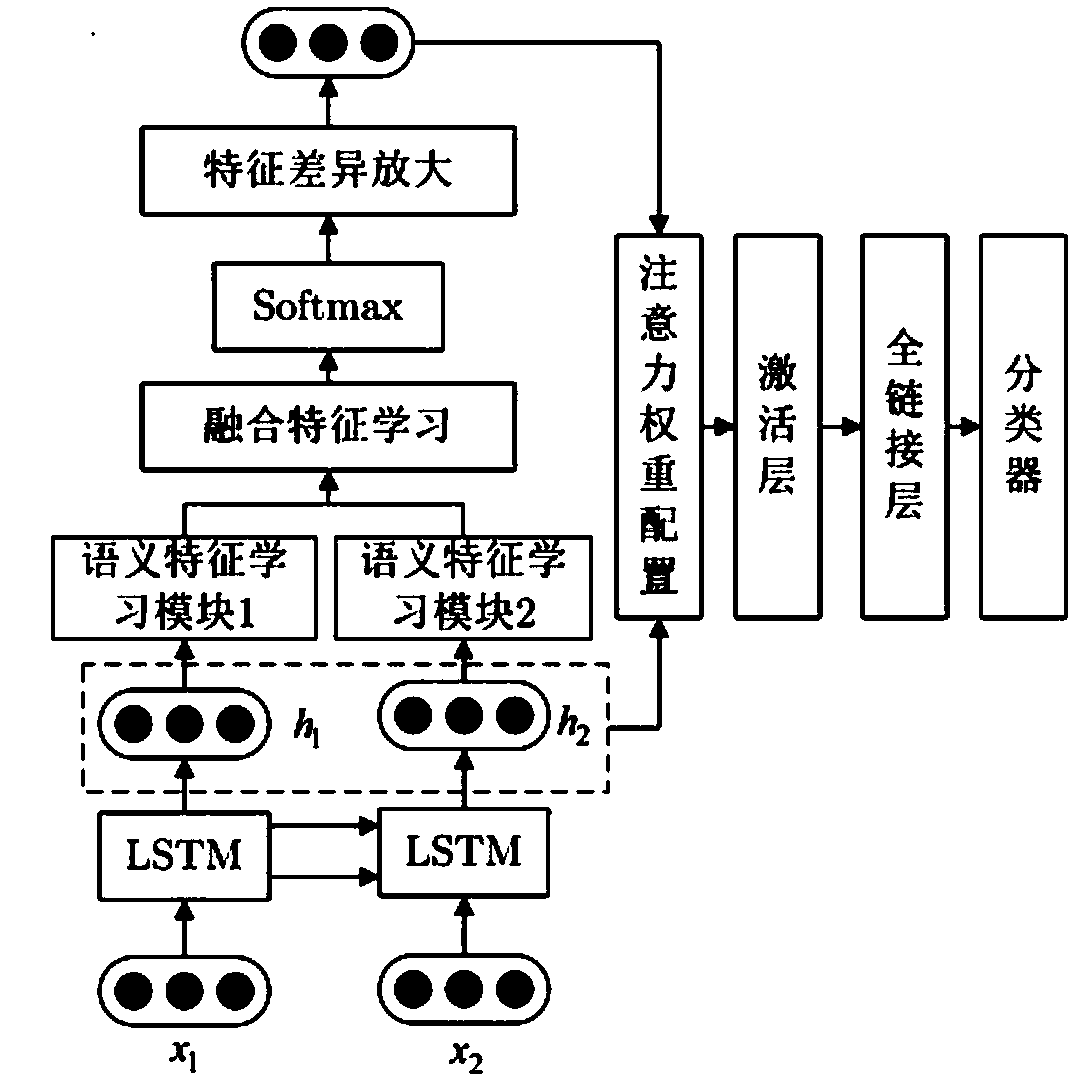

[0046] This embodiment is a specific embodiment of the Chinese text classification method based on attention mechanism and feature enhancement fusion in which the attention mechanism model is the semantic feature difference attention algorithm model.

[0047] In the Chinese text classification method based on attention mechanism and feature enhancement fusion, the attention mechanism model consists of the semantic feature difference attention algorithm model, which comprises the following steps:

[0048] Step a1: take the input text of the semantic feature difference attention algorithm model as TEXT, and determine the word vectors x1 and x2 in the text;

[0049] Step b1: import the word vectors x1 and x2 into the encoder LSTM and encode them; the word vector x1 is encoded as semantic code h1, the w...
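The source is truncated before it explains how the semantic codes are weighted, so the following is only a generic dot-product attention sketch, not the patent's semantic feature difference attention algorithm. The semantic codes `h1` and `h2` are hand-picked stand-ins for encoder LSTM outputs, and the `query` vector and the function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_pool(states, query):
    """Weight each semantic code by its dot-product similarity to the query."""
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in states]
    weights = softmax(scores)
    dim = len(states[0])
    # Context vector: weighted sum of the semantic codes.
    context = [sum(w * h[d] for w, h in zip(weights, states)) for d in range(dim)]
    return weights, context

h1 = [1.0, 0.0]     # stand-in for the semantic code of x1
h2 = [0.0, 1.0]     # stand-in for the semantic code of x2
query = [2.0, 0.0]  # hypothetical query vector
weights, context = attention_pool([h1, h2], query)
```

Because the query aligns with `h1`, that code receives the larger weight. The weights sum to 1 and the context vector is their weighted combination.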

Specific Embodiment 3

[0085] This embodiment is a specific embodiment of the CNN module in the Chinese text classification method based on attention mechanism and feature enhancement fusion.

[0086] In the Chinese text classification method based on attention mechanism and feature enhancement fusion, the CNN module comprises one-dimensional convolutional neural networks with the CNN3 and CNN4 convolution kernel sizes: the CNN3 kernel size is 3 × the word vector dimension, and the CNN4 kernel size is 4 × the word vector dimension.

[0087] The structure of a CNN makes it well suited to extracting local feature information from the numeric vector representation of a Chinese text corpus under different convolution kernel sizes.

[0088] The CNN module contains two convolutional neural network channels, CNN3 and CNN4; each has three one-dimensional convolutional layers connected by a max-pooling layer in between; the output wi...
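The effect of the two kernel sizes can be sketched as follows. This is a simplified illustration under stated assumptions: each channel is reduced to a single convolutional layer followed by global max pooling (the source describes three layers per channel), a ReLU activation is assumed, and the kernel weights are fixed to 1.0 rather than learned. The names `conv1d` and `global_max_pool` are hypothetical.

```python
def conv1d(seq, kernel, bias=0.0):
    """Slide a (k x dim) kernel over a (length x dim) sequence of word vectors."""
    k = len(kernel)
    out = []
    for start in range(len(seq) - k + 1):
        total = bias
        for row, krow in zip(seq[start:start + k], kernel):
            total += sum(x * w for x, w in zip(row, krow))
        out.append(max(0.0, total))  # assumed ReLU activation
    return out

def global_max_pool(feature_map):
    """Keep the strongest response along the word axis."""
    return max(feature_map)

dim = 2  # toy word vector dimension
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 0.0]]  # 5 words

kernel3 = [[1.0] * dim for _ in range(3)]  # CNN3: 3 x word vector dimension
kernel4 = [[1.0] * dim for _ in range(4)]  # CNN4: 4 x word vector dimension

map3 = conv1d(seq, kernel3)  # 5 - 3 + 1 = 3 window positions
map4 = conv1d(seq, kernel4)  # 5 - 4 + 1 = 2 window positions
features = [global_max_pool(map3), global_max_pool(map4)]
```

A kernel of size 3 × dim covers three consecutive words at a time, and one of size 4 × dim covers four, so the two channels capture local patterns at different granularities before their pooled outputs are combined.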
