Radar and multi-network fusion based pedestrian pose recognition method and system

A multi-network fusion recognition technology, applied in the field of pedestrian posture recognition methods and systems. It addresses the problems of low recognition accuracy, degraded recognition performance, and failure to recognize, and achieves reduced signal sampling rate requirements, easy implementation, and high recognition accuracy.


Embodiment Construction

[0074] The principles and features of the present invention are described below with reference to the accompanying drawings. The examples given serve only to explain the present invention and are not intended to limit its scope.

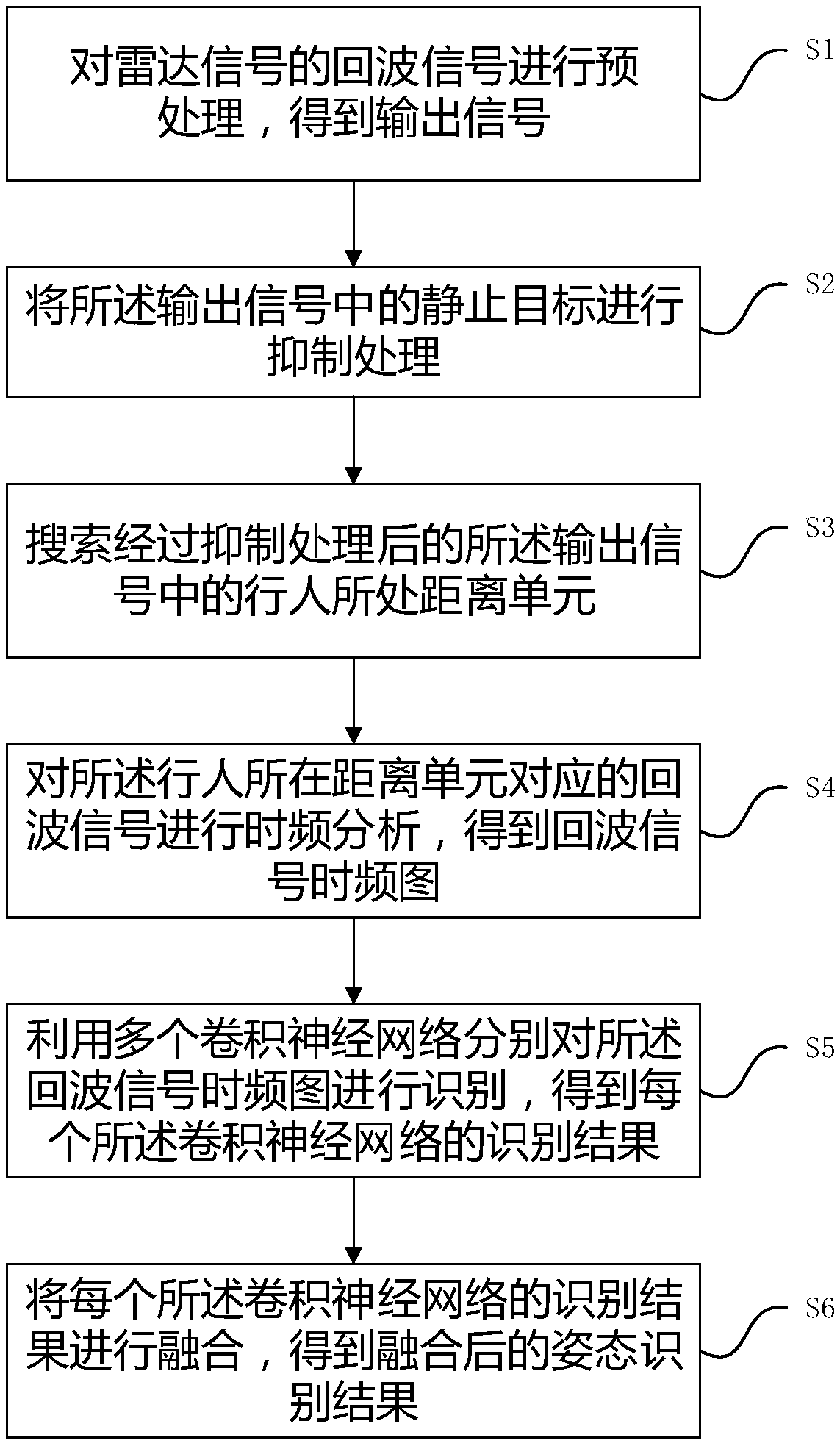

[0075] As shown in Figure 1, a pedestrian posture recognition method based on radar and multi-network fusion includes the following steps:

[0076] Step 1: Preprocessing the echo signal of the radar signal to obtain the output signal;

[0077] Step 2: suppressing stationary targets in the output signal;

[0078] Step 3: Searching for the distance unit of the pedestrian in the output signal after suppression processing;

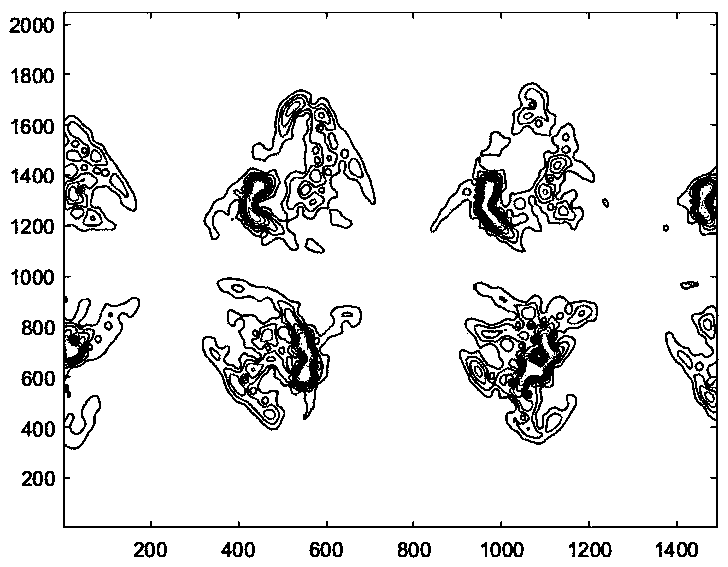

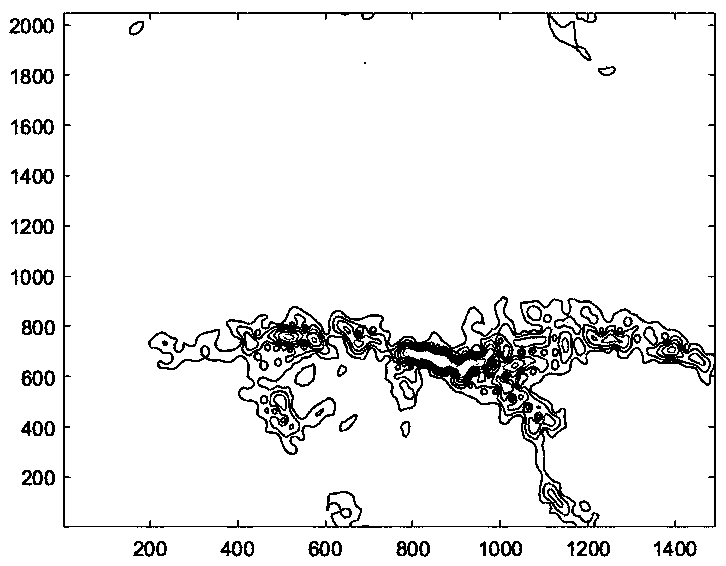

[0079] Step 4: Perform time-frequency analysis on the echo signal corresponding to the distance unit where the pedestrian is located to obtain a time-frequency diagram of the echo signal;

[0080] Step 5: Use a plurality of convolutional neural networks to identify the time-frequency diagr...
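Steps 2 to 4 can be illustrated with a minimal NumPy sketch on synthetic data. The excerpt does not say how stationary targets are suppressed, how the pedestrian's distance unit (range cell) is searched, or which time-frequency transform is used, so the choices below are assumptions: mean subtraction along slow time, a maximum-energy search, and a Hann-windowed short-time Fourier transform. All function names, the pulse repetition frequency, and the simulated target are hypothetical. Step 5 is not sketched because the text describing it is truncated.

```python
import numpy as np


def suppress_stationary(range_profiles):
    """Step 2 (assumed method): subtract each range cell's slow-time mean,
    which removes returns that do not change from pulse to pulse."""
    return range_profiles - range_profiles.mean(axis=0, keepdims=True)


def find_pedestrian_cell(suppressed):
    """Step 3 (assumed criterion): pick the range cell with the most
    energy left after stationary-target suppression."""
    energy = np.sum(np.abs(suppressed) ** 2, axis=0)
    return int(np.argmax(energy))


def spectrogram(x, win_len=64, hop=16):
    """Step 4 (assumed transform): STFT magnitude with a Hann window.
    Returns an array of shape (Doppler bins, time frames) with zero
    Doppler shifted to the centre row."""
    window = np.hanning(win_len)
    starts = range(0, len(x) - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    return np.abs(spec).T


# Synthetic range profiles: rows are pulses (slow time), columns are range cells.
# Every number below is illustrative, not taken from the patent.
prf = 1000.0                      # hypothetical pulse repetition frequency, Hz
n_pulses, n_cells = 512, 32
t = np.arange(n_pulses) / prf
rng = np.random.default_rng(0)

data = 0.01 * (rng.standard_normal((n_pulses, n_cells))
               + 1j * rng.standard_normal((n_pulses, n_cells)))
data[:, 5] += 10.0                # strong stationary reflector in cell 5
# Moving target in cell 12: about 100 Hz Doppler with a small periodic wobble.
data[:, 12] += np.exp(1j * (2 * np.pi * 100 * t + 2 * np.sin(2 * np.pi * 2 * t)))

suppressed = suppress_stationary(data)
cell = find_pedestrian_cell(suppressed)
tf = spectrogram(suppressed[:, cell])

# Doppler axis matching the rows of tf, and the strongest average Doppler bin.
doppler_axis = np.fft.fftshift(np.fft.fftfreq(64, d=1 / prf))
peak_freq = float(doppler_axis[np.argmax(tf.mean(axis=1))])

print("selected range cell:", cell)
print("time-frequency diagram shape:", tf.shape)
print("dominant Doppler (Hz):", peak_freq)
```

In this sketch the stationary reflector is much stronger than the moving target, yet it drops out after mean subtraction because its return is constant across pulses, so the energy search lands on the moving target's cell. The resulting `tf` array is the kind of time-frequency diagram that would then be passed to the recognition networks in Step 5.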
