A video data synthesis method and a device

A video data synthesis technology, applied in the field of video processing, that addresses the problem of environmental sound interfering with the sound of the target object and improves the quality of the synthesized video.


Examples

Embodiment 1

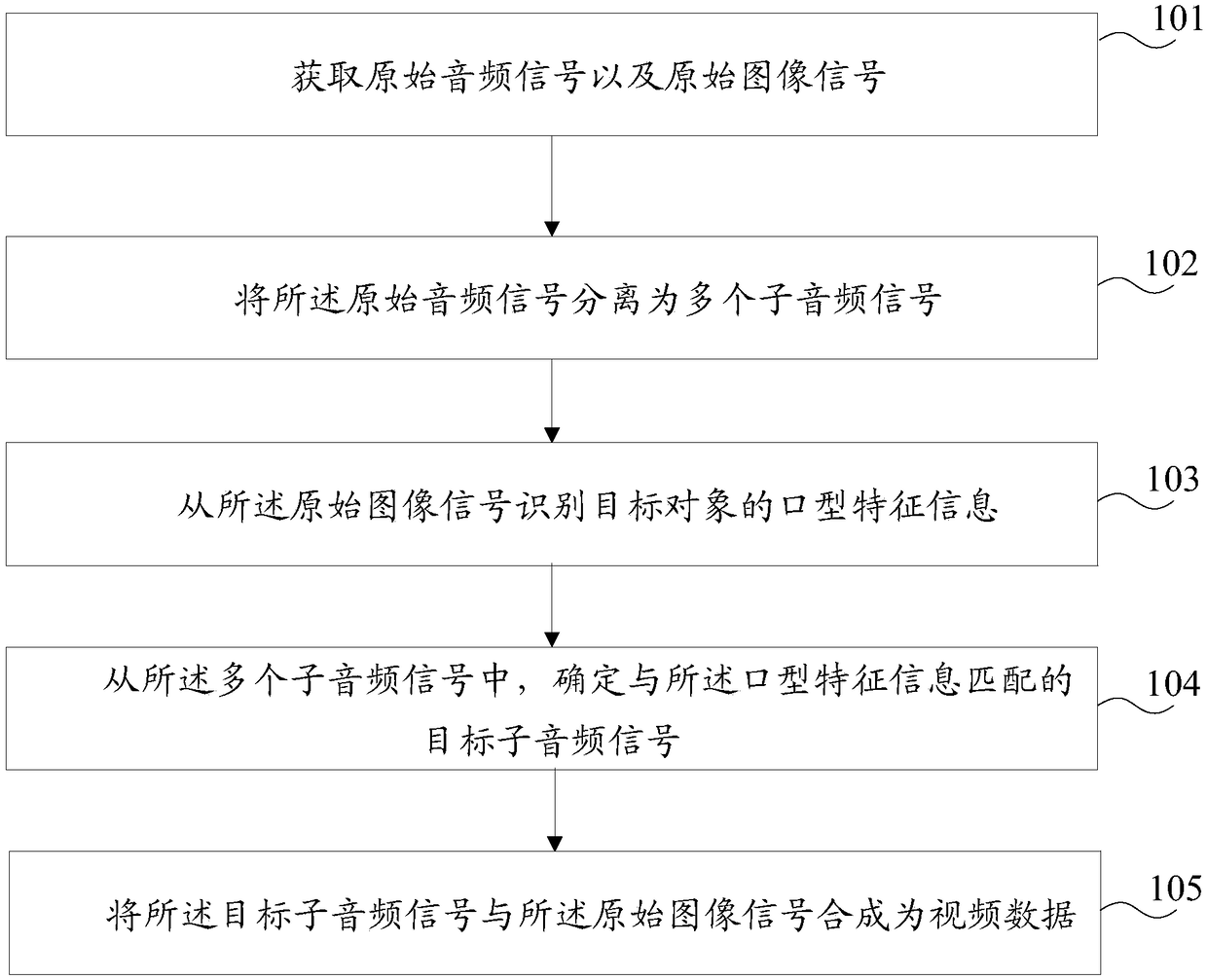

[0028] Referring to Figure 1, which shows a flowchart of the video data synthesis method provided in Embodiment 1 of the present invention, the method may specifically include the following steps:

[0029] Step 101: Acquire an original audio signal and an original image signal.

[0030] In the embodiment of the present invention, the original audio signal and the original image signal are acquired. Specifically, the original audio signal may be acquired through a single microphone or through multiple microphones; the embodiment of the present invention does not specifically limit this.

[0031] In the embodiment of the present invention, the original image signal can be acquired through a camera. The original audio signal and the original image signal may or may not be acquired at the same time; for example, the original audio...
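Because the audio and image signals may be captured at different times, they need a common time base before they can be combined. The following is a minimal sketch, not the method of this patent, assuming each signal carries a capture start time in seconds plus a fixed sample rate or frame rate; the `AudioSignal`, `ImageSignal`, and `align` names are hypothetical. It simply crops both signals to the interval they share.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class AudioSignal:
    start: float          # hypothetical: capture start time in seconds
    sample_rate: int      # audio samples per second
    samples: List[float]


@dataclass
class ImageSignal:
    start: float          # hypothetical: capture start time in seconds
    fps: float            # video frames per second
    frames: List[object]  # frame payloads; their type is left open here


def align(audio: AudioSignal, video: ImageSignal) -> Tuple[AudioSignal, ImageSignal]:
    """Crop both signals to the time interval they have in common (illustrative only)."""
    audio_end = audio.start + len(audio.samples) / audio.sample_rate
    video_end = video.start + len(video.frames) / video.fps
    start, end = max(audio.start, video.start), min(audio_end, video_end)
    if end <= start:
        raise ValueError("audio and image signals do not overlap in time")
    # Convert the shared interval into sample and frame indices for each signal.
    a0 = round((start - audio.start) * audio.sample_rate)
    a1 = round((end - audio.start) * audio.sample_rate)
    v0 = round((start - video.start) * video.fps)
    v1 = round((end - video.start) * video.fps)
    return (AudioSignal(start, audio.sample_rate, audio.samples[a0:a1]),
            ImageSignal(start, video.fps, video.frames[v0:v1]))
```

For example, 3 s of audio starting at t = 0 and 3 s of video starting at t = 1 are both cropped to the 2 s window from t = 1 to t = 3.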

Embodiment 2

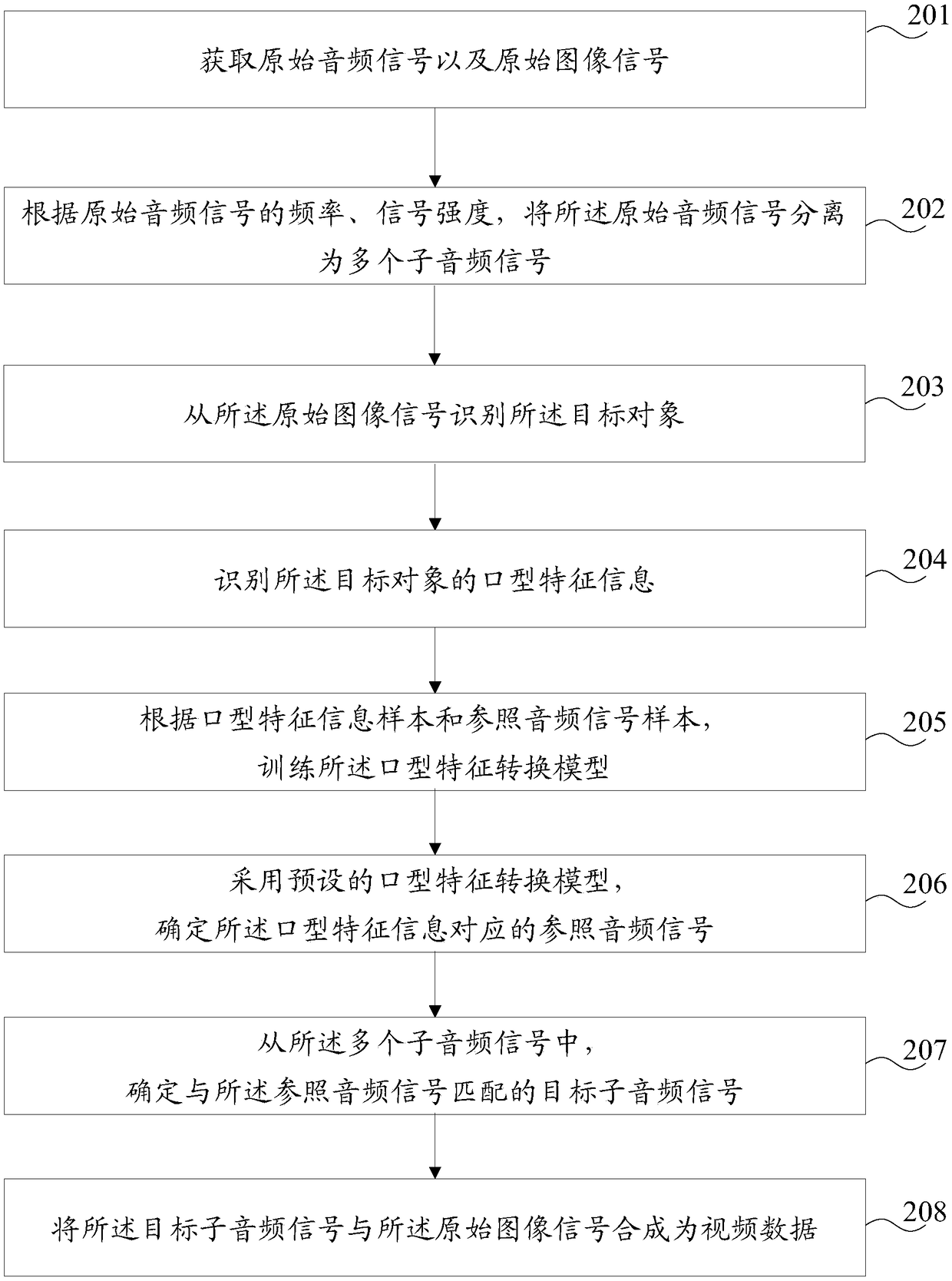

[0059] Referring to Figure 2, which shows a flowchart of the video data synthesis method provided in Embodiment 2 of the present invention, the method may specifically include the following steps:

[0060] Step 201: Acquire an original audio signal and an original image signal.

[0061] In the embodiment of the present invention, for step 201, reference may be made to the description of step 101 above; the embodiment of the present invention does not specifically limit this.

[0062] Step 202: Separate the original audio signal into multiple sub-audio signals according to the frequency and signal strength of the original audio signal.

[0063] In the embodiment of the present invention, the original audio signal is separated into multiple sub-audio signals according to the frequency and signal strength of the original audio signal. Specifically, the number of channels in the acquisition process of the original audio signal can be d...
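One plausible reading of Step 202 is a band split with an energy gate: divide the spectrum into frequency bands and keep a band as a sub-audio signal only if its energy is strong enough. The sketch below assumes exactly that and is not the patent's own procedure; the band edges, the `min_energy` threshold, and the function names are hypothetical, and a naive O(n^2) DFT stands in for a real FFT so the example runs with the Python standard library alone.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive discrete Fourier transform (O(n^2)); a stand-in for a real FFT."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum: Sequence[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def split_by_band(signal: Sequence[float], sample_rate: float,
                  band_edges: Sequence[float], min_energy: float
                  ) -> List[Tuple[Tuple[float, float], List[float]]]:
    """Split a real signal into one sub-signal per band [low, high) whose
    spectral energy reaches min_energy (hypothetical gating rule)."""
    n = len(signal)
    spectrum = dft([complex(s) for s in signal])
    subs = []
    for low, high in zip(band_edges[:-1], band_edges[1:]):
        masked = [0j] * n
        energy = 0.0
        for k in range(n):
            # Bins above n/2 are negative frequencies; fold them so each band keeps
            # both halves of the spectrum and the reconstructed sub-signal stays real.
            freq = min(k, n - k) * sample_rate / n
            if low <= freq < high:
                masked[k] = spectrum[k]
                energy += abs(spectrum[k]) ** 2
        if energy >= min_energy:  # "signal strength" gate: drop weak bands
            subs.append(((low, high), [v.real for v in idft(masked)]))
    return subs
```

With a 64-sample signal at 64 Hz containing a 4 Hz tone and a weaker 20 Hz tone, edges `[0, 10, 30, 33]` and a threshold of 1.0 yield two sub-signals, one per tone; the empty 30 to 33 Hz band falls below the threshold and is dropped.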

Embodiment 3

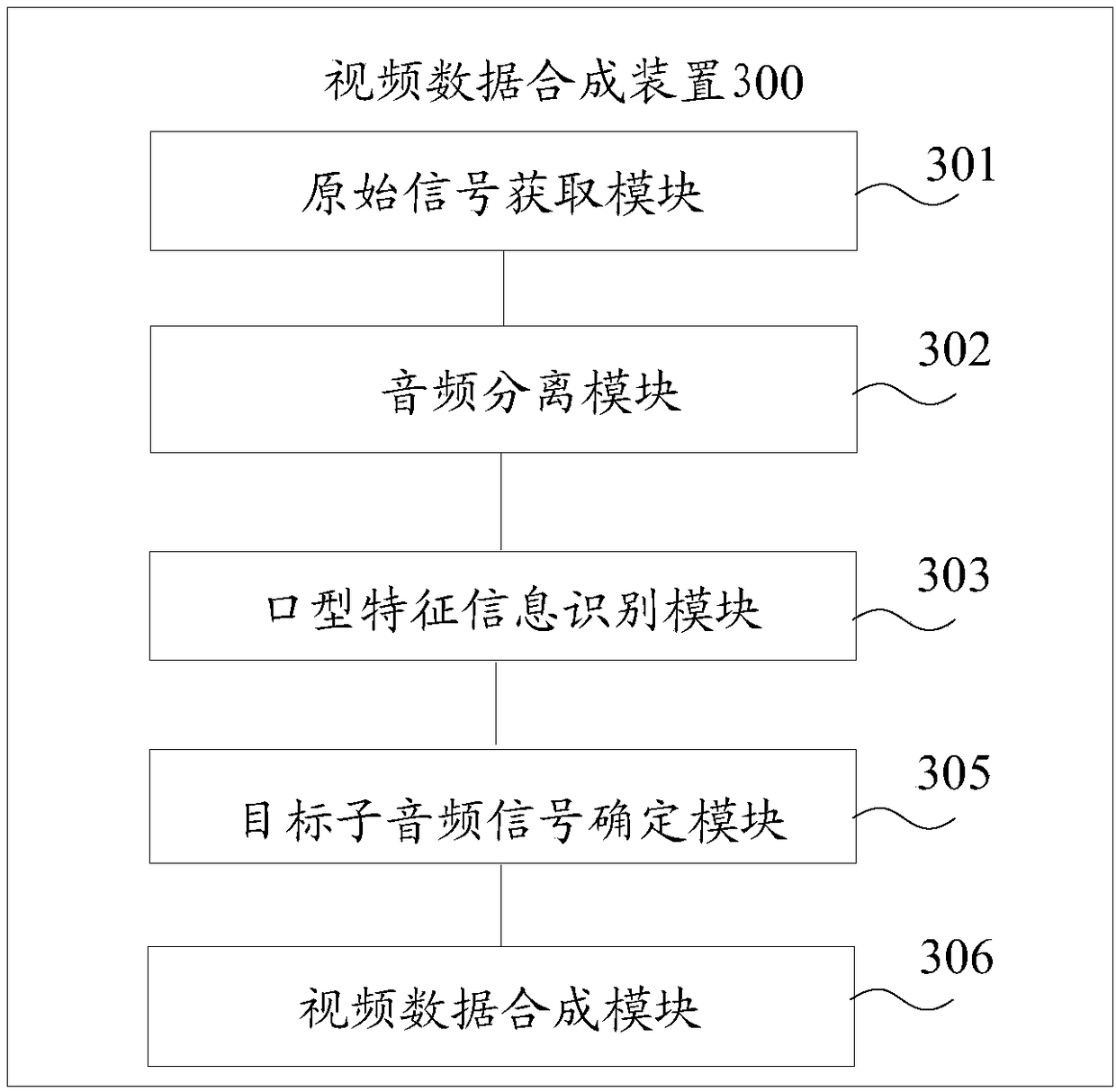

[0093] Referring to Figure 3, which is a structural block diagram of the video data synthesis device 300 provided in Embodiment 3 of the present invention, the video data synthesis device 300 may specifically include:

[0094] An original signal acquisition module 301, configured to acquire an original audio signal and an original image signal;

[0095] An audio separation module 302, configured to separate the original audio signal into multiple sub-audio signals;

[0096] A lip-shape feature information identification module 303, configured to identify lip-shape feature information of the target object from the original image signal;

[0097] A target sub-audio signal determining module 305, configured to determine, from the multiple sub-audio signals, a target sub-audio signal matching the lip-shape feature information;

[0098] A video data synthesis module 306, configured to synthesize the target sub-audio signal and the original image si...
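The module chain above can be pictured as a short pipeline. The sketch below assumes, hypothetically, that module 303 yields one mouth-opening value per video frame (for example from a face-landmark detector not shown here) and that module 305 picks the sub-audio signal whose per-frame RMS loudness correlates best with that mouth-opening sequence. The excerpt does not state the patent's matching criterion, so the Pearson-correlation rule and all function names are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence


def frame_envelope(samples: Sequence[float], n_frames: int) -> List[float]:
    """RMS loudness of the audio over each video-frame interval."""
    per_frame = len(samples) // n_frames
    if per_frame == 0:
        raise ValueError("need at least one audio sample per video frame")
    env = []
    for f in range(n_frames):
        chunk = samples[f * per_frame:(f + 1) * per_frame]
        env.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)))
    return env


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either sequence is constant."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else cov / (sa * sb)


def select_target(sub_audios: Sequence[Sequence[float]],
                  lip_openings: Sequence[float]) -> int:
    """Hypothetical stand-in for module 305: index of the sub-audio signal whose
    loudness envelope best tracks the per-frame mouth opening."""
    scores = [pearson(frame_envelope(s, len(lip_openings)), lip_openings)
              for s in sub_audios]
    return max(range(len(scores)), key=scores.__getitem__)


def synthesize(sub_audios: Sequence[Sequence[float]], lip_openings: Sequence[float],
               image_frames: Sequence[object]) -> Dict[str, list]:
    """Hypothetical stand-in for module 306: pair the chosen audio with the frames."""
    target = sub_audios[select_target(sub_audios, lip_openings)]
    return {"audio": list(target), "frames": list(image_frames)}
```

Correlation is used here only because a speaker's loudness tends to rise when the mouth opens; a constant background hum scores 0, so any sub-signal whose loudness rises and falls with the lips outranks it.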
