A visual-auditory evoked emotion recognition method and system based on an EEG signal

An EEG-based emotion recognition technology, applied in medical science, psychological devices, sensors and related fields. It addresses the low accuracy of emotion recognition. Its stated effects are higher classification accuracy and the elimination of redundant features.


Examples

Embodiment 1

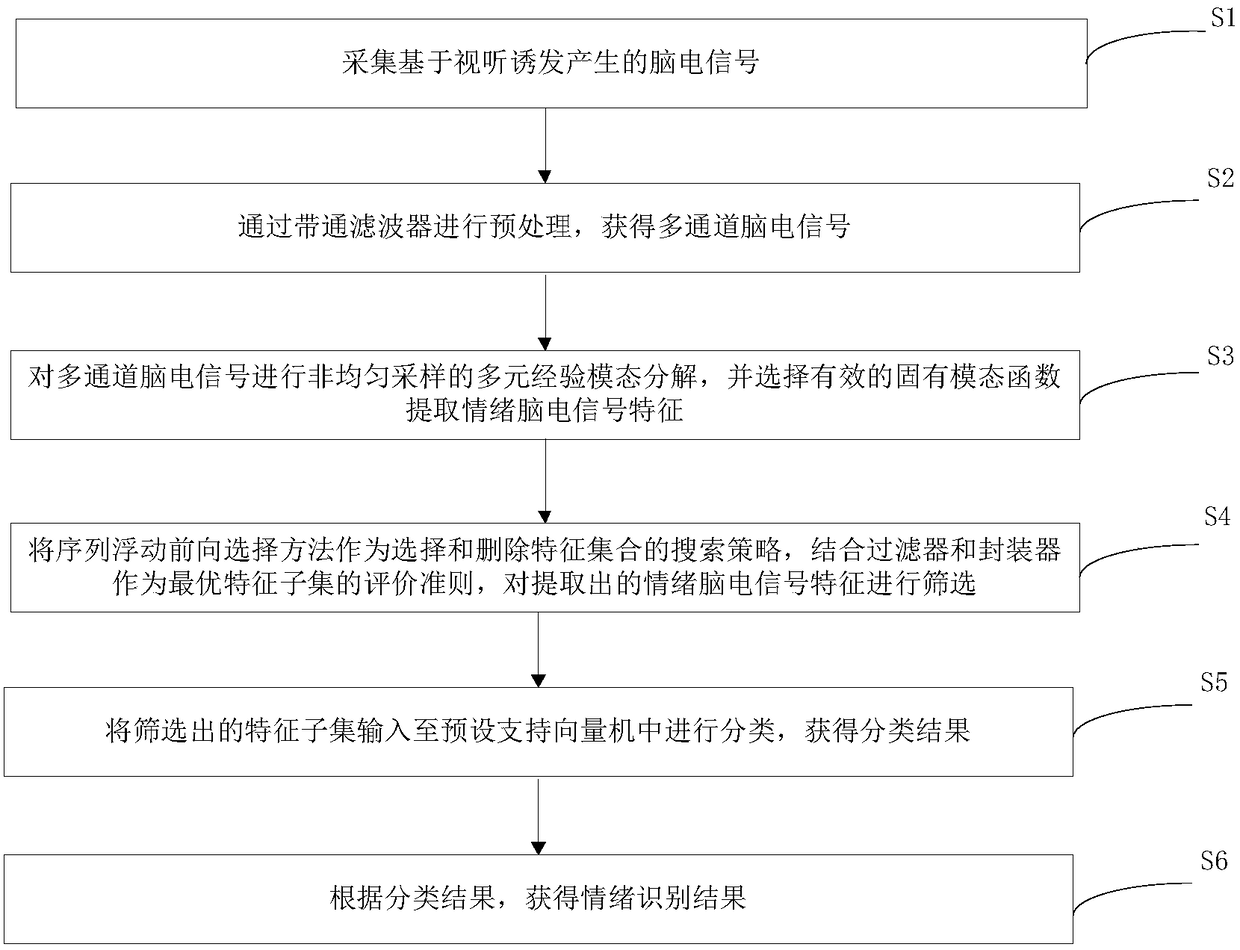

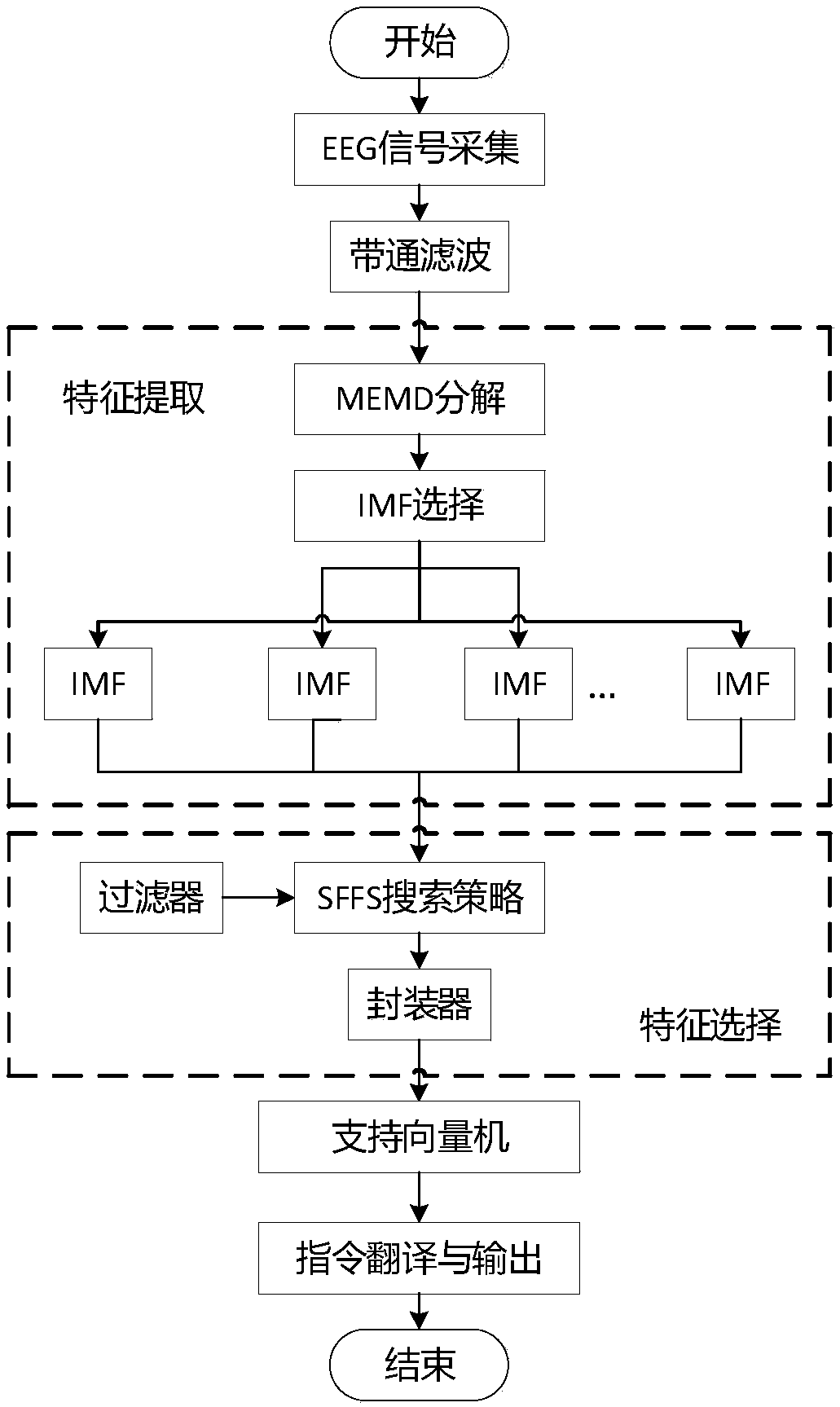

[0040] This embodiment provides an audio-visual-induced emotion recognition method based on EEG signals. Referring to Figure 1, the method includes the following steps:

[0041] First, step S1 is performed: collecting EEG signals evoked by audio-visual stimulation.

[0042] Specifically, the electroencephalogram (EEG) signal is the overall reflection of the electrophysiological activity of brain nerve cells on the surface of the cerebral cortex or scalp. In this embodiment, the EEG signals are induced by auditory and visual stimulation. Figure 3 is a schematic diagram of the signal acquisition instrument and the EEG cap used to collect the EEG signals.

[0043] Then step S2 is performed: preprocessing the collected signals with a band-pass filter to obtain multi-channel EEG signals.

[0044] Specifically, the EEG signal can be band-pass filtered to 4-45 Hz, which removes clutter and interference in other frequency bands and improves the signal-...
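The filtering in step S2 can be sketched in Python with SciPy as below. This is a minimal sketch under stated assumptions: the excerpt gives only the 4-45 Hz pass band, so the Butterworth design, the filter order of 4, the zero-phase (forward-backward) application, the 250 Hz sampling rate in the usage lines and the helper name `bandpass_eeg` are all illustrative choices, not details taken from the patent.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass_eeg(eeg, fs, low=4.0, high=45.0, order=4):
    """Zero-phase Butterworth band-pass filter along the last (time) axis.

    eeg: array of shape (n_channels, n_samples)
    fs:  sampling rate in Hz (an assumption; the source does not state it)
    """
    if not 0.0 < low < high < fs / 2.0:
        raise ValueError("band edges must satisfy 0 < low < high < fs/2")
    # Second-order sections are numerically safer than (b, a) coefficients.
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    # Forward-backward filtering cancels the phase delay of the IIR filter.
    return sosfiltfilt(sos, np.asarray(eeg, dtype=float), axis=-1)


# Usage: two channels, 4 s at an assumed 250 Hz, mixing 1, 10 and 80 Hz tones.
fs = 250.0
t = np.arange(0, 4.0, 1 / fs)
raw = np.vstack([
    np.sin(2 * np.pi * 1 * t) + np.sin(2 * np.pi * 10 * t),
    np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 80 * t),
])
filtered = bandpass_eeg(raw, fs)  # mainly the 10 Hz component remains
```

Zero-phase filtering is a common choice for offline EEG analysis because it does not shift waveform features in time; a causal filter would be needed for online use.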

Embodiment 2

[0122] This embodiment provides an audio-visual-induced emotion recognition system based on EEG signals. Referring to Figure 8, the system comprises:

[0123] A signal collection module 801, configured to collect electroencephalogram (EEG) signals evoked by audio-visual stimulation;

[0124] A preprocessing module 802, configured to preprocess the collected signals with a band-pass filter to obtain multi-channel EEG signals;

[0125] A feature extraction module 803, configured to apply multivariate empirical mode decomposition (MEMD) with non-uniform sampling to the multi-channel EEG signals, and to select the effective intrinsic mode functions (IMFs) from which the features of the emotional EEG signal are extracted;
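The decomposition and feature step of module 803 can be illustrated with the simplified sketch below. It assumes a basic MEMD: the multichannel signal is projected onto random unit direction vectors, cubic-spline envelopes are fitted through the samples at the maxima of each projection, and a fixed number of sifting iterations is run per IMF. It does not reproduce the patent's non-uniform sampling scheme, which the excerpt does not describe. The IMF selection rule (mean absolute correlation with the input at or above a threshold), the differential-entropy features and the names `memd` and `imf_features` are hypothetical stand-ins.

```python
import numpy as np
from scipy.interpolate import CubicSpline


def _maxima(p):
    """Indices of interior local maxima of a 1-D projected signal."""
    return np.flatnonzero((p[1:-1] > p[:-2]) & (p[1:-1] >= p[2:])) + 1


def _envelope(h, idx, t):
    """Multichannel cubic-spline envelope through the samples h[:, idx].

    The two end samples are added as knots, a crude boundary treatment.
    """
    knots = np.unique(np.concatenate(([0], idx, [len(t) - 1])))
    return CubicSpline(t[knots], h[:, knots], axis=1)(t)


def memd(x, n_imfs=4, n_dirs=64, n_sifts=10, seed=0):
    """Simplified MEMD of x with shape (n_channels, n_samples).

    Direction vectors are drawn uniformly at random on the unit sphere
    (a stand-in for the unspecified non-uniform sampling), and each IMF
    uses a fixed number of sifts instead of a stopping criterion.
    """
    x = np.asarray(x, dtype=float)
    n_ch, n = x.shape
    t = np.arange(n, dtype=float)
    rng = np.random.default_rng(seed)
    dirs = rng.standard_normal((n_dirs, n_ch))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)

    residue = x.copy()
    imfs = []
    for _ in range(n_imfs):
        h = residue.copy()
        for _ in range(n_sifts):
            # Mean of the envelopes obtained along every direction.
            mean_env = sum(_envelope(h, _maxima(d @ h), t) for d in dirs) / n_dirs
            h = h - mean_env
        imfs.append(h)
        residue = residue - h
    return np.stack(imfs), residue


def imf_features(x, imfs, corr_thresh=0.1):
    """Differential-entropy features from the 'effective' IMFs.

    Hypothetical selection rule: keep an IMF when its mean absolute
    correlation with the input across channels is at least corr_thresh.
    """
    feats = []
    for imf in imfs:
        r = np.mean([abs(np.corrcoef(imf[c], x[c])[0, 1]) for c in range(x.shape[0])])
        if r >= corr_thresh:
            var = imf.var(axis=1) + 1e-12
            # Differential entropy of a Gaussian with this variance.
            feats.append(0.5 * np.log(2 * np.pi * np.e * var))
    return np.concatenate(feats) if feats else np.empty(0)
```

By construction the IMFs plus the final residue add back to the input exactly, which is a useful sanity check when experimenting with the sifting parameters.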

[0126] A feature screening module 804, configured to use sequential floating forward selection (SFFS) as the search strategy for adding features to and deleting features from the feature set, to combine filter and wrapper methods as the evaluation criterion for the optimal feature subset, and to perform an evaluation of the extra...
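The search strategy of module 804 can be illustrated with the sketch below. The excerpt is truncated before the evaluation criterion is fully specified, so the hybrid criterion here is an assumption: a weighted sum of a filter term (the mean normalized Fisher score of the subset) and a wrapper term (5-fold cross-validated accuracy of a nearest-centroid classifier). The weight `alpha`, the classifier and all function names are hypothetical.

```python
import numpy as np


def fisher_score(X, y):
    """Per-feature Fisher score: between-class over within-class scatter."""
    mu = X.mean(axis=0)
    num = np.zeros(X.shape[1])
    den = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        num += len(Xc) * (Xc.mean(axis=0) - mu) ** 2
        den += len(Xc) * Xc.var(axis=0)
    return num / (den + 1e-12)


def cv_accuracy(X, y, n_folds=5, seed=0):
    """k-fold accuracy of a nearest-centroid classifier (the wrapper part)."""
    idx = np.random.default_rng(seed).permutation(len(y))
    accs = []
    for test in np.array_split(idx, n_folds):
        train = np.setdiff1d(idx, test)
        classes = np.unique(y[train])
        cents = np.stack([X[train][y[train] == c].mean(axis=0) for c in classes])
        dist = ((X[test][:, None, :] - cents[None, :, :]) ** 2).sum(axis=2)
        accs.append(np.mean(classes[dist.argmin(axis=1)] == y[test]))
    return float(np.mean(accs))


def make_criterion(X, y, alpha=0.3):
    """Hybrid J(S) = alpha * mean normalized Fisher score + (1 - alpha) * CV accuracy."""
    fs = fisher_score(X, y)
    fs = fs / (fs.max() + 1e-12)
    cache = {}

    def J(subset):
        key = tuple(sorted(subset))
        if key not in cache:
            cols = list(key)
            cache[key] = alpha * fs[cols].mean() + (1 - alpha) * cv_accuracy(X[:, cols], y)
        return cache[key]

    return J


def sffs(n_features, J, k_max):
    """Sequential floating forward selection; returns (best subset, its score)."""
    k_max = min(k_max, n_features)
    selected, best = [], {}  # best[k] = (score, subset) for subsets of size k
    while len(selected) < k_max:
        # Inclusion: add the feature that maximizes J.
        rest = [f for f in range(n_features) if f not in selected]
        selected = selected + [max(rest, key=lambda f: J(selected + [f]))]
        s = J(selected)
        if len(selected) not in best or s > best[len(selected)][0]:
            best[len(selected)] = (s, list(selected))
        # Conditional exclusion: drop features while that beats the best smaller subset.
        while len(selected) > 2:
            reduced = max(([g for g in selected if g != f] for f in selected), key=J)
            s = J(reduced)
            if s > best[len(reduced)][0]:
                selected = reduced
                best[len(reduced)] = (s, list(reduced))
            else:
                break
    k = max(best, key=lambda size: best[size][0])
    return best[k][1], best[k][0]
```

Because the filter term averages over the subset, adding a weak feature lowers it, so the wrapper accuracy must rise enough to compensate; this is one simple way to penalize redundant features. The exclusion step only accepts a removal that strictly improves the best recorded score for the smaller size, which guarantees the search terminates.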

Embodiment 3

[0136] Based on the same inventive concept, the present application also provides a computer-readable storage medium 900. Referring to Figure 9, a computer program 911 is stored on the medium, and the method of Embodiment 1 is implemented when the program is executed.
