Convolutional neural network training method, gesture recognition method, device and apparatus

A convolutional neural network training method, together with a gesture recognition method and device, applied to convolutional neural network training and gesture recognition. It addresses the problems of low processing efficiency and high complexity, and achieves reduced complexity, a smaller amount of data, and improved processing efficiency.

Examples

Embodiment 1

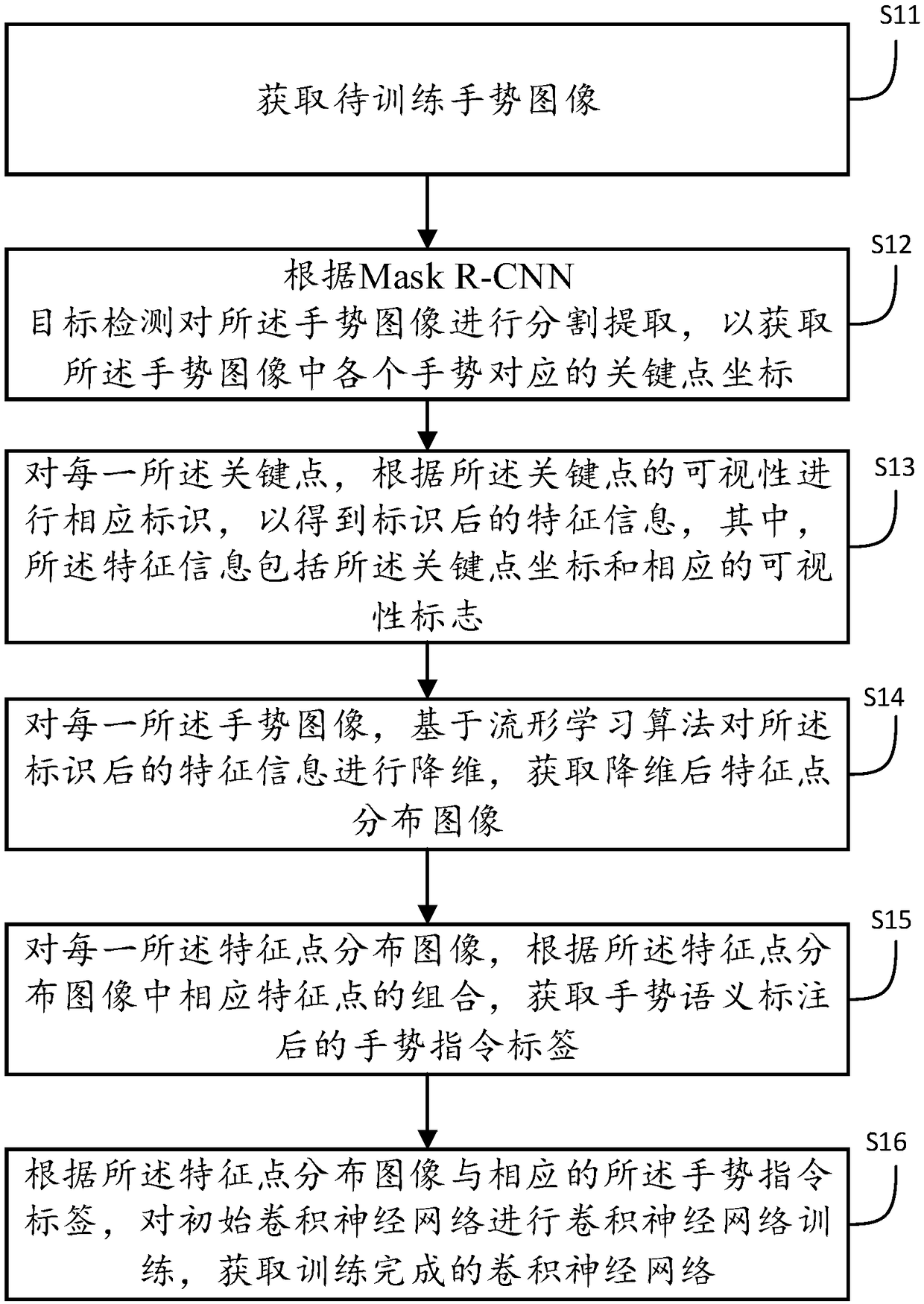

[0053] See Figure 1, a schematic flowchart of a convolutional neural network training method provided in Embodiment 1 of the present invention.

[0054] A training method for a convolutional neural network, comprising:

[0055] S11. Obtain the gesture image to be trained;

[0056] S12. Segment the gesture image and extract features using Mask R-CNN object detection, so as to obtain the key point coordinates corresponding to each gesture in the gesture image;

[0057] S13. Mark each key point according to its visibility to obtain marked feature information, wherein the feature information includes the key point coordinates and the corresponding visibility flag;

[0058] S14. For each gesture image, perform dimensionality reduction on the marked feature information based on a manifold learning algorithm, and obtain a feature point distribution image after dimensionality reduction;

[0059] S15. For each feature point distribution image, ...
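
Steps S13 and S14 can be illustrated with a minimal sketch. The excerpt names only "a manifold learning algorithm", so the choice of Isomap here is an assumption, as are the function names (`mark_keypoints`, `isomap`), the 0/1 encoding of the visibility flag, and the target dimension of 2. How the reduced coordinates are turned into a feature point distribution image is not stated in this excerpt and is not shown.

```python
import math

def mark_keypoints(coords, visible):
    """S13 sketch: append a visibility flag (1.0 visible, 0.0 occluded; assumed encoding)."""
    return [(float(x), float(y), 1.0 if v else 0.0) for (x, y), v in zip(coords, visible)]

def geodesic_distances(points, k):
    """Shortest-path distances over a k-nearest-neighbour graph (Floyd-Warshall)."""
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    inf = float("inf")
    geo = [[0.0 if i == j else inf for j in range(n)] for i in range(n)]
    for i in range(n):
        # Skip the first entry, which is normally the point itself.
        neighbours = sorted(range(n), key=lambda j: dist[i][j])[1:k + 1]
        for j in neighbours:
            geo[i][j] = geo[j][i] = dist[i][j]
    for m in range(n):
        for i in range(n):
            for j in range(n):
                if geo[i][m] + geo[m][j] < geo[i][j]:
                    geo[i][j] = geo[i][m] + geo[m][j]
    if any(inf in row for row in geo):
        raise ValueError("neighbourhood graph is disconnected; increase k")
    return geo

def classical_mds(d, dims=2, iters=200):
    """Embed a distance matrix via double centering and power iteration."""
    n = len(d)
    sq = [[d[i][j] ** 2 for j in range(n)] for i in range(n)]
    row = [sum(r) / n for r in sq]
    total = sum(row) / n
    # Double-centred Gram matrix: B = -1/2 * J * D^2 * J
    b = [[-0.5 * (sq[i][j] - row[i] - row[j] + total) for j in range(n)] for i in range(n)]
    coords = [[0.0] * dims for _ in range(n)]
    for c in range(dims):
        v = [1.0 + 0.1 * i for i in range(n)]  # deterministic start vector
        for _ in range(iters):
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(b[i][j] * v[j] for j in range(n)) for i in range(n))
        scale = math.sqrt(max(lam, 0.0))
        for i in range(n):
            coords[i][c] = v[i] * scale
        # Deflate so the next pass finds the next eigenvector.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return coords

def isomap(points, k=2, dims=2):
    """S14 sketch: Isomap as one possible manifold learning algorithm (assumption)."""
    return classical_mds(geodesic_distances(points, k), dims)

# Hypothetical key points lying on a line, all visible.
marked = mark_keypoints([(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)], [True] * 5)
embedding = isomap(marked, k=2, dims=2)
```

Each marked key point is a 3-vector `(x, y, flag)`, and `embedding` holds one 2-D coordinate pair per key point. A production system would more likely rely on an established library implementation than on this hand-rolled version.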

Embodiment 2

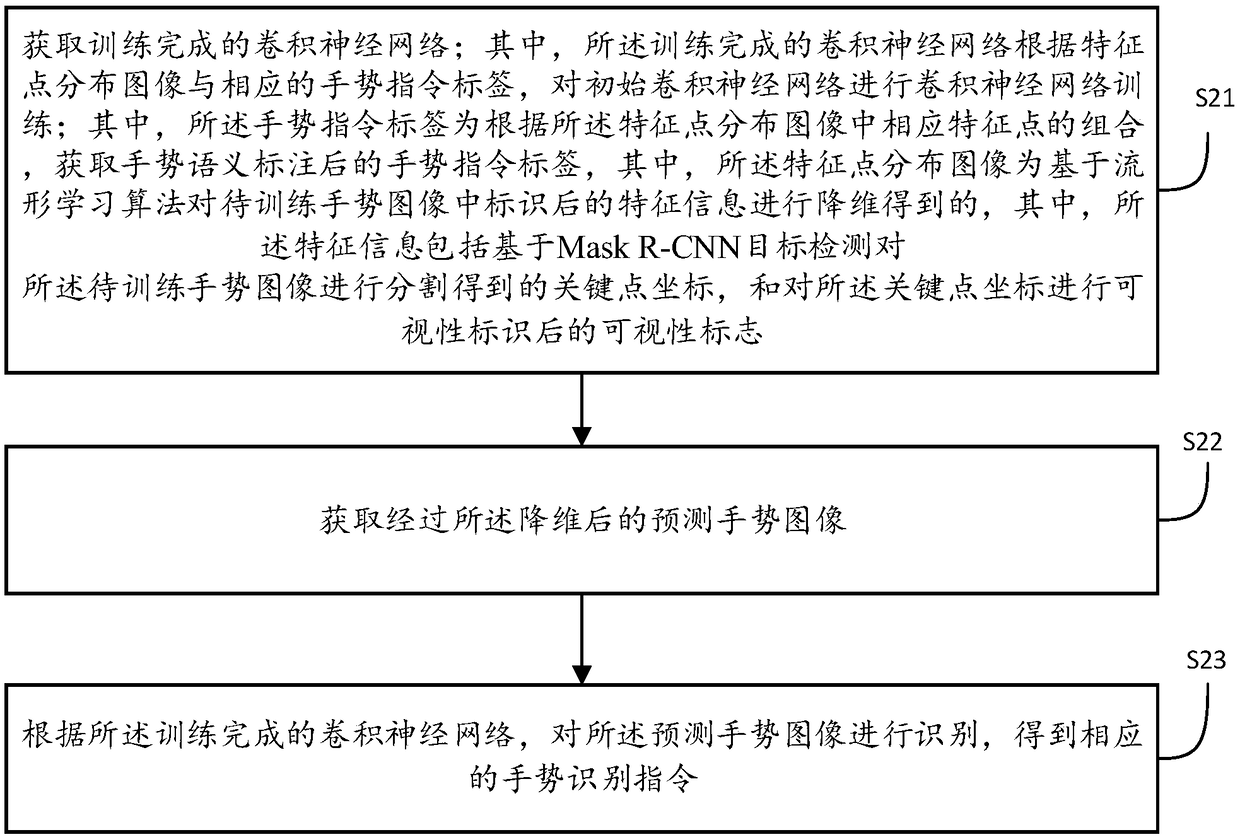

[0089] See Figure 2, a schematic flowchart of a convolutional neural network-based gesture recognition method provided by Embodiment 2 of the present invention, including:

[0090] S21. Obtain the trained convolutional neural network, wherein the trained convolutional neural network is obtained by training an initial convolutional neural network on the feature point distribution images and the corresponding gesture instruction labels; wherein each gesture instruction label is obtained by performing gesture semantic annotation based on the combination of corresponding feature points in the feature point distribution image; wherein the feature point distribution image is obtained by performing dimensionality reduction, based on the manifold learning algorithm, on the marked feature information in the gesture image to be trained; wherein the feature information includes key point coordinates obtained by segmenting the gestur...
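
The pairing of a feature point distribution image with its gesture instruction label, as described in S21, might be represented as follows. This is only a sketch: the binary-grid encoding, the `rasterize` helper, the `TrainingSample` type, and the label string `"swipe_left"` are all hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

@dataclass
class TrainingSample:
    image: List[List[int]]  # feature point distribution image as a binary grid (assumed encoding)
    label: str              # gesture instruction label (hypothetical string form)

def rasterize(points: Sequence[Tuple[float, float]], size: int = 8) -> List[List[int]]:
    """Scale 2-D feature points into a size x size grid and set the cells they hit."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    span_x = (max(xs) - min_x) or 1.0  # avoid division by zero for degenerate input
    span_y = (max(ys) - min_y) or 1.0
    grid = [[0] * size for _ in range(size)]
    for x, y in points:
        col = min(size - 1, int((x - min_x) / span_x * size))
        row = min(size - 1, int((y - min_y) / span_y * size))
        grid[row][col] = 1
    return grid

# Hypothetical reduced feature points and label for one gesture image.
sample = TrainingSample(
    image=rasterize([(0.0, 0.0), (1.0, 1.0), (0.5, 0.0)], size=4),
    label="swipe_left",
)
```

A list of such samples would then be fed to whatever training loop is used for the initial convolutional neural network.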

Embodiment 3

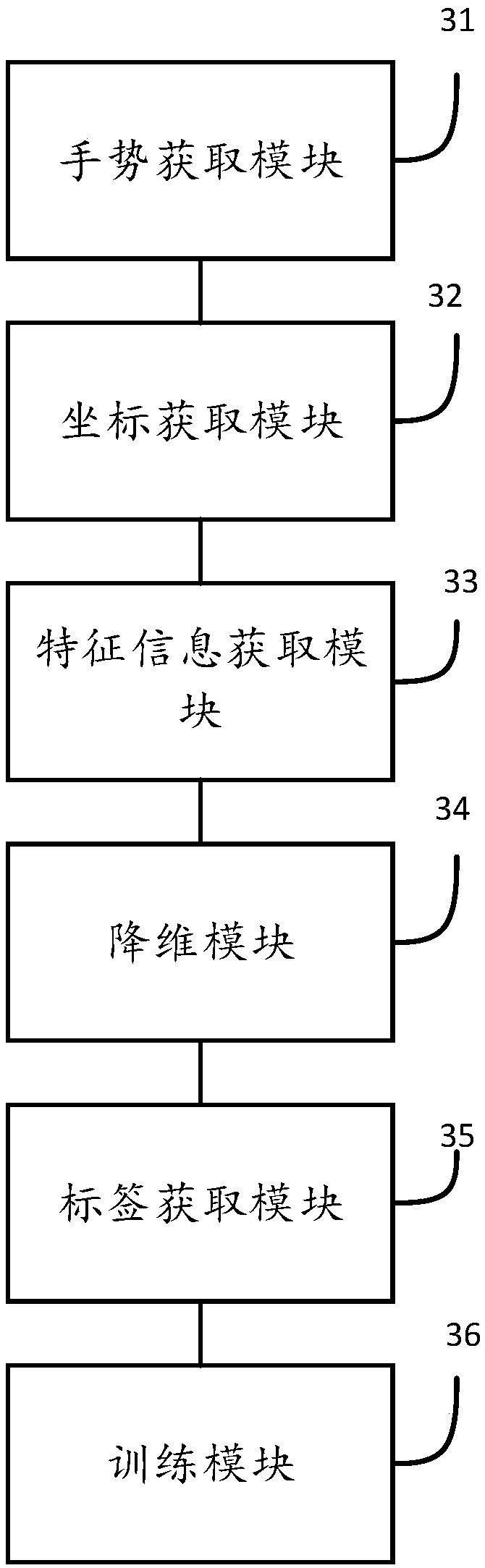

[0097] See Figure 3, a schematic structural diagram of a convolutional neural network training device provided in Embodiment 3 of the present invention.

[0098] A training device for a convolutional neural network, comprising:

[0099] A gesture acquisition module 31, configured to acquire the gesture images to be trained;

[0100] A coordinate acquisition module 32, configured to segment the gesture image and extract features using Mask R-CNN object detection, so as to obtain the key point coordinates corresponding to each gesture in the gesture image;

[0101] A feature information acquisition module 33, configured to mark each key point according to its visibility to obtain marked feature information, wherein the feature information includes the key point coordinates and the corresponding visibility flags;

[0102] A dimensionality reduction module 34, configured to perform dimensionality reduction on the marked feature information based on a manif...
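
The way modules 31 to 34 hand data to one another can be sketched as a small pipeline. The `TrainingDevice` class, its field names, and the stub callables are hypothetical; in particular, the stubs stand in for Mask R-CNN detection and for manifold learning and do not implement either. Modules beyond 34 are not covered because the excerpt is truncated.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Keypoint = Tuple[float, float]
MarkedKeypoint = Tuple[float, float, int]  # (x, y, visibility flag)

@dataclass
class TrainingDevice:
    acquire: Callable[[], List[object]]                                         # module 31
    extract_keypoints: Callable[[object], List[Keypoint]]                       # module 32
    mark_visibility: Callable[[object, List[Keypoint]], List[MarkedKeypoint]]   # module 33
    reduce_dims: Callable[[List[MarkedKeypoint]], List[Tuple[float, float]]]    # module 34

    def run(self) -> List[List[Tuple[float, float]]]:
        """Pass each gesture image through modules 32 to 34 in order."""
        outputs = []
        for image in self.acquire():
            keypoints = self.extract_keypoints(image)
            marked = self.mark_visibility(image, keypoints)
            outputs.append(self.reduce_dims(marked))
        return outputs

# Placeholder stubs only: they return fixed values so the wiring can be exercised.
device = TrainingDevice(
    acquire=lambda: ["img_a", "img_b"],
    extract_keypoints=lambda image: [(0.0, 0.0), (1.0, 2.0)],
    mark_visibility=lambda image, kps: [(x, y, 1) for x, y in kps],
    reduce_dims=lambda marked: [(x, y) for x, y, _ in marked],  # drops the flag; not manifold learning
)
results = device.run()
```

Injecting each module as a callable keeps the wiring independent of any particular detector or dimensionality reduction library, so real implementations can be swapped in one at a time.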
