Method and apparatus for estimating indoor scene layout based on a conditional generative adversarial network

A technology for indoor scene layout estimation by conditional generation, applied in the field of image scene understanding. It addresses problems such as the difficulty of solving for model parameters and the high complexity of network models, and achieves low complexity, fine layout lines, and a precise layout.

Embodiment Construction

[0054] The technical solution of the present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

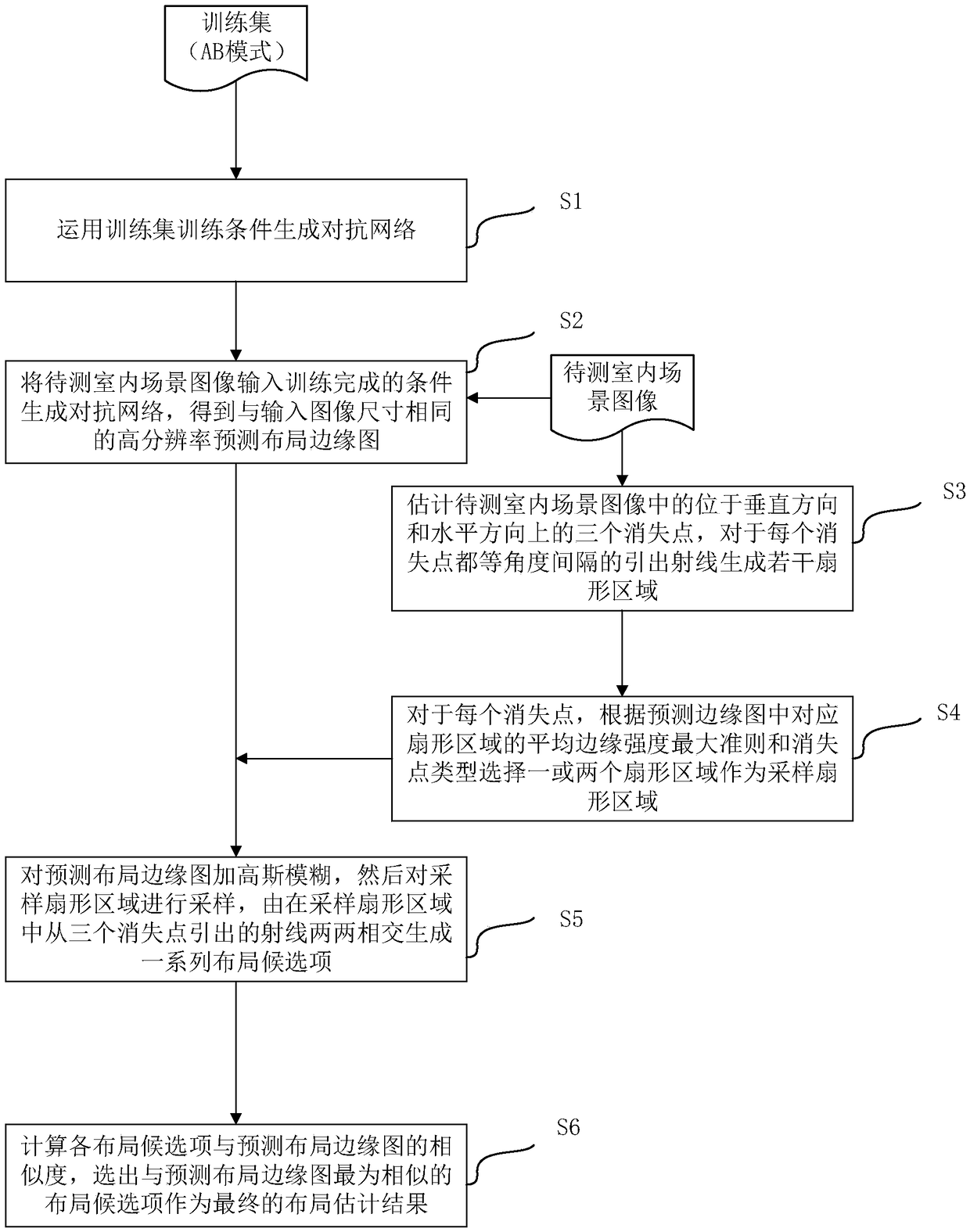

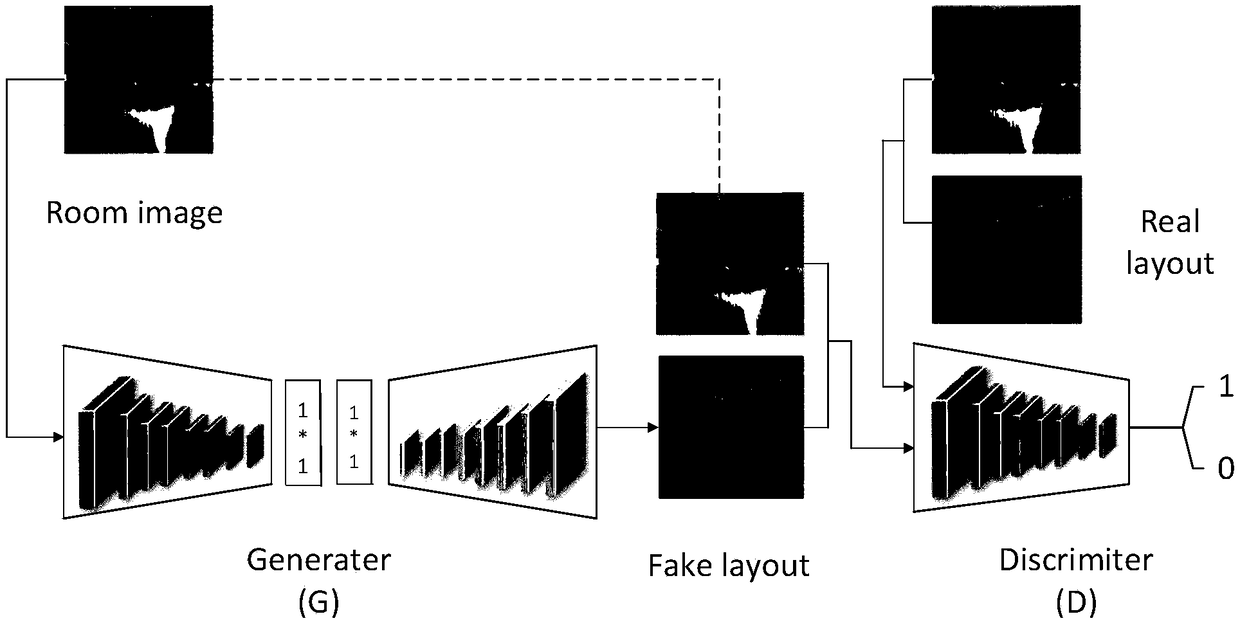

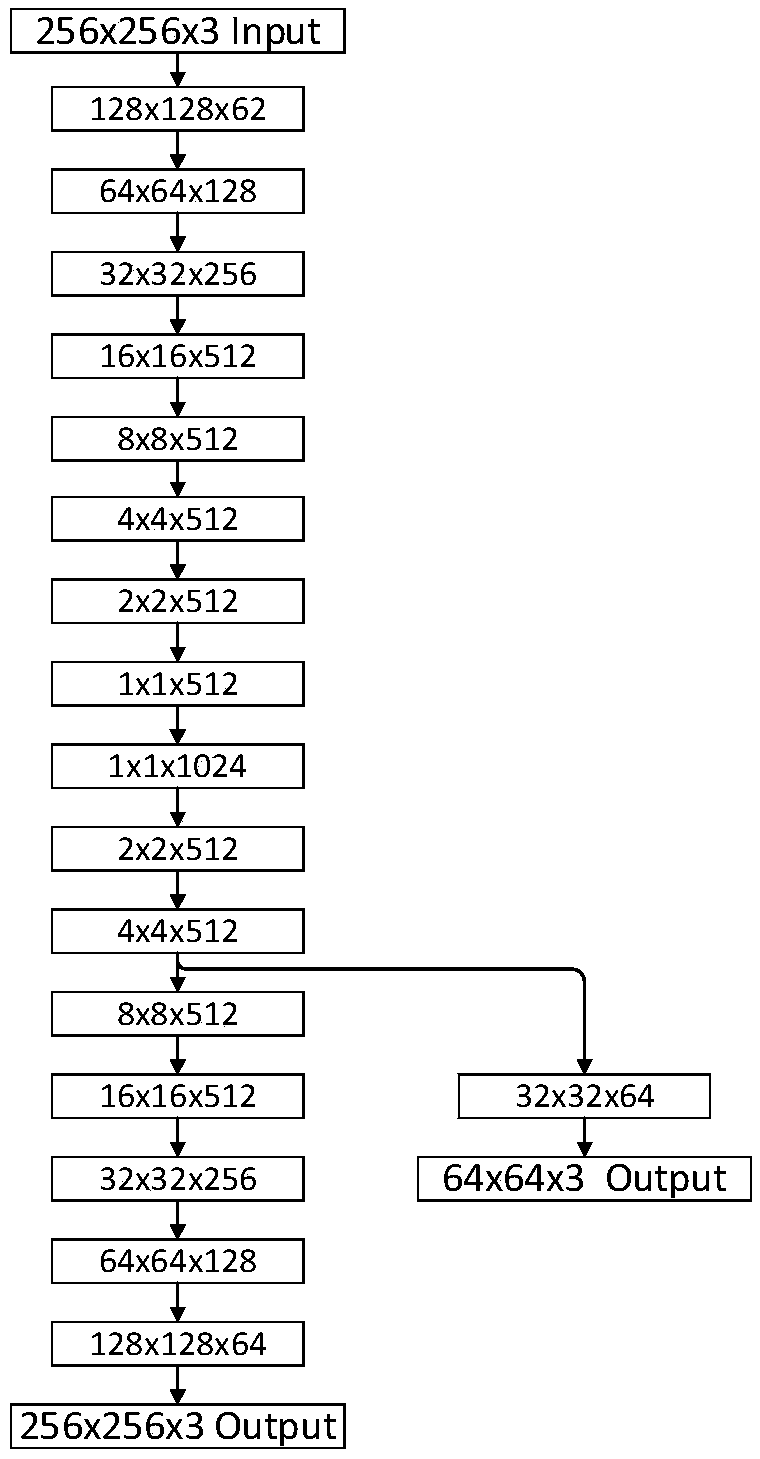

[0055] An embodiment of the present invention provides a method for estimating indoor scene layout based on a conditional generative adversarial network. First, the conditional generative adversarial network classifies each local region of the input image, thereby obtaining a high-resolution predicted layout edge map. Next, a sampling sector is selected, according to the predicted layout edge map, from a series of fan-shaped regions estimated from the vanishing points. Finally, Gaussian blur is applied to the predicted layout edge map so that it aligns well with the most accurate sampling line generated from the vanishing point within the fan-shaped region, yielding the most accurate layout estimate. The flowchart is shown in Figure 1, and the method specifically includes the following steps:
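The last stage of this pipeline, aligning a blurred edge map with candidate sampling lines, can be illustrated with a minimal sketch. It is not the patented procedure. It assumes the predicted layout edge map is a 2-D array of edge probabilities, that candidate sampling lines are rays from a vanishing point to given end points, and that a line is scored by the mean blurred response along it. The function names (`gaussian_blur`, `line_score`, `best_sampling_line`), the scoring rule, and the value of `sigma` are all hypothetical choices made for illustration.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 * sigma (assumed cutoff)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(edge_map, sigma=2.0):
    """Separable Gaussian blur with zero padding.

    Assumes both image dimensions are at least as long as the kernel.
    """
    k = gaussian_kernel(sigma)
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, edge_map)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, rows)


def line_score(blurred, p0, p1, n_samples=100):
    """Mean blurred edge response along the segment p0 -> p1, points as (x, y)."""
    h, w = blurred.shape
    t = np.linspace(0.0, 1.0, n_samples)
    xs = np.rint(p0[0] + t * (p1[0] - p0[0])).astype(int)
    ys = np.rint(p0[1] + t * (p1[1] - p0[1])).astype(int)
    # Ignore samples that fall outside the image (vanishing points often do).
    inside = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    if not inside.any():
        return 0.0
    return float(blurred[ys[inside], xs[inside]].mean())


def best_sampling_line(edge_map, vanishing_point, end_points, sigma=2.0):
    """Return the index of the best-aligned candidate line and all scores."""
    blurred = gaussian_blur(edge_map, sigma)
    scores = [line_score(blurred, vanishing_point, e) for e in end_points]
    return int(np.argmax(scores)), scores


# Toy example: a synthetic edge map whose only edge is the main diagonal.
edge_map = np.zeros((40, 40))
idx = np.arange(40)
edge_map[idx, idx] = 1.0

candidates = [(39, 10), (39, 39), (10, 39)]  # hypothetical ray end points
best, scores = best_sampling_line(edge_map, (0, 0), candidates)
print(best, scores)
```

Blurring before scoring makes the score tolerant of small offsets between the predicted edge and a candidate line: a line a few pixels away from the edge still receives a nonzero response, whereas on the unblurred map it could score zero.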

[0056] Step S1, extract training...
