An efficient global illumination rendering method based on deep learning

A technology combining deep learning and global illumination, applied in neural network learning methods, 3D image processing, instruments, etc., with the effect of saving rendering time.

Embodiment Construction

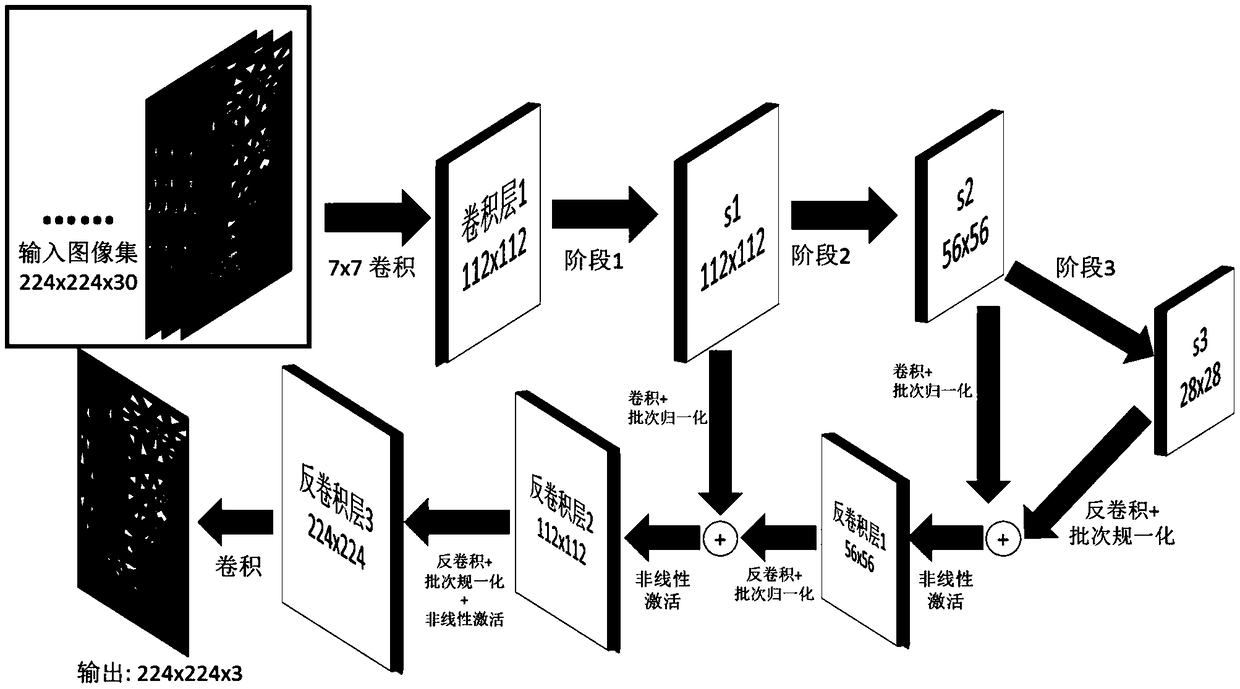

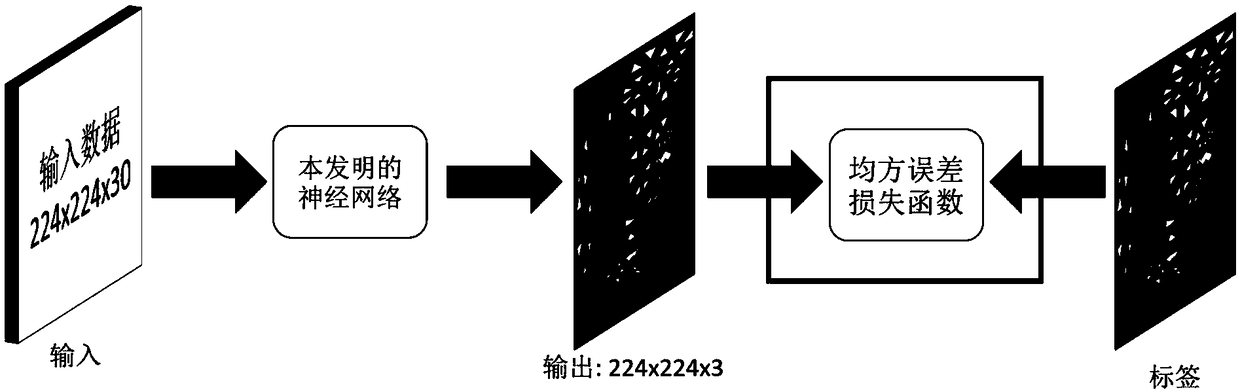

[0036] The implementation of the deep-learning-based lighting rendering method of the present invention is described in detail below with reference to the accompanying drawings.

[0037] Basic concepts of convolutional neural networks (CNNs) and deep learning used in the present invention:

[0038] a) Stride, kernel, and pooling in a convolutional neural network (CNN)

[0039] Stride (step size), convolution kernel size (kernel), and pooling are concepts commonly used when designing convolutional neural network architectures. Image convolution is generally two-dimensional. In a convolution layer, the kernel is the size (height and width) of the convolution kernel, and the stride is the step by which the kernel moves across the input between successive samples. Stride also applies to feature maps, where it denotes the sampling step along the feature map's height and width; that is, the layers of a neural network can themselves perform sampling (downsampling) of the feature map.
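To make the roles of kernel and stride concrete, here is a minimal pure-Python sketch (not taken from the patent) of a single-channel 2-D convolution with a configurable stride, followed by 2×2 max pooling. It assumes valid (unpadded) convolution and max pooling; the function names `output_size`, `conv2d`, and `max_pool2d` are illustrative only. Along each axis, the output size follows the standard relation floor((N + 2P − K) / S) + 1 for input size N, padding P, kernel size K, and stride S.

```python
from typing import List

Matrix = List[List[float]]


def output_size(n: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Output length along one axis (height or width) of a conv/pool layer."""
    return (n + 2 * padding - kernel) // stride + 1


def conv2d(image: Matrix, kernel: Matrix, stride: int = 1) -> Matrix:
    """Valid (unpadded) 2-D convolution as used in CNN layers.

    Like most deep learning frameworks, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    oh = output_size(len(image), kh, stride)
    ow = output_size(len(image[0]), kw, stride)
    out: Matrix = []
    for i in range(oh):
        row = []
        for j in range(ow):
            # The window's top-left corner advances by `stride` pixels per step.
            r0, c0 = i * stride, j * stride
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[r0 + u][c0 + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def max_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Max pooling: keep the largest value in each size x size window."""
    oh = output_size(len(fmap), size, stride)
    ow = output_size(len(fmap[0]), size, stride)
    return [
        [
            max(fmap[i * stride + u][j * stride + v]
                for u in range(size) for v in range(size))
            for j in range(ow)
        ]
        for i in range(oh)
    ]


if __name__ == "__main__":
    img = [[r * 6 + c for c in range(6)] for r in range(6)]  # 6x6 test image
    ones = [[1] * 3 for _ in range(3)]                        # 3x3 box kernel
    fmap = conv2d(img, ones, stride=1)   # 4x4 feature map
    coarse = conv2d(img, ones, stride=2)  # 2x2: the stride itself downsamples
    pooled = max_pool2d(fmap)             # 2x2 after 2x2, stride-2 pooling
    print(len(fmap), len(coarse), pooled)
```

For a 6×6 input and a 3×3 kernel, stride 1 yields a 4×4 feature map while stride 2 yields 2×2, which is how a strided layer doubles as a sampling step; a 2×2, stride-2 max pooling then halves each side of the 4×4 map again.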

...
