An embedded convolutional neural network acceleration method based on ARM

The method applies convolutional neural network technology to the field of embedded convolutional neural network acceleration. It addresses problems such as low computing efficiency, improving computing efficiency and widening the range of use.



Embodiment Construction

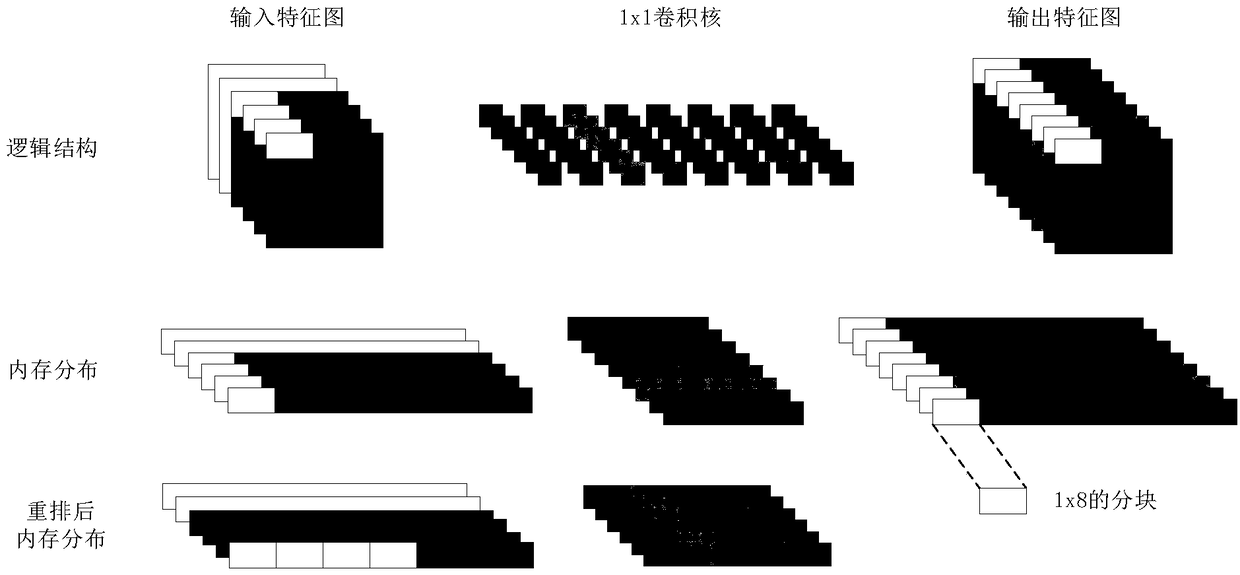

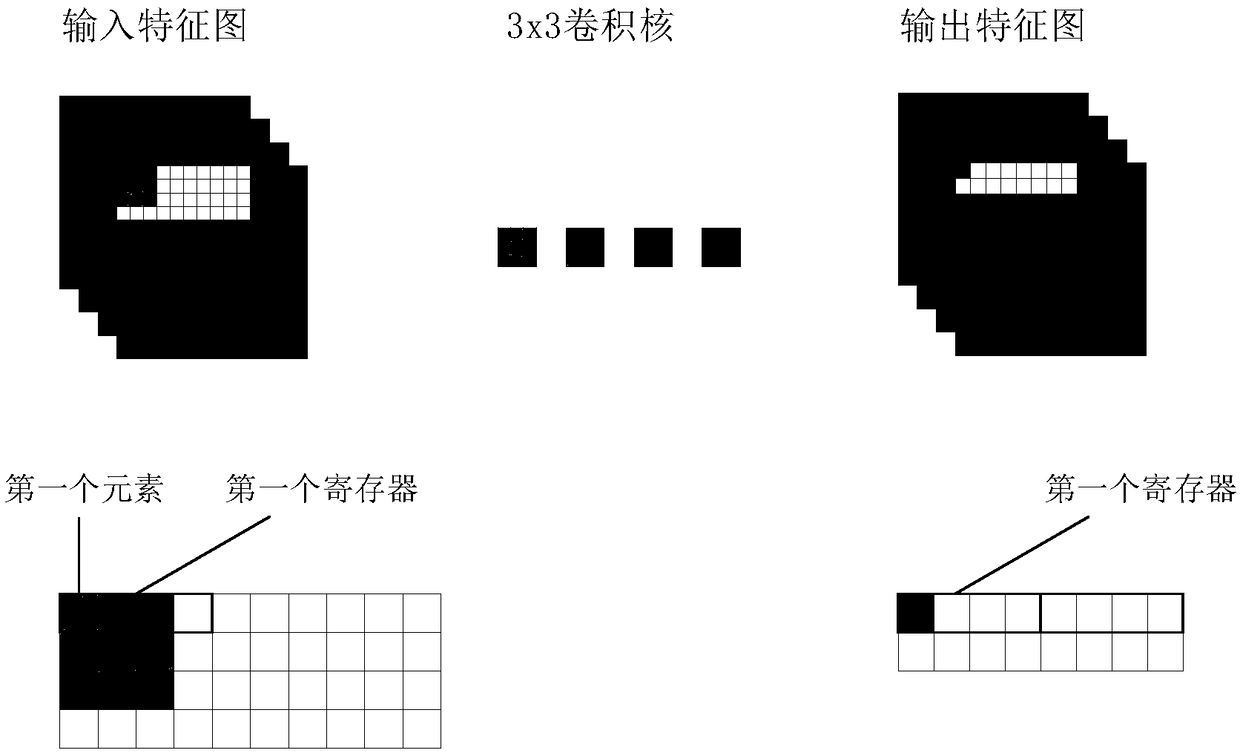

[0035] The optimization method of the present invention is described in further detail below with reference to the drawings, using MobileNetV1 as the example. The present invention is also applicable to other neural networks that use 1×1 convolution and 3×3 depthwise separable convolution.
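For readers unfamiliar with the two layer types named above, the following is a minimal reference sketch in plain Python. It is not the optimized ARM implementation the patent describes, only an unoptimized statement of what the layers compute. The function names, the `[channel][row][column]` list layout, stride 1, and zero padding of 1 are all assumptions made for illustration; the batch normalization and activation that MobileNetV1 places between the two convolutions are omitted.

```python
def depthwise_conv3x3(x, k):
    """3x3 depthwise convolution: each channel is filtered by its own kernel.

    x: input feature map, x[c][i][j]
    k: one 3x3 kernel per channel, k[c][di][dj]
    Assumes stride 1 and zero padding 1, so the output has the same shape as x.
    """
    C, H, W = len(x), len(x[0]), len(x[0][0])
    out = [[[0.0] * W for _ in range(H)] for _ in range(C)]
    for c in range(C):
        for i in range(H):
            for j in range(W):
                acc = 0.0
                for di in range(3):
                    for dj in range(3):
                        ii, jj = i + di - 1, j + dj - 1
                        # Positions outside the map count as zero (padding).
                        if 0 <= ii < H and 0 <= jj < W:
                            acc += x[c][ii][jj] * k[c][di][dj]
                out[c][i][j] = acc
    return out


def pointwise_conv1x1(x, w):
    """1x1 convolution: mixes channels at each pixel independently.

    x: input feature map, x[c_in][i][j]
    w: weight matrix, w[c_out][c_in]
    """
    C_in, H, W = len(x), len(x[0]), len(x[0][0])
    return [
        [[sum(w[o][c] * x[c][i][j] for c in range(C_in)) for j in range(W)]
         for i in range(H)]
        for o in range(len(w))
    ]


def depthwise_separable(x, k, w):
    """Depthwise 3x3 followed by pointwise 1x1 (normalization/activation omitted)."""
    return pointwise_conv1x1(depthwise_conv3x3(x, k), w)
```

The split matters for cost: the depthwise stage needs 9 multiplications per input value, and the pointwise stage needs one multiplication per input-channel and output-channel pair at each pixel, instead of 9 multiplications per such pair in a standard 3×3 convolution.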

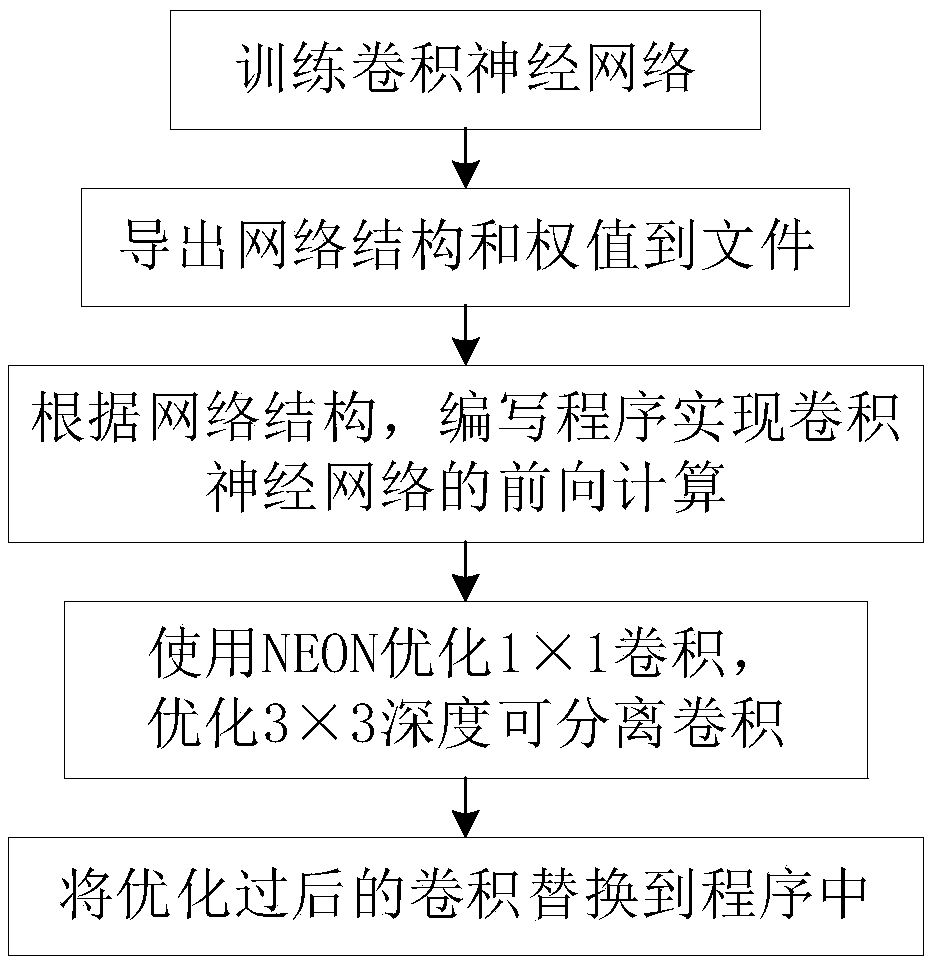

[0036] As shown in Figure 3, the ARM-based embedded convolutional neural network acceleration method provided by the present invention comprises the following steps:

[0037] Step 1, train the lightweight convolutional neural network MobileNetV1 using Caffe or another deep learning framework.

[0038] Step 2, export the trained MobileNetV1 network structure and weights to a file.

[0039] Step 3, write a program that imports the weight file and implements the forward computation of the neural network according to the trained network structure. Different layers in the neural network can be represented by different functions. Function parameters include layer specification parameters, input feature maps,...
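One way to picture Steps 2 and 3 is the sketch below, which imports weights from a binary blob and runs a forward pass in which each layer is an ordinary function. Everything specific here is an assumption for illustration: the source does not describe the file layout, and its parameter list is truncated, so the `(spec, fmap, weights)` signature, the `n_weights` field, and the count-prefixed float32 format are hypothetical. Only simple layers (ReLU, global average pooling, fully connected) are shown to keep the dispatch pattern visible.

```python
import io
import struct


def load_weights(stream):
    """Read a hypothetical layout: uint32 count, then that many little-endian float32s."""
    (n,) = struct.unpack("<I", stream.read(4))
    return list(struct.unpack("<%df" % n, stream.read(4 * n)))


# Each layer is a function taking (spec, fmap, weights); fmap is [channel][row][col].
def relu(spec, fmap, weights):
    return [[[max(0.0, v) for v in row] for row in ch] for ch in fmap]


def global_avg_pool(spec, fmap, weights):
    # Reduce every channel to a single 1x1 value.
    return [[[sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))]] for ch in fmap]


def fully_connected(spec, fmap, weights):
    # Assumed weight order: row-major [out][in] matrix, followed by `out` biases.
    flat = [v for ch in fmap for row in ch for v in row]
    n_in, n_out = spec["in"], spec["out"]
    result = []
    for o in range(n_out):
        acc = weights[n_out * n_in + o]
        for i in range(n_in):
            acc += weights[o * n_in + i] * flat[i]
        result.append([[acc]])
    return result


def forward(network, fmap, weights):
    """Run the layers in order, handing each one its slice of the flat weight list."""
    offset = 0
    for layer_fn, spec in network:
        n = spec.get("n_weights", 0)
        fmap = layer_fn(spec, fmap, weights[offset:offset + n])
        offset += n
    return fmap


# Usage: a tiny three-layer network with weights read from an in-memory "file".
blob = io.BytesIO(struct.pack("<I3f", 3, 0.5, 0.25, 1.0))
network = [
    (relu, {}),
    (global_avg_pool, {}),
    (fully_connected, {"in": 2, "out": 1, "n_weights": 3}),
]
scores = forward(network, [[[-1.0, 3.0], [2.0, -4.0]], [[4.0, 4.0], [4.0, 4.0]]],
                 load_weights(blob))
```

Keeping the network as a list of `(function, spec)` pairs means the exported structure file can drive the forward pass directly, and an individual layer function can later be swapped for an optimized version without touching the loop.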
