Video target detection method based on deep learning

A video object detection method based on deep learning, belonging to the field of object detection and deep learning. It addresses the problems that existing methods are hard to apply in practice, are costly to research, and do not sufficiently integrate the temporal and spatial context information of video. The method improves detection accuracy while balancing accuracy against real-time performance.

Embodiment 1

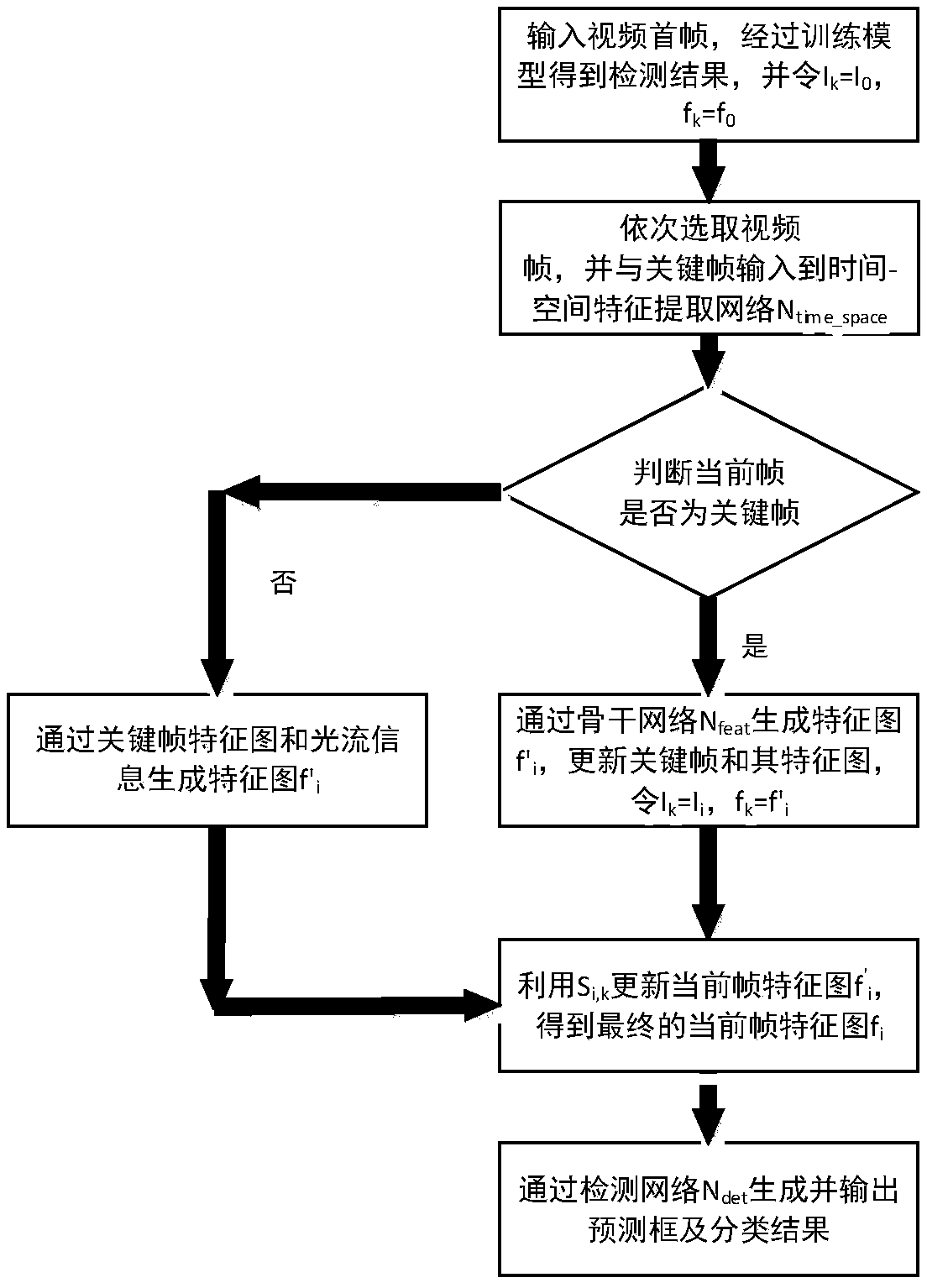

[0042] As shown in the flow chart of Figure 1, the steps of the present invention include:

[0043] S1: Normalize the training image to a size of 600×1000 pixels, and initialize the parameters of the convolutional neural network;
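The size normalization in S1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the source does not state the interpolation method or which dimension is 600, so nearest-neighbor sampling and a height of 600 with a width of 1000 are assumptions, and the function name `resize_nearest` is hypothetical.

```python
def resize_nearest(image, out_h=600, out_w=1000):
    """Resize a 2D image (list of rows) to out_h x out_w by nearest-neighbor sampling.

    Assumption: 600 is the height and 1000 is the width; the source only
    says "600x1000 pixels" and does not name an interpolation method.
    """
    in_h = len(image)
    in_w = len(image[0])
    # Map every output row/column back to a source row/column index.
    rows = [(y * in_h) // out_h for y in range(out_h)]
    cols = [(x * in_w) // out_w for x in range(out_w)]
    return [[image[r][c] for c in cols] for r in rows]


# Usage: a tiny 3x5 "image" stretched to the training size.
tiny = [[10 * r + c for c in range(5)] for r in range(3)]
resized = resize_nearest(tiny)
```

A real pipeline would more likely use an image library with bilinear interpolation; the pure-Python version is only meant to make the fixed output shape explicit.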

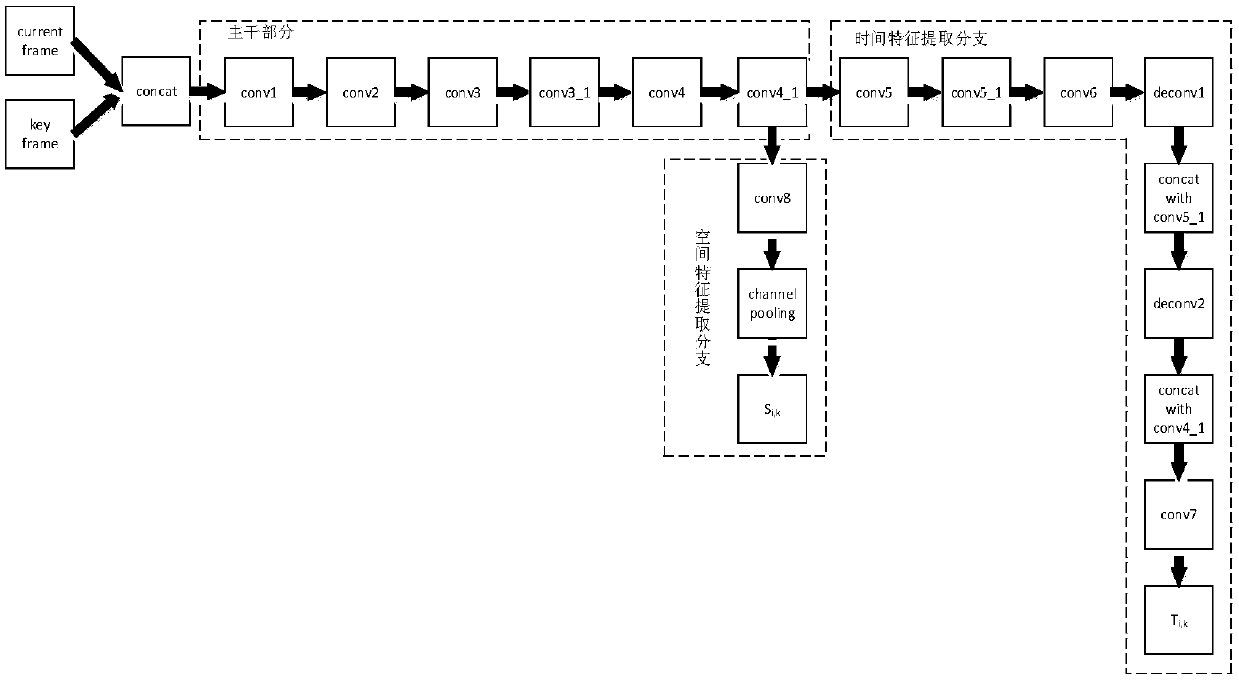

[0044] S2: Train the backbone network, the spatio-temporal feature extraction network, and the detection network;

[0045] S21: Randomly select two frames within n frames of the same video as training samples. In the specific embodiment of the present invention, n is taken as 10. Since there is no concept of key frames and non-key frames during training, the earlier of the two frames is used as the reference frame $I_k$ and the later frame is used as the predicted frame $I_i$;
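The pair sampling in S21 might look like the sketch below. It reads "within n frames" as "both frames fall inside one window of n consecutive frames" and requires the two frames to be distinct; both readings are assumptions, and the name `sample_training_pair` is hypothetical.

```python
import random


def sample_training_pair(num_frames, n=10, rng=None):
    """Pick two distinct frame indices that lie within n consecutive frames.

    Returns (k, i) with k < i: k indexes the reference frame I_k and
    i indexes the predicted frame I_i.
    """
    if num_frames < 2 or n < 2:
        raise ValueError("need at least two frames and a window of at least 2")
    rng = rng or random.Random()
    window = min(n, num_frames)
    # Choose where the window of consecutive frames starts in the video.
    start = rng.randrange(num_frames - window + 1)
    a, b = rng.sample(range(start, start + window), 2)
    # The earlier frame is the reference frame, the later one the predicted frame.
    return min(a, b), max(a, b)


# Usage: draw one pair from a 300-frame video with n = 10.
k, i = sample_training_pair(300, n=10, rng=random.Random(0))
```

Passing a seeded `random.Random` keeps the sampling reproducible across training runs.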

[0046] S22: Take the reference frame $I_k$ as input, extract image features through the backbone network $N_{feat}$, and output the corresponding reference frame feature map $f_k$, expressed as follows:

[0047] $f_k = N_{feat}(I_k)$

...
