A multi-scale hash retrieval method based on deep learning

A multi-scale hash retrieval method based on deep learning, applied in the field of image and multimedia signal processing. It addresses the problems of large quantization error, information loss, and long training time, and achieves the effects of enhancing retention, reducing quantization error, and speeding up encoding.


Embodiment Construction

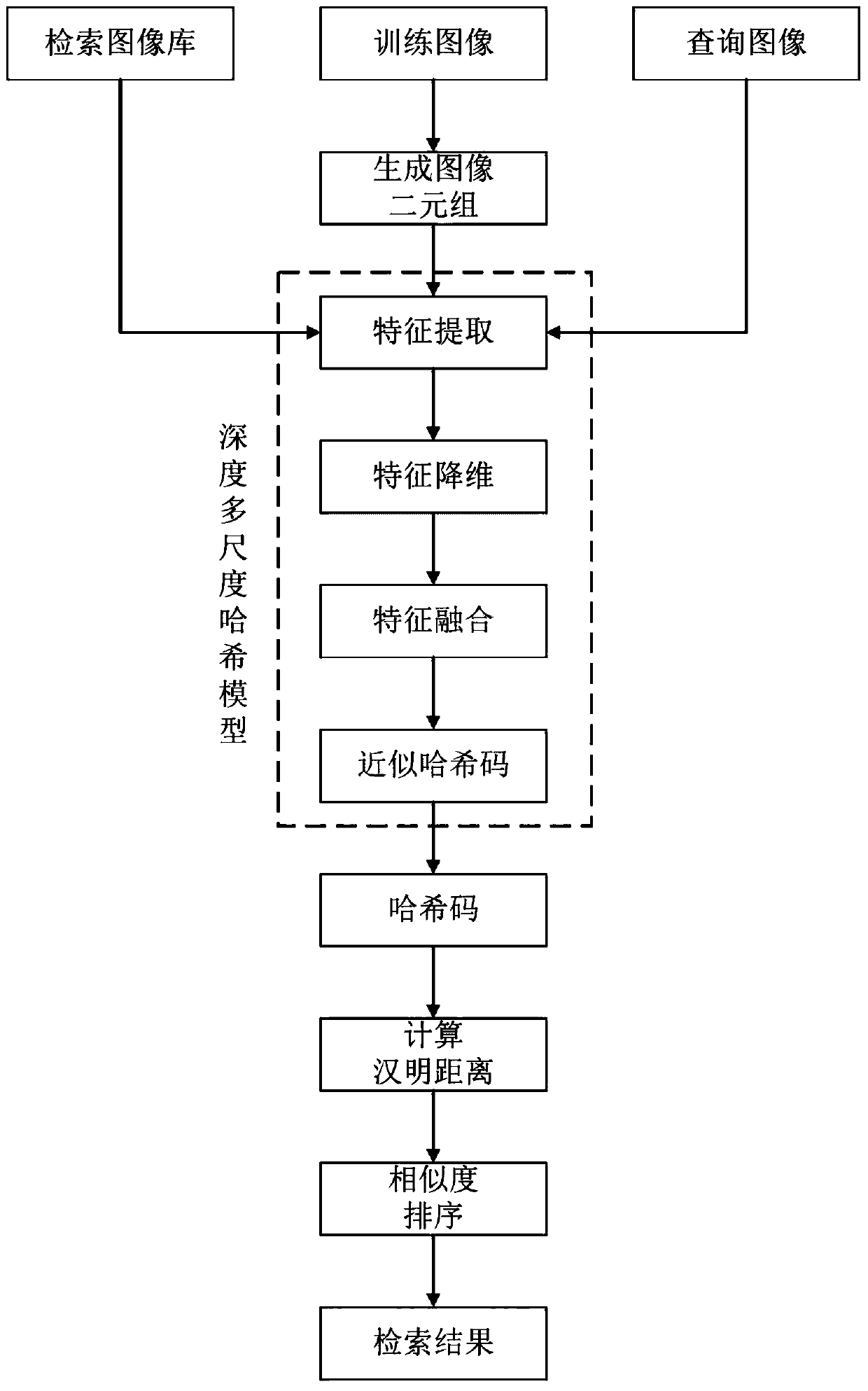

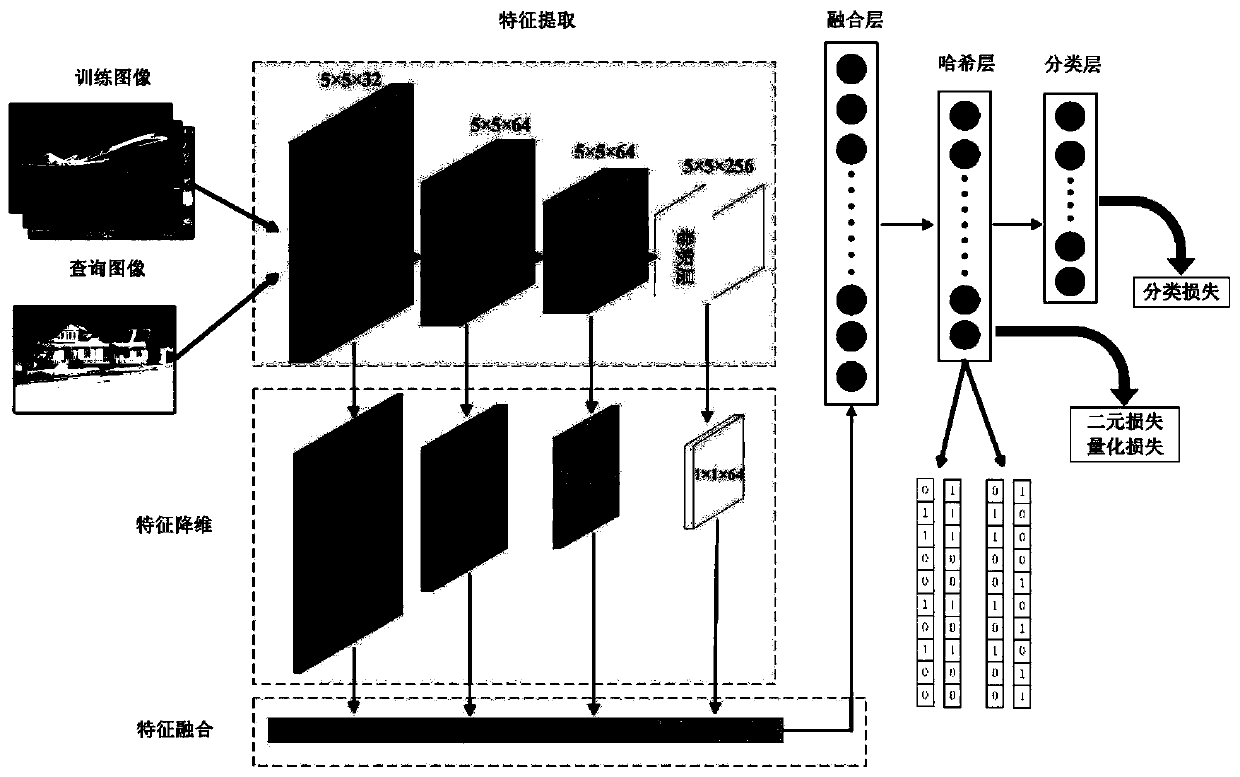

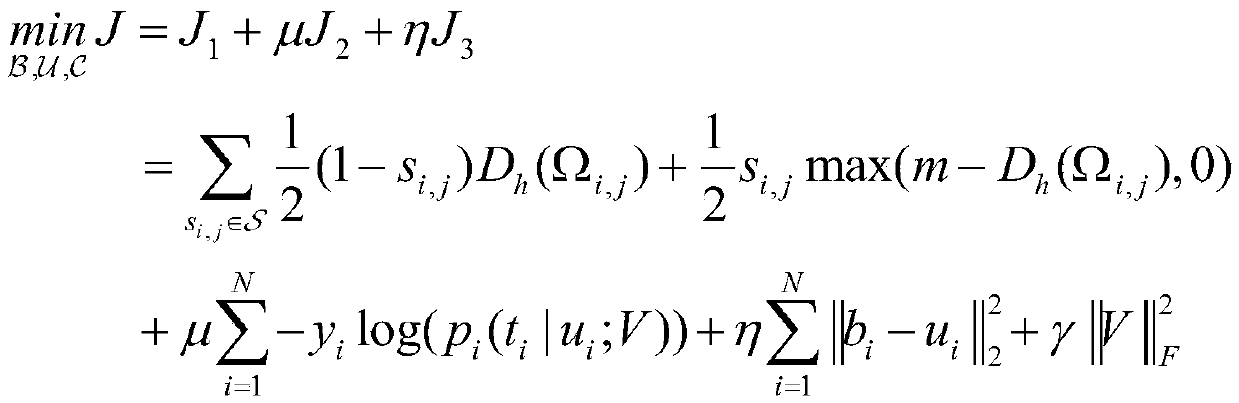

[0030] The specific process of the multi-scale hash retrieval method based on deep learning proposed by the present invention is shown in Figure 1. First, the data is preprocessed: a similarity matrix is obtained from the image labels, and binary image pairs are generated. Next, the model is built, its parameters are adjusted, and it is trained with the backpropagation algorithm. The trained model then encodes each image, giving a unique identifier for the data. Finally, the query image is encoded and the Hamming distance between the query image and each database image is calculated. The results are sorted in ascending order of Hamming distance, and the images whose Hamming distance is less than a certain threshold are returned as the retrieval result.
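The preprocessing and retrieval steps described above can be illustrated with a minimal sketch. It is not the patented implementation: the function names `similarity_matrix`, `hamming_distance`, and `retrieve` are hypothetical, the rule that two images are similar when they share at least one label is an assumption, and the hash codes are assumed to be already produced by the trained model and stored as Python integers.

```python
from typing import List, Sequence, Tuple


def similarity_matrix(labels: Sequence[Sequence[int]]) -> List[List[int]]:
    """Build a pairwise similarity matrix from image labels.

    Assumption: S[i][j] = 1 when images i and j share at least one label,
    otherwise 0. The source only says the matrix is derived from the labels.
    """
    label_sets = [set(item) for item in labels]
    n = len(label_sets)
    return [
        [1 if label_sets[i] & label_sets[j] else 0 for j in range(n)]
        for i in range(n)
    ]


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two binary codes differ."""
    return bin(a ^ b).count("1")


def retrieve(
    query_code: int, database_codes: Sequence[int], threshold: int
) -> List[Tuple[int, int]]:
    """Return (database index, distance) pairs in ascending order of distance.

    Only entries whose Hamming distance is strictly less than the threshold
    are kept, following the description in the text.
    """
    hits = [
        (hamming_distance(query_code, code), index)
        for index, code in enumerate(database_codes)
    ]
    hits = [(dist, index) for dist, index in hits if dist < threshold]
    hits.sort()  # ascending by distance, ties broken by database index
    return [(index, dist) for dist, index in hits]


if __name__ == "__main__":
    # Three images with label lists; images 0 and 1 share label 0.
    print(similarity_matrix([[0], [0, 1], [2]]))
    # Four 4-bit database codes and one query code (illustrative values).
    print(retrieve(0b1010, [0b1010, 0b1000, 0b0101, 0b1011], threshold=2))
```

Storing each code as an integer keeps the distance computation to one XOR followed by a bit count, which is the usual reason binary hash codes are fast to compare.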

[0031] The present invention is further described below with reference to a specific embodiment (to which it is not limited) and the accompanying drawings.

[0032] (1) Data preprocessing

[00...
