Lightweight human action recognition method based on deep learning

A human action recognition and deep learning technology, applied in the field of graphics and image processing, that addresses problems such as overly deep networks and the large number of parameters in human action recognition models.


Embodiment Construction

[0073] To make the purpose, technical solutions and advantages of the present invention clearer, the present invention is described in further detail below with reference to the examples and the accompanying drawings, which are not intended to limit the present invention.

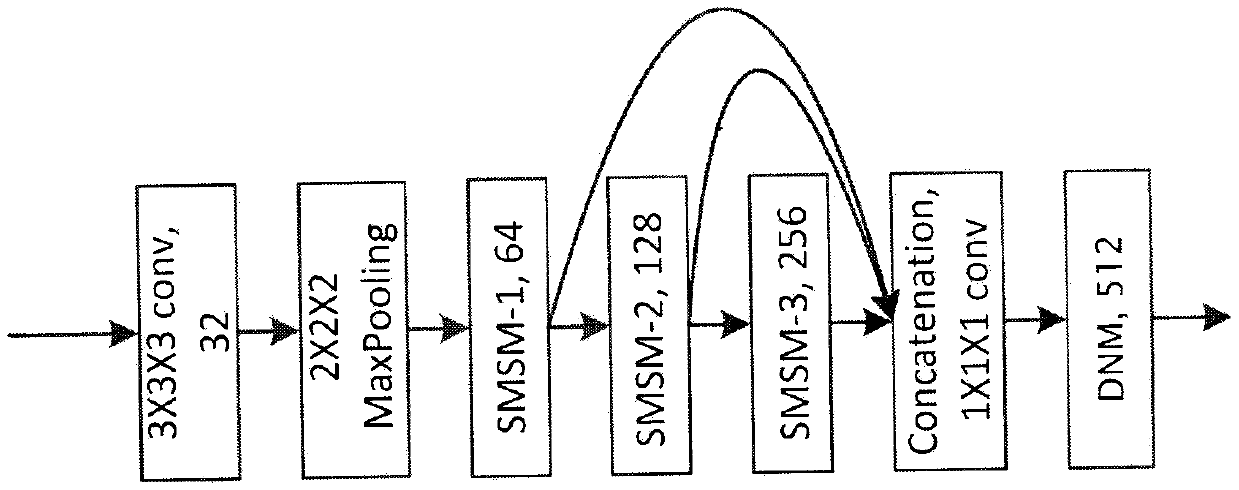

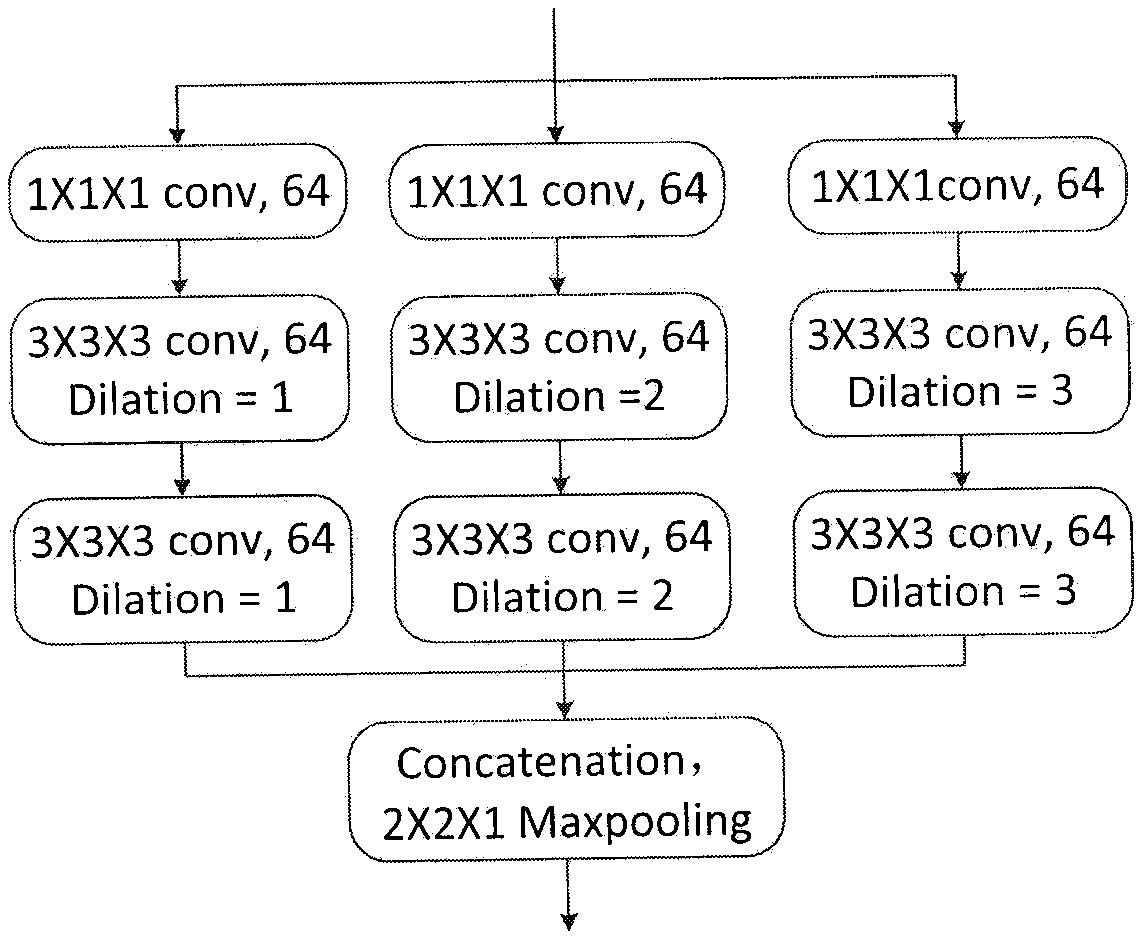

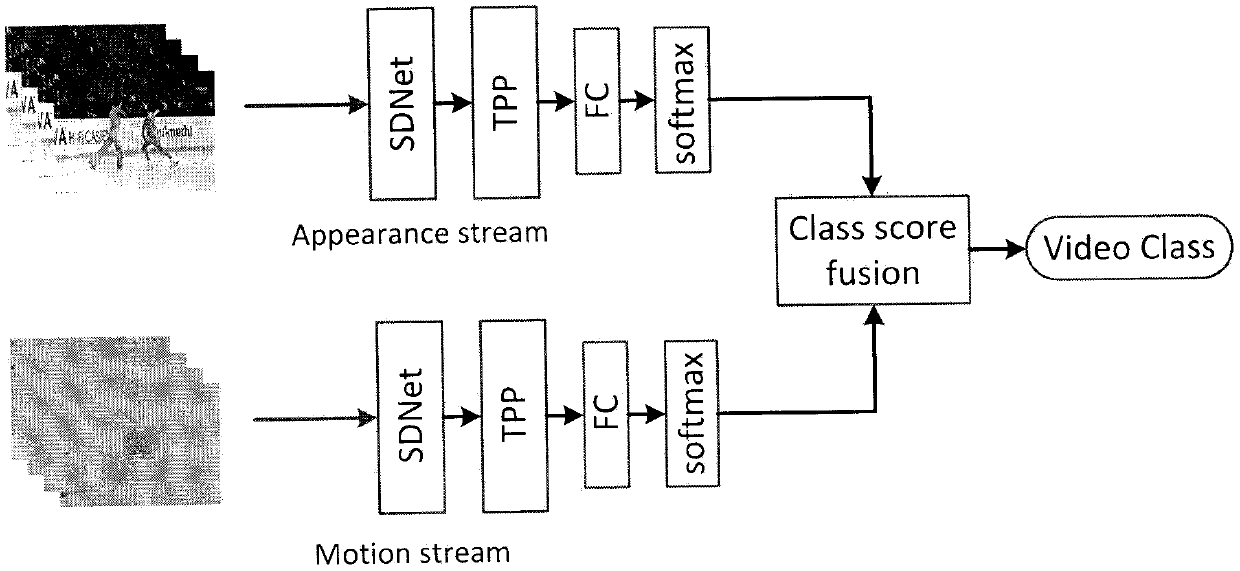

[0074] As shown in image 3, the present invention first uses the proposed lightweight deep learning network SDNet, which combines a shallow network with a deep network, to extract and represent the features of both streams of a spatio-temporal two-stream architecture. A temporal pyramid pooling (TPP) layer then aggregates the frame-level features of the temporal stream and of the spatial stream into a video-level representation, after which a fully connected layer and a softmax layer produce each stream's recognition result for the input sequence. Finally, the results of the two streams are combined by weighted average fusion to obtain the final ...
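The post-feature stages described in [0074] (TPP aggregation, fully connected layer plus softmax per stream, then weighted average fusion) can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the patented implementation: SDNet itself is not modeled (frame-level features are supplied as toy lists), and the pyramid levels (1, 2, 4), max pooling within each segment, and the fusion weights are all assumptions, because the text shown here does not specify them. The function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]

# ASSUMPTION: pyramid level n splits the frame axis into n equal segments.
# The levels actually used by the patent are not stated in this excerpt.
PYRAMID_LEVELS = (1, 2, 4)


def temporal_pyramid_pool(frames: Sequence[Sequence[float]],
                          levels: Sequence[int] = PYRAMID_LEVELS) -> Vector:
    """Aggregate T frame-level vectors (each of length D) into one video-level
    vector of length D * sum(levels) by max-pooling every segment. The output
    length does not depend on T, so clips of any length map to a fixed size."""
    t = len(frames)
    if t == 0:
        raise ValueError("need at least one frame")
    d = len(frames[0])
    pooled: Vector = []
    for n_bins in levels:
        for b in range(n_bins):
            start = (b * t) // n_bins
            # Guarantee a non-empty segment even when T < n_bins.
            end = max(((b + 1) * t) // n_bins, start + 1)
            segment = frames[start:end]
            pooled.extend(max(f[k] for f in segment) for k in range(d))
    return pooled


def fully_connected(x: Sequence[float], weights: Sequence[Sequence[float]],
                    bias: Sequence[float]) -> Vector:
    """One dense layer: logits[c] = dot(weights[c], x) + bias[c]."""
    return [sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax (subtract the max logit before exp)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def stream_scores(frames: Sequence[Sequence[float]],
                  weights: Sequence[Sequence[float]],
                  bias: Sequence[float],
                  levels: Sequence[int] = PYRAMID_LEVELS) -> Vector:
    """Class probabilities for one stream: TPP -> fully connected -> softmax."""
    video_level = temporal_pyramid_pool(frames, levels)
    return softmax(fully_connected(video_level, weights, bias))


def weighted_average_fusion(p_spatial: Sequence[float],
                            p_temporal: Sequence[float],
                            w_spatial: float = 1.0,
                            w_temporal: float = 1.0) -> Vector:
    """Normalized weighted average of the two streams' probabilities.
    ASSUMPTION: default weights are placeholders, not values from the patent."""
    total = w_spatial + w_temporal
    return [(w_spatial * a + w_temporal * b) / total
            for a, b in zip(p_spatial, p_temporal)]


if __name__ == "__main__":
    # Toy 2-D frame features standing in for SDNet outputs (hypothetical).
    rgb = [[1.0, 0.0], [3.0, 2.0], [2.0, 5.0], [0.0, 1.0]]  # 4 frames
    flow = [[0.5, 1.0], [1.5, 0.5], [2.0, 2.5]]             # 3 frames
    dim = 2 * sum(PYRAMID_LEVELS)        # 14 inputs to the FC layer
    w = [[0.1] * dim, [-0.1] * dim]      # 2 classes; toy weights shared by both streams
    b = [0.0, 0.0]
    fused = weighted_average_fusion(stream_scores(rgb, w, b),
                                    stream_scores(flow, w, b), 1.0, 1.5)
    print("predicted class:", fused.index(max(fused)))
```

Because the TPP output length is D × (1 + 2 + 4) regardless of the frame count, the four-frame spatial clip and the three-frame temporal clip in the demo both feed a 14-input fully connected layer. This fixed-length property is what allows a single video-level classifier to sit on top of variable-length frame sequences.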
