Reconstruction method based on rapid fusion of point and line features

A point and line feature fusion technique, applied in the field of 3D reconstruction based on the rapid fusion of point and line features. It addresses the problems of unreliable results and of too few points in the reconstructed 3D point cloud, and achieves fast feature extraction and matching, accurate extraction, and a small reprojection error.
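The summary cites a small reprojection error as an outcome. For reference, the standard definition (not a formula taken from this patent) of the mean reprojection error over $N$ observed points is $e = \frac{1}{N}\sum_{i=1}^{N} \lVert \mathbf{x}_i - \pi(P\mathbf{X}_i) \rVert$, where $P$ is a 3x4 camera matrix, $\mathbf{X}_i$ a reconstructed 3D point, $\mathbf{x}_i$ its observed pixel position, and $\pi$ the division by the homogeneous coordinate. The following is a minimal pure-Python sketch for a single camera; `project` and `mean_reprojection_error` are hypothetical helper names.

```python
import math
from typing import List, Sequence, Tuple

Matrix34 = Sequence[Sequence[float]]  # 3x4 camera projection matrix


def project(P: Matrix34, X: Sequence[float]) -> Tuple[float, float]:
    """Project a 3D point X = (x, y, z) to pixel coordinates with camera matrix P."""
    Xh = (X[0], X[1], X[2], 1.0)  # homogeneous 3D point
    u, v, w = (sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3))
    return u / w, v / w  # perspective division (the pi(.) in the formula)


def mean_reprojection_error(P: Matrix34,
                            points3d: List[Sequence[float]],
                            observations: List[Tuple[float, float]]) -> float:
    """Average pixel distance between observed points and reprojected 3D points."""
    errors = [math.dist(project(P, X), obs) for X, obs in zip(points3d, observations)]
    return sum(errors) / len(errors)
```

For example, with $P = K[I \mid 0]$ and $K$ having focal length 100 and principal point (50, 40), the point (1, 2, 10) projects to (60, 60); an observation at (63, 64) therefore contributes an error of 5 pixels.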


Detailed Description of the Embodiments

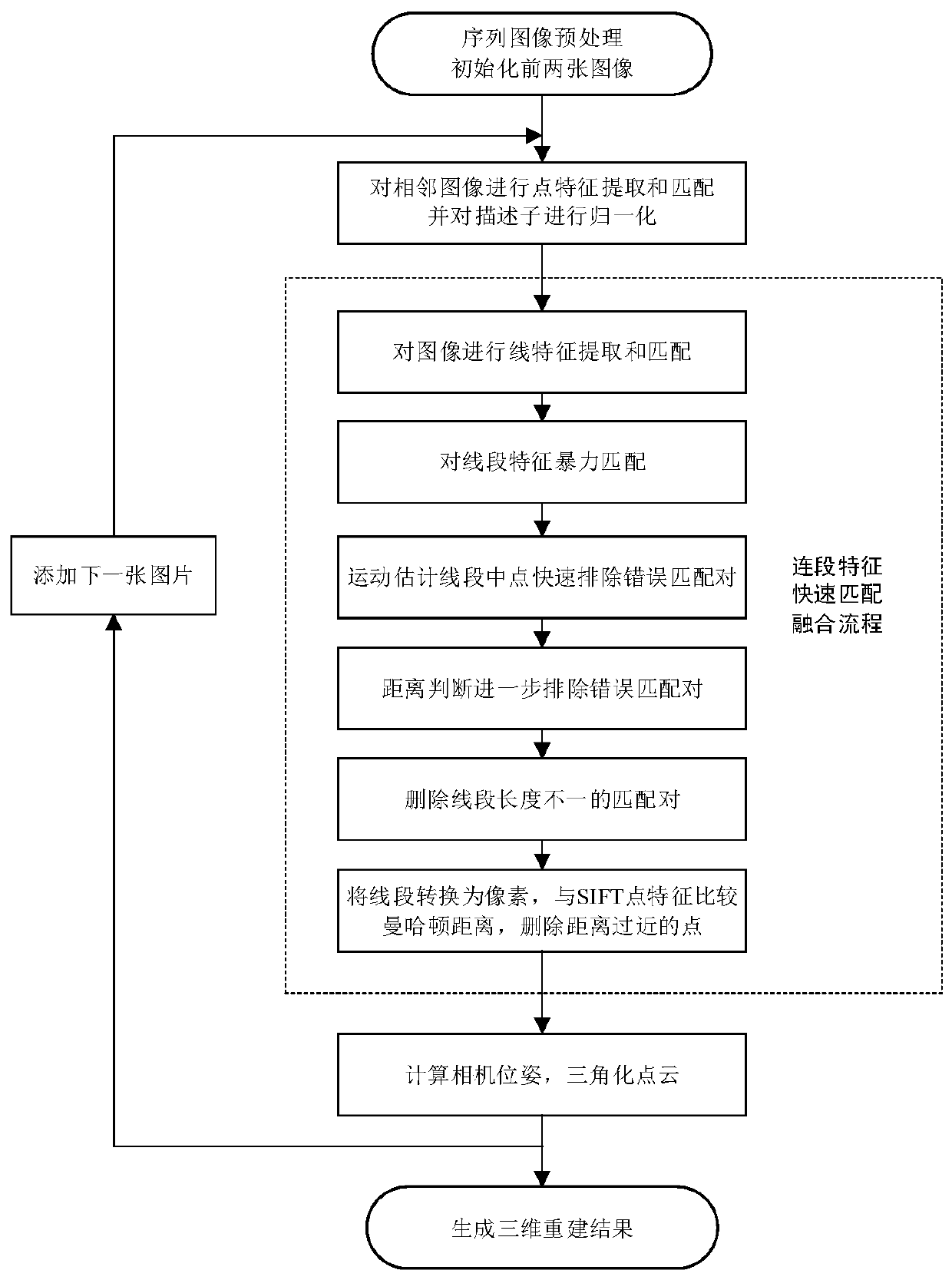

[0021] Referring to Figure 1, the part enclosed by the dashed line is the rapid point-line feature fusion process of the present invention. As Figure 1 shows, the point features of the image sequence are first matched and their descriptors normalized. The line segment features are then matched in a coarse-to-fine manner. Finally, the point and line features are fused and used together in the 3D reconstruction.
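The three stages of Figure 1 can be illustrated with the sketch below. The excerpt does not state how the descriptors are normalized, how line segments are described, or which criteria the coarse and fine stages use, so every choice here is an assumption: L2 normalization of descriptors, nearest-neighbour point matching with a ratio test, an orientation filter as the coarse line stage, the smallest descriptor distance as the fine line stage, and a "fusion" that simply merges the tagged point and line correspondences into one list. All function names are hypothetical. This is not the patent's implementation.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]  # a line segment given by its two endpoints


def l2_normalize(desc: Sequence[float]) -> List[float]:
    """Scale a descriptor to unit Euclidean length; a zero vector is returned unchanged."""
    norm = math.sqrt(sum(v * v for v in desc))
    return [v / norm for v in desc] if norm > 0 else list(desc)


def match_points(desc1, desc2, ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Assumed point stage: normalize descriptors, then nearest neighbour plus ratio test."""
    d1 = [l2_normalize(d) for d in desc1]
    d2 = [l2_normalize(d) for d in desc2]
    matches = []
    for i, a in enumerate(d1):
        ranked = sorted((math.dist(a, b), j) for j, b in enumerate(d2))
        if not ranked:
            break  # no candidates in the second image
        best_d, best_j = ranked[0]
        # Keep the match only if it is clearly better than the runner-up.
        if len(ranked) == 1 or best_d < ratio * ranked[1][0]:
            matches.append((i, best_j))
    return matches


def orientation(seg: Segment) -> float:
    """Undirected segment orientation in radians (the angle taken modulo pi)."""
    (x1, y1), (x2, y2) = seg
    return math.atan2(y2 - y1, x2 - x1) % math.pi


def match_lines(segs1, desc1, segs2, desc2,
                max_angle: float = math.radians(10)) -> List[Tuple[int, int]]:
    """Assumed coarse-to-fine line stage: orientation gate, then descriptor distance."""
    matches = []
    for i, (s, d) in enumerate(zip(segs1, desc1)):
        # Coarse stage: keep only segments whose orientation is similar.
        candidates = []
        for j, t in enumerate(segs2):
            diff = abs(orientation(s) - orientation(t))
            diff = min(diff, math.pi - diff)  # 0 and pi describe the same direction
            if diff < max_angle:
                candidates.append(j)
        if not candidates:
            continue
        # Fine stage: choose the candidate whose normalized descriptor is closest.
        nd = l2_normalize(d)
        best = min(candidates, key=lambda j: math.dist(nd, l2_normalize(desc2[j])))
        matches.append((i, best))
    return matches


def fuse(kps1, kps2, point_matches, segs1, segs2, line_matches):
    """Assumed fusion: merge both match sets into one tagged correspondence list."""
    fused = [("point", kps1[i], kps2[j]) for i, j in point_matches]
    fused += [("line", segs1[i], segs2[j]) for i, j in line_matches]
    return fused
```

In practice the descriptors would come from a point detector and a line-segment descriptor. The brute-force loops cost O(n·m) per image pair and are only meant for small examples; the coarse orientation gate is there so that the more expensive descriptor comparison runs on fewer candidates.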

[0022] The present invention performs sparse 3D reconstruction on fountain-P11, part of the multi-view dataset collection of the Swiss Federal Institute of Technology. The experimental environment is a VMware Ubuntu 16.04 virtual machine with a 4-core processor and 8 GB of memory; no GPU acceleration is used. The 3D reconstruction program is written with OpenCV 4.0. The method is explained further below by walking through the experimental procedure.
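Sparse reconstruction turns matched correspondences into 3D points by triangulation. The excerpt does not show how this step is implemented (OpenCV, which the experiment uses, does provide `cv::triangulatePoints`). For illustration only, the sketch below performs a linear triangulation of one two-view correspondence. It assumes known 3x4 projection matrices and noise-free pixel coordinates. `triangulate` and `solve3` are hypothetical helpers, and because the homogeneous coordinate is fixed to 1, points at infinity are not handled.

```python
from typing import List, Sequence, Tuple


def solve3(M: List[List[float]], y: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    A = [row[:] + [y[i]] for i, row in enumerate(M)]  # augmented matrix
    n = 3
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for c in range(col, n + 1):
                A[r][c] -= f * A[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        x[i] = (A[i][n] - sum(A[i][j] * x[j] for j in range(i + 1, n))) / A[i][i]
    return x


def triangulate(P1: Sequence[Sequence[float]], P2: Sequence[Sequence[float]],
                x1: Tuple[float, float], x2: Tuple[float, float]) -> List[float]:
    """Linear triangulation of one correspondence, fixing the homogeneous W to 1."""
    rows = []
    for P, (u, v) in ((P1, x1), (P2, x2)):
        # From u = (P0 . X) / (P2 . X): u * P2 - P0 = 0, and likewise for v with P1.
        rows.append([u * P[2][c] - P[0][c] for c in range(4)])
        rows.append([v * P[2][c] - P[1][c] for c in range(4)])
    A = [r[:3] for r in rows]
    b = [-r[3] for r in rows]  # move the W = 1 column to the right-hand side
    # Least-squares solution of A X = b via the normal equations (A^T A) X = A^T b.
    AtA = [[sum(A[k][i] * A[k][j] for k in range(4)) for j in range(3)] for i in range(3)]
    Atb = [sum(A[k][i] * b[k] for k in range(4)) for i in range(3)]
    return solve3(AtA, Atb)
```

For example, with $P_1 = [I \mid 0]$ and $P_2 = [I \mid (-1, 0, 0)^T]$, the normalized image points (0.125, 0.05) and (-0.125, 0.05) triangulate back to the 3D point (0.5, 0.2, 4). A production pipeline would normally solve the homogeneous 4x4 system with an SVD, which is better conditioned than the normal equations used here.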

[0023] Step 1: Image preprocessing. The dataset contains 11 sequential images, each 3072×2048 pixels. Use the E...
