Multi-modal emotion classification method based on text, voice and video fusion

A multi-modal emotion classification technology that fuses text, voice and video, applied in character and pattern recognition, other database clustering/classification, instruments, etc. It addresses the problems of unstable accuracy and high cost, and offers good flexibility, improved accuracy and easy implementation.


Embodiment

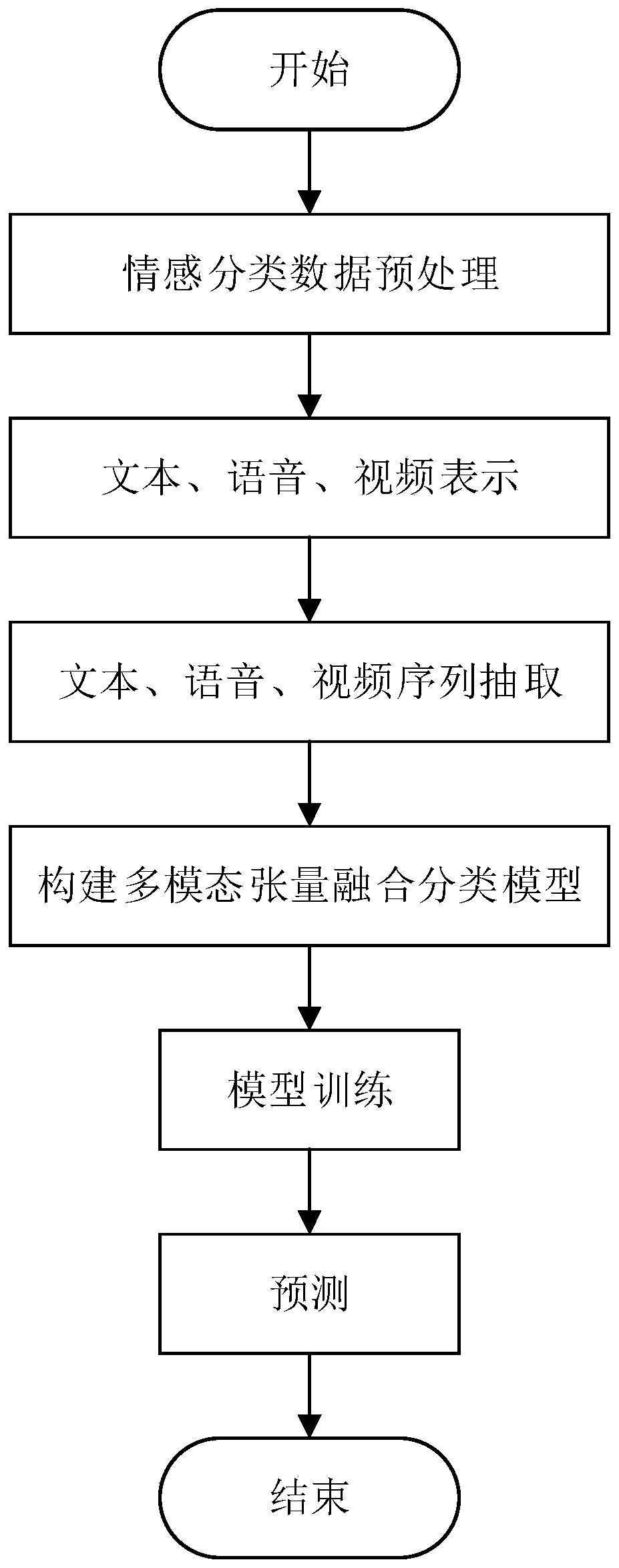

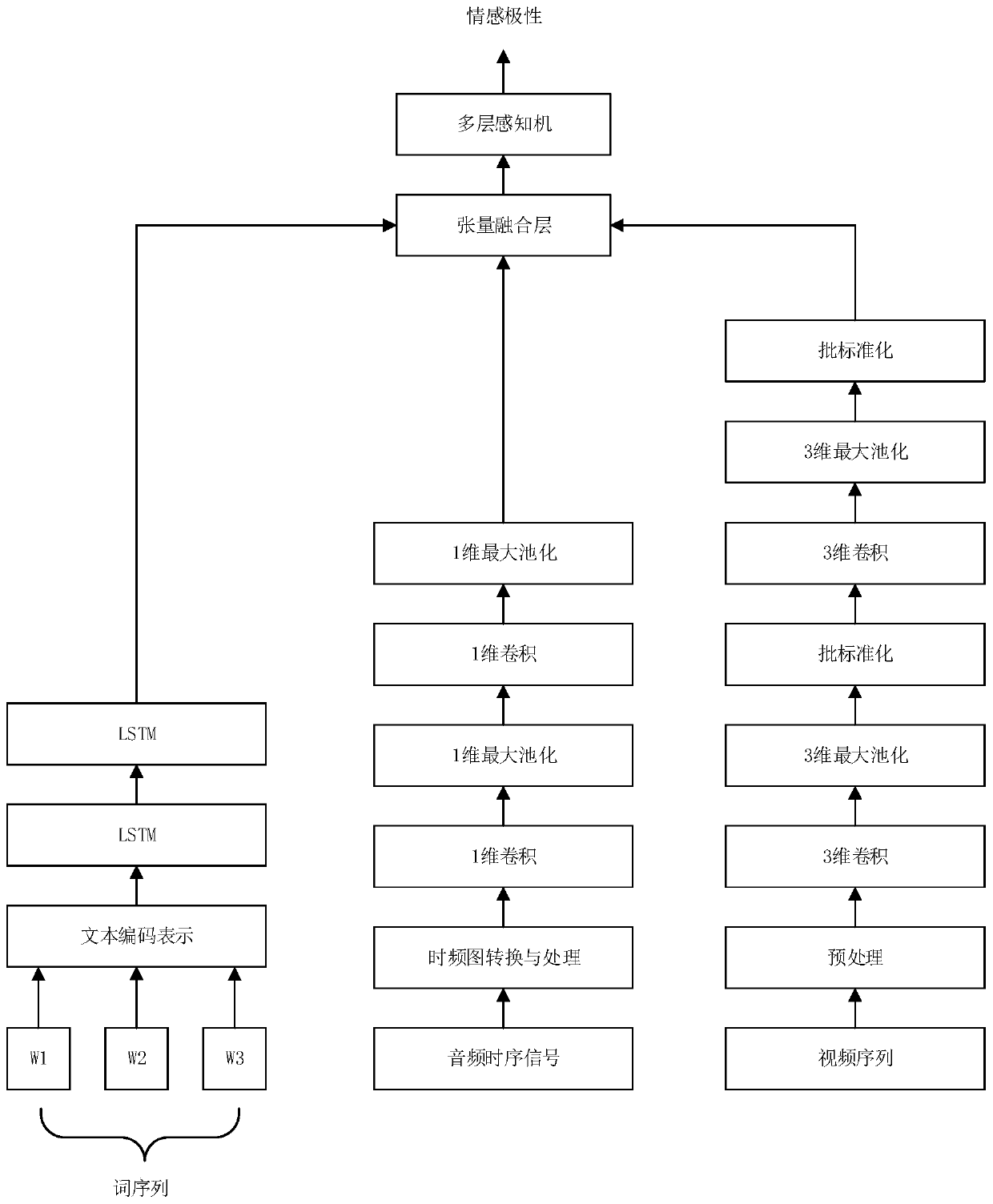

[0055] As shown in Figure 4, this embodiment takes the MOSI data set of Carnegie Mellon University as an example. The original data of the three modalities is obtained first and then preprocessed.

[0056] Each segment is marked with its emotion label, and the corresponding video subtitle data (text modality), same-frequency audio data (audio modality), and video data (video modality) are aligned. For example:

[0057] Ordinary sample: "I love this movie." The emotion category can be marked as positive directly from the semantics;

[0058] Semantically ambiguous sample: "The movie is sick." The text alone is ambiguous, but combined with a loud voice and obvious frowns in the video, the emotion category can be marked as negative;
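The alignment step described in [0056] can be illustrated with a minimal sketch. It assumes each subtitle comes with a start and end time and that audio and video are cut to that time span. The `AlignedSegment` layout, the `align_segment` helper, and the sample rate and frame rate values are all hypothetical and are not taken from the patent or from the MOSI data set.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class AlignedSegment:
    """One labelled segment with its three aligned modalities (hypothetical layout)."""
    text: str            # subtitle text for the segment (text modality)
    audio: List[float]   # audio samples inside the segment (audio modality)
    frames: List[int]    # indices of video frames inside the segment (video modality)
    label: str           # emotion label, e.g. "positive" or "negative"


def align_segment(text: str, start_s: float, end_s: float, label: str,
                  audio: Sequence[float], sample_rate: int,
                  n_frames: int, fps: float) -> AlignedSegment:
    """Cut the audio and video streams to the subtitle's time span."""
    if end_s <= start_s:
        raise ValueError("segment must have positive duration")
    # Convert the time span into audio sample indices and video frame indices.
    a0, a1 = int(start_s * sample_rate), int(end_s * sample_rate)
    f0, f1 = int(start_s * fps), min(int(end_s * fps), n_frames)
    return AlignedSegment(text, list(audio[a0:a1]), list(range(f0, f1)), label)


# Toy usage: a 2-second clip with 10 Hz audio and 5 fps video (assumed values).
audio = [0.0] * 20
seg = align_segment("The movie is sick.", 0.5, 1.5, "negative",
                    audio, sample_rate=10, n_frames=10, fps=5.0)
```

Keeping the label next to all three modalities means an ambiguous sentence such as the one in [0058] is always stored together with the audio and video cues that resolve it.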

[0059] In the training phase, the original samples of the three modalities are fed into the multimodal emotion classification model based on tensor fusion for training, yielding the emotion classification model, which is used to judge the emotion category of test samples during testing; In...
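This excerpt does not spell out the fusion step, so the following is only a sketch of one common formulation of tensor fusion: each modality embedding is extended with a constant 1 and the three-way outer product is flattened into a single feature vector. Whether the patent uses exactly this form is an assumption. The function names, the toy embeddings, and the hand-picked weights are hypothetical, and the weights are not trained.

```python
from typing import List


def tensor_fusion(text: List[float], audio: List[float], video: List[float]) -> List[float]:
    """Flattened three-way outer product of the embeddings, each extended with a 1.

    The appended 1 keeps the unimodal and bimodal terms in the result
    alongside the trimodal interaction terms.
    """
    t, a, v = [1.0] + text, [1.0] + audio, [1.0] + video
    return [ti * ai * vi for ti in t for ai in a for vi in v]


def classify(fused: List[float], weights: List[List[float]], labels: List[str]) -> str:
    """Score the fused vector with one linear row per label; return the best label."""
    scores = [sum(w * x for w, x in zip(row, fused)) for row in weights]
    return labels[scores.index(max(scores))]


# Toy embeddings (assumed values): 2-d text, 1-d audio, 1-d video.
fused = tensor_fusion([0.2, -0.1], [0.9], [-0.7])

# Placeholder weights standing in for a trained classifier.
labels = ["negative", "positive"]
weights = [[1.0] + [0.0] * 11, [0.0] * 12]
prediction = classify(fused, weights, labels)
```

The fused vector has (2 + 1) × (1 + 1) × (1 + 1) = 12 entries here. In a real model the linear scorer would be replaced by a trained network.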
