Sparse multi-view-angle three-dimensional reconstruction method for indoor scenes

A 3D reconstruction technique for indoor scenes, applied in the fields of computer vision and computer graphics, that offers good scalability and is easy to implement.

Embodiment approach

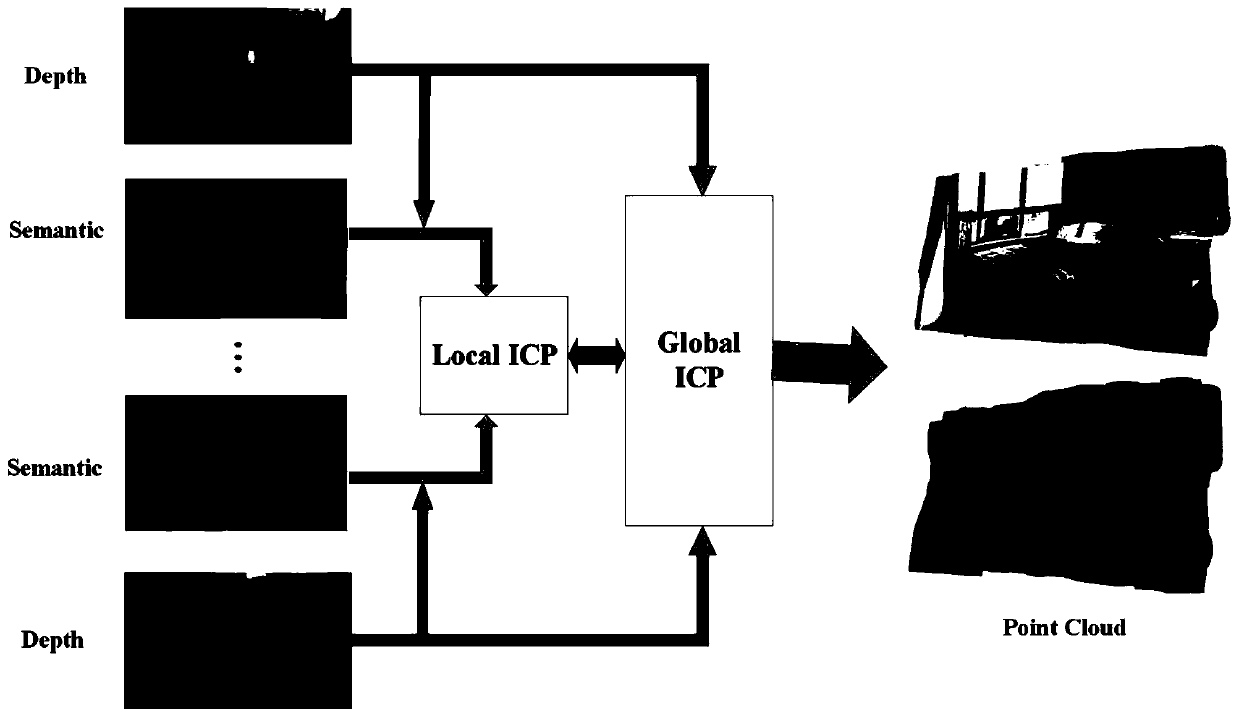

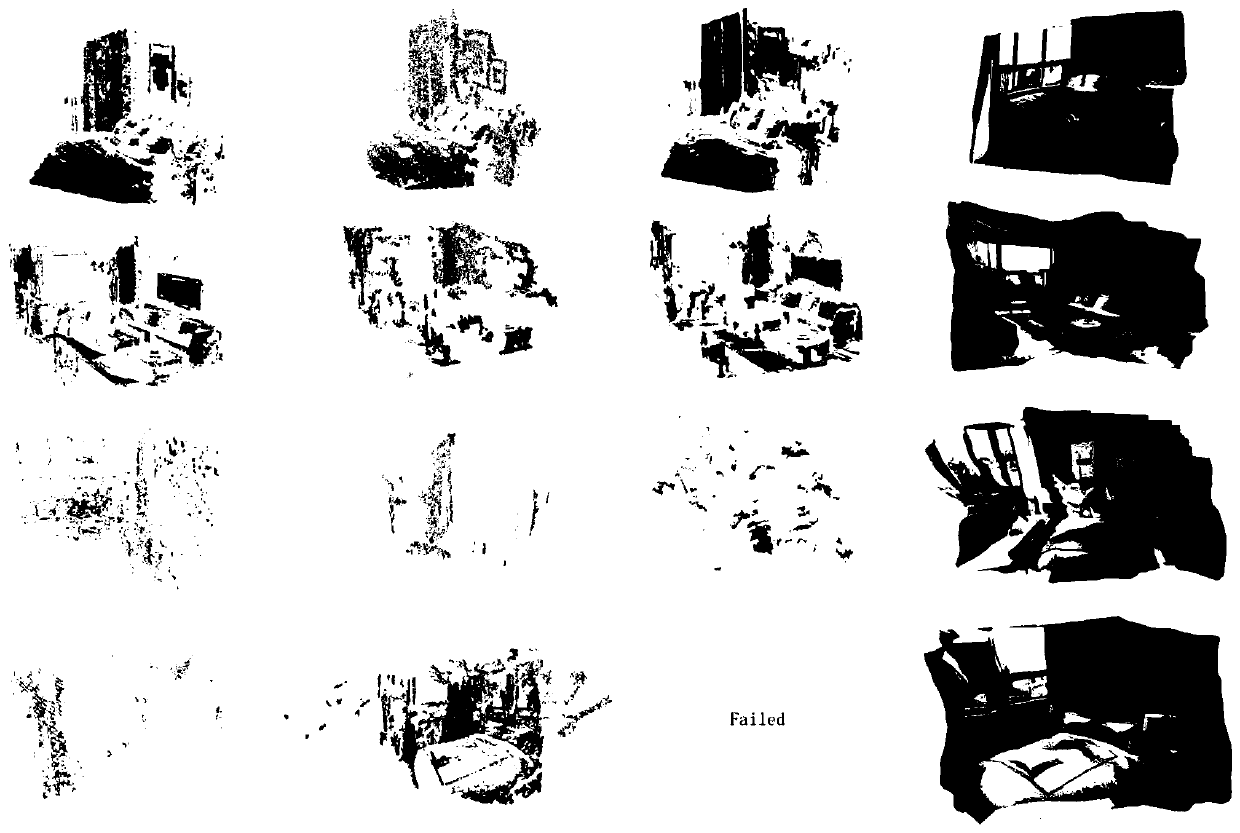

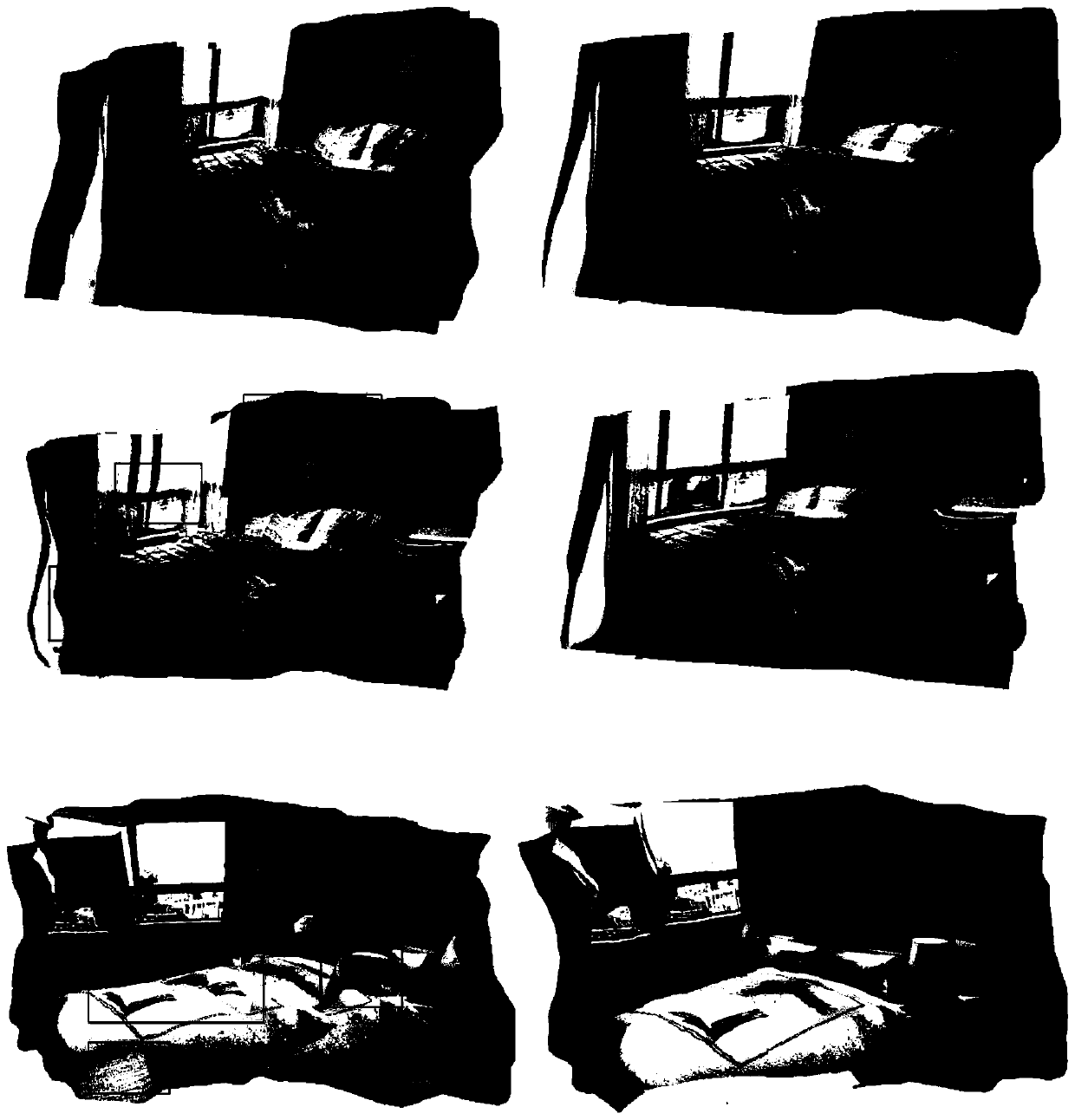

[0030] The invention reconstructs an indoor three-dimensional scene from color images taken at sparse viewing angles. First, existing methods are used to compute the depth map and semantic map corresponding to each color image; then the proposed global-local registration method is used to fuse the 3D point cloud models of the individual sparse views. Figure 1 shows the flow chart of indoor three-dimensional scene reconstruction from color images according to an embodiment of the present invention. The specific implementation is as follows:

[0031] 1) Take 3 to 5 images of an indoor scene from sparse viewing angles, making sure that each pair of images still overlaps to a certain degree. Compared with tracking-based methods, this gives the photographer more freedom of movement and is easier to operate.

[0032] 2) Use existing methods to estimate the depth map and semantic map corresponding to each color image.

[0033] 3) Fil...
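The description speaks of fusing per-view 3D point cloud models built from the estimated depth and semantic maps, but the visible text does not say how a depth map becomes a point cloud. The following is a minimal sketch of one common way to do this, assuming a pinhole camera model. The function name `backproject`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the toy data are all hypothetical and are not taken from the patent. It uses only the Python standard library so that it runs without extra dependencies.

```python
from typing import List, Tuple

# One labeled 3D point: (x, y, z, semantic_label). Hypothetical layout.
Point = Tuple[float, float, float, int]


def backproject(depth: List[List[float]],
                labels: List[List[int]],
                fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Lift every pixel with a valid depth into camera-space 3D coordinates.

    Assumes a pinhole camera: x = (u - cx) * z / fx, y = (v - cy) * z / fy.
    The semantic label of each pixel is carried along with its 3D point.
    """
    points: List[Point] = []
    for v, (depth_row, label_row) in enumerate(zip(depth, labels)):
        for u, (z, label) in enumerate(zip(depth_row, label_row)):
            if z <= 0.0:  # assumption: non-positive depth means "no estimate"
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, label))
    return points


# Toy 2x2 depth map (in metres) and semantic map (made-up class ids).
depth = [[2.0, 2.0],
         [0.0, 4.0]]
labels = [[1, 1],
          [0, 2]]
cloud = backproject(depth, labels, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

Each view would yield one such labeled point cloud in its own camera frame. Bringing the clouds into a common frame is the job of the registration step, which this sketch does not attempt to reproduce.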
