Real-time heart rate detection method based on double cameras

A dual-camera detection method applicable to pulse-rate/heart-rate measurement, diagnostic recording and measurement, and medical science. It addresses the difficulty of extracting weak facial signals, interference from ambient light and noise, and inconsistent or unstable image data, with the aim of increasing data stability, improving accuracy, and reducing signal distortion and jitter.

Embodiment

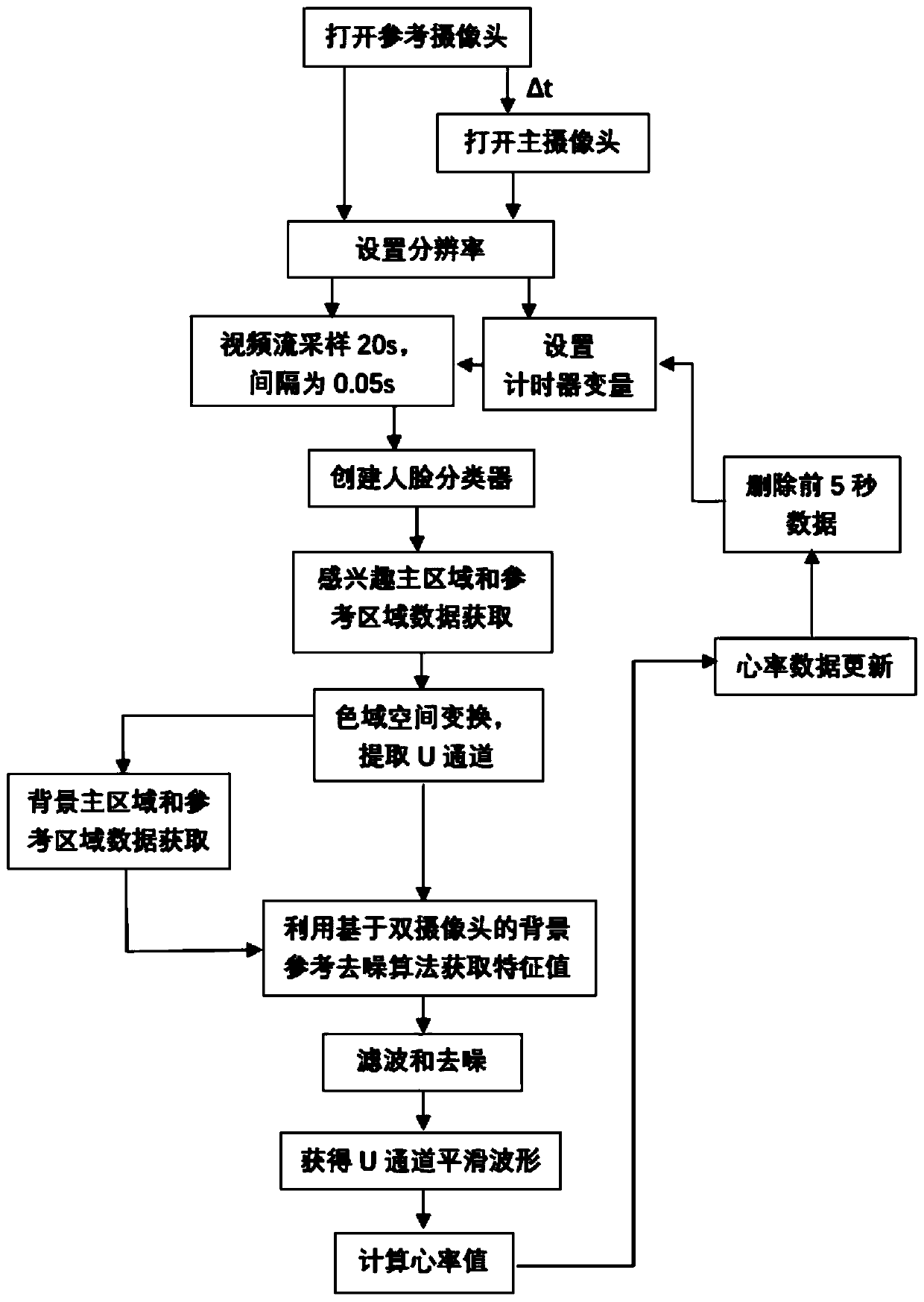

[0059] Referring to Figures 1-7, this embodiment includes the following steps:

[0060] S101: Turn on the main camera and the reference camera, and alternately shoot the main video and the reference video, each containing the human face, for 20 seconds at the same frame rate. A 30-year-old healthy woman is used as the example subject.

[0061] The steps are specifically:

[0062] Create the main camera object and the reference camera object, and set both to the same frame rate, fs = 20 Hz;

[0063] Set the frame counter frame_counter = 0;

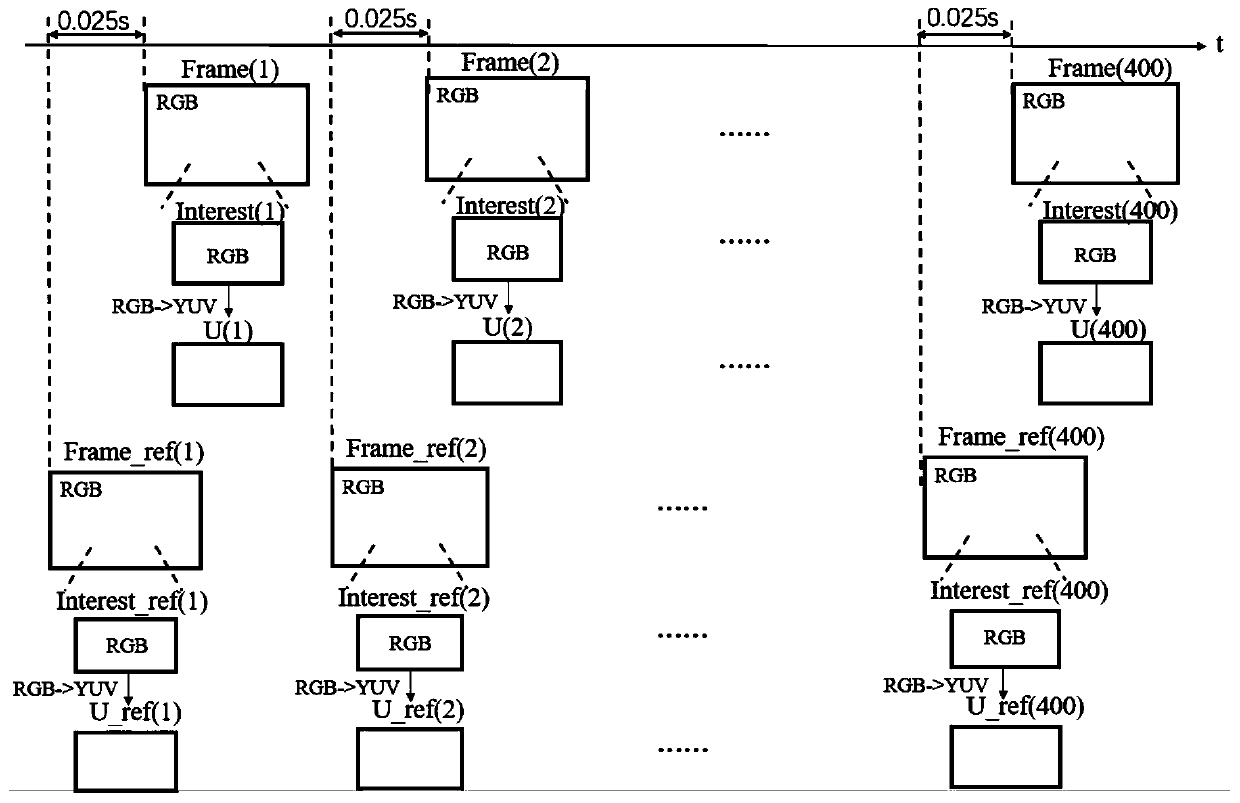

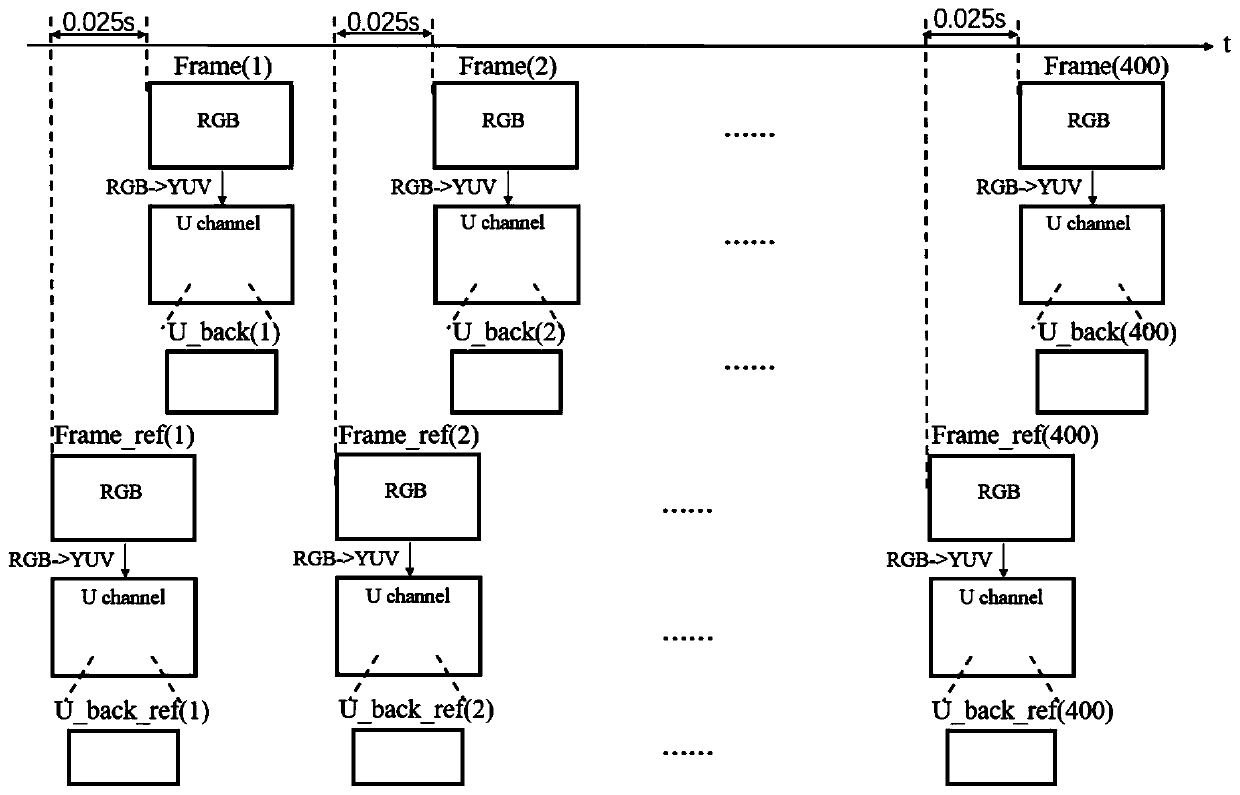

[0064] Turn on the reference camera first, then turn on the main camera after Δt = 0.025 s, and shoot video containing the human face at a frame rate of 20 Hz for 20 s. Record the current frame of the reference camera and the current frame of the main camera as Frame_ref(i) and Frame(i), respectively; when capture ends, frame_counter = K = 400.
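The timing in this step can be checked with a short sketch. It only computes the nominal capture timestamps and does not open any camera. The function names `capture_schedule` and `interleave` are hypothetical, and the merged view of the two streams is an illustration of the stated numbers, not a step described in the text. At fs = 20 Hz the frame period is 0.05 s, so an offset of Δt = 0.025 s places each main-camera frame halfway between two reference-camera frames.

```python
from fractions import Fraction


def capture_schedule(fs_hz=20, duration_s=20, offset_s=Fraction(25, 1000)):
    """Nominal timestamps for two cameras running at the same frame rate.

    The reference camera starts at t = 0; the main camera starts offset_s later.
    Fractions are used so the arithmetic is exact (no floating-point drift).
    """
    period = Fraction(1, fs_hz)          # 1/20 s = 0.05 s per frame
    k = fs_hz * duration_s               # frames per camera
    ref_times = [i * period for i in range(k)]
    main_times = [t + offset_s for t in ref_times]
    return k, ref_times, main_times


def interleave(ref_times, main_times):
    """Merge both timestamp lists into one time-ordered list (illustration only)."""
    tagged = [(t, "ref") for t in ref_times] + [(t, "main") for t in main_times]
    return sorted(tagged)


K, ref_t, main_t = capture_schedule()
merged = interleave(ref_t, main_t)

# Spacing between consecutive frames when the two streams are viewed together.
gaps = {later[0] - earlier[0] for earlier, later in zip(merged, merged[1:])}

print("frames per camera K =", K)
print("gap between consecutive frames (s) =", [float(g) for g in gaps])
```

Under these assumptions each camera records K = 20 × 20 = 400 frames, and the combined timeline has a frame every 0.025 s, alternating between the reference and main cameras.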

[0065] S102: Create two face detectors to obtain the ROI of the main camera and the ROI of the reference camera respectively, extract the U channel data based on t...
