Target detection method based on laser radar and image pre-fusion

A lidar-based target detection technology, applied in image analysis, image enhancement, image data processing, and related fields, with the effect of improving detection accuracy

Examples

Embodiment 1

[0045] In the first step, Zhang Zhengyou's calibration method is used to obtain the intrinsic parameters of the camera, and the extrinsic parameters between the lidar and the camera are obtained by manually extracting feature points and solving for the rotation and offset matrix;
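
Once the camera intrinsics and the lidar-to-camera extrinsics are known, a lidar point can be mapped to a pixel with the standard pinhole model. The following is a minimal sketch of that mapping in plain Python. The function name `project_lidar_point`, the axis conventions, and all numeric calibration values are illustrative assumptions rather than values from the patent, and lens distortion is ignored.

```python
from typing import Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Vector3 = Sequence[float]


def project_lidar_point(
    point: Vector3, K: Matrix3, R: Matrix3, t: Vector3
) -> Optional[Tuple[float, float]]:
    """Map a lidar-frame point to pixel coordinates with a pinhole model.

    Returns None if the point lies behind the camera. Lens distortion is ignored.
    """
    # Extrinsics: lidar frame -> camera frame, p_cam = R * p_lidar + t
    cam = [sum(R[r][c] * point[c] for c in range(3)) + t[r] for r in range(3)]
    if cam[2] <= 0.0:
        return None
    # Intrinsics: camera frame -> homogeneous pixel coordinates
    uvw = [sum(K[r][c] * cam[c] for c in range(3)) for r in range(3)]
    return uvw[0] / uvw[2], uvw[1] / uvw[2]


# Hypothetical calibration values, for illustration only.
K = [[700.0, 0.0, 640.0], [0.0, 700.0, 360.0], [0.0, 0.0, 1.0]]
# Assumed axes: lidar (x forward, y left, z up) -> camera (x right, y down, z forward)
R = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
t = [0.0, 0.0, 0.0]

pixel = project_lidar_point([10.0, 1.0, 0.5], K, R, t)
behind = project_lidar_point([-5.0, 0.0, 0.0], K, R, t)
```

With these toy values, a point 10 m ahead of the sensor lands inside a 1280×720 image, while a point behind the sensor is rejected.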

Embodiment 2

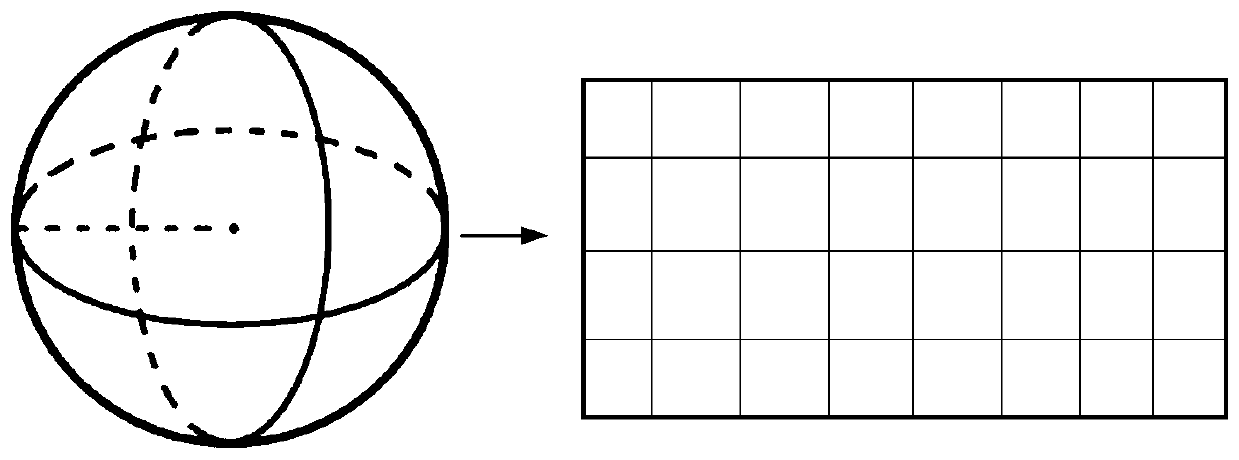

[0047] In the fourth step, the spherical projection of the labeled lidar point cloud dataset includes the following steps. First, the lidar point cloud dataset is projected onto a sphere, where φ is the angle between the point and the front of the car and θ is the angle between the point and the horizontal plane. The formulas for φ and θ are:

[0048]

[0049]

[0050] The obtained angles are then discretized to obtain a two-dimensional Cartesian coordinate system, where δθ and δφ are the resolutions of the angular discretization:

[0051]

[0052]
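
The formulas in [0048] to [0052] were images and did not survive extraction. For orientation only, a commonly used form of this kind of spherical projection is given below. It is an assumed convention, not the patent's verified text: the arcsine form of φ holds only for points in front of the sensor (x > 0), and the assignment of θ to the row index i and φ to the column index j is likewise an assumption.

$$
\theta = \arcsin\frac{z}{\sqrt{x^2 + y^2 + z^2}}, \qquad
\phi = \arcsin\frac{y}{\sqrt{x^2 + y^2}}
$$

$$
i = \left\lfloor \frac{\theta}{\delta\theta} \right\rfloor, \qquad
j = \left\lfloor \frac{\phi}{\delta\phi} \right\rfloor
$$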

[0053] Extract five features for each point in the lidar point cloud dataset, (x, y, z, intensity, range), and place them at grid position (i, j), where (x, y, z) are the point coordinates, intensity is the lidar reflection intensity, and range is the distance from the point to the origin

[0054]

[0055] In the height direction, the point cloud is sampled according to the lidar beams, and 512 equally spaced samples are taken in the horizo...
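
The projection and feature extraction described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the width of 512 comes from the text, while the 64 rows, the vertical and horizontal fields of view, the use of `atan2` for φ, and the rule of keeping the nearest point per cell are all assumptions chosen for the example.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float, float]  # (x, y, z, intensity)
Cell = Optional[Tuple[float, float, float, float, float]]  # (x, y, z, intensity, range)


def spherical_feature_grid(
    points: Sequence[Point],
    height: int = 64,             # assumed number of lidar beams (rows)
    width: int = 512,             # horizontal samples, as stated in the text
    fov_up_deg: float = 2.0,      # assumed upper bound of the vertical field of view
    fov_down_deg: float = -24.8,  # assumed lower bound of the vertical field of view
    h_fov_deg: float = 90.0,      # assumed horizontal field of view, centred on the front
) -> List[List[Cell]]:
    """Project points onto a height x width grid holding five features per cell."""
    grid: List[List[Cell]] = [[None] * width for _ in range(height)]
    d_theta = math.radians(fov_up_deg - fov_down_deg) / height  # vertical resolution
    d_phi = math.radians(h_fov_deg) / width                     # horizontal resolution
    for x, y, z, intensity in points:
        rng = math.sqrt(x * x + y * y + z * z)
        if rng == 0.0:
            continue
        theta = math.asin(z / rng)  # angle to the horizontal plane
        phi = math.atan2(y, x)      # angle to the front of the car (x axis)
        # Row 0 is the top of the field of view; the column index grows with phi.
        i = int(math.floor((math.radians(fov_up_deg) - theta) / d_theta))
        j = int(math.floor((phi + math.radians(h_fov_deg) / 2.0) / d_phi))
        if 0 <= i < height and 0 <= j < width:
            current = grid[i][j]
            # Assumed tie-break: keep the nearest point when several share a cell.
            if current is None or rng < current[4]:
                grid[i][j] = (x, y, z, intensity, rng)
    return grid


# Two toy points along the same ray; only the nearer one should be kept.
grid = spherical_feature_grid([(20.0, 2.0, 0.0, 0.9), (10.0, 1.0, 0.0, 0.5)])
filled = [(i, j) for i, row in enumerate(grid) for j, cell in enumerate(row) if cell]
```

The result is a dense image-like tensor with five channels per cell, which is the form a 2D convolutional network can consume directly.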

Embodiment 3

[0059] As a further preference of the present invention, the training step of the neural network in the aforementioned fourth step specifically includes the following steps:

[0060] Step 41: Extract two sub-datasets, one from the lidar point cloud dataset and one from the image dataset, in which the targets are clearly identifiable;

[0061] Step 42: Use the two sub-datasets to train the image neural network and the point cloud neural network separately, so that the convolutional layers fully learn the image obstacle features and the point cloud obstacle features, yielding the convolutional layer parameters of the single-modality detection networks;

[0062] Step 43: Use the convolutional layers of the single-modality detection networks trained in Step 42, with their parameters no longer trained, as feature extractors for the lidar point cloud data and the image data, and append a 1×1 convolution block after them. Then train on the full datasets. During training, keep the parameters of the previously trained singl...
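
To make the role of the appended 1×1 convolution concrete, the sketch below applies one to two toy feature maps in plain Python. A 1×1 convolution mixes channels independently at every pixel, so it can learn how to weight lidar features against image features. The excerpt does not say how the two feature maps are combined before the 1×1 block; channel concatenation at equal spatial size is an assumption here, and the helper names `concat_channels` and `conv1x1` are hypothetical.

```python
from typing import List, Sequence

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def concat_channels(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Stack two feature maps of equal spatial size along the channel axis."""
    return list(a) + list(b)


def conv1x1(
    features: FeatureMap, weights: Sequence[Sequence[float]], bias: Sequence[float]
) -> FeatureMap:
    """1x1 convolution: at every pixel, mix input channels with a weight matrix.

    weights[o][c] is the weight from input channel c to output channel o.
    """
    rows, cols = len(features[0]), len(features[0][0])
    return [
        [
            [
                sum(w * features[c][r][q] for c, w in enumerate(weights[o])) + bias[o]
                for q in range(cols)
            ]
            for r in range(rows)
        ]
        for o in range(len(weights))
    ]


# Toy outputs of the two frozen single-modality backbones (1 channel, 1x2 pixels each).
lidar_features = [[[1.0, 2.0]]]
image_features = [[[3.0, 4.0]]]

# In the second training stage only these fusion parameters would be updated;
# the backbone parameters stay fixed.
fusion_weights = [[0.5, 0.5]]
fusion_bias = [0.0]

fused = conv1x1(concat_channels(lidar_features, image_features), fusion_weights, fusion_bias)
```

In a deep learning framework, the same idea would typically be expressed by disabling gradient updates for the pretrained convolutional layers and optimizing only the fusion block.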
