Aircraft monocular visual scale estimation method and device, aircraft navigation system and aircraft

A technology of monocular vision and scale estimation, which is applied in the field of aircraft and can solve problems such as inability to achieve


Embodiment Construction

[0043] The technical solution of the present disclosure will be described in further detail below with reference to the drawings and embodiments.

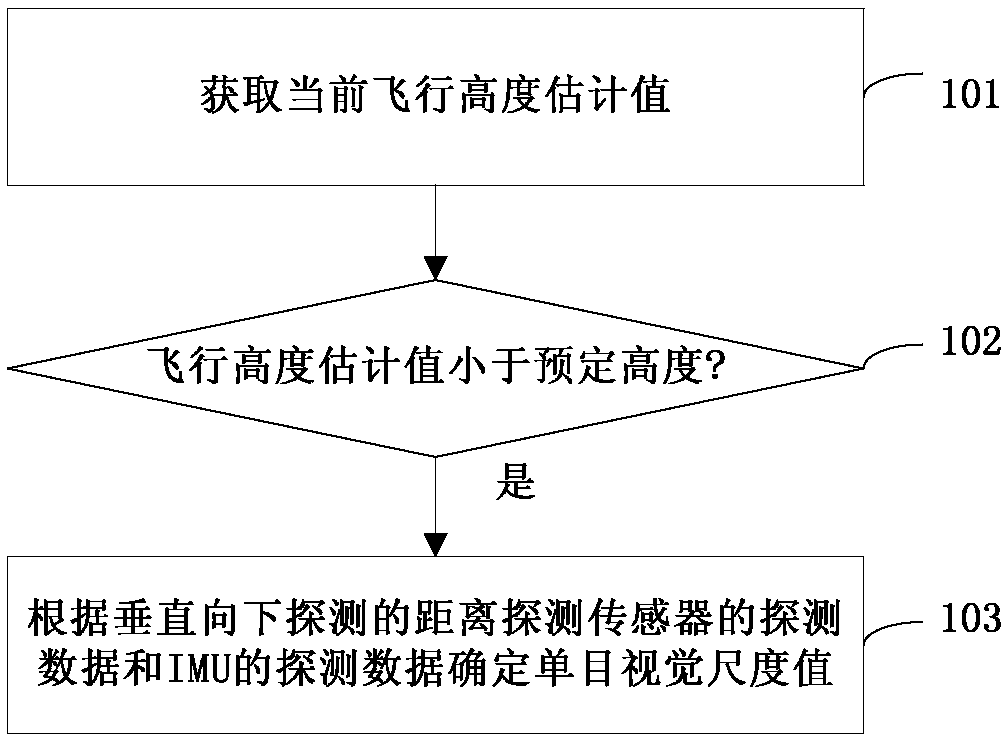

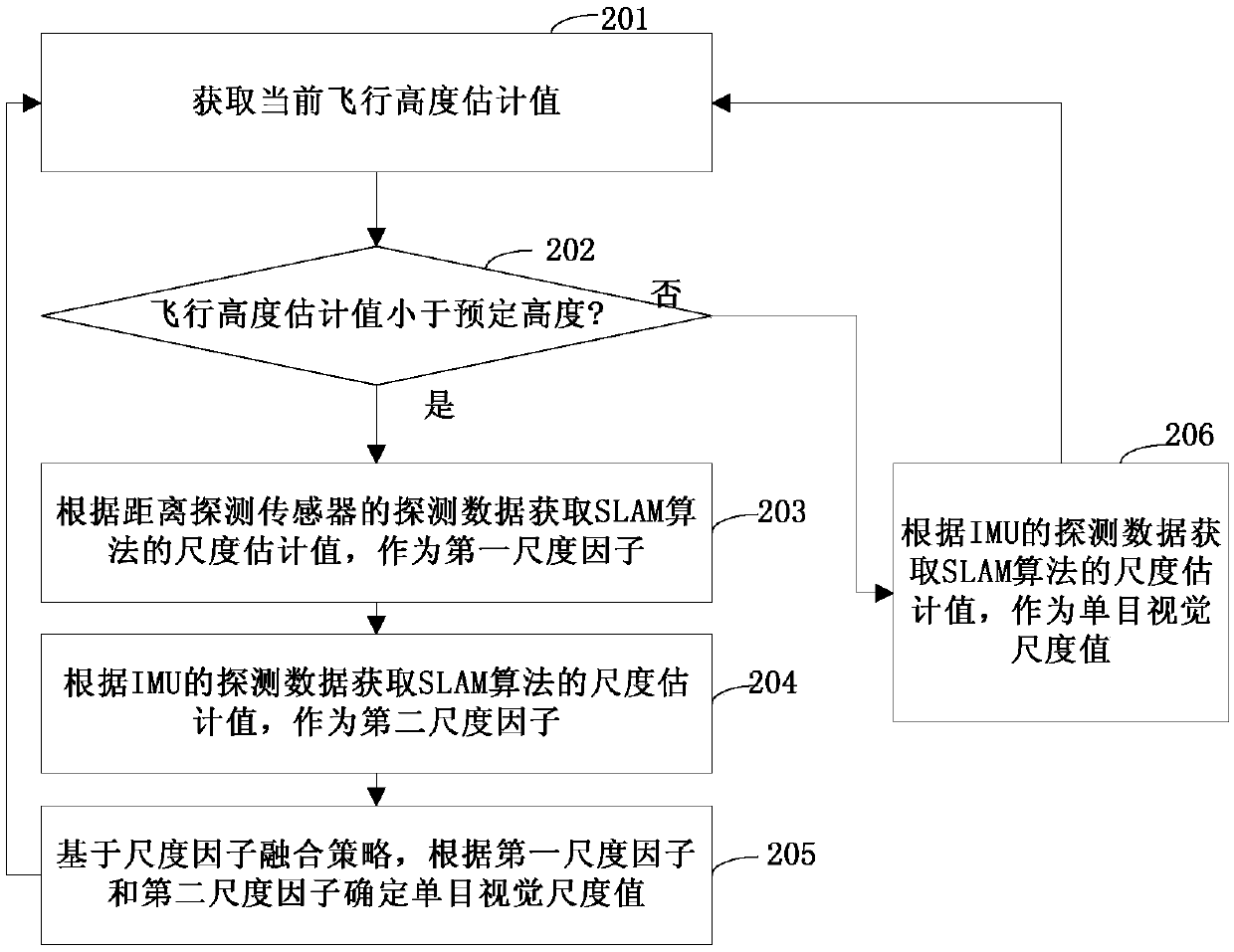

[0044] Figure 1 shows a flow chart of an embodiment of the aircraft monocular vision scale estimation method of the present disclosure.

[0045] In step 101, an estimate of the current flight height is acquired. In an embodiment, the flight height estimate may be obtained from a distance detection sensor mounted on the aircraft with its detection direction pointing vertically downward. When the flight height exceeds the measurement range of the sensor and no detection is possible, the flight height estimate may default to a predetermined threshold value, which is higher than the predetermined height used in step 102.
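The fallback behaviour of step 101 can be illustrated with a minimal sketch. It is not the disclosed implementation: the function name, parameter names, and the convention that a failed detection is reported as `None` (or a non-finite or out-of-range value) are all assumptions made for illustration.

```python
import math
from typing import Optional


def estimate_flight_height(
    sensor_reading_m: Optional[float],
    max_range_m: float,
    default_threshold_m: float,
    predetermined_height_m: float,
) -> float:
    """Return a flight height estimate in metres (hypothetical helper).

    Assumption: the downward-facing distance sensor reports None, a
    non-finite value, or a value beyond max_range_m when it cannot
    measure the height.
    """
    # The text requires the default threshold to be higher than the
    # predetermined height that step 102 compares against.
    if default_threshold_m <= predetermined_height_m:
        raise ValueError("default threshold must exceed the predetermined height")

    sensor_failed = (
        sensor_reading_m is None
        or not math.isfinite(sensor_reading_m)
        or sensor_reading_m > max_range_m
    )
    if sensor_failed:
        # Out of range: fall back to the predetermined threshold value.
        return default_threshold_m
    return sensor_reading_m
```

Because the default threshold exceeds the predetermined height, an out-of-range reading always falls on the high side of the comparison in step 102.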

[0046] In step 102, the flight height estimate is compared with the predetermined height. Step 103 is executed when the estimated flight height is...
