Very low precision floating point representation for deep learning acceleration

A low-precision, special-purpose circuit technique used to optimize the computation involved in training neural networks

Embodiment Construction

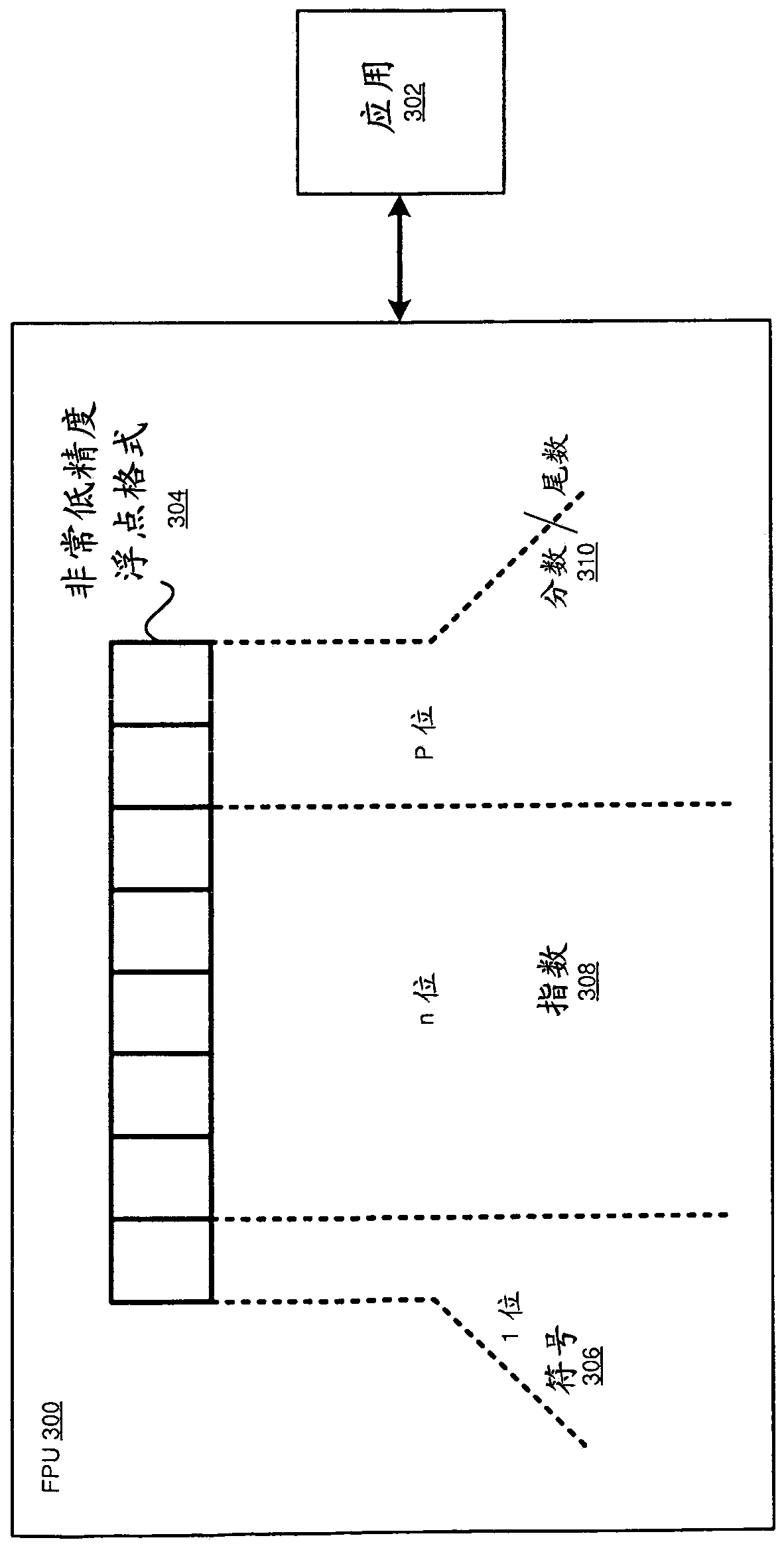

[0019] An FPU has a bit width. The bit width is the size, measured as the number of binary bits, used to represent numbers in a floating-point format (hereinafter referred to as "format" or "floating-point format"). One or more organizations, such as the Institute of Electrical and Electronics Engineers (IEEE), have created standards for floating-point formats. The formats currently in use provide standard methods for representing numbers in 16-bit, 32-bit, 64-bit, and 128-bit formats.
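
To make "bit width" concrete, the sketch below shows how the standard 16-, 32-, 64-, and 128-bit IEEE 754 binary formats divide their bits into sign, exponent, and fraction fields, and prints the actual bit pattern of one number in the widths Python's `struct` module supports. It is a minimal illustration, not part of the described embodiments: the helper names `IEEE754_FORMATS`, `encode_bits`, and `split_fields` are hypothetical, and because `struct` has no 128-bit float code, binary128 appears only in the field-width table.

```python
import struct

# IEEE 754 binary interchange formats: total width -> (sign, exponent, fraction) bits.
IEEE754_FORMATS = {
    16: (1, 5, 10),
    32: (1, 8, 23),
    64: (1, 11, 52),
    128: (1, 15, 112),
}

# Big-endian struct codes: 'e' = binary16, 'f' = binary32, 'd' = binary64.
# struct has no code for binary128, so it cannot be encoded here.
STRUCT_CODES = {16: ">e", 32: ">f", 64: ">d"}


def encode_bits(x: float, width: int) -> str:
    """Return the bit string of x encoded in the given IEEE 754 width (MSB first)."""
    raw = struct.pack(STRUCT_CODES[width], x)
    return "".join(f"{byte:08b}" for byte in raw)


def split_fields(bits: str, width: int) -> tuple:
    """Split a bit string into its (sign, exponent, fraction) fields."""
    sign_w, exp_w, frac_w = IEEE754_FORMATS[width]
    assert len(bits) == sign_w + exp_w + frac_w == width
    return bits[:sign_w], bits[sign_w:sign_w + exp_w], bits[sign_w + exp_w:]


if __name__ == "__main__":
    for width in (16, 32, 64):
        s, e, f = split_fields(encode_bits(1.5, width), width)
        print(f"{width}-bit: sign={s} exponent={e} fraction={f}")
```

The same value, 1.5, costs 16, 32, or 64 bits depending on the format. A wider format spends the extra bits on a wider exponent range and a longer fraction, and hardware that operates on it must handle correspondingly wider fields.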

[0020] The illustrative embodiments recognize that the larger the bit width, the more complex and larger the FPU becomes, both in the physical size of the fabricated semiconductor circuit and in the amount of power it consumes. In addition, the larger the FPU, the more time it takes to generate the computed output.

[0021] Dedicated computing circuits, especially FPUs, are a recognized field of technological endeavor. The current state of the technology in this field of endeavor has certain shortcom...
