Facial expression action unit adversarial synthesis method based on local attention model

A facial expression synthesis technique based on an attention model, applied in the fields of computer vision and affective computing. It addresses problems in existing approaches such as loss of detail, complexity, and lack of realism in generated images, with the aim of improving accuracy.

Embodiment Construction

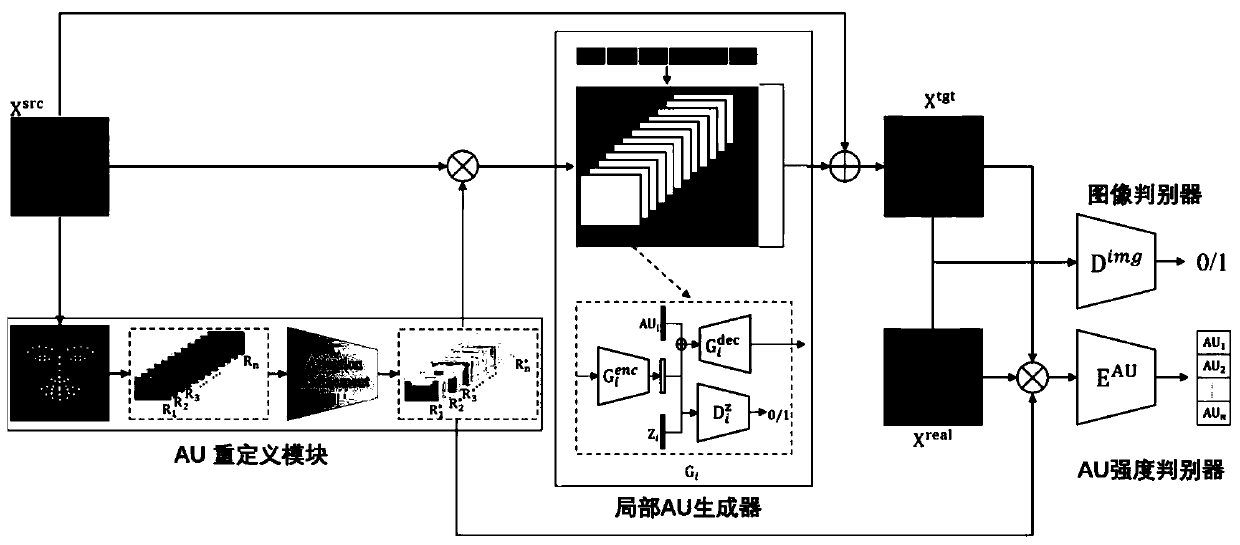

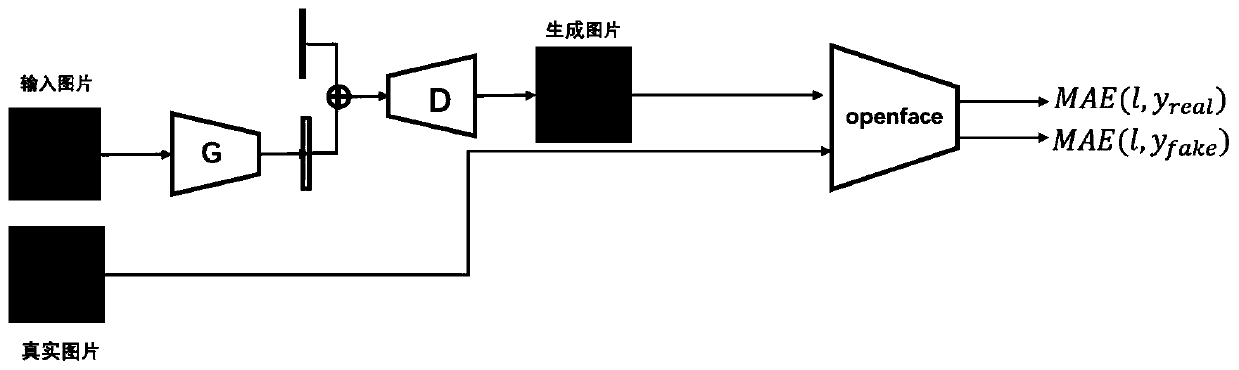

[0036] The present invention uses a local attention model to extract features from the facial action unit (AU) regions, then performs adversarial generation on those local regions, and finally estimates AU intensity on the augmented data to assess the quality of the generated images.
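The feature extraction step can be illustrated with a minimal sketch of attention-weighted pooling over a local face region. This is not the patented model: the excerpt does not specify the attention architecture, so the spatial softmax, the function names `spatial_softmax` and `attend_local_region`, the feature-map shape, and the hand-placed AU region are all assumptions made for illustration.

```python
import numpy as np

def spatial_softmax(scores):
    """Turn an (H, W) score map into attention weights that sum to 1."""
    shifted = scores - scores.max()  # subtract the max for numerical stability
    weights = np.exp(shifted)
    return weights / weights.sum()

def attend_local_region(feature_map, scores):
    """Pool a (C, H, W) feature map into a (C,) vector using attention weights."""
    attention = spatial_softmax(scores)
    # Weighted sum over the spatial axes; high-attention locations dominate.
    return np.tensordot(feature_map, attention, axes=([1, 2], [0, 1]))

rng = np.random.default_rng(0)
feature_map = rng.normal(size=(8, 16, 16))  # hypothetical CNN features of a face
scores = np.full((16, 16), -10.0)           # low score everywhere ...
scores[4:8, 5:11] = 5.0                     # ... except a hypothetical AU region
local_feature = attend_local_region(feature_map, scores)
```

Because the scores are high only inside the chosen rectangle, `local_feature` is close to the plain average of the feature map over that rectangle. In a trained model the scores would be predicted from the image rather than set by hand.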

[0037] The specific steps of the present invention are as follows:

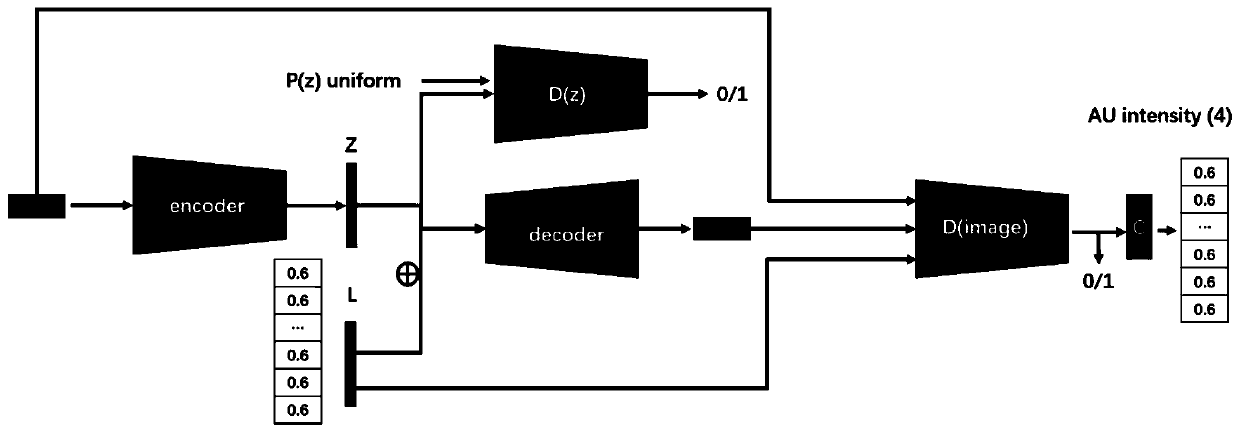

[0038] First, the local attention model extracts features from local regions of the face. A conditional adversarial autoencoder then learns and models the AU feature distribution extracted by the local attention model, forming a face feature vector from which the original AU intensity has been removed. Next, label information for a specified AU intensity is added to the feature layer of the autoencoder's generator to produce an image with the corresponding AU intensity, thereby changing the AU intensity and establishing facial action units with different comb...
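The conditioning step described in this paragraph can be sketched as follows, under stated assumptions. The excerpt gives no layer sizes, label encoding, or training details, so the single linear layers, the random untrained weights, the one-hot encoding with six intensity levels, and the names `encode`, `generate`, and `one_hot` are all hypothetical. The adversarial training that would push the latent code to be free of the original AU intensity is omitted; only the data flow is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
FEATURE_DIM, LATENT_DIM, NUM_LEVELS, IMAGE_DIM = 32, 8, 6, 64  # assumed sizes

# Untrained, randomly initialised weights stand in for learned parameters.
W_enc = rng.normal(scale=0.1, size=(LATENT_DIM, FEATURE_DIM))
W_dec = rng.normal(scale=0.1, size=(IMAGE_DIM, LATENT_DIM + NUM_LEVELS))

def one_hot(level, num_levels=NUM_LEVELS):
    """Encode a discrete AU intensity level as a one-hot label vector."""
    label = np.zeros(num_levels)
    label[level] = 1.0
    return label

def encode(au_features):
    """Map attended AU features to a latent face feature vector."""
    return np.tanh(W_enc @ au_features)

def generate(latent, au_level):
    """Decode the latent vector concatenated with the requested AU intensity label."""
    conditioned = np.concatenate([latent, one_hot(au_level)])
    return np.tanh(W_dec @ conditioned)

au_features = rng.normal(size=FEATURE_DIM)  # stand-in for local attention output
z = encode(au_features)
weak = generate(z, au_level=1)     # same face code, low requested intensity
strong = generate(z, au_level=5)   # same face code, high requested intensity
```

The latent vector `z` is held fixed while only the label changes, so any difference between `weak` and `strong` comes from the intensity label alone. That is the mechanism the paragraph relies on to change AU intensity for a given face.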
