Task model training method, device and equipment

A task model training technology in the field of information processing. It addresses problems such as limited functionality, inability to meet users' individual needs, and insufficient flexibility, and aims to enrich functions, improve user experience, and increase flexibility.


Examples

Embodiment 1

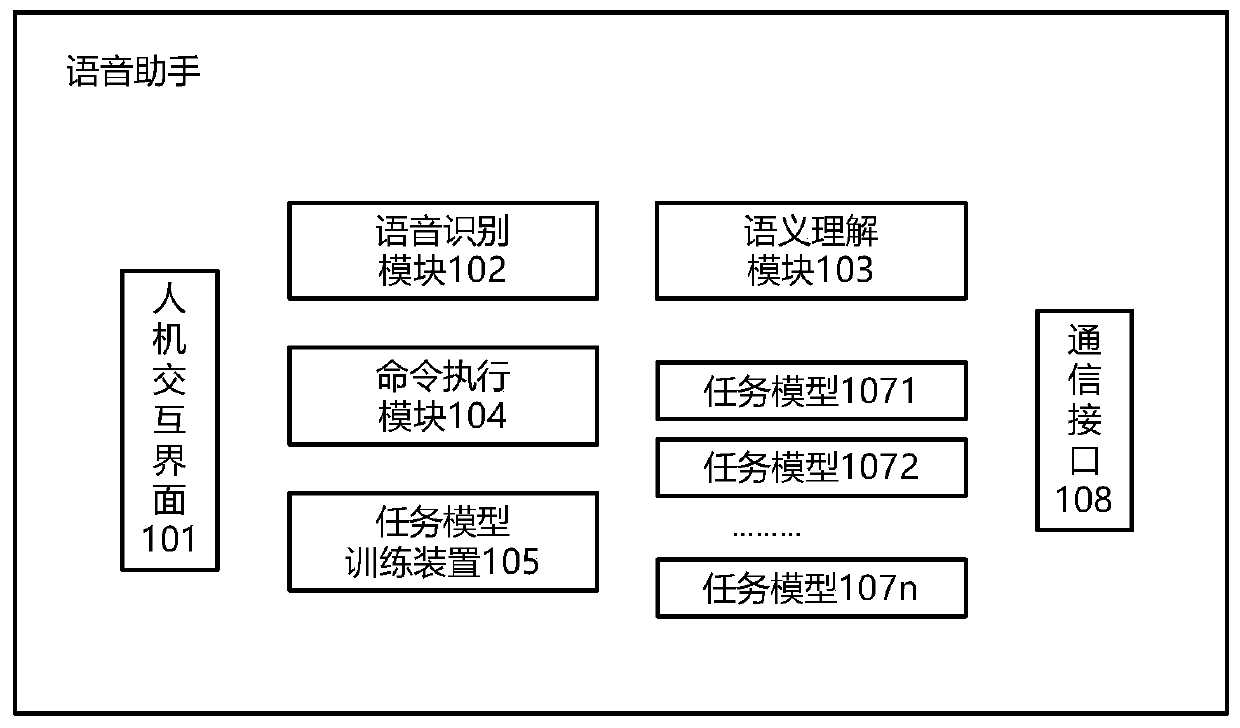

[0041] In this embodiment, the function of each module in the voice assistant of the present invention is described using a user's travel needs as an example. The specific process described below only illustrates how the functions of each module in the voice assistant of the present invention may be implemented, and should not be regarded as a limitation on those modules.

[0042] The user sends a voice command to the voice assistant through its man-machine interface 101: "Please suggest a three-day trip to Hangzhou during the Qingming Festival." Depending on a user's habits and working environment, the instruction may also be a text, gesture, or image instruction. If the user sends a voice command, the man-machine interface 101 of the voice assistant forwards the received voice command to the voice recognition module 102 for voice recognition, and sends the text information that is ...
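The routing described in [0042] can be sketched as a small dispatcher: voice commands pass through a speech recognizer, while text instructions are used as-is. This is a minimal sketch under stated assumptions; `Instruction`, `route_instruction`, and the `recognize_speech` callback are hypothetical names, and because the source does not describe how gesture or image instructions are handled, the sketch deliberately leaves them unimplemented.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Instruction:
    # Modalities named in [0042]: "voice", "text", "gesture", "image".
    modality: str
    # Raw audio bytes for voice, a string for text, etc. (hypothetical encoding).
    payload: object


def route_instruction(instr: Instruction, recognize_speech: Callable[[bytes], str]) -> str:
    """Hypothetical front-end routing: return text for downstream semantic understanding."""
    if instr.modality == "voice":
        # Voice commands go from the man-machine interface (101) to the
        # voice recognition module (102), which returns text.
        return recognize_speech(instr.payload)
    if instr.modality == "text":
        # Text instructions need no recognition step.
        return str(instr.payload)
    # Gesture and image handling is not described in the available text.
    raise NotImplementedError(f"no handler sketched for {instr.modality!r}")
```

Passing the recognizer in as a callable keeps the sketch independent of any particular speech recognition engine; a stub such as `lambda audio: "three-day Hangzhou trip"` is enough to exercise it.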

Embodiment 2

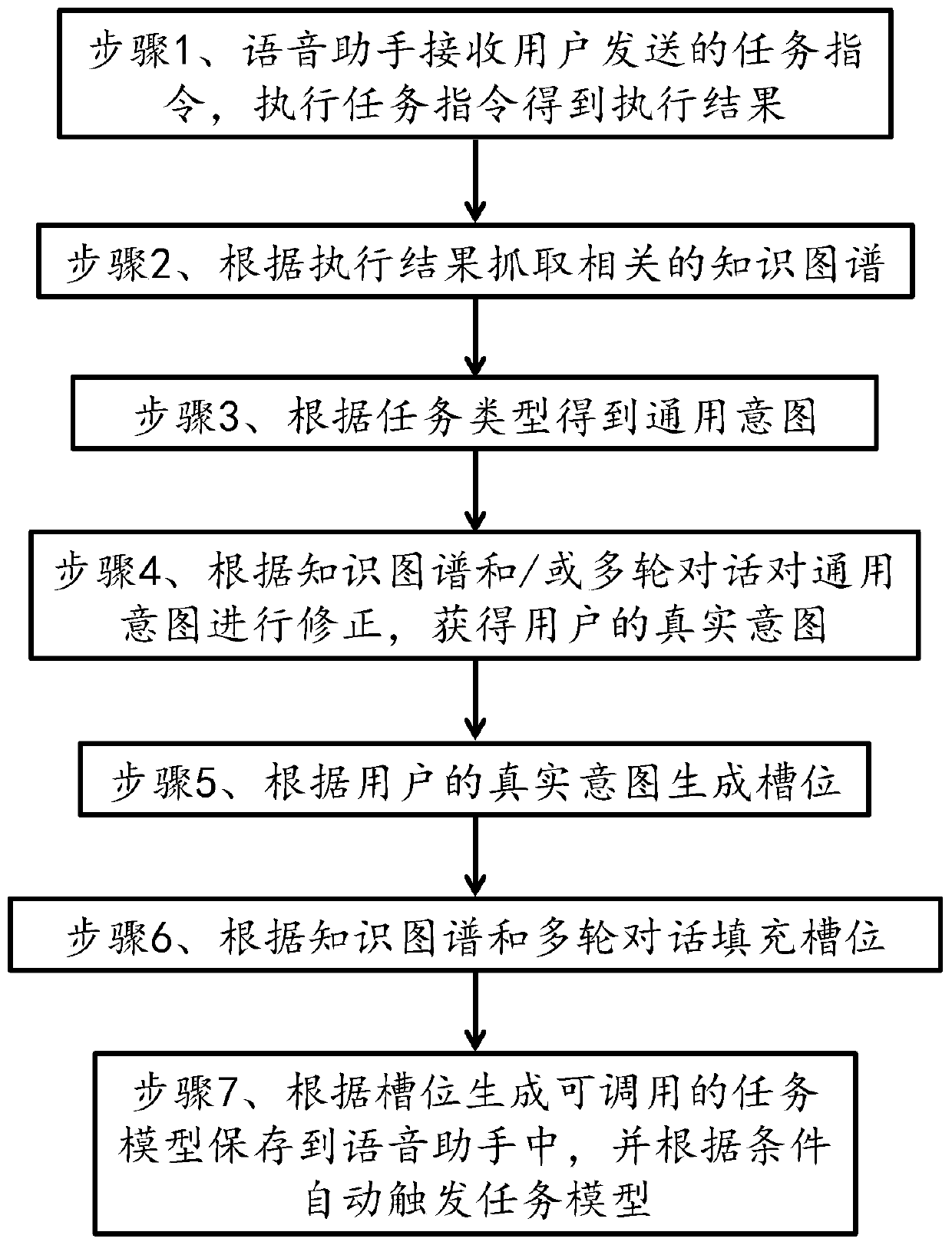

[0048] Referring to Figure 2, Figure 2 is a flowchart of the task model training method of a voice assistant. The task model training method for a voice assistant of the present invention comprises the following steps:

[0049] Step 1. The voice assistant receives the task instruction sent by the user, executes the task instruction and obtains the execution result;

[0050] In this step, the voice assistant receives the voice command from the user through the human-computer interaction interface, recognizes the voice command and performs semantic understanding on it, generates an executable task command according to the semantic understanding result, and executes the task command to obtain the execution result. Preferably, the voice assistant further screens the recommended execution results according to parameters such as the user's personal data and historical behavior.
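The optional screening in [0050] ranks execution results using the user's personal data and historical behavior. The source does not specify the scoring, so the following is only an illustrative sketch. It assumes, hypothetically, that each result carries tags, that "personal data" is a set of preferred tags, and that "historical behavior" is a list of tags from past choices. `Candidate`, `UserProfile`, `screen_results`, and the weight of 2 are all invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Candidate:
    name: str
    tags: set[str]


@dataclass
class UserProfile:
    # Hypothetical stand-ins for "personal data" and "historical behavior".
    preferred_tags: set[str] = field(default_factory=set)
    history: list[str] = field(default_factory=list)  # tags of past choices


def screen_results(candidates: list[Candidate], user: UserProfile, top_k: int = 3) -> list[Candidate]:
    """Rank execution results by an illustrative affinity score and keep the top_k."""
    history_counts = Counter(user.history)

    def score(c: Candidate) -> int:
        # One point per historical selection of a matching tag,
        # plus a fixed bonus of 2 per explicitly preferred tag.
        return sum(history_counts[t] for t in c.tags) + 2 * len(c.tags & user.preferred_tags)

    # Python's sort is stable even with reverse=True, so ties keep the
    # executor's original order.
    return sorted(candidates, key=score, reverse=True)[:top_k]
```

For the Hangzhou example, a user whose history leans toward scenery and who prefers tea-related activities would see a tea village trip ranked above a plain sightseeing walk.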

[0051] Step 2. Capture relevant knowledge graphs according to the execution result;

[0052] In this step, th...
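The detailed capture procedure in [0052] is truncated in this copy. One plausible reading of "capture relevant knowledge graphs according to the execution results" can be illustrated with a sketch, though it is not the patent's stated method. It assumes, hypothetically, that the knowledge graph is stored as (head, relation, tail) triples and that "relevant" means within a fixed number of hops of entities mentioned in the execution result. `capture_subgraph` and `max_hops` are illustrative names.

```python
from collections import deque

Triple = tuple[str, str, str]  # (head, relation, tail)


def capture_subgraph(graph: list[Triple], seeds: set[str], max_hops: int = 1) -> list[Triple]:
    """Return triples reachable within max_hops of the seed entities (breadth-first)."""
    # Undirected index from each entity to the triples it participates in.
    index: dict[str, list[Triple]] = {}
    for h, r, t in graph:
        index.setdefault(h, []).append((h, r, t))
        index.setdefault(t, []).append((h, r, t))

    visited = set(seeds)
    frontier = deque((s, 0) for s in seeds)
    captured: list[Triple] = []
    seen_triples: set[Triple] = set()
    while frontier:
        entity, depth = frontier.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for triple in index.get(entity, []):
            if triple not in seen_triples:
                seen_triples.add(triple)
                captured.append(triple)
            h, _, t = triple
            neighbor = t if h == entity else h
            if neighbor not in visited:
                visited.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return captured
```

Seeding with an entity from the execution result, such as "West Lake", and `max_hops=2` would pull in facts about Hangzhou and its neighbors while ignoring unrelated parts of the graph.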

Embodiment 3

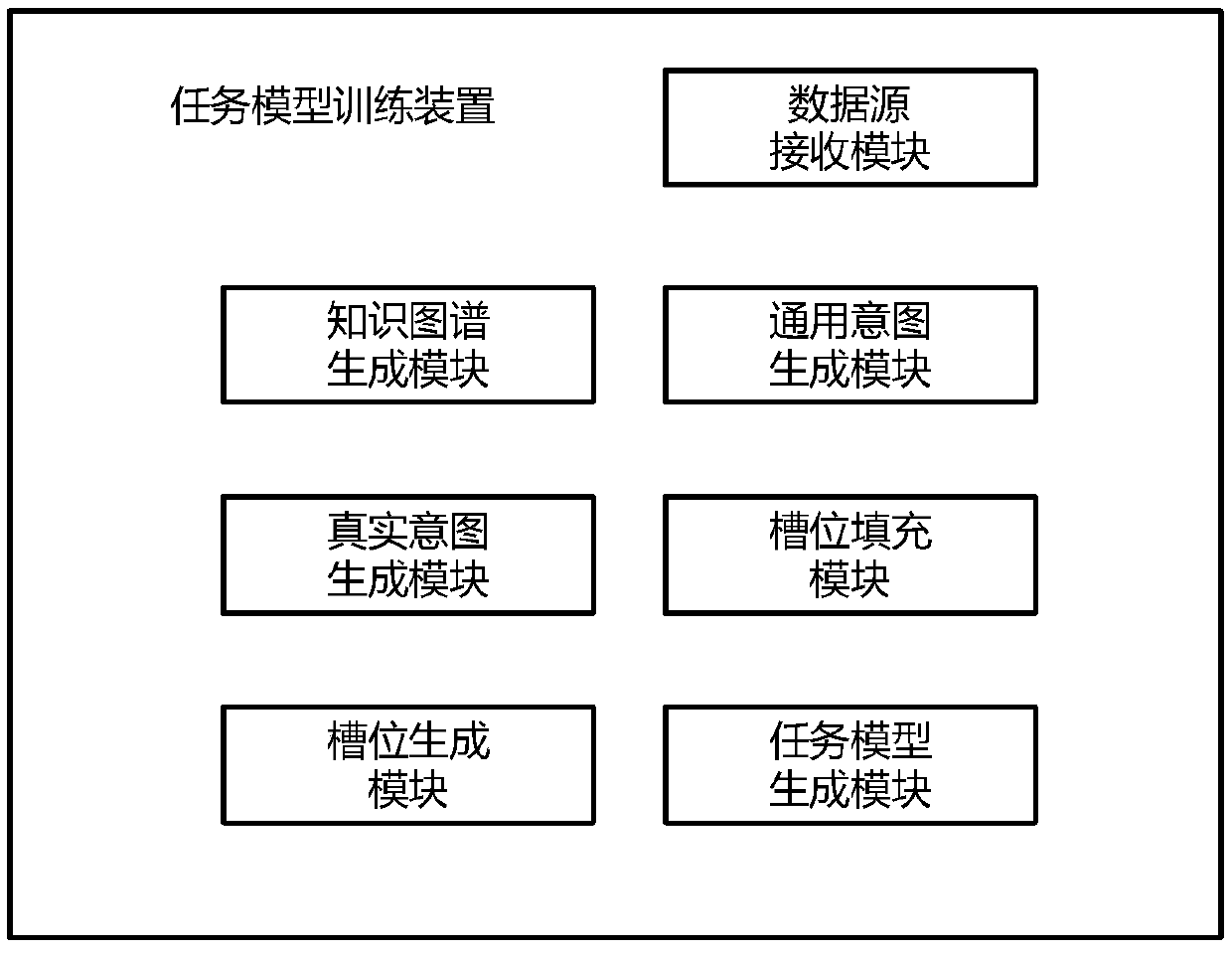

[0086] Referring to Figure 3, Figure 3 shows the structure of the task model training device in the voice assistant of this embodiment, which can implement the task model training method of Embodiment 2. The task model training device includes a data source receiving module, which receives the data source selected by the user. The data source can either be input directly to the voice assistant by the user through the human-computer interaction interface and then forwarded by the voice assistant to the data source receiving module, or be selected by the user from the execution result that the voice assistant obtains by executing the user's task instruction.
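The two ways a data source can reach the data source receiving module in [0086] can be captured in one entry point. This sketch is illustrative only: `receive_data_source` and its parameters are hypothetical, and the choice to let direct input take precedence over a selection is an assumption the source does not state.

```python
from typing import Optional, Sequence


def receive_data_source(
    direct_input: Optional[str] = None,
    execution_results: Sequence[str] = (),
    selected_index: Optional[int] = None,
) -> str:
    """Hypothetical data source receiving module covering the two paths in [0086]."""
    if direct_input is not None:
        # Path 1: the user enters the data source directly through the
        # human-computer interaction interface; the assistant forwards it unchanged.
        return direct_input
    if selected_index is not None and 0 <= selected_index < len(execution_results):
        # Path 2: the user picks one item from a previous execution result.
        return execution_results[selected_index]
    raise ValueError("no data source supplied or selection out of range")
```

Raising on a missing or out-of-range selection, rather than returning an empty string, keeps an invalid data source from silently reaching the knowledge graph step.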

[0087] The knowledge map generation module is used to capture relevant knowledge maps according to the selected data source;

[0088] A general intention generation module is used to obtain general intentions according to task types;
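Obtaining general intentions from a task type, as in [0088], can be pictured as a lookup from task type to a predefined set of intentions. The table below is entirely hypothetical: its task types and intention names are invented for illustration and do not come from the patent, which does not list them in the available text.

```python
# Hypothetical task-type -> general-intention table; the entries are illustrative only.
GENERAL_INTENTS: dict[str, tuple[str, ...]] = {
    "travel": ("query_weather", "book_transport", "book_hotel", "recommend_attractions"),
    "dining": ("recommend_restaurant", "book_table"),
}


def general_intentions(task_type: str, table: dict[str, tuple[str, ...]] = GENERAL_INTENTS) -> list[str]:
    """Return the general intentions for a task type, or an empty list if it is unknown."""
    # Normalize the key so "Travel" and " travel " map to the same entry.
    return list(table.get(task_type.strip().lower(), ()))
```

Storing the intentions as tuples and returning a fresh list means callers can extend their copy with user-specific intentions without altering the shared table.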

[0089] The real ...
