Remote sensing object interpretation method based on focusing weight matrix and variable-scale semantic segmentation neural network

A technology combining semantic segmentation with a weight matrix, applied in the field of image processing, that addresses inaccurate recognition of remote sensing objects and improves recognition accuracy.

Embodiment Construction

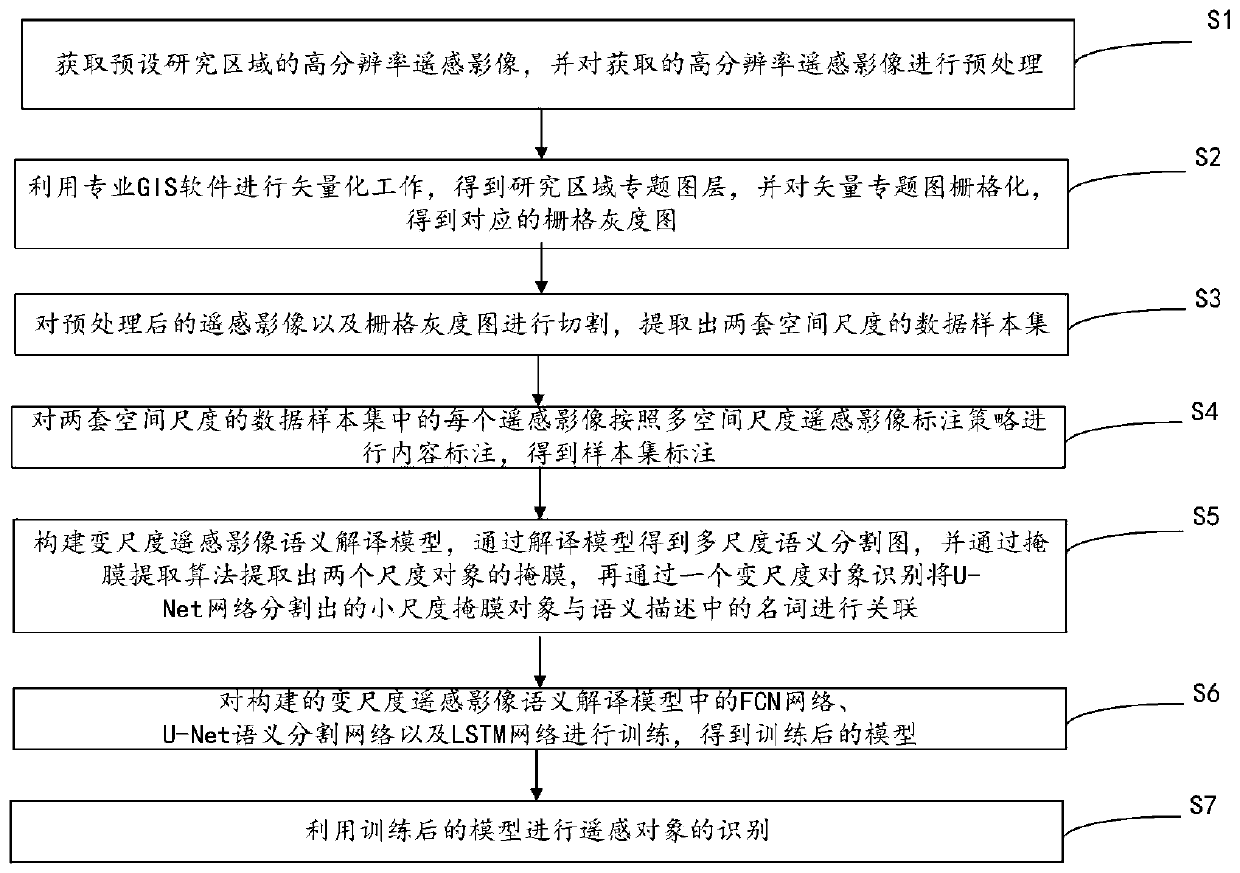

[0033] The purpose of the present invention is to solve a technical problem of prior-art methods: they recognize remote sensing objects inaccurately because they fail to recognize the spatial relationships between those objects correctly. To this end, the invention provides a method that establishes a connection between the semantic description generated by an LSTM and the object mask maps, transferring the spatial relationships stated in the semantic description to the object mask maps. This achieves semantic segmentation of remote sensing objects together with end-to-end recognition of their spatial relationships.
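The excerpt does not state how a relation in the generated description is bound to particular masks. As an illustration only, the sketch below assumes the description has already been parsed into a (subject, relation, object) triple, that each object class has exactly one binary mask, and that relations are coarse directions ("left of", "right of", "above", "below"). The functions `centroid`, `spatial_relation` and `transfer_relation` and the centroid-offset rule are hypothetical and are not the patented procedure.

```python
import numpy as np

def centroid(mask: np.ndarray) -> tuple[float, float]:
    """Return the (row, col) centroid of a non-empty binary mask."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("empty mask")
    return float(rows.mean()), float(cols.mean())

def spatial_relation(subj_mask: np.ndarray, obj_mask: np.ndarray) -> str:
    """Coarse direction of the subject relative to the object.

    Uses image coordinates (row index grows downward); the dominant
    axis of the centroid offset decides the relation (hypothetical rule).
    """
    (rs, cs), (ro, co) = centroid(subj_mask), centroid(obj_mask)
    dr, dc = rs - ro, cs - co
    if abs(dc) >= abs(dr):
        return "left of" if dc < 0 else "right of"
    return "above" if dr < 0 else "below"

def transfer_relation(triple, masks):
    """Attach a (subject, relation, object) triple to the matching masks.

    Returns (subject_mask, relation, object_mask, consistent), where
    `consistent` reports whether the mask geometry agrees with the
    relation stated in the description.
    """
    subj, rel, obj = triple
    subj_mask, obj_mask = masks[subj], masks[obj]
    return subj_mask, rel, obj_mask, spatial_relation(subj_mask, obj_mask) == rel

# Usage: a building on the left, a road on the right of an 8x8 tile.
masks = {"building": np.zeros((8, 8), dtype=bool), "road": np.zeros((8, 8), dtype=bool)}
masks["building"][2:4, 0:2] = True
masks["road"][2:4, 5:8] = True
_, rel, _, ok = transfer_relation(("building", "left of", "road"), masks)
print(rel, ok)  # left of True
```

The consistency flag is one possible way to let the description and the masks check each other; a learned model would replace the hand-written centroid rule.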

[0034] To achieve the above objective, the main idea of the present invention is as follows:

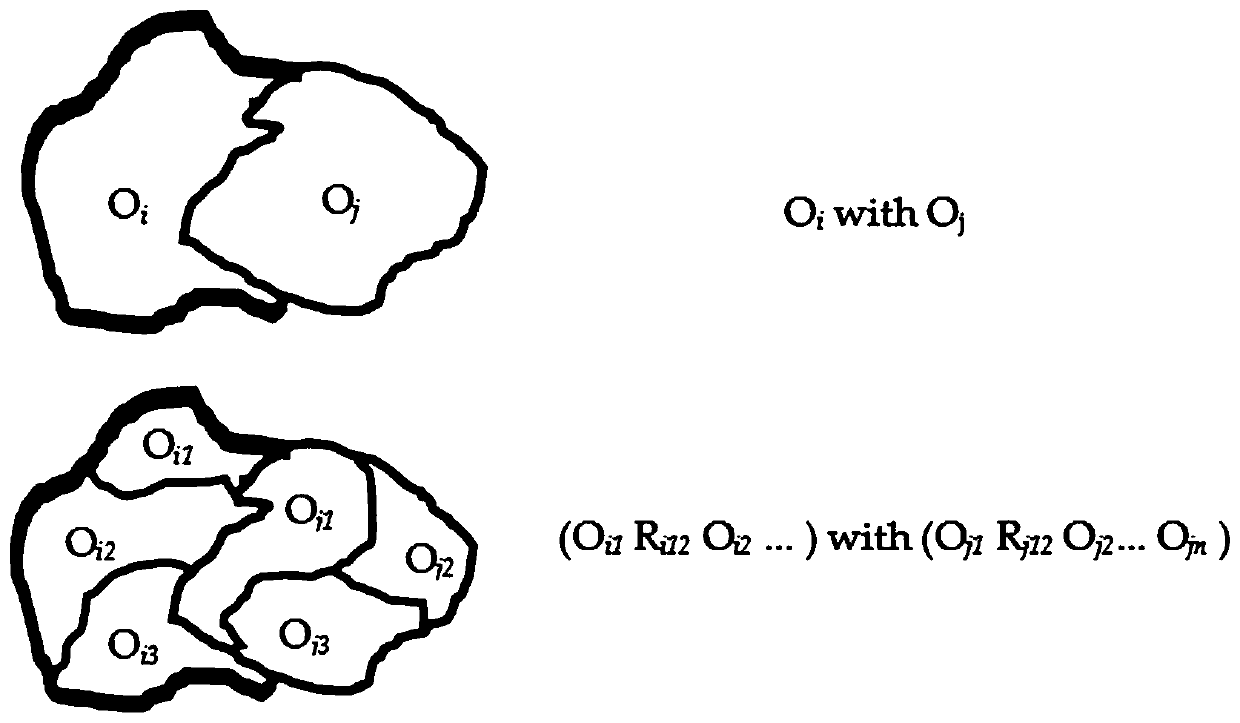

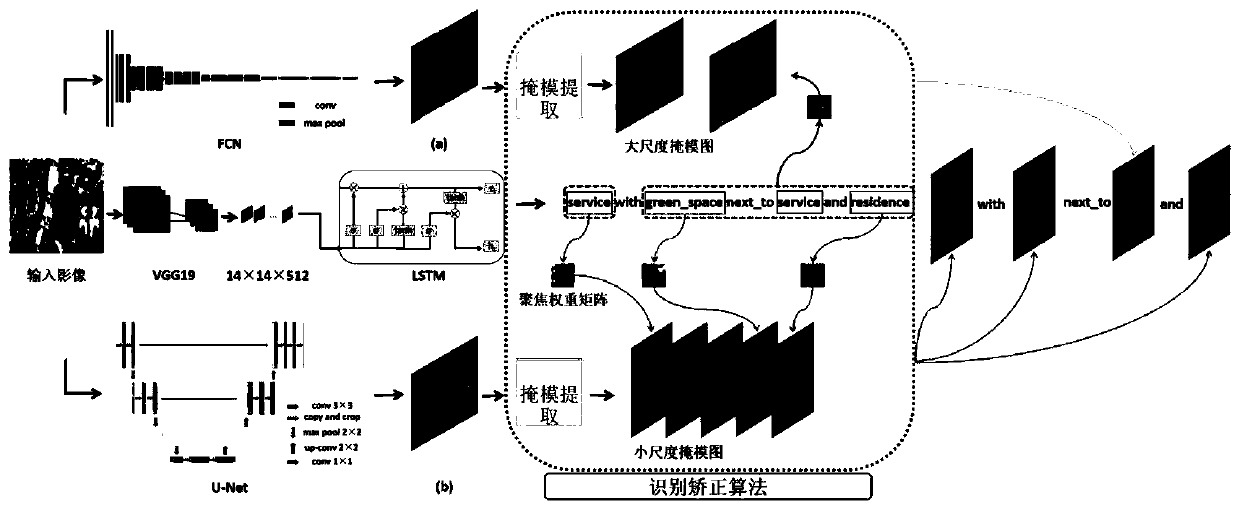

[0035] A variable-scale semantic interpretation model for remote sensing images is designed on the basis of FCN, U-Net and LSTM networks. The model generates remote sensing image descriptions at multiple spatial scales while, end-to-end, segmenting the objects in the image and identifying their spatial relationships. In this method, t...
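The title refers to a "focusing weight matrix", but the excerpt breaks off before defining it. A common way for an LSTM decoder to focus on image regions is additive soft attention (as in "Show, Attend and Tell"), which produces, at each decoding step, a weight map over the encoder's spatial grid. The sketch below assumes, without confirmation from the source, that the focusing weight matrix is such a map computed over an FCN/U-Net feature grid; the parameter names `W_a`, `W_h` and `w` are hypothetical.

```python
import numpy as np

def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()

def soft_attention(features, h, W_a, W_h, w):
    """One additive soft-attention step (assumed form of the focusing weights).

    features: (H, W, C) encoder feature map, e.g. from an FCN/U-Net backbone.
    h:        (D,) current LSTM hidden state.
    W_a: (C, K), W_h: (D, K), w: (K,) learned projections (hypothetical names).
    Returns (alpha, context): alpha is an (H, W) weight map summing to 1;
    context is the (C,) weighted feature vector passed to the LSTM.
    """
    H, W, C = features.shape
    a = features.reshape(H * W, C)           # flatten grid to N = H*W locations
    scores = np.tanh(a @ W_a + h @ W_h) @ w  # (N,) additive attention scores
    alpha = softmax(scores)                  # normalized weights over locations
    context = alpha @ a                      # (C,) weighted sum of location features
    return alpha.reshape(H, W), context

# Usage with random parameters: 4x5 grid, 8 channels, 16-d hidden state.
rng = np.random.default_rng(0)
features = rng.normal(size=(4, 5, 8))
h = rng.normal(size=16)
W_a, W_h, w = rng.normal(size=(8, 6)), rng.normal(size=(16, 6)), rng.normal(size=6)
alpha, context = soft_attention(features, h, W_a, W_h, w)
print(alpha.shape, context.shape)  # (4, 5) (8,)
```

Because `alpha` lives on the same spatial grid as the segmentation features, it offers one plausible bridge between a generated word and a region of the mask map; running the same step on feature maps of different resolutions would be one way to obtain the multi-scale behaviour described above.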
