A vision-based real-time human motion analysis method

A human motion analysis technique, applied in the field of computer vision, that aims to achieve high accuracy and strong adaptability and to improve performance.


Examples

Embodiment 1

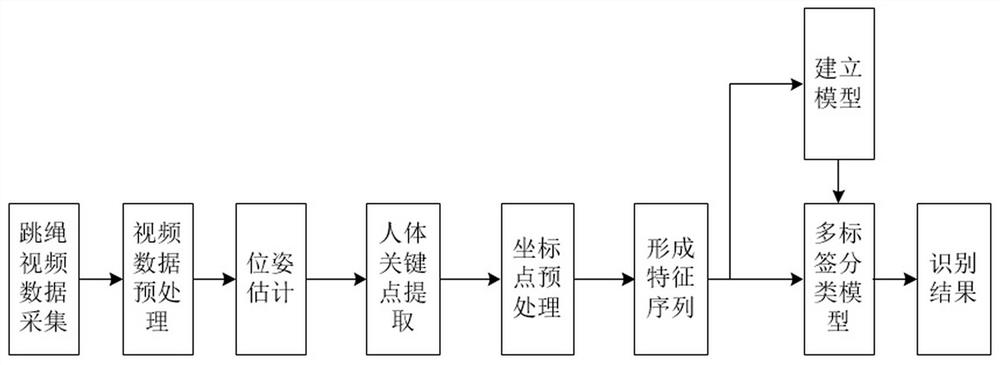

[0030] Embodiment 1: a vision-based real-time human motion analysis method, comprising the following steps:

[0031] Step 1: Capture a large amount of video stream data of human body movement with mobile phones, and record and save basic information about each target subject, including name, gender, age, and the name of the action;

[0032] Step 2: Preprocess the video data and estimate the human pose in each frame of the video to obtain the coordinates of the joint points, as follows:

[0033] Step 2-1: Convert the video data captured by different mobile phones to a unified scale;

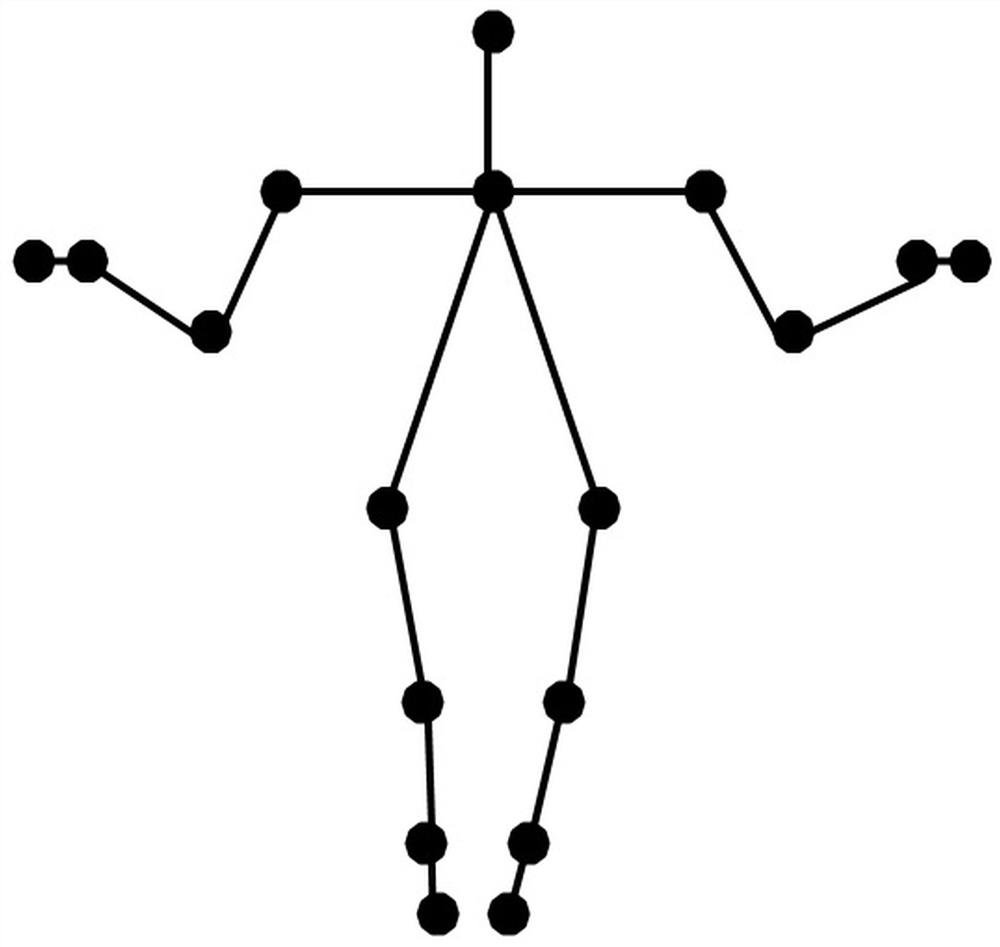

[0034] Step 2-2: Use the OpenPose method, through transfer learning, to obtain for each frame of the video the human body's nose, neck, right shoulder, right elbow, right wrist, right hand face, left shoulder, left elbow, left wrist, left hand face, right hip, right knee, right sole, right ankle, left hip, left knee, left ankle and left sole as the coordinate po...
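Step 2-1 does not say how the "unified scale" is reached. One possible reading, sketched below purely as an assumption, is to fit every frame into a common target resolution while preserving aspect ratio, and to map pixel coordinates with the same transform. The function names and the 640x360 target are hypothetical and do not come from the source.

```python
def fit_to_target(width, height, target_w=640, target_h=360):
    """Compute an aspect-preserving fit of a frame into a target canvas.

    Returns (scale, new_w, new_h, pad_x, pad_y), where the pads centre the
    resized frame inside the target canvas (letterboxing).
    The target size is an illustrative assumption, not a value from the source.
    """
    scale = min(target_w / width, target_h / height)
    new_w = round(width * scale)
    new_h = round(height * scale)
    pad_x = (target_w - new_w) // 2
    pad_y = (target_h - new_h) // 2
    return scale, new_w, new_h, pad_x, pad_y


def map_point(x, y, scale, pad_x, pad_y):
    """Map a pixel coordinate from the original frame onto the target canvas."""
    return x * scale + pad_x, y * scale + pad_y


if __name__ == "__main__":
    # A landscape 1080p clip and a portrait clip from a different phone.
    for w, h in [(1920, 1080), (1080, 1920)]:
        print((w, h), fit_to_target(w, h))
```

With a transform like this, joint coordinates estimated on clips from different phones land in the same pixel range before any later normalization.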

Embodiment 2

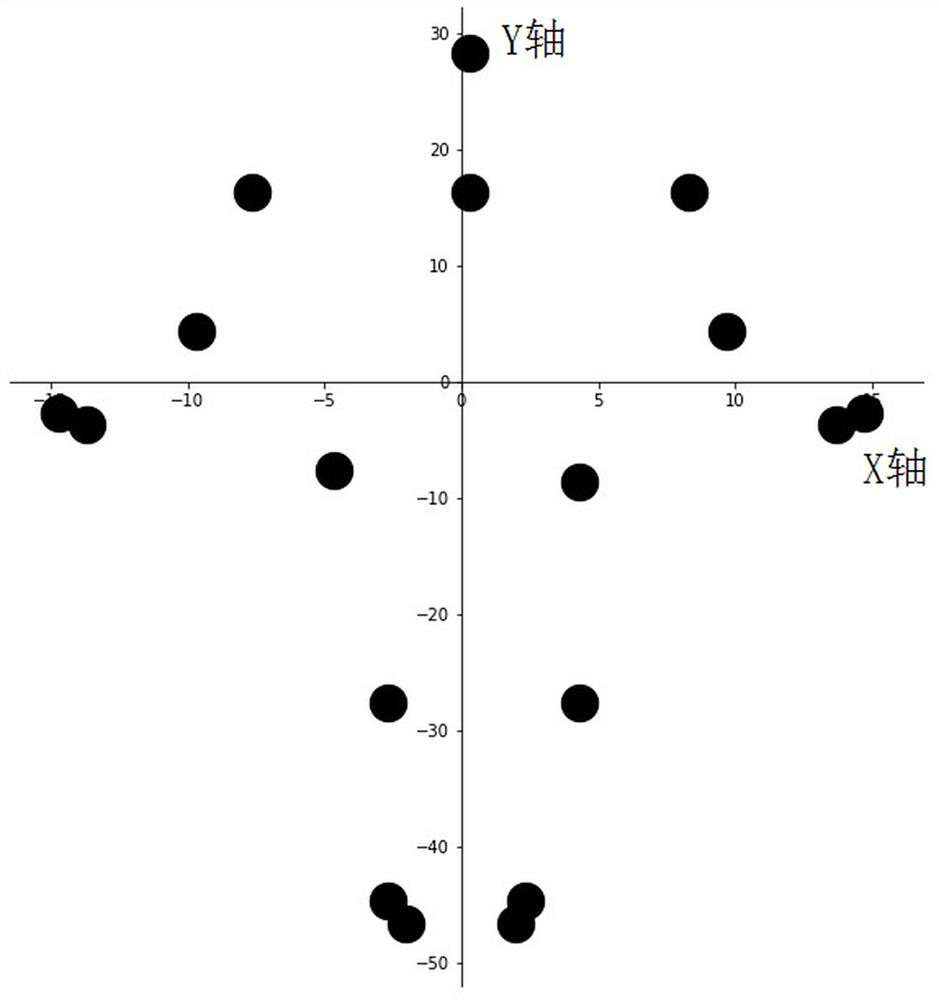

[0043] Embodiment 2: on the basis of Embodiment 1, when Step 2 is performed, the positions of four points (left sole, right sole, left hand face, and right hand face) are obtained with the Labelme image annotation tool, and OpenPose obtains the required coordinate positions of the 18 joint points. When Step 3 is executed, the coordinates of the left hip $C_{11}=(cx_{11},cy_{11})$, right hip $C_{15}=(cx_{15},cy_{15})$ and neck $C_{2}=(cx_{2},cy_{2})$ are obtained, and the coordinate origin is defined as the center of gravity of the three points $C_{2}$, $C_{11}$ and $C_{15}$, namely $C_{0}=(cx_{0},cy_{0})$, where $cx_{0}=(cx_{2}+cx_{11}+cx_{15})/3$ and $cy_{0}=(cy_{2}+cy_{11}+cy_{15})/3$. All coordinate points are then updated relative to this origin, and the Cartesian coordinates are converted into polar coordinates $pc_{i}=(\rho_{i},\theta_{i})$, where $i$ ranges from 1 to 18, $\rho_{i}>0$ and $-\pi<\theta_{i}\le\pi$.
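The re-centering and polar conversion described in this embodiment can be sketched as follows. This is a minimal illustration, assuming joints are stored in a dictionary keyed by the 1-based joint index used in the text (2 for the neck, 11 and 15 for the hips); the function name `to_polar` and the data layout are hypothetical.

```python
import math


def to_polar(joints, neck=2, hip_a=11, hip_b=15):
    """Re-centre joints on the neck/hip centroid and convert to polar form.

    joints: dict mapping 1-based joint index -> (x, y) in image coordinates.
    Returns ((cx0, cy0), {index: (rho, theta)}).
    """
    anchors = [joints[neck], joints[hip_a], joints[hip_b]]
    cx0 = sum(p[0] for p in anchors) / 3.0
    cy0 = sum(p[1] for p in anchors) / 3.0

    polar = {}
    for i, (x, y) in joints.items():
        dx, dy = x - cx0, y - cy0
        rho = math.hypot(dx, dy)      # distance from the new origin
        theta = math.atan2(dy, dx)    # angle in radians, within [-pi, pi]
        polar[i] = (rho, theta)
    return (cx0, cy0), polar


if __name__ == "__main__":
    # Toy skeleton: only a few of the 18 joints, for illustration.
    joints = {1: (0.0, 7.0), 2: (0.0, 6.0), 11: (-3.0, 0.0), 15: (3.0, 0.0)}
    origin, polar = to_polar(joints)
    print(origin)
    for idx, (rho, theta) in sorted(polar.items()):
        print(idx, round(rho, 3), round(theta, 3))
```

A joint that coincides exactly with the origin would give a radius of zero, which falls outside the stated $\rho_{i}>0$ condition; how the source handles that case is not described, so the sketch leaves it as is.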

Embodiment 3

[0044] Embodiment 3: on the basis of Embodiment 1, when Step 7 is executed, the GRU model is a variant of the LSTM (long short-term memory) network that merges the forget gate and the input gate into a single update gate. BiGRU is a bidirectional GRU consisting of two GRUs stacked one above the other, whose output is determined by the states of both: one recurrent network computes hidden vectors from front to back, the other computes hidden vectors from back to front, and the two together determine the final output.
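To make the gate structure concrete, here is a toy GRU with scalar input and scalar hidden state, run in both directions. It is a sketch under stated assumptions, not the patent's network: the parameter names are hypothetical, the state update uses one common convention (`h = (1 - z) * h_prev + z * candidate`), and pairing the forward and backward states per time step is an assumed way of combining them, since the source only says the output depends on both.

```python
import math


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def gru_step(x, h, p):
    """One GRU step with scalar input x and scalar hidden state h."""
    z = sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])   # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h + p["br"])   # reset gate
    cand = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])
    return (1.0 - z) * h + z * cand


def run_gru(xs, p):
    """Run a GRU over a sequence and return the hidden state at every step."""
    h, states = 0.0, []
    for x in xs:
        h = gru_step(x, h, p)
        states.append(h)
    return states


def run_bigru(xs, p_fwd, p_bwd):
    """Pair forward and backward hidden states for each time step."""
    fwd = run_gru(xs, p_fwd)
    bwd = run_gru(xs[::-1], p_bwd)[::-1]   # re-align backward states in time
    return list(zip(fwd, bwd))


if __name__ == "__main__":
    params = {"wz": 0.5, "uz": 0.1, "bz": 0.0,
              "wr": 0.3, "ur": 0.2, "br": 0.0,
              "wh": 0.8, "uh": 0.4, "bh": 0.0}
    for t, pair in enumerate(run_bigru([1.0, -2.0, 0.5], params, params)):
        print(t, pair)
```

The single update gate `z` plays the role that the separate forget and input gates play in an LSTM: it decides how much of the previous state is kept and how much of the candidate state is written.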

[0045] When constructing the network model, a batch normalization (Batch Normalization) layer is added before the BiGRU layer, so that the distribution of each layer's input data stays relatively stable and the model learns faster.
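As a rough illustration of what a batch normalization layer computes in training mode, the sketch below standardizes each feature across a batch and then applies a scale and shift. The function name and the fixed `gamma`, `beta` and `eps` values are assumptions for illustration; in a real layer the scale and shift are learned, and running statistics are kept for inference.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize each feature over the batch dimension.

    batch: list of equally sized feature vectors (one per sample).
    gamma, beta: scale and shift, shared by all features in this sketch.
    """
    n = len(batch)
    dims = len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(dims)]
    variances = [sum((row[j] - means[j]) ** 2 for row in batch) / n
                 for j in range(dims)]
    return [
        [gamma * (row[j] - means[j]) / math.sqrt(variances[j] + eps) + beta
         for j in range(dims)]
        for row in batch
    ]


if __name__ == "__main__":
    # Two features on very different scales end up in the same range.
    print(batch_norm([[1.0, 10.0], [3.0, 30.0], [5.0, 50.0]]))
```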

[0046] To achieve multi-label classification, the activation function of the last layer is set to the sigmoid function, and the loss function is binary_cross...
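The loss name is cut off in the source. The sketch below assumes it refers to the standard binary cross-entropy, which is the usual companion to sigmoid outputs in multi-label classification because each label is scored as an independent yes/no decision. Function names and the 0.5 decision threshold are hypothetical.

```python
import math


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def binary_cross_entropy(y_true, logits, eps=1e-12):
    """Mean binary cross-entropy over independent labels.

    y_true: list of 0/1 targets, one per label.
    logits: raw last-layer outputs before the sigmoid.
    """
    losses = []
    for t, v in zip(y_true, logits):
        p = sigmoid(v)
        # Clamp probabilities away from 0 so the logarithm stays finite.
        losses.append(-(t * math.log(max(p, eps))
                        + (1 - t) * math.log(max(1.0 - p, eps))))
    return sum(losses) / len(losses)


def predict_labels(logits, threshold=0.5):
    """Each label is switched on independently, so several can be active."""
    return [1 if sigmoid(v) >= threshold else 0 for v in logits]


if __name__ == "__main__":
    logits = [2.0, -1.0, 0.5]
    print(predict_labels(logits))
    print(binary_cross_entropy([1, 0, 1], logits))
```

Unlike a softmax output, the sigmoid scores do not have to sum to one, which is what allows one motion clip to carry several labels at once.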
