Weight adaptive pose estimation method based on point-line fusion

A pose estimation and self-adaptive technology applied in computing, image analysis, image enhancement, and other fields. It addresses problems such as uneven feature distribution, which degrades pose estimation, and improves description accuracy, descriptiveness, adaptability, and robustness.

Detailed Description of the Embodiments

[0027] The present invention is further described below with reference to the embodiments and the accompanying drawings. The specific embodiments serve only to describe the present invention in further detail and do not limit the scope of protection of the claims of the present application.

[0028] The present invention provides a weight-adaptive pose estimation method based on point-line fusion (hereinafter "the method"), characterized in that the method comprises the following steps:

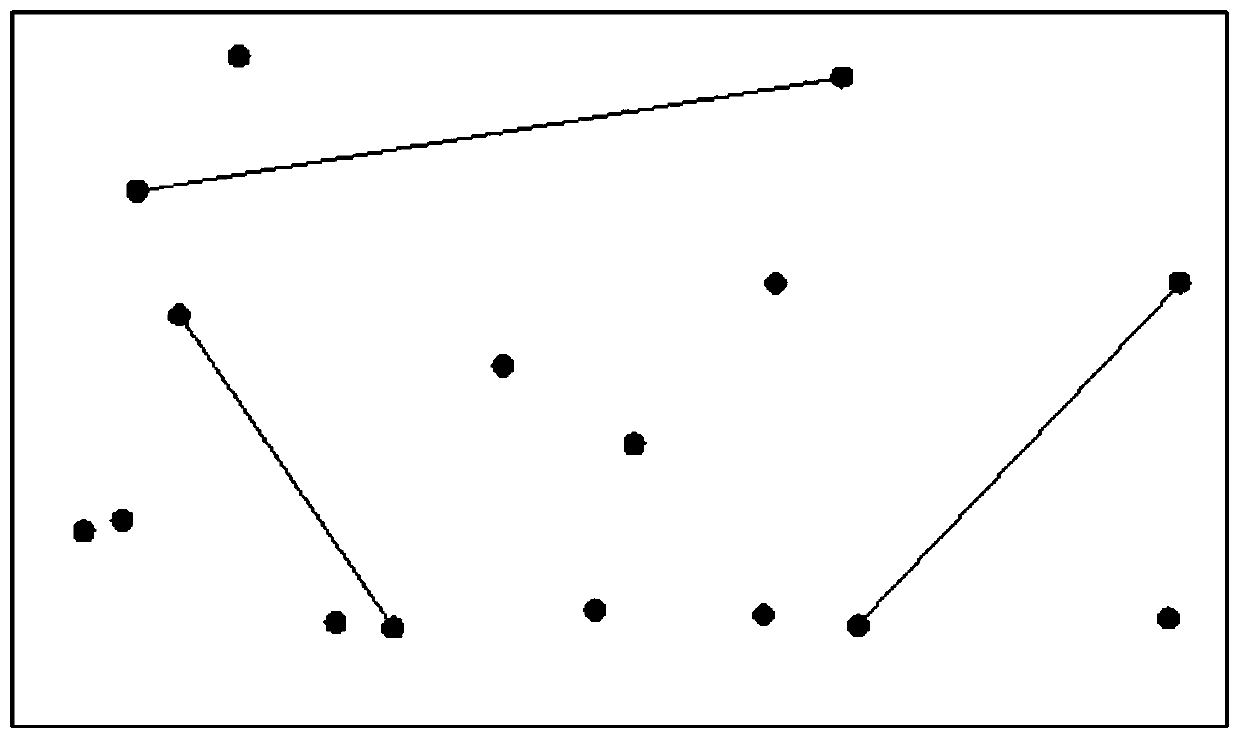

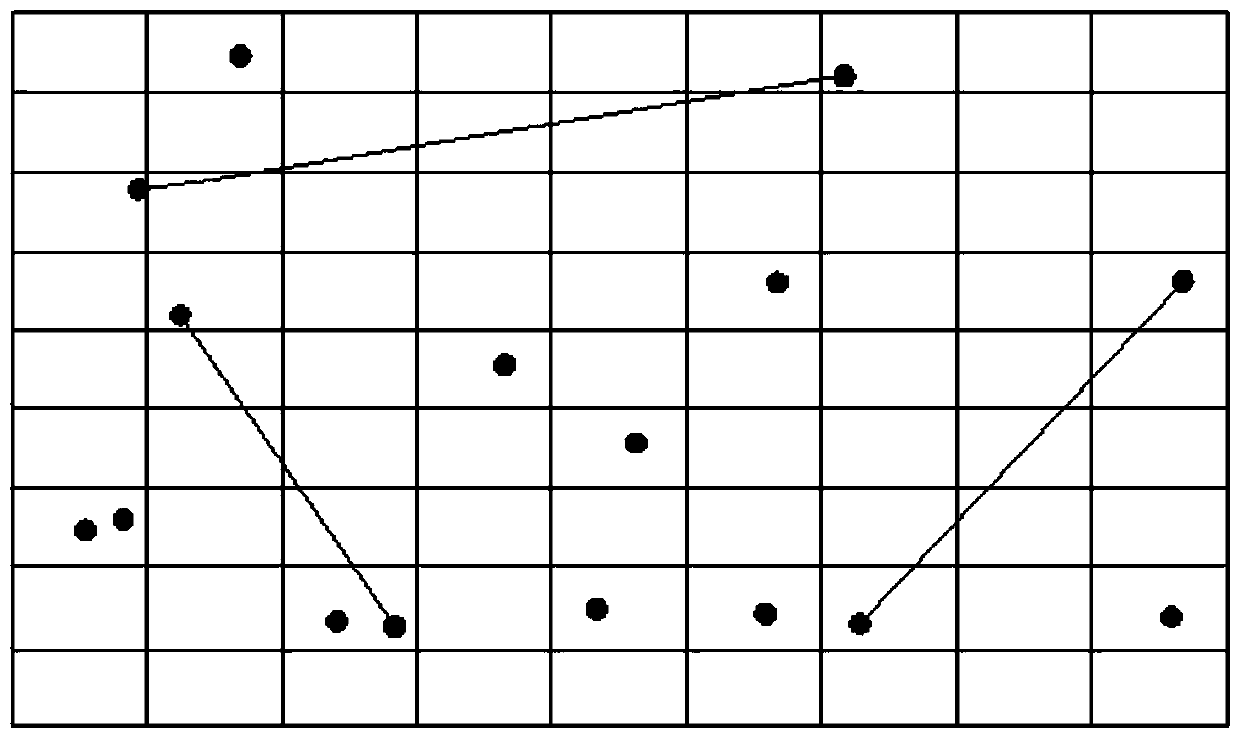

[0029] Step 1: Acquire a continuous image sequence with a binocular camera. The binocular camera captures scene information from the environment in real time, and the captured images form a continuous image sequence;
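Step 1 does not say how left and right images are combined into one continuous sequence. The following is a minimal Python sketch, assuming (this is not stated in the patent) that each camera delivers timestamped images separately, so pairs must be matched by time; a hardware-synchronized stereo rig would not need this step. The names `StereoFrame`, `build_stereo_sequence`, and the tolerance `max_dt` are hypothetical, and images are stand-in nested lists rather than a real image type.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical stand-in for a grayscale image: rows of pixel intensities.
Image = List[List[int]]


@dataclass
class StereoFrame:
    f: int        # frame index in the continuous sequence
    t: float      # timestamp in seconds (taken from the left image)
    left: Image
    right: Image


def build_stereo_sequence(
    left: Sequence[Tuple[float, Image]],
    right: Sequence[Tuple[float, Image]],
    max_dt: float = 0.005,
) -> List[StereoFrame]:
    """Pair left/right images whose timestamps differ by at most max_dt.

    Assumption: both inputs are sorted by timestamp. Unmatched images are
    dropped, and kept pairs receive consecutive indices f = 0, 1, 2, ...
    """
    frames: List[StereoFrame] = []
    i = j = 0
    while i < len(left) and j < len(right):
        tl, img_l = left[i]
        tr, img_r = right[j]
        if abs(tl - tr) <= max_dt:
            frames.append(StereoFrame(len(frames), tl, img_l, img_r))
            i += 1
            j += 1
        elif tl < tr:
            i += 1  # this left image has no partner; skip it
        else:
            j += 1  # this right image has no partner; skip it
    return frames
```

With left timestamps 0.000, 0.033, 0.066 s and right timestamps 0.001, 0.067 s, the sketch keeps two stereo frames (f = 0 and f = 1) and drops the unmatched left image at 0.033 s.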

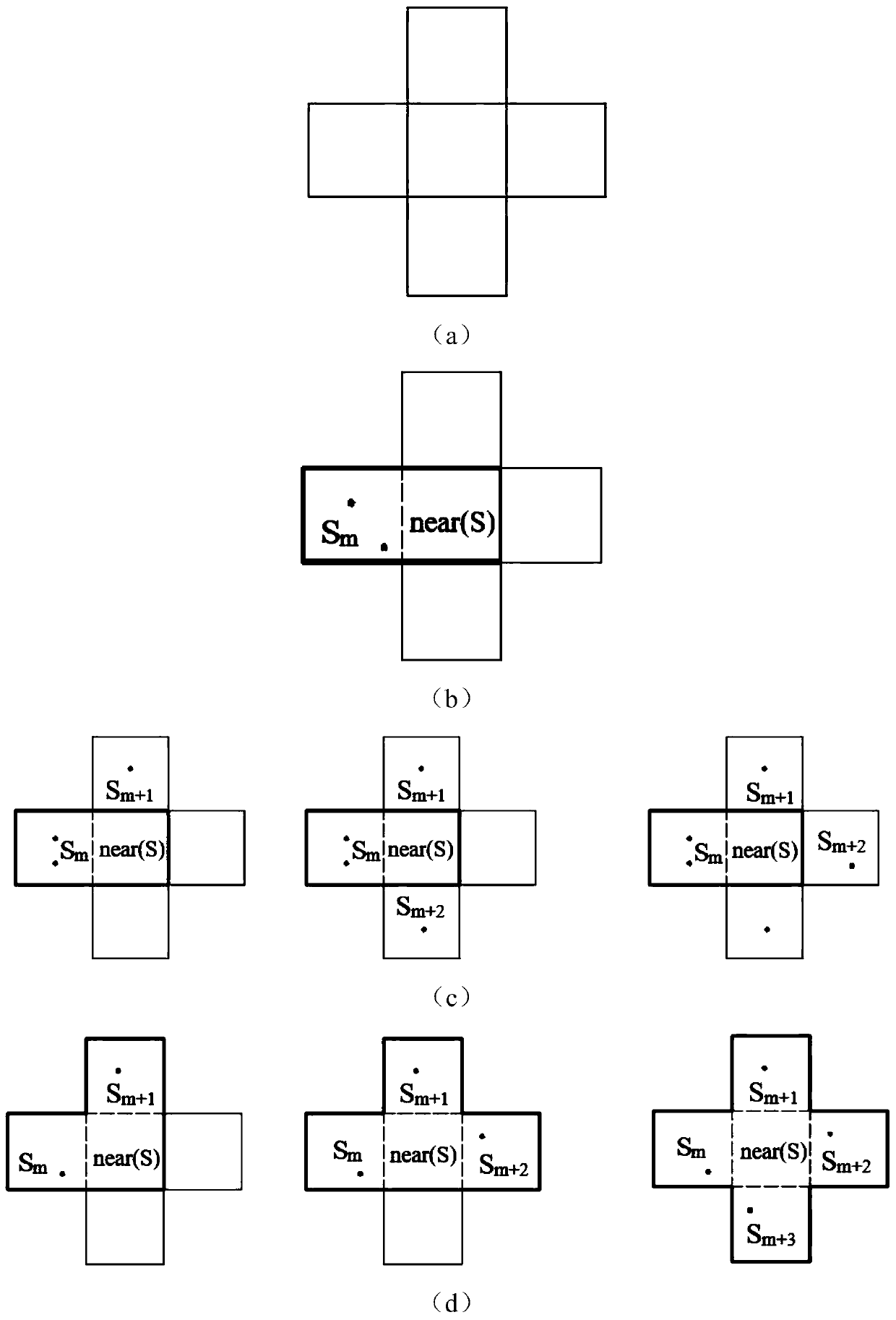

[0030] Step 2: Perform feature extraction and processing on the images to obtain, for each frame $f$, the total number $n$ of point features and line-feature endpoints, together with their pixel coordinate set $C_j$:

[0031] (1) Use the ORB alg...
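Step 2 defines its output per frame $f$ as the count $n$ of point features plus line-feature endpoints and their pixel coordinates $C_j$, but the extraction details are truncated above. A minimal Python sketch of assembling that output from already-detected features follows. It assumes point features arrive as (u, v) pixel coordinates (for example from ORB) and that each line feature is a segment given by its two endpoints; the line detector is not named in the visible text. Reading $C_j$ as the coordinate of the j-th feature, the ordering (points first, then endpoints), and the function name `collect_frame_features` are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float]    # (u, v) pixel coordinates
Segment = Tuple[Pixel, Pixel]  # a line feature given by its two endpoints


def collect_frame_features(
    points: Sequence[Pixel],
    lines: Sequence[Segment],
) -> Tuple[int, List[Pixel]]:
    """Return n and the coordinate list C for one frame f (hypothetical helper).

    Assumption: C[j - 1] holds C_j, the pixel coordinate of the j-th feature
    (j = 1..n), with point features first and then both endpoints of each
    line feature. Endpoints shared by several segments are NOT merged.
    """
    coords: List[Pixel] = list(points)
    for start, end in lines:
        coords.append(start)
        coords.append(end)
    n = len(coords)  # equals len(points) + 2 * len(lines)
    return n, coords
```

For example, two point features plus one line segment give n = 2 + 2 * 1 = 4, with the segment's endpoints stored as C_3 and C_4.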
