Computing resource allocation method, device and storage medium based on hybrid distributed architecture

A technology combining hybrid computing and computing resources, applied in the field of big data processing. It addresses problems such as resource preemption, inconsistent configuration processes and user interfaces, and the inability to meet the real-time requirements of computing tasks, thereby achieving efficient and flexible scheduling and meeting diverse requirements.

Embodiment Construction

[0027] In order to further illustrate the technical means and effects adopted by the present invention to achieve the predetermined purpose, the present invention will be described in detail below with reference to the accompanying drawings and preferred embodiments.

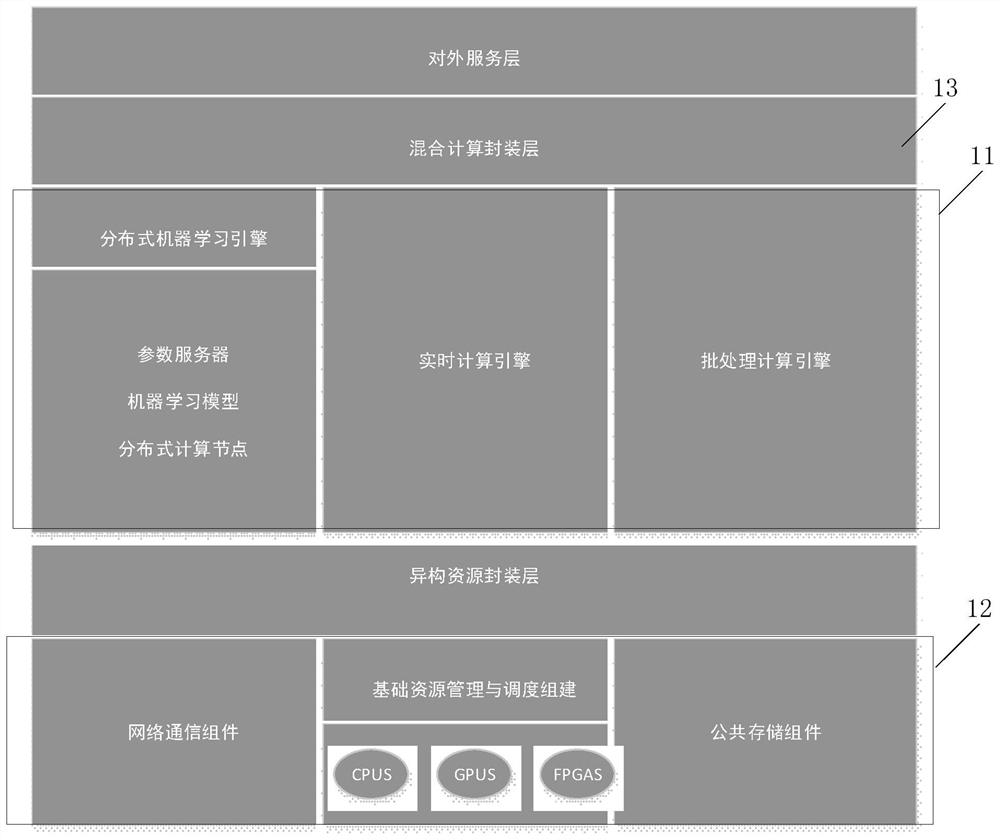

[0028] As shown in Figure 1, an embodiment of the present invention provides a distributed computing system based on hybrid computing resources, which includes a computing engine layer 11, a hybrid computing encapsulation layer 13, and a resource scheduling layer 12, wherein:

[0029] The computing engine layer 11 is composed of multiple deep learning frameworks built on the same Spark computing engine;

[0030] The hybrid computing encapsulation layer 13 is used to uniformly encapsulate the access interfaces of the deep learning frameworks in the computing engine layer 11;

[0031] The resource scheduling layer 12 includes a variety of heterogeneous computing resources, and the heterogeneous computing r...
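The three-layer arrangement above can be pictured with a minimal Python sketch. It assumes that the encapsulation layer behaves like an adapter-style common interface over the framework bindings and that the scheduling layer performs simple first-fit allocation over typed resources; these are illustrative assumptions only, since paragraph [0031] is truncated and the embodiment's actual allocation rule is not given here. All names (`Resource`, `Job`, `FrameworkAdapter`, `EchoAdapter`, `FirstFitScheduler`, `HybridComputeFacade`, `fw_a`, `fw_b`) are hypothetical.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Resource:
    """One heterogeneous compute resource (hypothetical model)."""
    name: str
    kind: str        # assumed resource categories, e.g. "cpu" or "gpu"
    free_slots: int  # remaining capacity, in abstract slots


@dataclass
class Job:
    """A task submitted through the unified interface (hypothetical model)."""
    framework: str   # key into the adapter registry
    kind: str        # resource kind the job needs
    slots: int       # capacity the job needs


class FrameworkAdapter(ABC):
    """Uniform access interface every framework wrapper implements (encapsulation layer)."""

    @abstractmethod
    def submit(self, job: Job, resource: Resource) -> str:
        ...


class EchoAdapter(FrameworkAdapter):
    """Stand-in for a real framework binding; it only reports what it would run."""

    def __init__(self, framework: str) -> None:
        self.framework = framework

    def submit(self, job: Job, resource: Resource) -> str:
        return f"{self.framework} job on {resource.name}"


class FirstFitScheduler:
    """Hypothetical scheduling layer: first resource of the right kind with enough free slots."""

    def __init__(self, resources: List[Resource]) -> None:
        self.resources = resources

    def allocate(self, job: Job) -> Optional[Resource]:
        for res in self.resources:
            if res.kind == job.kind and res.free_slots >= job.slots:
                res.free_slots -= job.slots  # reserve capacity so later jobs cannot take it
                return res
        return None  # no fit: the caller may queue or reject the job

    def release(self, job: Job, res: Resource) -> None:
        res.free_slots += job.slots  # return capacity when the job finishes


class HybridComputeFacade:
    """Single entry point: look up the framework adapter, then allocate a resource."""

    def __init__(self, adapters: Dict[str, FrameworkAdapter], scheduler: FirstFitScheduler) -> None:
        self.adapters = adapters
        self.scheduler = scheduler

    def run(self, job: Job) -> Optional[str]:
        adapter = self.adapters[job.framework]  # KeyError for an unregistered framework
        resource = self.scheduler.allocate(job)
        if resource is None:
            return None
        return adapter.submit(job, resource)


if __name__ == "__main__":
    facade = HybridComputeFacade(
        adapters={"fw_a": EchoAdapter("fw_a"), "fw_b": EchoAdapter("fw_b")},
        scheduler=FirstFitScheduler([Resource("gpu0", "gpu", 2), Resource("cpu0", "cpu", 8)]),
    )
    print(facade.run(Job("fw_a", "gpu", 2)))  # fw_a job on gpu0
    print(facade.run(Job("fw_b", "gpu", 1)))  # None: gpu0 is fully reserved
    print(facade.run(Job("fw_b", "cpu", 4)))  # fw_b job on cpu0
```

The point of the facade is that callers never touch a framework-specific API: they describe the job once, and the same `run` call works for every registered framework. Reserving slots at allocation time is one simple way to avoid two jobs claiming the same capacity; a real scheduler would likely add queuing, priorities, or deadline awareness.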
