Pre-training text abstract generation method based on neural topic memory

A pre-training and topic-based technology, applied in neural architectures, biological neural network models, unstructured text data retrieval, etc. It can solve the problem of being unable to complete inverted sentences, and achieves the effects of improving satisfaction and producing smooth, natural sentences.

Embodiment Construction

[0059] The present invention will be described in further detail below in conjunction with the accompanying drawings.

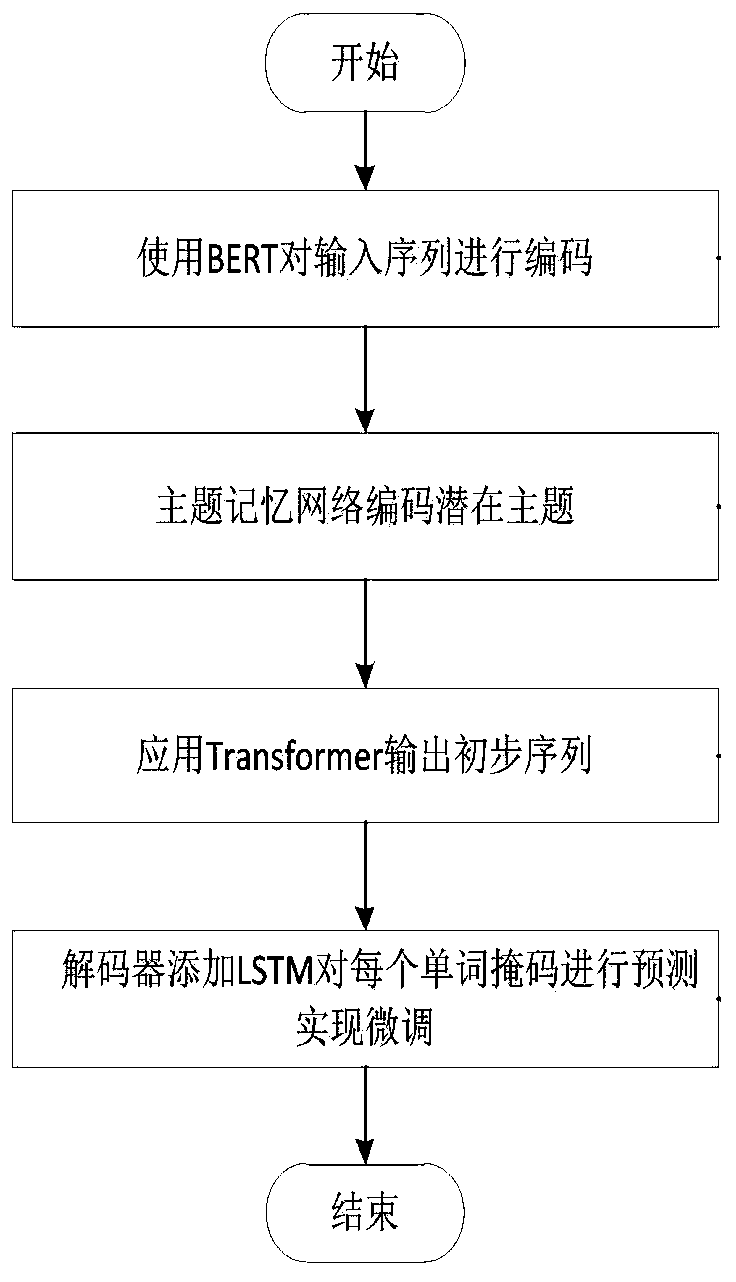

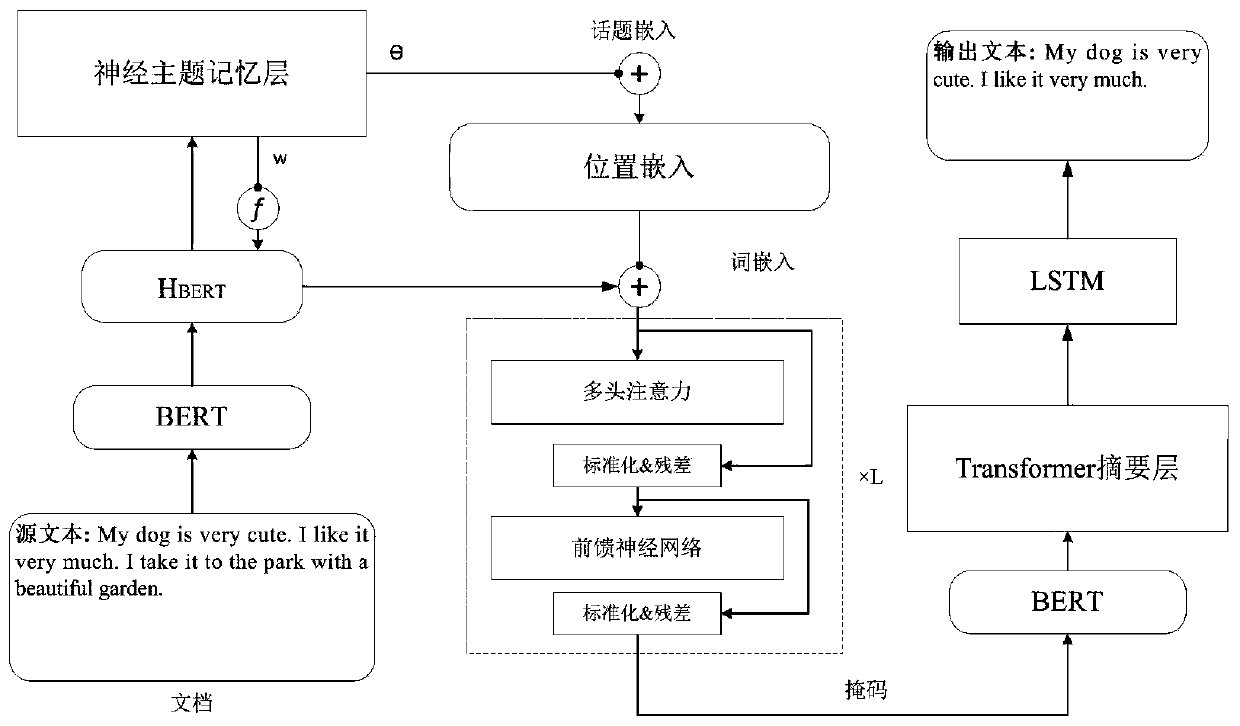

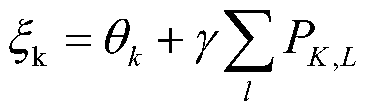

[0060] The present invention proposes a pre-trained text summary generation method based on neural topic memory. The method makes full use of a pre-trained language model in both encoding and decoding, and supports end-to-end training without hand-crafted features. In addition, a topic memory network encodes the latent topic representation of the document, so that pre-trained topics and topic vocabulary can be used as features, which helps the model capture the important information in the article. The topic-aware encoded sequence is fed into the decoder, where Transformer multi-head attention performs soft alignment and outputs a preliminary summary sequence. Bidirectional-context BERT and LSTM layers are then used to extract deeper features, and the parameters are fine-tuned to generate a smoother and more informative ...
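The paragraph above names the pipeline stages but gives no equations. As a rough illustration only, the topic-aware encoding and the attention-based soft alignment could be sketched in plain Python under these assumptions. The topic memory is simplified so that each token encoding is matched against one key vector per topic, and the match is weighted by the document-topic mixture `theta`. The memory read is added to the token encoding as a residual. Multi-head attention splits vectors into equal slices without learned projections. The functions `topic_memory_read`, `topic_aware_encode`, and `multi_head_attention` and the toy vectors are hypothetical and are not the patent's formulation. The BERT/LSTM refinement stage is not shown.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def weighted_sum(weights: Vector, rows: Matrix) -> Vector:
    """Return sum_i weights[i] * rows[i]."""
    return [sum(w * row[d] for w, row in zip(weights, rows)) for d in range(len(rows[0]))]


def topic_memory_read(token: Vector, topic_keys: Matrix, topic_values: Matrix, theta: Vector) -> Vector:
    """Hypothetical memory read: weight_k is proportional to theta_k * exp(token . key_k)."""
    match = softmax([dot(token, key) for key in topic_keys])
    raw = [t * p for t, p in zip(theta, match)]
    total = sum(raw)  # assumes theta is a topic distribution with some nonzero mass
    return weighted_sum([r / total for r in raw], topic_values)


def topic_aware_encode(tokens: Matrix, topic_keys: Matrix, topic_values: Matrix, theta: Vector) -> Matrix:
    """Add the topic-memory read to each token encoding (assumed residual connection)."""
    return [
        [h + m for h, m in zip(tok, topic_memory_read(tok, topic_keys, topic_values, theta))]
        for tok in tokens
    ]


def attention(query: Vector, keys: Matrix, values: Matrix) -> Tuple[Vector, Vector]:
    """Scaled dot-product attention: soft alignment of one query over all keys."""
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, k) * scale for k in keys])
    return weights, weighted_sum(weights, values)


def multi_head_attention(query: Vector, keys: Matrix, values: Matrix, num_heads: int) -> Vector:
    """Split vectors into equal slices, attend per slice, concatenate (no learned projections)."""
    dim = len(query)
    if dim % num_heads != 0:
        raise ValueError("dimension must be divisible by num_heads")
    head_dim = dim // num_heads
    out: Vector = []
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        _, head_out = attention(query[lo:hi], [k[lo:hi] for k in keys], [v[lo:hi] for v in values])
        out.extend(head_out)
    return out


if __name__ == "__main__":
    tokens = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5]]      # toy encoder outputs
    topic_keys = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]   # one key per topic
    topic_values = [[0.1, 0.1, 0.1, 0.1], [0.2, -0.2, 0.2, -0.2]]
    theta = [0.7, 0.3]  # toy document-topic mixture
    memory = topic_aware_encode(tokens, topic_keys, topic_values, theta)
    decoder_state = [0.5, 0.5, 0.0, 1.0]
    print(multi_head_attention(decoder_state, memory, memory, num_heads=2))
```

In a real model the keys, values, and per-head projections would be learned, and `theta` would come from the neural topic model. The sketch only shows how topic information can be mixed into the encoder states before the decoder's attention aligns over them.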
