Face quality evaluation method and device

A face quality evaluation technique for target faces, applied in the image field. It addresses problems such as the high difficulty of constructing samples, the high difficulty of training, and wrong recognition results, with the effect of improving analysis efficiency or monitoring analysis accuracy, saving display space and storage space, and improving operability.

Examples

Example 1

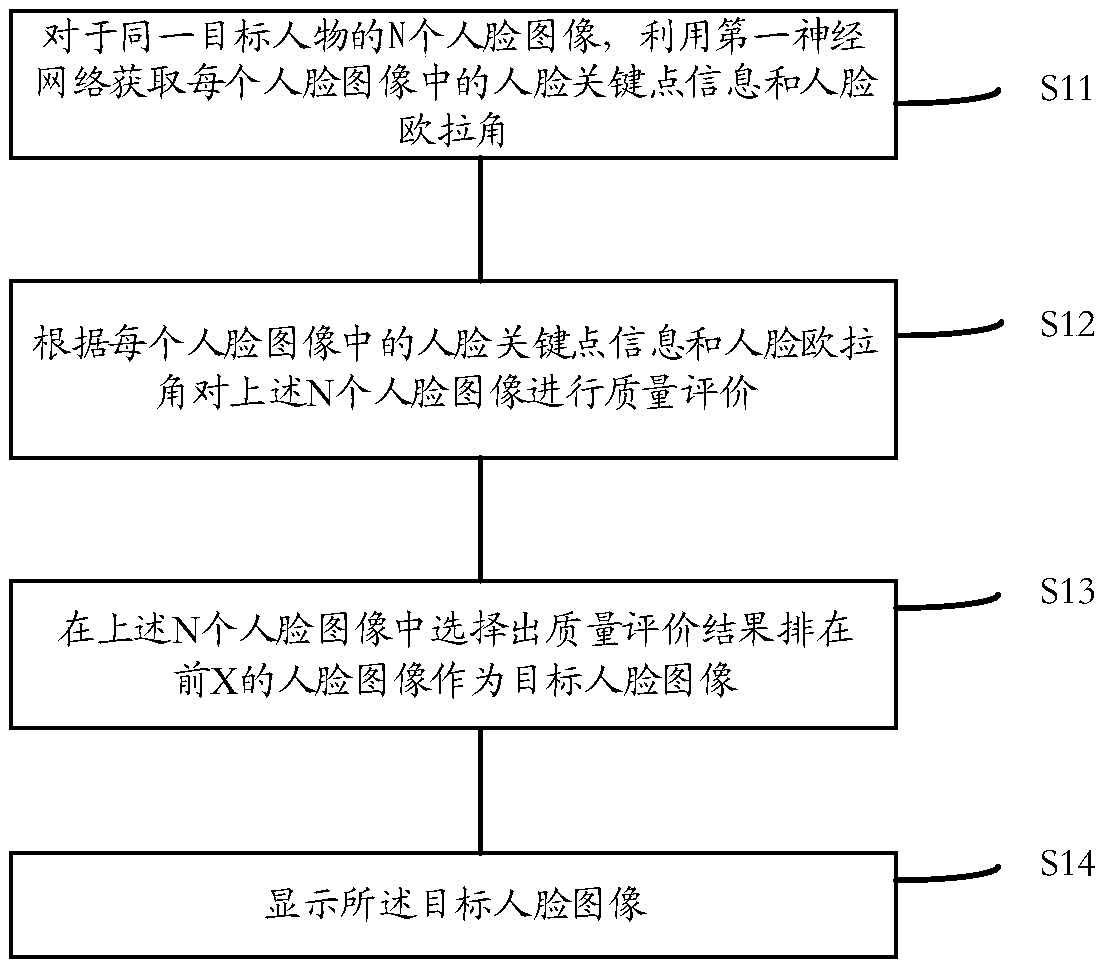

[0105] As shown in Figure 7, for example, the first neural network includes Net1, Net2, and Net3. Net1 includes, but is not limited to, Conv (convolution), BN (batch normalization), Pooling, and ReLU (rectified linear unit) layers; Net2 includes, but is not limited to, Deconv (deconvolution), Conv, ReLU, and Upsampling layers; Net3 includes, but is not limited to, FC (fully connected), Pooling, ReLU, and Regression layers.
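To make the roles of Net1 and Net2 concrete, the sketch below traces how the spatial size of a feature map changes through a downsampling stack followed by an upsampling stack. It uses the standard output-size formulas for convolution, pooling, and transposed convolution. The input size, kernel sizes, strides, and layer counts are all hypothetical; the source names only the layer types.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a convolution or pooling layer along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def deconv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output size of a transposed convolution (deconvolution) along one dimension."""
    return (size - 1) * stride - 2 * padding + kernel


input_size = 128  # hypothetical square input face image

# Net1 (hypothetical layout): Conv 3x3 -> Pool 2x2 -> Conv 3x3 -> Pool 2x2
size = conv_out(input_size, kernel=3, stride=1, padding=1)
size = conv_out(size, kernel=2, stride=2)
size = conv_out(size, kernel=3, stride=1, padding=1)
net1_size = conv_out(size, kernel=2, stride=2)

# Net2 (hypothetical layout): Deconv 4x4 stride 2 -> Conv 3x3
size = deconv_out(net1_size, kernel=4, stride=2, padding=1)
net2_size = conv_out(size, kernel=3, stride=1, padding=1)

M = 5  # number of key points, one output channel per key point
feature_map_shape = (M, net2_size, net2_size)
```

Under these assumed settings Net1 reduces the resolution and Net2 partially restores it, so the final feature map has one W*H response map per key point.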

[0106] S21: for any input face image, Net1 and Net2 output a feature map of size M*W*H. The visibility of each key part or key point of the face is judged from the response of this feature map. Here M is the number of key points that the user cares about. For example, with a configuration of two eyes, one nose tip, and two mouth corners, there are five points in total, so M is 5. Taking Figure 7 as an example, the three key points of...
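As a minimal sketch of reading visibility off the feature map response: the code below takes the peak value of each of the M channels as that key point's visibility score and compares it with a threshold. Both the peak-response rule and the threshold value of 0.5 are assumptions made for illustration; the source says only that visibility is judged from the response.

```python
from typing import List, Tuple

Heatmap = List[List[float]]  # one W x H response map for a single key point


def keypoint_visibility(
    feature_map: List[Heatmap], threshold: float = 0.5
) -> List[Tuple[float, bool]]:
    """Return (peak_response, is_visible) for each key point channel.

    Assumption: the peak response of a channel is used as its visibility
    score, and a hypothetical threshold decides visible vs. not visible.
    """
    results = []
    for channel in feature_map:
        peak = max(max(row) for row in channel)
        results.append((peak, peak >= threshold))
    return results


# M = 5 key points (two eyes, nose tip, two mouth corners), toy 2x2 maps.
feature_map = [
    [[0.1, 0.9], [0.2, 0.3]],  # left eye: strong response
    [[0.0, 0.1], [0.8, 0.2]],  # right eye
    [[0.7, 0.1], [0.1, 0.0]],  # nose tip
    [[0.1, 0.2], [0.1, 0.3]],  # left mouth corner: weak response
    [[0.0, 0.6], [0.1, 0.1]],  # right mouth corner
]
scores = keypoint_visibility(feature_map)
```

In this toy input the left mouth corner never exceeds the threshold, so it is the only key point marked as not visible.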

Example 2

[0127] As shown in Figure 8, for example, the first neural network includes Net1, Net2, and Net3. Net1 includes, but is not limited to, Conv (convolution), BN (batch normalization), Pooling, and ReLU (rectified linear unit) layers; Net2 includes, but is not limited to, Deconv (deconvolution), Conv, ReLU, and Upsampling layers; Net3 includes, but is not limited to, FC (fully connected), Pooling, ReLU, and Regression layers.

[0128] S31: obtain the visibility score P_i of each key point in each face image, as in S21 above, together with the yaw angle, pitch angle, and roll angle of the face.

[0129] S32: the key point visibility scores and the face Euler angles are no longer combined into the total face quality score through the formula in Example 1. Instead, they are fused and used as the input of a trained score network (the second neural network), and the output result of the score ...
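One way to picture the fusion in S32 is to concatenate the visibility scores and the three Euler angles into a single vector and pass it through a small network. The sketch below is illustrative only: the division of angles by 90 degrees, the one-hidden-layer shape, the sigmoid output, and every weight value are hypothetical, since a real score network would be trained and the source does not describe its structure.

```python
import math
from typing import List, Sequence


def fuse_inputs(
    visibility: Sequence[float], yaw: float, pitch: float, roll: float
) -> List[float]:
    """Concatenate visibility scores P_i with the Euler angles.

    Assumption: angles are in degrees and scaled by 90 to a similar range.
    """
    return list(visibility) + [yaw / 90.0, pitch / 90.0, roll / 90.0]


def score_network(
    x: Sequence[float],
    w1: Sequence[Sequence[float]],
    b1: Sequence[float],
    w2: Sequence[float],
    b2: float,
) -> float:
    """Hypothetical second network: one ReLU hidden layer, sigmoid output."""
    hidden = [
        max(0.0, sum(w * v for w, v in zip(row, x)) + b)
        for row, b in zip(w1, b1)
    ]
    logit = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-logit))


# Hand-picked weights standing in for trained parameters.
w1 = [[1.0] * 5 + [0.0] * 3,   # hidden unit 0 sums the visibility scores
      [0.0] * 5 + [1.0] * 3]   # hidden unit 1 sums the scaled angles
b1 = [0.0, 0.0]
w2 = [1.0, -2.0]
b2 = -2.0

visibility = [0.9, 0.8, 0.7, 0.3, 0.6]
frontal = fuse_inputs(visibility, yaw=0.0, pitch=0.0, roll=0.0)
turned = fuse_inputs(visibility, yaw=45.0, pitch=0.0, roll=0.0)

frontal_score = score_network(frontal, w1, b1, w2, b2)
turned_score = score_network(turned, w1, b1, w2, b2)
```

With these stand-in weights the frontal face receives a higher score than the turned face. That ordering is a property of the chosen weights, not a result reported in the source.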
