Indoor positioning and navigation method based on UWB fused visual SLAM

An indoor positioning and vision technology, applied in the fields of surveying and mapping, navigation, and navigation calculation tools, which addresses the rapid accumulation of errors, reduces time consumption, and increases recall rate and accuracy rate.

Embodiment Construction

[0033] The present invention is further described below in conjunction with the drawings and embodiments.

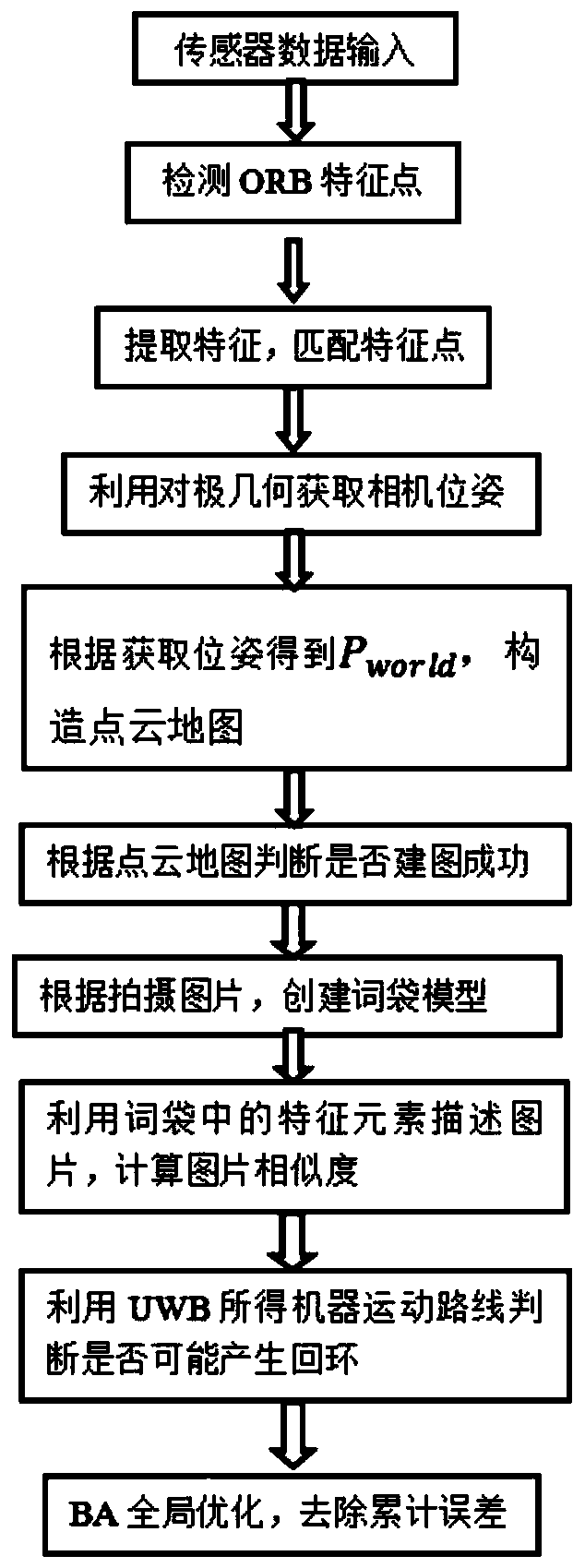

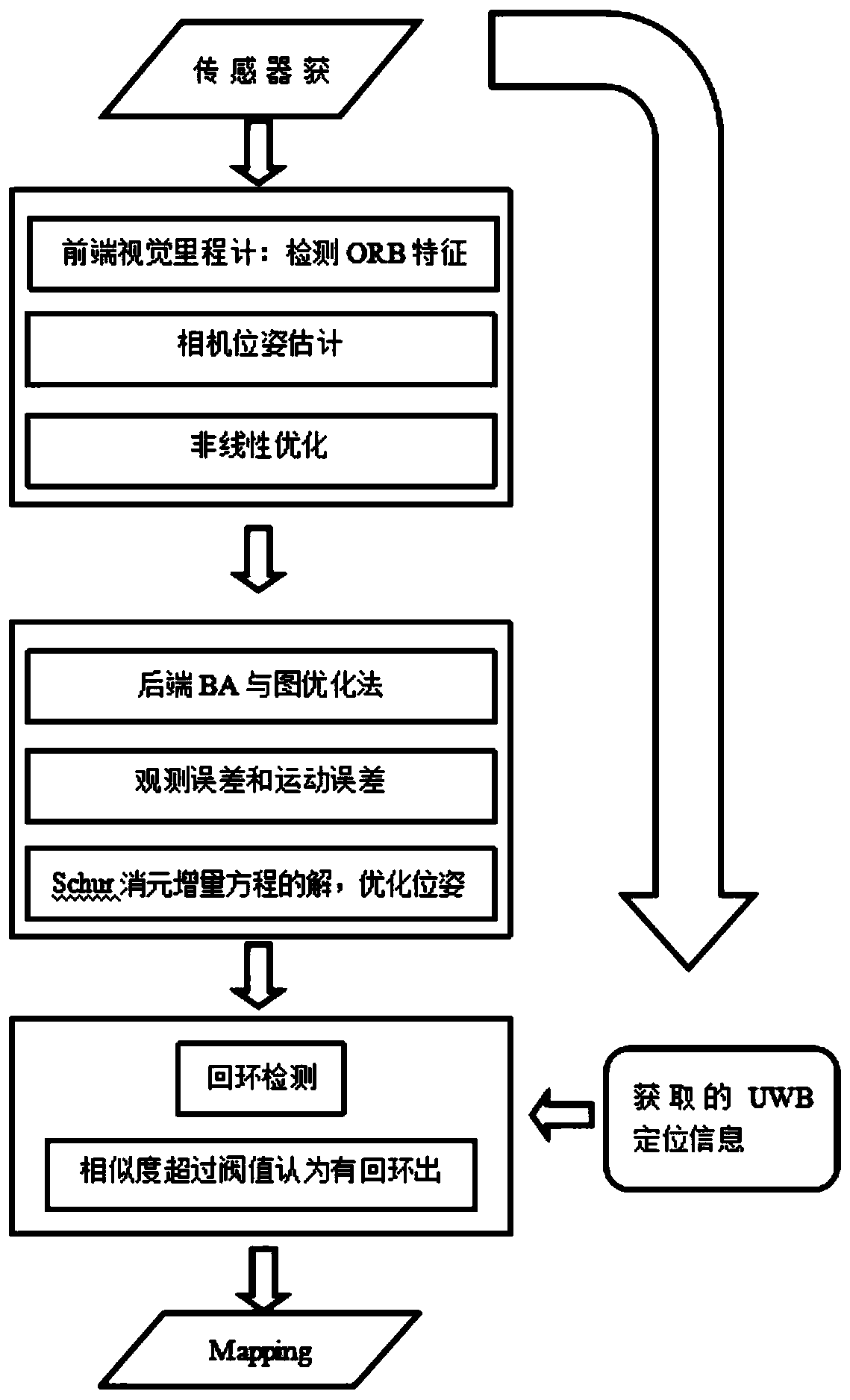

[0034] As shown in Figure 1, the specific implementation steps of the indoor positioning and navigation method based on visual SLAM fused with UWB are as follows:

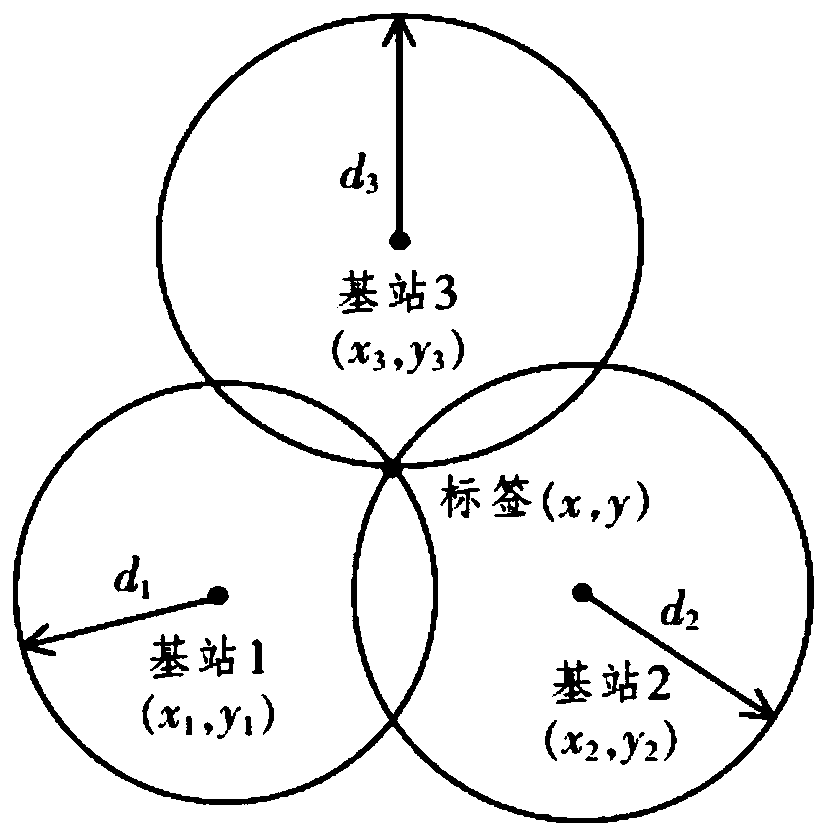

[0035] Step 1. Take the initial position of the robot as the origin and its initial orientation as the X axis to establish a world coordinate system, and select three locations in the room at which to set up the base stations. The robot carries an RGB-D camera and a signal transmitting and receiving device and moves along a preset route, capturing color images and depth images of the environment frame by frame with the camera. While the robot moves and captures images, the UWB trilateration method is used to obtain and record the time-varying coordinates of the camera in the world coordinate system, according to the positional relationship between th...
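The trilateration in Step 1 can be illustrated with a minimal sketch. It assumes planar (2D) positioning, base-station coordinates already known in the world coordinate system, and UWB range measurements to each station already available; the function names `trilaterate_2d` and `record_trajectory` are hypothetical, and the offset between the UWB tag and the camera is ignored. Subtracting the first circle equation $(x-x_1)^2+(y-y_1)^2=d_1^2$ from the other two removes the quadratic terms and leaves a 2×2 linear system, solved here by Cramer's rule.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float]


def trilaterate_2d(anchors: Sequence[Point], ranges: Sequence[float]) -> Point:
    """Estimate the tag position (x, y) from three base stations and measured ranges.

    Hypothetical helper: assumes a 2D world frame and noise-free ranges.
    Subtracting circle 1 from circles 2 and 3 gives the linear system
        2(xi - x1) x + 2(yi - y1) y = d1^2 - di^2 + xi^2 - x1^2 + yi^2 - y1^2
    for i = 2, 3, which is solved with Cramer's rule.
    """
    if len(anchors) != 3 or len(ranges) != 3:
        raise ValueError("expected exactly three base stations and three ranges")
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = ranges

    # Coefficients of the linearized 2x2 system A @ [x, y] = b.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-9:
        # Collinear base stations do not determine a unique 2D position.
        raise ValueError("base stations are collinear; position is not unique")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y


def record_trajectory(
    anchors: Sequence[Point],
    samples: Iterable[Tuple[float, Sequence[float]]],
) -> List[Tuple[float, float, float]]:
    """Turn (timestamp, (d1, d2, d3)) samples into a time-stamped trajectory [(t, x, y)]."""
    return [(t, *trilaterate_2d(anchors, r)) for t, r in samples]


if __name__ == "__main__":
    # Hypothetical base-station layout in metres, in the world coordinate system.
    stations = [(0.0, 0.0), (10.0, 0.0), (0.0, 8.0)]
    # Ranges a tag at (3, 4) would measure to each station.
    ranges = [5.0, math.sqrt(65.0), 5.0]
    print(trilaterate_2d(stations, ranges))
    print(record_trajectory(stations, [(0.0, ranges), (0.1, ranges)]))
```

Real UWB ranges are noisy, so a practical system would typically use more than three stations with a least-squares solve or filter the trajectory over time; the closed-form three-station version is shown only because it matches the setup described above.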
