A Convolutional Neural Network Accelerator Architecture with Binarized Weights and Activations

A convolutional neural network technology with binarized weights and activation values, applied in the field of convolutional neural network accelerator architecture. It addresses problems such as limited computing speed, and achieves the effects of resolving read-write conflicts, saving hardware computing resources, and improving throughput.
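The summary credits binarization with saving hardware computing resources but does not say how the binarized arithmetic is carried out. A widely used technique, assumed here rather than confirmed by the excerpt, encodes +1 as bit 1 and -1 as bit 0 and then replaces each multiply-accumulate with an XNOR followed by a population count. The Python sketch below shows that equivalence. The helper names `binarize`, `pack_bits`, and `xnor_popcount_dot` are hypothetical and are not taken from the patent.

```python
from typing import List


def binarize(values: List[float]) -> List[int]:
    """Map real values to {+1, -1} with the sign function (0 maps to +1)."""
    return [1 if v >= 0 else -1 for v in values]


def pack_bits(signs: List[int]) -> int:
    """Encode +1 as bit 1 and -1 as bit 0, placing element i at bit position i."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word


def xnor_popcount_dot(a_bits: int, w_bits: int, n: int) -> int:
    """Dot product of two n-element {+1, -1} vectors computed from packed bits.

    XNOR sets a bit wherever the two signs agree. Each agreement contributes +1
    and each disagreement contributes -1, so dot = matches - (n - matches).
    """
    mask = (1 << n) - 1  # keep only the n meaningful bit positions
    matches = bin(~(a_bits ^ w_bits) & mask).count("1")
    return 2 * matches - n


# Hypothetical 3x3 window of activations and kernel weights, flattened.
acts = binarize([0.5, -1.2, 0.3, -0.7, 2.0, -0.1, 0.9, -0.4, 0.0])
weights = binarize([-0.2, -0.8, 0.6, 0.1, 1.5, -0.3, -0.9, -0.5, 0.2])
n = len(acts)
reference = sum(a * w for a, w in zip(acts, weights))  # ordinary multiply-accumulate
fast = xnor_popcount_dot(pack_bits(acts), pack_bits(weights), n)
print(fast)  # → 3
```

On an FPGA the XNOR and popcount map onto LUTs and adder trees instead of DSP multipliers. This is one plausible reason binarized designs save computing resources, but the excerpt does not state which mapping this architecture actually uses.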



Embodiment

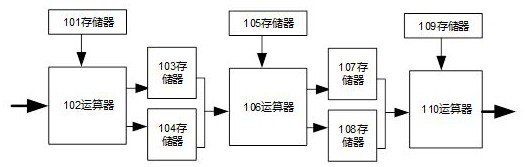

[0019] In the convolutional neural network accelerator architecture with binarized weights and activation values of this embodiment, as shown in Figure 1, the weight data are stored in memories 101, 105, and 109 in the FPGA, and the feature map data are stored in memories 103, 104, 107, and 108 in the FPGA. Computing units 102, 106, and 110 are built from logic resources. The output of memory 101 is connected to computing unit 102; the output of computing unit 102 is connected to both memory 103 and memory 104; the outputs of memories 103 and 104 are connected to computing unit 106, as is the output of memory 105; the output of computing unit 106 is connected to memories 107 and 108; the outputs of memories 107 and 108 are connected to computing unit 110, and the output of sto...
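Figure 1 connects each computing unit's output to two feature-map memories (103/104 and 107/108). The excerpt does not spell out how each pair is used. However, the topology matches ping-pong (double) buffering, a common way to avoid read-write conflicts: while one memory of a pair is being written with frame f, the next computing unit reads frame f - 1 from the other. The Python sketch below is a step-by-step model under that assumption. `run_pipeline`, the placeholder stage functions, and the even/odd buffer assignment are all hypothetical. The weight memories 101, 105, and 109 are folded into each stage function because they are only read.

```python
from typing import Callable, Dict, List, Set, Tuple


def run_pipeline(
    frames: List[int],
    stages: List[Callable[[int], int]],
    pairs: List[Tuple[str, str]],
) -> List[int]:
    """Simulate a pipeline with a ping-pong buffer pair between adjacent stages.

    Assumption: pairs[k] sits between stages[k] and stages[k + 1], and frame f is
    written to pairs[k][f % 2]. Stage s handles frame t - s at step t, so a producer
    and its consumer always touch different buffers of the pair in the same step.
    """
    if len(pairs) != len(stages) - 1:
        raise ValueError("need one buffer pair between each pair of adjacent stages")
    buffers: Dict[str, int] = {}
    outputs: List[int] = []
    last = len(stages) - 1
    for t in range(len(frames) + last):
        reads: Set[str] = set()
        writes: Dict[str, int] = {}
        for s, stage in enumerate(stages):
            f = t - s  # frame index handled by stage s in this step
            if not 0 <= f < len(frames):
                continue  # pipeline is still filling or already draining
            if s == 0:
                x = frames[f]  # first stage reads the external input
            else:
                name = pairs[s - 1][f % 2]
                reads.add(name)
                x = buffers[name]  # written by stage s - 1 in the previous step
            y = stage(x)
            if s == last:
                outputs.append(y)
            else:
                writes[pairs[s][f % 2]] = y
        # Ping-pong invariant: no buffer is both read and written in one step.
        if not reads.isdisjoint(writes):
            raise RuntimeError(f"read-write conflict at step {t}")
        buffers.update(writes)  # commit all writes at the end of the step
    return outputs


# Placeholder arithmetic standing in for computing units 102, 106, and 110.
stages = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
pairs = [("mem103", "mem104"), ("mem107", "mem108")]
print(run_pipeline([1, 2, 3, 4], stages, pairs))  # → [1, 3, 5, 7]
```

Because every pair alternates between its two memories, all three computing units can work on different frames in the same step without stalling. That behavior is consistent with the throughput and read-write-conflict effects claimed in the summary.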

