Target region extraction method for multimodal medical images based on a convolutional neural network

A convolutional-neural-network technology applied to target region extraction from multimodal medical images. It addresses the high manpower cost of manual delineation and the underutilization of the differences between MRI scans acquired with different parameters. Its aims are to reduce the time and effort required, improve accuracy, and overcome subjective variability between annotators.


Embodiment Construction

[0043] The present invention will be further described in detail below in conjunction with the embodiments, so that those skilled in the art can implement it with reference to the description.

[0044] It should be understood that terms such as "having", "comprising" and "including" used herein do not exclude the presence or addition of one or more other elements or combinations thereof.

[0045] A method for extracting a target region of a multimodal medical image based on a convolutional neural network in this embodiment includes the following steps:

[0046] 1) Construct a mask region convolutional neural network for target region extraction in multimodal medical images:

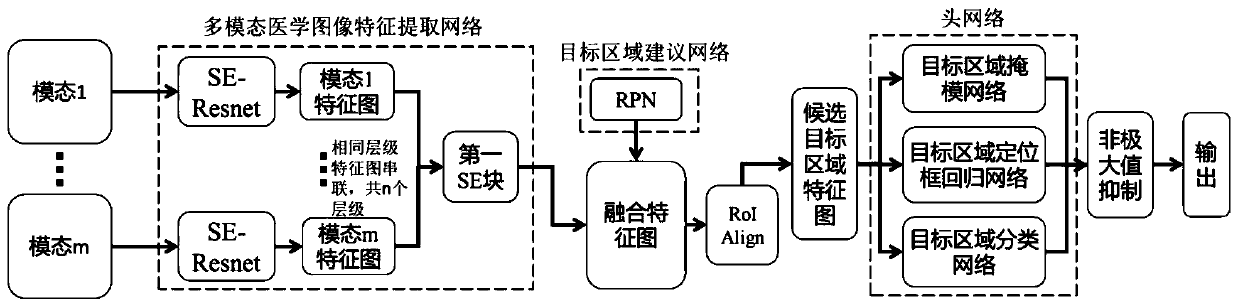

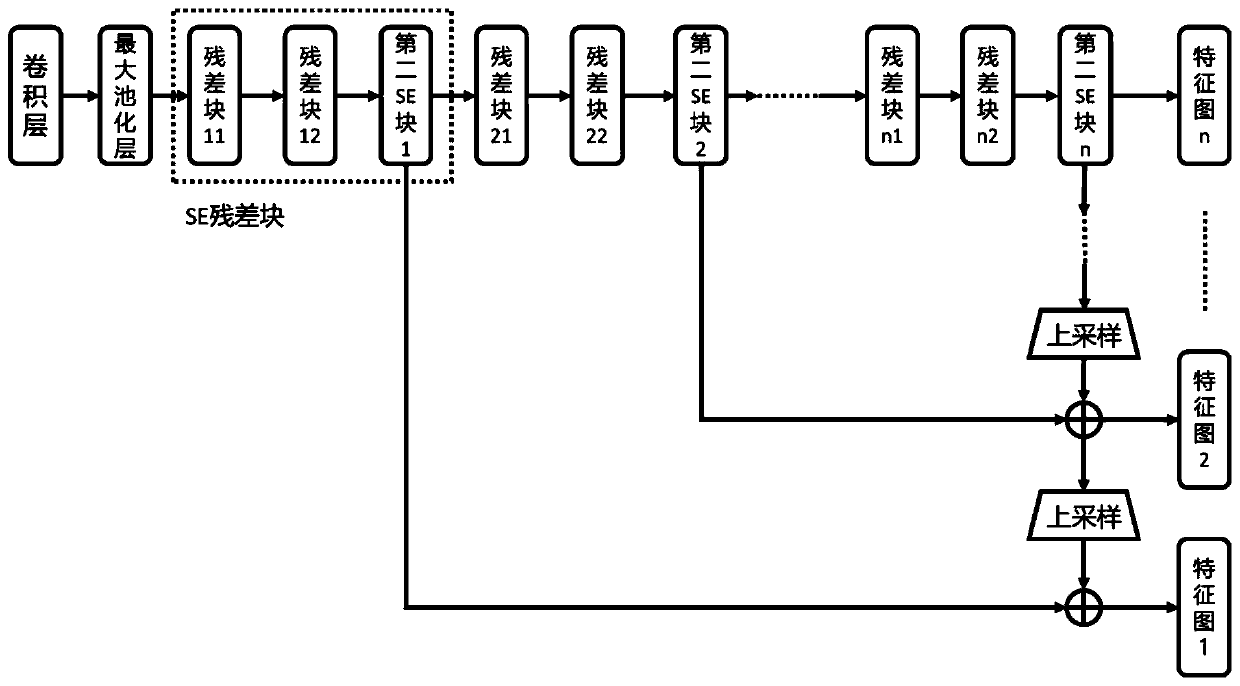

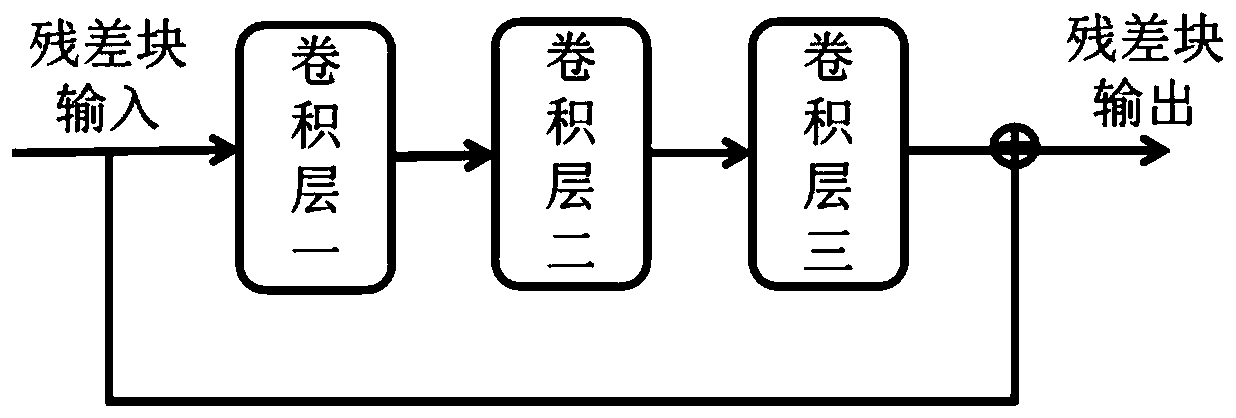

[0047] Referring to Figure 1, the mask region convolutional neural network comprises a multimodal medical image feature extraction network, a target region proposal network, and a head network. The multimodal medical image feature extraction network extracts multi-level fused feature maps of multi-modal...
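Figure 1 is not reproduced here and the text of [0047] is truncated, so the exact fusion scheme is not available. As a minimal sketch only, assuming co-registered 2-D slices of several MRI sequences (the names `t1`, `t2`, `flair` below are hypothetical) and an FPN-style top-down pathway of the kind used in common Mask R-CNN implementations, multi-level fused feature maps could be formed as follows. Average pooling stands in for the learned convolutional backbone, and the 1x1 lateral convolutions of a real feature pyramid are omitted because every level here keeps the same channel count. This is not the patent's own network.

```python
from __future__ import annotations

import numpy as np


def zscore(img: np.ndarray) -> np.ndarray:
    """Normalise one modality to zero mean and unit variance (assumed preprocessing)."""
    std = img.std()
    return (img - img.mean()) / (std if std > 0 else 1.0)


def stack_modalities(modalities: list[np.ndarray]) -> np.ndarray:
    """Stack co-registered 2-D slices, one per modality, into a (C, H, W) input."""
    return np.stack([zscore(m) for m in modalities], axis=0)


def avg_pool2(x: np.ndarray) -> np.ndarray:
    """2x2 average pooling over the spatial axes of a (C, H, W) array (H, W even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def upsample2(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling over the spatial axes."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def bottom_up(x: np.ndarray, levels: int) -> list[np.ndarray]:
    """Stand-in backbone: each level halves the resolution. Returns finest level first."""
    feats = []
    for _ in range(levels):
        x = avg_pool2(x)
        feats.append(x)
    return feats


def top_down_fuse(feats: list[np.ndarray]) -> list[np.ndarray]:
    """FPN-style fusion: upsample the coarser fused map and add it to the next finer level."""
    fused = [feats[-1]]  # the coarsest level is passed through unchanged
    for f in reversed(feats[:-1]):
        fused.append(f + upsample2(fused[-1]))
    return fused[::-1]  # finest level first


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t1, t2, flair = (rng.normal(size=(64, 64)) for _ in range(3))  # hypothetical slices
    x = stack_modalities([t1, t2, flair])  # shape (3, 64, 64)
    pyramid = top_down_fuse(bottom_up(x, levels=3))
    print([p.shape for p in pyramid])  # [(3, 32, 32), (3, 16, 16), (3, 8, 8)]
```

Stacking the modalities as input channels lets every convolutional filter see the contrast differences between sequences at each pixel. The top-down additions then give each pyramid level both fine spatial detail and coarse context, which is what the proposal and head networks consume in a standard Mask R-CNN.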
