Voice feature matching method based on convolutional neural network

The invention applies convolutional neural network and voice feature technology to the field of voice feature matching. It addresses problems such as poor software robustness, complex voice recognition systems, and low voice recognition accuracy, with the effect of enhancing software robustness and improving feature extraction efficiency.

Examples

Embodiment 1

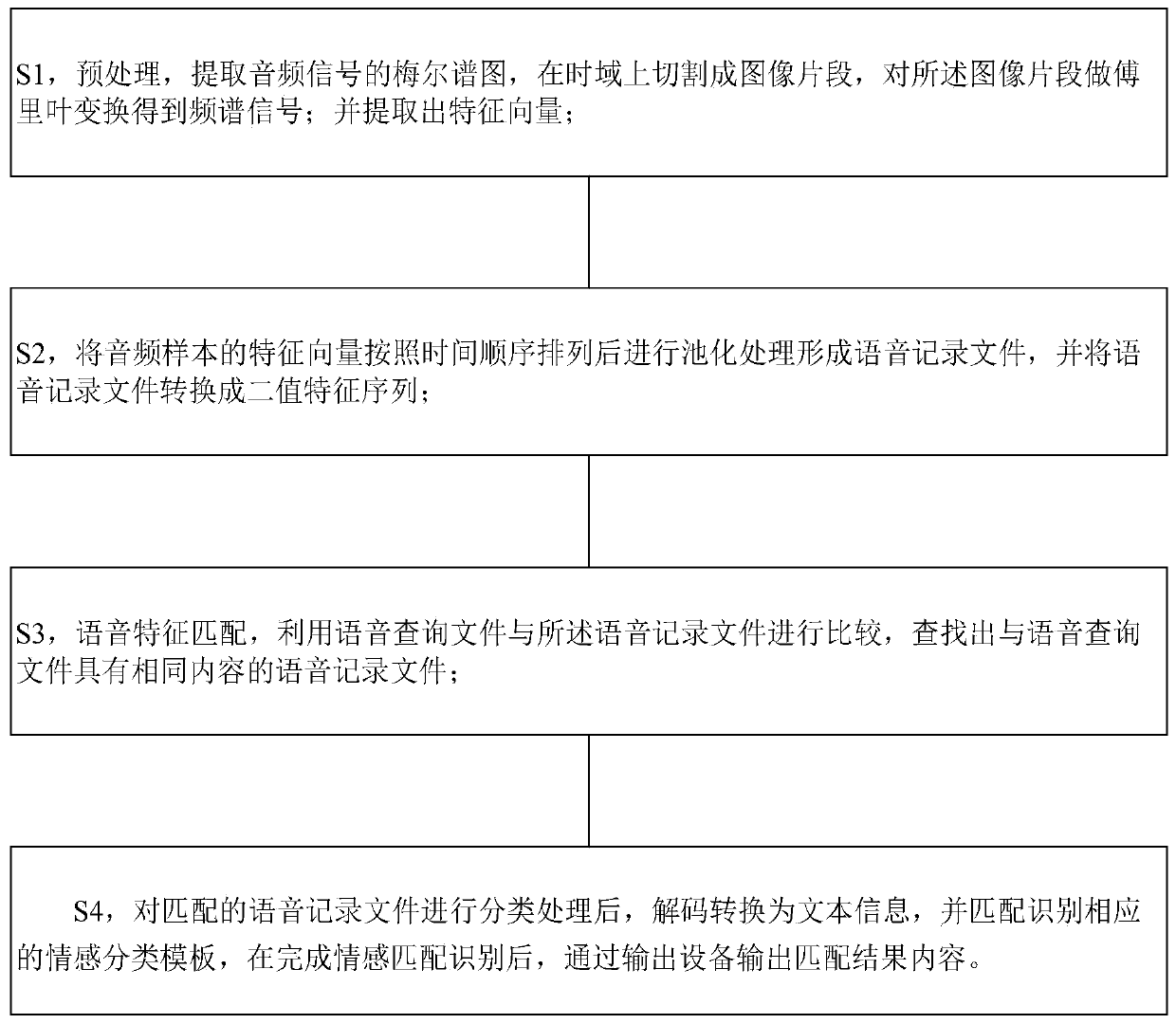

[0049] As shown in Figure 1, a speech feature matching method based on a convolutional neural network includes:

[0050] S1, preprocessing: extract the mel spectrogram of the audio signal, cut it into image segments in the time domain, apply a Fourier transform to the image segments to obtain spectral signals, and extract feature vectors;

[0051] S2, arrange the feature vectors of the audio samples in chronological order and apply pooling to form a voice record file, then convert the voice record file into a binary feature sequence;

[0052] S3, voice feature matching: compare the voice query file against the voice record files and find the voice record file whose content is the same as that of the voice query file;

[0053] S4, classify the matched voice record files, decode and convert them into text information, and match and recognize the corresponding emotion classification templates. After completing the emotion matching...
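Steps S2 and S3 can be illustrated with a minimal sketch. The source does not specify the pooling type, the binarization rule, or the similarity measure, so everything here is an assumption chosen for illustration: non-overlapping mean pooling, thresholding each pooled vector at its own mean, and Hamming distance with a hypothetical `max_distance` cutoff. The function names (`mean_pool`, `binarize`, `hamming`, `best_match`) are likewise hypothetical, and the input feature vectors stand in for whatever the network in S1 produces.

```python
from typing import Dict, List, Optional, Sequence


def mean_pool(frames: Sequence[Sequence[float]], window: int) -> List[List[float]]:
    """Average each run of `window` consecutive feature vectors (non-overlapping).

    Assumption: the source only says "pooling"; mean pooling is one option.
    Trailing frames that do not fill a whole window are dropped.
    """
    pooled = []
    for start in range(0, len(frames) - window + 1, window):
        chunk = frames[start:start + window]
        dim = len(chunk[0])
        pooled.append([sum(vec[d] for vec in chunk) / window for d in range(dim)])
    return pooled


def binarize(vectors: Sequence[Sequence[float]]) -> List[int]:
    """Flatten vectors in time order into a bit sequence.

    Assumption: a component becomes 1 if it exceeds the mean of its own vector.
    """
    bits: List[int] = []
    for vec in vectors:
        mean = sum(vec) / len(vec)
        bits.extend(1 if x > mean else 0 for x in vec)
    return bits


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the positions at which two equal-length bit sequences differ."""
    if len(a) != len(b):
        raise ValueError("bit sequences must have the same length")
    return sum(x != y for x, y in zip(a, b))


def best_match(query: Sequence[int], records: Dict[str, List[int]],
               max_distance: int) -> Optional[str]:
    """Return the name of the closest record within `max_distance`, else None."""
    best_name, best_dist = None, max_distance + 1
    for name, bits in records.items():
        if len(bits) != len(query):
            continue  # this sketch only compares sequences of equal length
        dist = hamming(query, bits)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


# Toy feature vectors in chronological order (made-up values, not real audio).
frames = [[0.1, 0.9], [0.3, 0.7], [0.8, 0.2], [0.6, 0.4]]
query_bits = binarize(mean_pool(frames, window=2))
records = {"record_a": [1, 0, 0, 1], "record_b": [0, 1, 1, 0]}
print(best_match(query_bits, records, max_distance=1))
```

A binary sequence keeps the comparison cheap, since matching reduces to counting differing bits. A practical system would also need to handle query and record sequences of different lengths, for example by sliding the query across each record, which this sketch does not attempt.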
