Dynamic video tactile feature extraction and rendering method

A feature extraction and video technology applied in the fields of virtual reality and human-computer interaction. It addresses the problems of low resolution and of methods that consider only intra-frame features while ignoring inter-frame dynamic features, with the effects of increased feature information, good user-friendliness, and richer dimensions.

Embodiment Construction

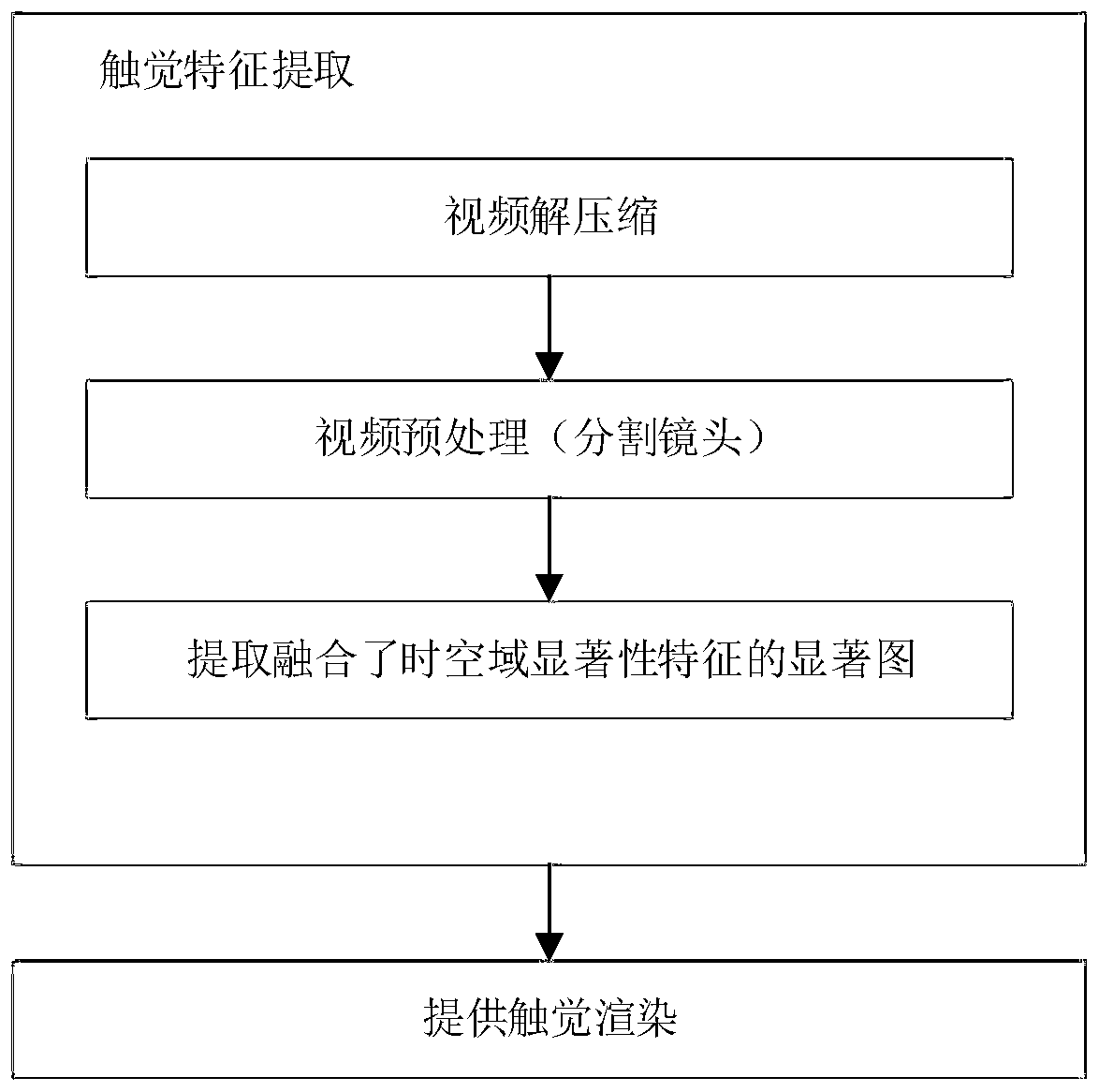

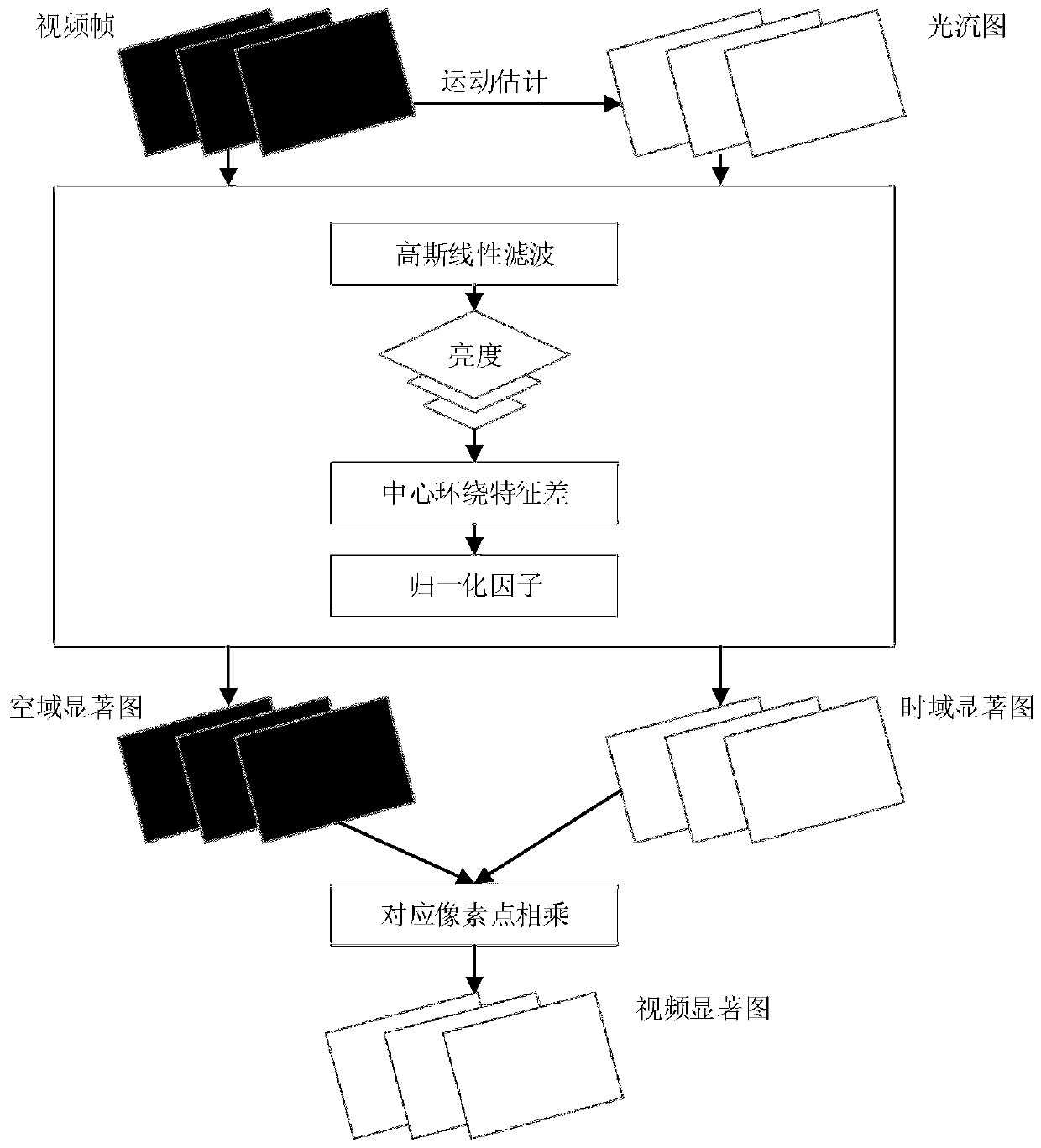

[0056] Referring to Figure 2, the method includes the following steps:

[0057] (1) Decompress and process the received video;

[0058] (2) Preprocess the video by segmenting it into shots based on inter-frame color histogram features:

[0059] (1) First, convert the RGB space to the HSI space to obtain the hue H, saturation S, and intensity I (brightness) of each pixel in the image;

[0060]

[0061] here

[0062]

[0063]
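The conversion formulas for paragraphs [0060] to [0063] are not reproduced in this copy. As a stand-in, the sketch below uses the widely used textbook RGB-to-HSI conversion; that the patent uses exactly this form is an assumption, and the function name `rgb_to_hsi` is hypothetical.

```python
import numpy as np

def rgb_to_hsi(rgb):
    """Convert an (..., 3) RGB array with values in [0, 1] to (H in degrees, S, I).

    Assumption: textbook HSI formulas, not necessarily the patent's own.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]

    # Intensity is the mean of the three channels.
    intensity = (r + g + b) / 3.0

    # Saturation is 1 - min(R, G, B) / I; defined as 0 for black pixels.
    min_c = np.minimum(np.minimum(r, g), b)
    safe_i = np.where(intensity > 0, intensity, 1.0)
    saturation = np.where(intensity > 0, 1.0 - min_c / safe_i, 0.0)

    # Hue comes from the angle theta; it is undefined for gray pixels, so use 0 there.
    num = 0.5 * ((r - g) + (r - b))
    den = np.sqrt(np.maximum((r - g) ** 2 + (r - b) * (g - b), 0.0))
    safe_den = np.where(den > 0, den, 1.0)
    theta = np.degrees(np.arccos(np.clip(num / safe_den, -1.0, 1.0)))
    hue = np.where(b <= g, theta, 360.0 - theta)
    hue = np.where(den > 0, hue, 0.0)
    return hue, saturation, intensity
```

Under these formulas, pure red, green, and blue map to hues of 0, 120, and 240 degrees, which gives a quick sanity check for any implementation.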

[0064] (2) Next, quantize the color space non-uniformly according to human color perception: the hue H space is divided into 8 parts, the saturation S space into 3 parts, and the brightness I space into 3 parts, so that the entire HSI color space is divided into 72 subspaces (8×3×3). The three color components are assigned different weights according to the sensitivity of human vision and then combined into a one-dimensional feature vector using the following formula, in which H, S, and V are the quantized hue, saturation, and brightness levels:

[0065] L=9H+3S+V
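The quantization and the formula L = 9H + 3S + V can be sketched as follows. The source gives only the number of bins (8, 3, 3), not their boundaries, so the bin edges below are hypothetical placeholders, as are the function names. With H in 0..7 and S, V in 0..2, L takes one of 72 values from 0 to 71.

```python
import numpy as np

# Hypothetical bin edges: the source states only the bin counts (8, 3, 3).
HUE_EDGES = np.array([20, 40, 75, 155, 190, 270, 295, 315])  # degrees
SAT_EDGES = np.array([0.2, 0.7])
VAL_EDGES = np.array([0.2, 0.7])

def quantize_hsv(hue_deg, sat, val):
    """Map hue (degrees) and saturation/brightness in [0, 1] to bin indices."""
    hue_deg = np.asarray(hue_deg, dtype=np.float64) % 360.0
    # np.digitize yields 0..8 for the 8 edges; index 8 (hue >= 315) wraps to
    # bin 0 so that the red range straddling 0 degrees forms a single bin.
    h = np.digitize(hue_deg, HUE_EDGES) % 8
    s = np.digitize(np.asarray(sat, dtype=np.float64), SAT_EDGES)
    v = np.digitize(np.asarray(val, dtype=np.float64), VAL_EDGES)
    return h, s, v

def color_index(h, s, v):
    """Combine the quantized levels into one index: L = 9H + 3S + V."""
    return 9 * h + 3 * s + v
```

The weights 9 and 3 make the mapping from (H, S, V) to L one-to-one, because S and V together span 3×3 = 9 values and V alone spans 3.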

[0066] (3) count the number of pixels...
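The paragraph above is cut off in this copy, so the remaining details of the step are not known. As a rough illustration only, the sketch below counts how many pixels fall into each of the 72 color bins and compares the histograms of two frames. The comparison measure (histogram intersection), the threshold value, and all function names are assumptions, not taken from the source.

```python
import numpy as np

N_BINS = 72  # 8 x 3 x 3 quantized color subspaces

def color_histogram(index_map):
    """Count pixels per color index (0..71) and normalize to sum to 1."""
    counts = np.bincount(np.asarray(index_map, dtype=np.int64).ravel(),
                         minlength=N_BINS)
    return counts / counts.sum()

def histogram_intersection(hist_a, hist_b):
    """Similarity in [0, 1]; 1 means identical normalized histograms."""
    return float(np.minimum(hist_a, hist_b).sum())

def is_shot_boundary(hist_prev, hist_next, threshold=0.5):
    """Flag a shot change when similarity drops below a hypothetical threshold."""
    return histogram_intersection(hist_prev, hist_next) < threshold
```

For example, two frames whose pixels all fall into different bins have an intersection of 0 and would be flagged as a boundary, while a frame compared with itself scores 1.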
