Method for analyzing matching degree between demand and output result based on text semantics

A semantic analysis and matching degree technique, applied to semantic tool creation, text database querying, and unstructured text data retrieval, that reduces the difficulty of the task and the time and resources it requires.

Embodiment Construction

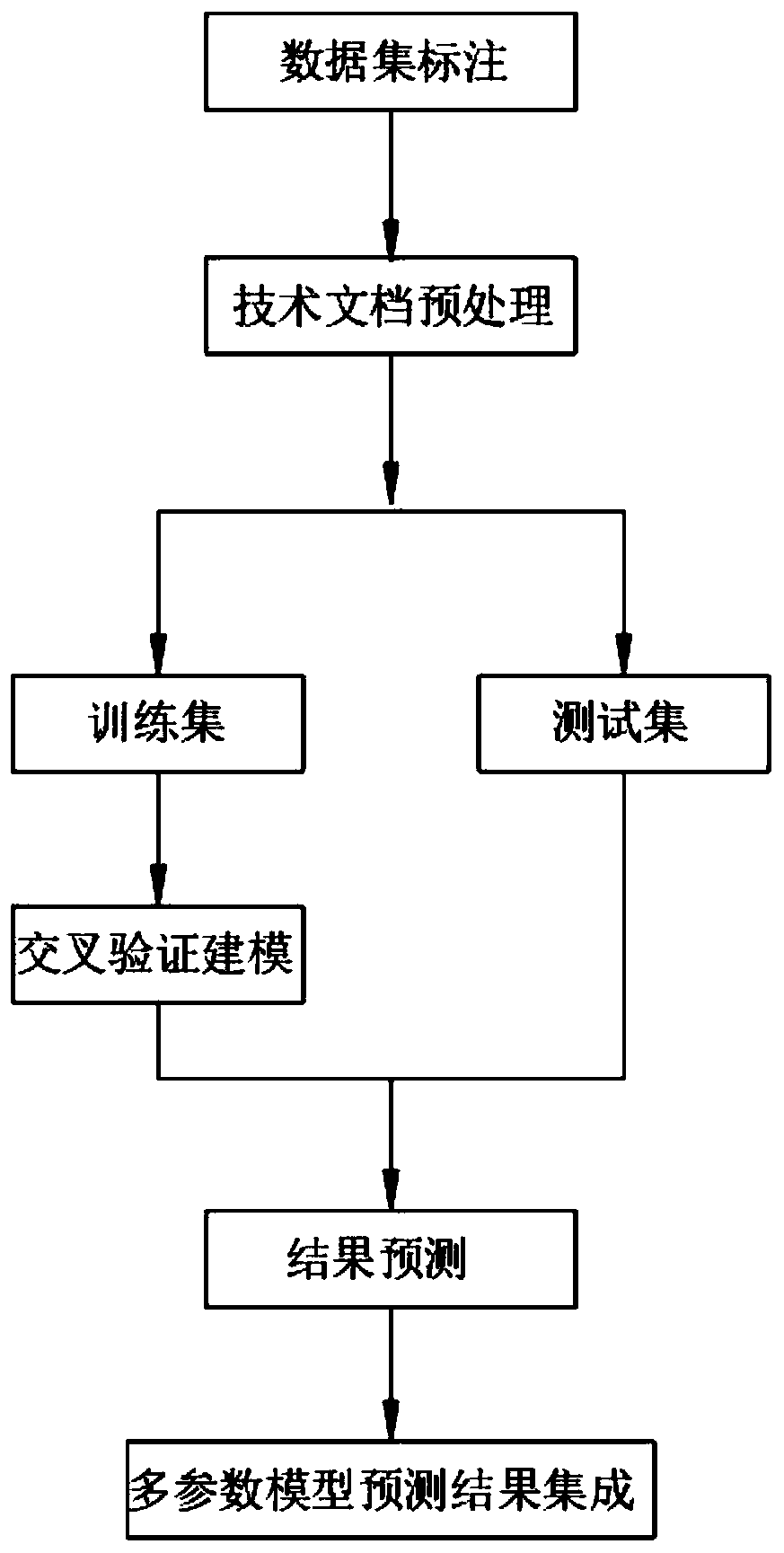

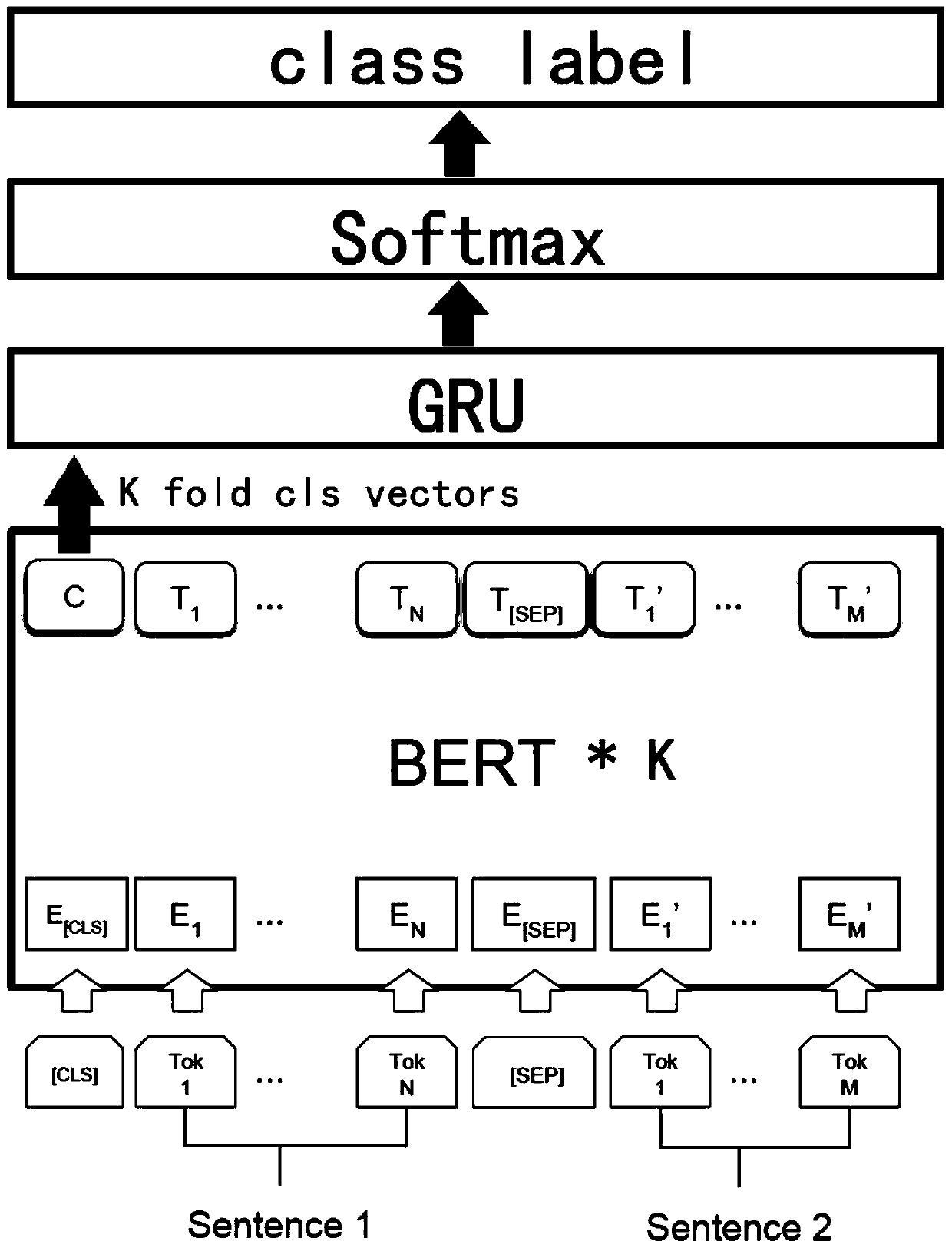

[0025] As shown in Figures 1-4, this embodiment adopts the following technical solution.

[0026] A method for analyzing the matching degree between requirements and output results based on text semantics, comprising the following steps:

[0027] Step 1. Dataset labeling: Using the text of the project requirement description, the achievement description, and the project title, compare the two projects in each pair, assess how closely they correlate and match, and assign one of four category labels. The result is a labeled dataset for computing and modeling project matching degree;
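One way to picture a single record of the labeled dataset from Step 1 is sketched below. The source does not name the four categories or specify a storage format, so the integer encoding `0..3`, the class name `LabeledPair`, and all field names are hypothetical. Pairing a requirement side with an achievement side is likewise an assumption drawn from the title of the method.

```python
from dataclasses import dataclass

# The source specifies four categories but does not name them,
# so they are encoded here as the integers 0..3 (an assumption).
NUM_CLASSES = 4


@dataclass(frozen=True)
class LabeledPair:
    """One labeled example: a requirement text paired with an achievement text."""

    requirement_title: str
    requirement_description: str
    achievement_title: str
    achievement_description: str
    label: int  # hypothetical encoding of the four-category match label

    def __post_init__(self) -> None:
        # Reject labels outside the four allowed categories.
        if not 0 <= self.label < NUM_CLASSES:
            raise ValueError(f"label must be in 0..{NUM_CLASSES - 1}, got {self.label}")


def label_histogram(pairs: list[LabeledPair]) -> list[int]:
    """Count how many examples fall into each of the four categories."""
    counts = [0] * NUM_CLASSES
    for pair in pairs:
        counts[pair.label] += 1
    return counts
```

A histogram like this is a quick check on class balance before any model is trained on the labeled pairs.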

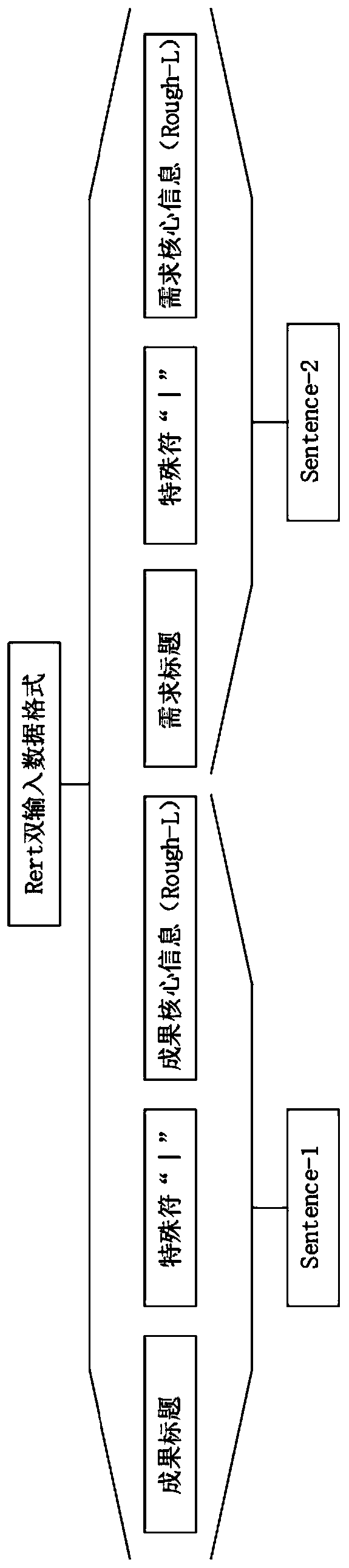

[0028] Step 2. Technical document preprocessing: Construct the input text for the BERT model. Because of the BERT model's input sequence length limit and the limits of available computing resources, use the ROUGE-L algorithm, keyed on the project name, to extract the core of the project requ...
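The source text is cut off before it describes the extraction in detail, so the following is only a minimal sketch of how a ROUGE-L score against the project name could be used to shorten a long description. It assumes whitespace tokenization, the F1 variant of ROUGE-L, and a greedy selection under a token budget; the function names and the `max_tokens` parameter are hypothetical and are not taken from the patent.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two sequences."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """ROUGE-L as the F1 of LCS-based precision and recall over token lists."""
    lcs = lcs_length(candidate, reference)
    if lcs == 0:
        return 0.0
    precision = lcs / len(candidate)
    recall = lcs / len(reference)
    return 2 * precision * recall / (precision + recall)


def select_core_sentences(title, sentences, max_tokens):
    """Keep the sentences most similar to the title that fit within max_tokens.

    Sentences are ranked by ROUGE-L F1 against the title, added greedily
    while the token budget allows, and returned in their original order.
    """
    title_tokens = title.lower().split()
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: rouge_l_f1(sentences[i].lower().split(), title_tokens),
        reverse=True,
    )
    kept, used = [], 0
    for i in ranked:
        n = len(sentences[i].split())
        if used + n <= max_tokens:
            kept.append(i)
            used += n
    return [sentences[i] for i in sorted(kept)]
```

For Chinese text, character-level or word-segmented tokens would likely replace the whitespace split, and the budget would be set from the input length the BERT model accepts.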
