Label construction method, system, device, and storage medium

A label construction method, applied in the field of image processing, that addresses problems such as cumbersome and complex operations, low work efficiency, and heavy workload, thereby simplifying the operation process and improving work efficiency.


Examples

Embodiment 1

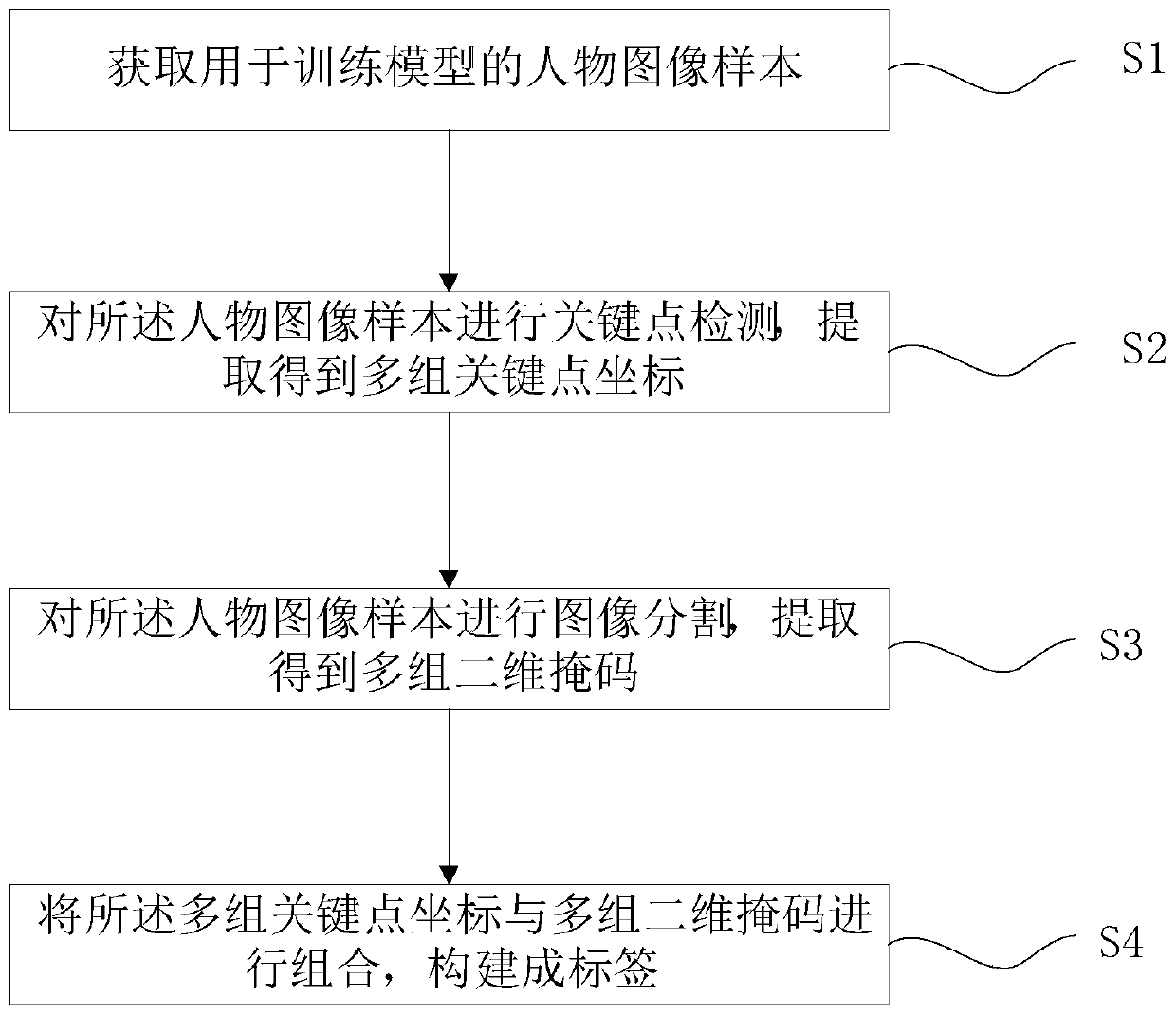

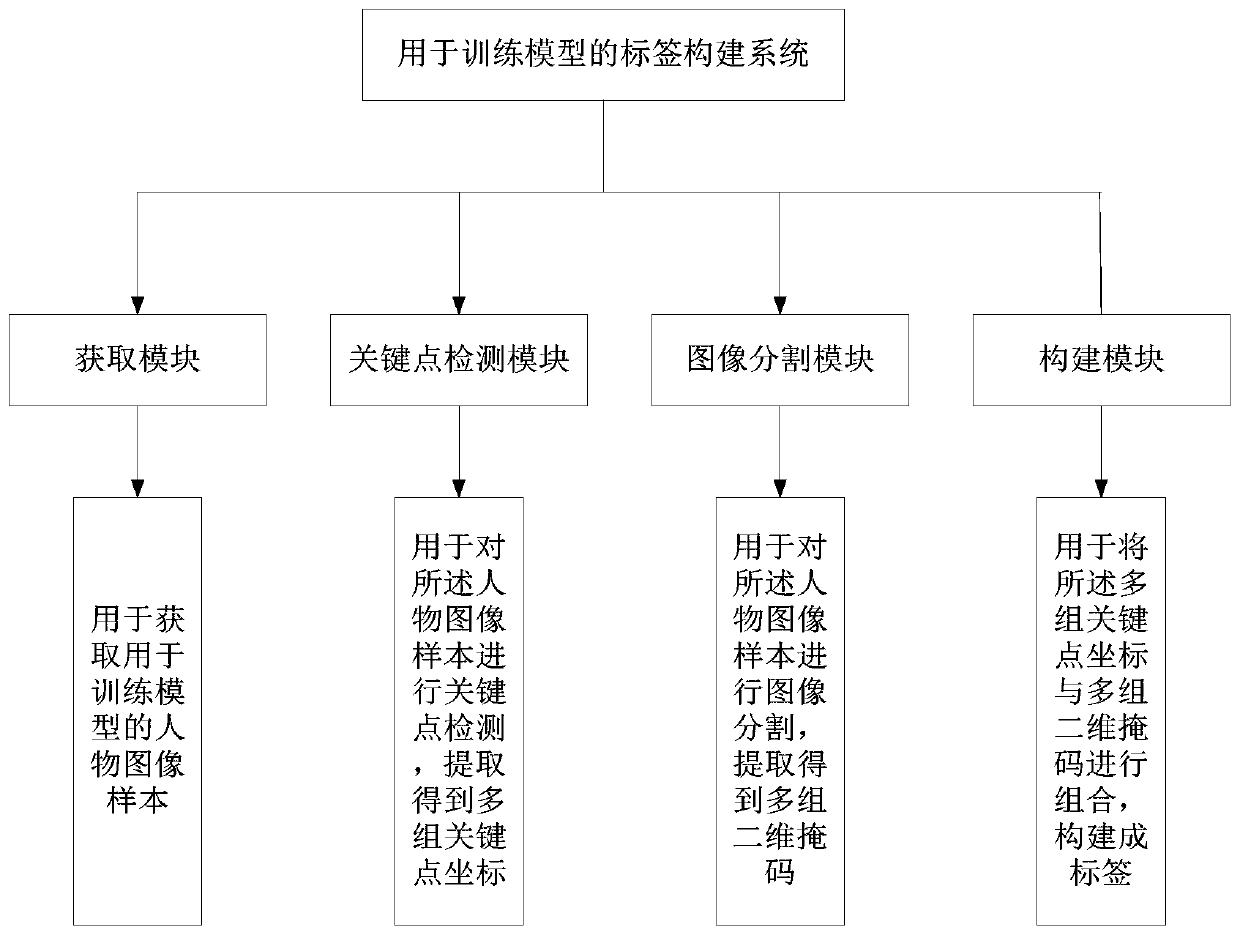

[0052] Figure 1 is a flow chart of the label construction method for training a model according to an embodiment of the present invention. As shown in Figure 1, the method includes the following steps:

[0053] S1. Obtain a person image sample for training the model;

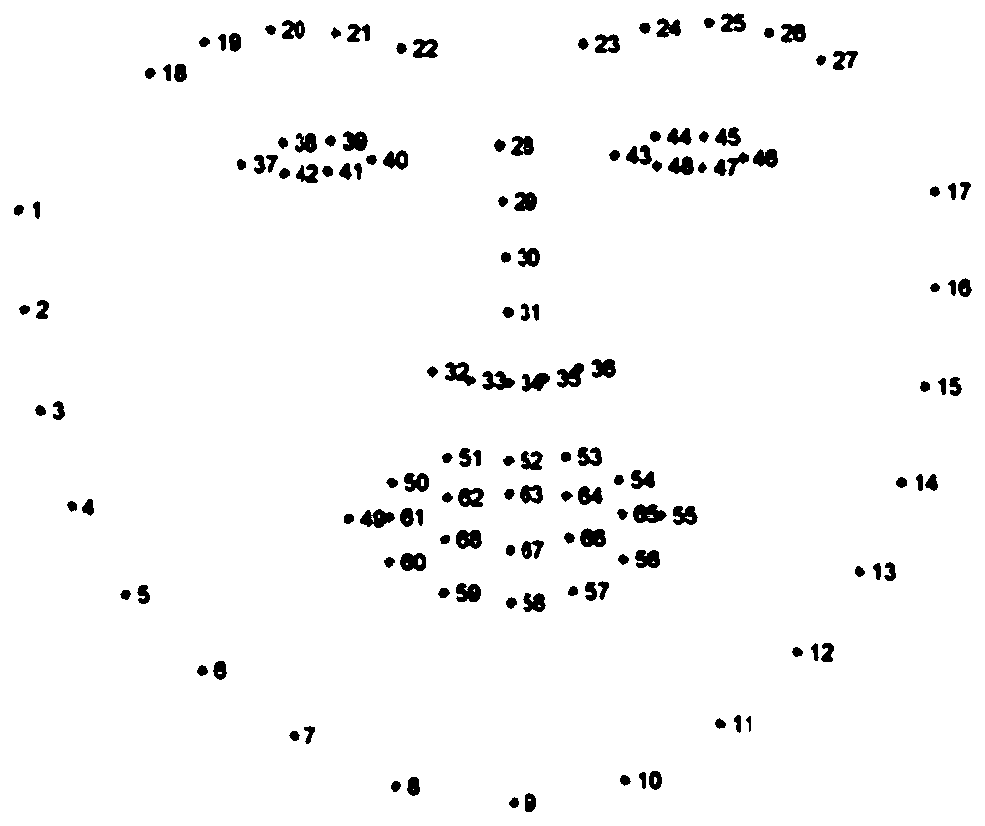

[0054] S2. Perform key point detection on the person image sample, and extract multiple sets of key point coordinates;

[0055] S3. Perform image segmentation on the person image sample, and extract multiple sets of two-dimensional masks;

[0056] S4. Combine the multiple sets of key point coordinates with the multiple sets of two-dimensional masks to form labels.
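Step S4 can be illustrated with a minimal sketch, assuming the key point sets from S2 and the masks from S3 are already available as plain Python structures. The names `Label` and `build_label`, the dictionary layout, and the toy 4x4 data are hypothetical and do not come from the patent text; the detector and segmenter themselves are not shown.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[int, int]   # (x, y) pixel coordinates
Mask = List[List[int]]    # height x width grid of 0/1 values


@dataclass
class Label:
    """Hypothetical container pairing key point sets with 2D masks (step S4)."""
    keypoints: Dict[str, List[Point]]
    masks: Dict[str, Mask]


def build_label(keypoints: Dict[str, List[Point]],
                masks: Dict[str, Mask],
                height: int, width: int) -> Label:
    """Check that S2 and S3 outputs fit the image, then combine them."""
    for name, mask in masks.items():
        if len(mask) != height or any(len(row) != width for row in mask):
            raise ValueError(f"mask {name!r} does not match the image size")
    for name, points in keypoints.items():
        for x, y in points:
            if not (0 <= x < width and 0 <= y < height):
                raise ValueError(f"key point {(x, y)} in {name!r} is outside the image")
    return Label(keypoints=dict(keypoints), masks=dict(masks))


# Toy stand-ins for the outputs of key point detection (S2) and segmentation (S3).
toy_keypoints = {"face": [(1, 1), (2, 1)], "body": [(1, 3)]}
toy_masks = {"person": [[0, 1, 1, 0],
                        [0, 1, 1, 0],
                        [0, 1, 1, 0],
                        [0, 1, 1, 0]]}
label = build_label(toy_keypoints, toy_masks, height=4, width=4)
```

Keeping the key points and masks as separate named groups, rather than merging them into one array, is only one possible design; it keeps each group easy to modify independently later.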

[0057] In this embodiment, step S2, that is, the step of performing key point detection on the person image sample and extracting multiple sets of key point coordinates, comprises the following sub-steps:

[0058] S201. Use a deep neural network to perform region detection on the image; the regions include a face region and a bod...

Embodiment 2

[0085] Embodiments of the present invention also include a training method for a generative adversarial network (GAN) model, comprising the following steps:

[0086] P1. Construct a first label using the label construction method described in Embodiment 1;

[0087] P2. Construct a training set consisting of a person image sample and the first label, where the first label is constructed from the person image sample;

[0088] P3. Use the training set to train the GAN model;

[0089] P4. Modify the first label to obtain a plurality of mutually different second labels;

[0090] P5. Input the second labels into the GAN model;

[0091] P6. Detect whether the GAN model outputs an image corresponding to each second label.
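Steps P4 to P6 can be sketched as a small loop. The visible text does not say how the first label is modified, so the translation of key points used here is purely an illustrative assumption, as are the names `shift_label`, `make_second_labels`, `check_outputs`, and `stub_generator`. The stub stands in for a trained GAN model and simply marks key point positions on a 4x4 grid.

```python
from typing import Callable, Dict, List, Optional, Tuple

Point = Tuple[int, int]
KeypointLabel = Dict[str, List[Point]]
Image = List[List[int]]


def shift_label(label: KeypointLabel, dx: int, dy: int) -> KeypointLabel:
    """Assumed modification (not from the patent): translate every key point."""
    return {name: [(x + dx, y + dy) for x, y in pts] for name, pts in label.items()}


def make_second_labels(first_label: KeypointLabel,
                       offsets: List[Tuple[int, int]]) -> List[KeypointLabel]:
    """P4: derive several second labels from the first label."""
    return [shift_label(first_label, dx, dy) for dx, dy in offsets]


def check_outputs(generator: Callable[[KeypointLabel], Optional[Image]],
                  second_labels: List[KeypointLabel]) -> List[bool]:
    """P5-P6: feed each second label to the model and record whether a
    non-empty image came back. A real check would also compare content."""
    results = []
    for second_label in second_labels:
        output = generator(second_label)
        results.append(output is not None and len(output) > 0)
    return results


def stub_generator(label: KeypointLabel) -> Image:
    """Stand-in for a trained GAN model: marks key points on a 4x4 grid."""
    image = [[0] * 4 for _ in range(4)]
    for points in label.values():
        for x, y in points:
            if 0 <= x < 4 and 0 <= y < 4:
                image[y][x] = 255
    return image


first_label = {"body": [(1, 1)]}
second_labels = make_second_labels(first_label, [(0, 0), (1, 0), (0, 1)])
outputs_ok = check_outputs(stub_generator, second_labels)
```

Passing the generator in as a callable keeps the check independent of any particular model framework.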

[0092] In this embodiment, step P4, that is, the step of modifying the first label to obtain multiple second labels that are different from each other, specifically includes:

[0093] P401. Obtai...

Embodiment 3

[0097] The embodiment of the present invention also includes an image processing method, comprising the following steps:

[0098] D1. Acquire a first image, where the first image is an image with label constraints; the constraints include a face outline, a human body key point skeleton, a human body outline, a head outline, and a background;

[0099] D2. Use the GAN model trained by the training method described in Embodiment 2 to receive the first image and process it to output a second image, where the second image is a real image corresponding to the constraints.
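One way to picture the first image in D1 is as a single map in which each pixel records which constraint it belongs to. The sketch below assumes such an encoding; the `Constraint` enum, its integer values, the painting order, and `compose_constraint_image` are hypothetical and are not described in the patent text.

```python
from enum import IntEnum
from typing import Dict, List

Mask = List[List[int]]  # height x width grid of 0/1 values


class Constraint(IntEnum):
    """Assumed integer codes for the five constraint types listed in D1."""
    BACKGROUND = 0
    BODY_OUTLINE = 1
    HEAD_OUTLINE = 2
    FACE_OUTLINE = 3
    KEYPOINT_SKELETON = 4


def compose_constraint_image(layers: Dict[Constraint, Mask],
                             height: int, width: int) -> List[List[int]]:
    """Paint each constraint mask onto a background-filled map.
    Layers with higher codes are painted later and overwrite lower ones."""
    image = [[int(Constraint.BACKGROUND)] * width for _ in range(height)]
    for kind in sorted(layers):
        if kind == Constraint.BACKGROUND:
            continue  # background is the default fill
        mask = layers[kind]
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    image[y][x] = int(kind)
    return image


# Toy 3x3 example: a vertical body outline with one skeleton point on it.
layers = {
    Constraint.BODY_OUTLINE: [[0, 1, 0],
                              [0, 1, 0],
                              [0, 1, 0]],
    Constraint.KEYPOINT_SKELETON: [[0, 0, 0],
                                   [0, 1, 0],
                                   [0, 0, 0]],
}
first_image = compose_constraint_image(layers, height=3, width=3)
```

The resulting map would then be the input handed to the trained GAN model in D2.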

[0100] In summary, the label construction method for training the model in the embodiment of the present invention has the following advantages:

[0101] By extracting labels from person images, complex person images are simplified into two-dimensional key point coordinates or two-dimensional masks, which are used to train the generative adversarial network (GAN) model; by simply modifying th...
