Robot obstacle avoidance behavior learning method based on deep learning

A deep learning method for learning robot obstacle avoidance behavior. It addresses the problems of high camera noise and poor performance in practical use, and it reduces misjudgment, improves generalization ability, and compensates for inaccurate depth measurements.

Examples

Embodiment 1

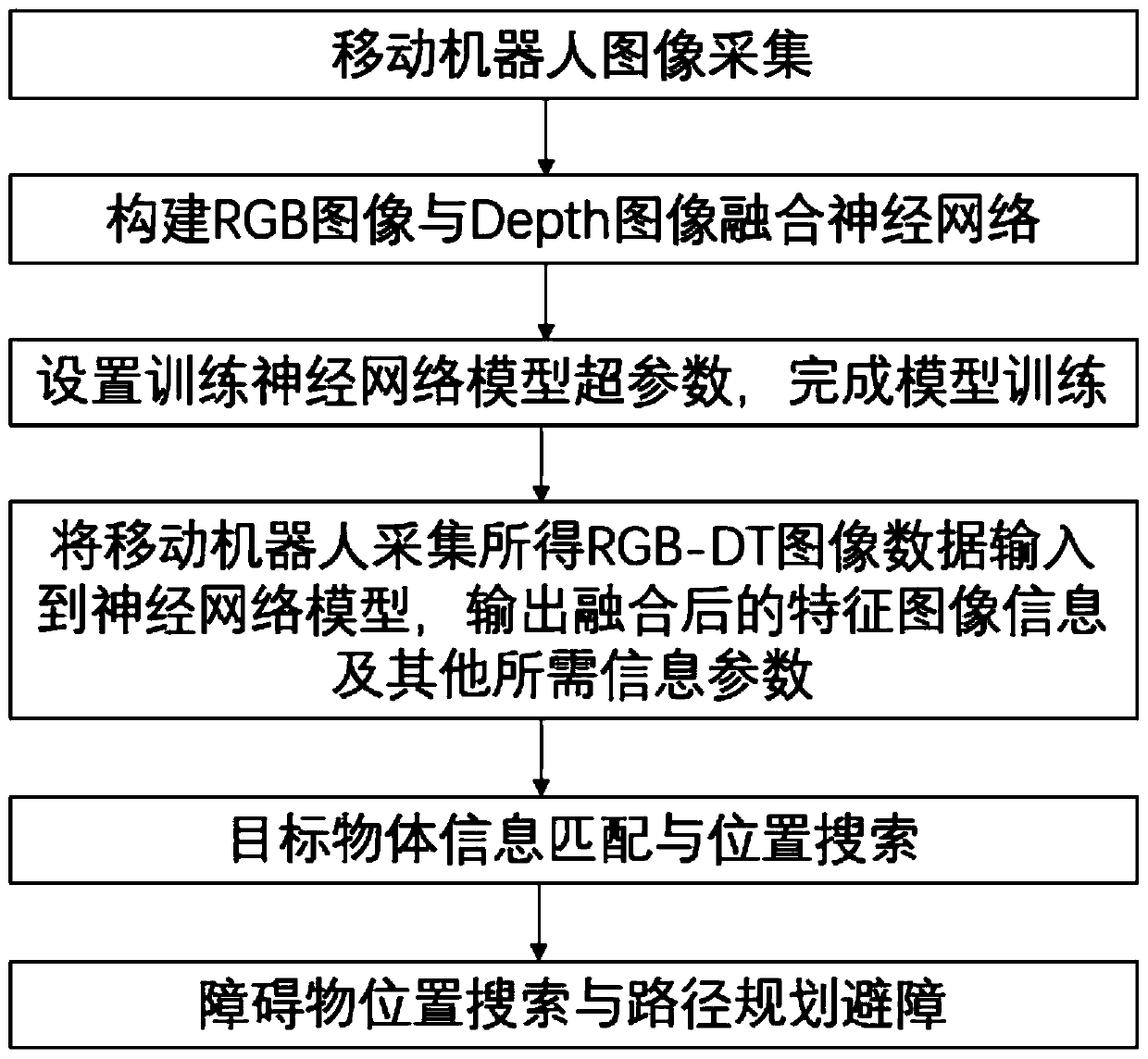

[0048] S1. Operate the robot to avoid obstacles in an unknown environment, collect RGB-D image data at a fixed frame rate with a Microsoft Kinect depth camera, and store the frames as a time series;
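A minimal sketch of the fixed-rate, time-ordered storage in step S1, assuming frames arrive from the camera driver with capture timestamps in seconds. The target rate (`fps=10.0`), the `FixedRateRecorder` and `RGBDFrame` names, and the frame formats are hypothetical; the source does not state the rate or the storage format, and reading frames from the Kinect itself is not shown.

```python
import math
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class RGBDFrame:
    seq: int          # position in the stored time series (0, 1, 2, ...)
    timestamp: float  # capture time in seconds
    rgb: Any          # colour image (format assumed, e.g. an H x W x 3 array)
    depth: Any        # depth map (format assumed, e.g. an H x W array)


@dataclass
class FixedRateRecorder:
    """Keeps at most one RGB-D frame per sampling period, in capture order."""

    fps: float = 10.0        # hypothetical target rate; the source gives none
    tolerance: float = 1e-6  # absorbs floating-point error at tick boundaries
    frames: List[RGBDFrame] = field(default_factory=list)
    _next_tick: float = field(default=-math.inf, repr=False)
    _last_seen: float = field(default=-math.inf, repr=False)

    def offer(self, timestamp: float, rgb: Any, depth: Any) -> bool:
        """Store the frame if it lands on or after the next sampling tick."""
        if timestamp <= self._last_seen:
            raise ValueError("frames must arrive in increasing time order")
        self._last_seen = timestamp
        if timestamp + self.tolerance < self._next_tick:
            return False  # too soon after the last stored frame; drop it
        self.frames.append(RGBDFrame(len(self.frames), timestamp, rgb, depth))
        period = 1.0 / self.fps
        if math.isinf(self._next_tick):
            self._next_tick = timestamp + period  # first frame anchors the grid
        else:
            # Advance along a fixed grid so sensor jitter does not accumulate.
            while self._next_tick <= timestamp + self.tolerance:
                self._next_tick += period
        return True


# Simulate one second of a 30 fps sensor; None stands in for real images.
recorder = FixedRateRecorder(fps=10.0)
for k in range(30):
    recorder.offer(k / 30.0, rgb=None, depth=None)
print(len(recorder.frames))  # 10
```

Sampling on a fixed time grid, rather than keeping every frame the driver delivers, yields evenly spaced samples even when the sensor's own frame timing jitters.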

[0049] S2. Construct a neural network model that fuses RGB images and depth images, and input the collected RGB-D image dataset into this model;

[0050] This step specifically includes:

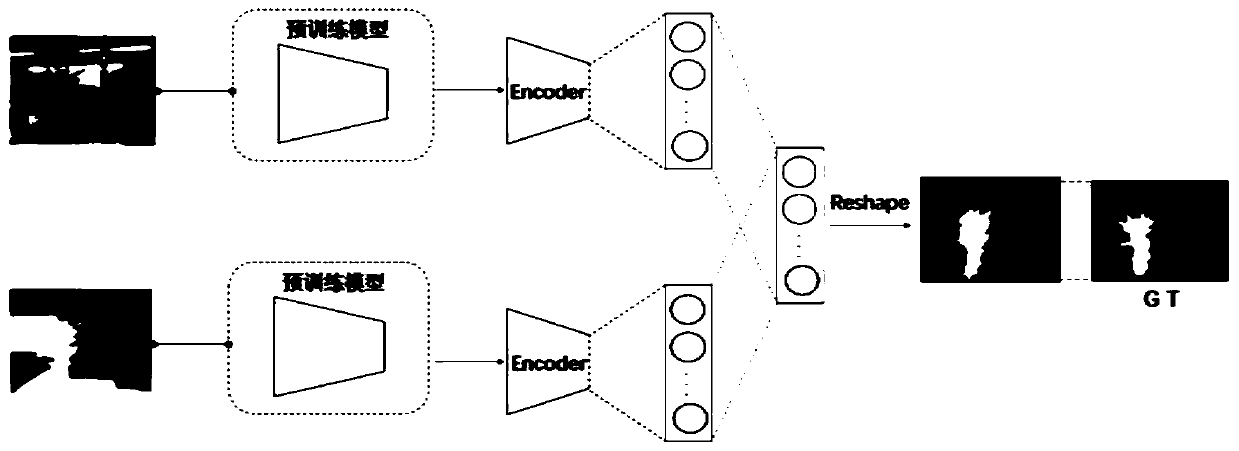

[0051] S21. Select a pretrained AlexNet model as the feature extractor for the input data: the input data is the NYU Depth Dataset V2 image dataset, and the pretrained features are obtained by passing this data through the pretrained model;
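The source does not say which AlexNet implementation or which layer supplies the pretrained features. Assuming the convolutional `features` stack of torchvision's AlexNet, with the output of its last max-pooling layer taken as the pretrained feature map, the following pure-Python sketch tracks the feature-map shape layer by layer. This determines the input shape the S22 encoder has to accept.

```python
from typing import List, Optional, Tuple

# Layer configuration of torchvision's AlexNet `features` stack (assumed here;
# the source only says "the pre-training model of AlexNet").
# Each entry: (name, out_channels or None for pooling, kernel, stride, padding)
ALEXNET_FEATURES: List[Tuple[str, Optional[int], int, int, int]] = [
    ("conv1", 64, 11, 4, 2),
    ("pool1", None, 3, 2, 0),
    ("conv2", 192, 5, 1, 2),
    ("pool2", None, 3, 2, 0),
    ("conv3", 384, 3, 1, 1),
    ("conv4", 256, 3, 1, 1),
    ("conv5", 256, 3, 1, 1),
    ("pool5", None, 3, 2, 0),
]


def out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a conv or max-pool layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def feature_shapes(height: int, width: int, in_channels: int = 3):
    """Return (layer name, (C, H, W)) after every layer of the stack."""
    c, h, w = in_channels, height, width
    shapes = []
    for name, out_channels, kernel, stride, padding in ALEXNET_FEATURES:
        h = out_size(h, kernel, stride, padding)
        w = out_size(w, kernel, stride, padding)
        if out_channels is not None:  # pooling keeps the channel count
            c = out_channels
        shapes.append((name, (c, h, w)))
    return shapes


if __name__ == "__main__":
    for name, shape in feature_shapes(224, 224):
        print(f"{name:6s} {shape}")
```

With a 224×224 input the final map is 256×6×6; for 640×480 frames (the resolution of NYU Depth Dataset V2 images) the same arithmetic gives 256×14×19. torchvision additionally applies adaptive average pooling to 6×6 before its classifier, which this sketch omits.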

[0052] S22. Reduce the dimensionality of the pretrained features with an encoder: the encoder network consists of two convolutional layers and a batch normalization (BN) layer;
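A minimal NumPy sketch of such an encoder. It assumes the layer order conv, ReLU, conv, BN, a 3×3 convolution followed by a 1×1 convolution, and hypothetical channel widths 256 → 128 → 64; the source specifies none of these choices, nor the ReLU. Batch normalization is shown in training mode, with statistics computed from the current batch.

```python
import numpy as np


def conv2d(x, w, b, stride=1, pad=0):
    """Naive 2-D convolution (cross-correlation, as in CNN libraries).

    x: (N, C_in, H, W) input, w: (C_out, C_in, k, k) kernels, b: (C_out,) biases.
    """
    n, _, h, wd = x.shape
    c_out, _, k, _ = w.shape
    xp = np.pad(x, ((0, 0), (0, 0), (pad, pad), (pad, pad)))  # zero padding
    h_out = (h + 2 * pad - k) // stride + 1
    w_out = (wd + 2 * pad - k) // stride + 1
    out = np.empty((n, c_out, h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = xp[:, :, i * stride:i * stride + k, j * stride:j * stride + k]
            # Contract over (C_in, k, k): (N, C_in, k, k) x (C_out, C_in, k, k) -> (N, C_out)
            out[:, :, i, j] = np.tensordot(patch, w, axes=([1, 2, 3], [1, 2, 3]))
    return out + b.reshape(1, -1, 1, 1)


def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization: per-channel statistics over (N, H, W)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)


def encoder(features, p):
    """Two convolutions plus one BN layer; layer order and ReLU are assumptions."""
    h = conv2d(features, p["w1"], p["b1"], pad=1)  # 3x3 conv, 256 -> 128 channels
    h = np.maximum(h, 0.0)                         # ReLU (assumed)
    h = conv2d(h, p["w2"], p["b2"])                # 1x1 conv, 128 -> 64 channels
    return batch_norm(h, p["gamma"], p["beta"])


def init_params(rng, c_in=256, c_mid=128, c_out=64):
    """Hypothetical channel widths; weights scaled by 1/sqrt(fan_in)."""
    return {
        "w1": rng.standard_normal((c_mid, c_in, 3, 3)) / np.sqrt(c_in * 9),
        "b1": np.zeros(c_mid),
        "w2": rng.standard_normal((c_out, c_mid, 1, 1)) / np.sqrt(c_mid),
        "b2": np.zeros(c_out),
        "gamma": np.ones(c_out),
        "beta": np.zeros(c_out),
    }


rng = np.random.default_rng(0)
pretrained = rng.standard_normal((4, 256, 6, 6))  # stand-in for AlexNet pool5 features
reduced = encoder(pretrained, init_params(rng))
print(pretrained.shape, "->", reduced.shape)  # (4, 256, 6, 6) -> (4, 64, 6, 6)
```

At inference time a BN layer normally uses running averages of the mean and variance gathered during training rather than batch statistics. In practice a framework layer such as PyTorch's `nn.Conv2d` and `nn.BatchNorm2d` would replace these hand-written loops.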

[0053] S23. Vectorize the feature map obtained from dimensionality reduction, and use canonical correlation analysis to fuse fea...
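The fusion rule applied after canonical correlation analysis is not given in the text above. A minimal NumPy sketch of CCA-based feature fusion, assuming one vectorized RGB feature vector and one vectorized depth feature vector per image (rows aligned), a small ridge term `reg` for numerical stability, and concatenation of the two projected views as the fusion rule. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def _inv_sqrt(s):
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(s)
    return (vecs / np.sqrt(vals)) @ vecs.T


def cca_fit(x, y, n_components, reg=1e-4):
    """Fit CCA between row-aligned feature matrices x (n, p) and y (n, q)."""
    n = x.shape[0]
    x_mean, y_mean = x.mean(axis=0), y.mean(axis=0)
    xc, yc = x - x_mean, y - y_mean
    # Ridge term keeps the covariances invertible when features outnumber samples.
    sxx = xc.T @ xc / (n - 1) + reg * np.eye(x.shape[1])
    syy = yc.T @ yc / (n - 1) + reg * np.eye(y.shape[1])
    sxy = xc.T @ yc / (n - 1)
    kx, ky = _inv_sqrt(sxx), _inv_sqrt(syy)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    u, s, vt = np.linalg.svd(kx @ sxy @ ky)
    return {
        "x_mean": x_mean,
        "y_mean": y_mean,
        "wx": kx @ u[:, :n_components],
        "wy": ky @ vt.T[:, :n_components],
        "corr": s[:n_components],
    }


def cca_fuse(model, x, y):
    """Project both views onto the canonical directions and concatenate (assumed rule)."""
    zx = (x - model["x_mean"]) @ model["wx"]
    zy = (y - model["y_mean"]) @ model["wy"]
    return np.hstack([zx, zy])


# Synthetic stand-ins for vectorized RGB and depth features sharing a 3-D latent signal.
rng = np.random.default_rng(0)
latent = rng.standard_normal((500, 3))
rgb_feats = latent @ rng.standard_normal((3, 20)) + 0.1 * rng.standard_normal((500, 20))
depth_feats = latent @ rng.standard_normal((3, 15)) + 0.1 * rng.standard_normal((500, 15))

model = cca_fit(rgb_feats, depth_feats, n_components=3)
fused = cca_fuse(model, rgb_feats, depth_feats)
print(fused.shape, np.round(model["corr"], 3))
```

Summing the two projections (`zx + zy`) is a common alternative to concatenation. When the encoder outputs are high-dimensional and there are few images, `reg` (or a further dimensionality reduction beforehand) is what keeps `sxx` and `syy` invertible.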
