Virtual-real fusion man-machine collaborative simulation method and system

A human-machine collaboration and virtual-real fusion technology, applied to the field of virtual-real fusion human-machine collaborative simulation. It addresses the problems of uncertainty, danger, extensive setup effort, and high cost, and achieves the effects of ensuring human safety, enabling more simulation, and saving cost.



Specific Embodiment 1

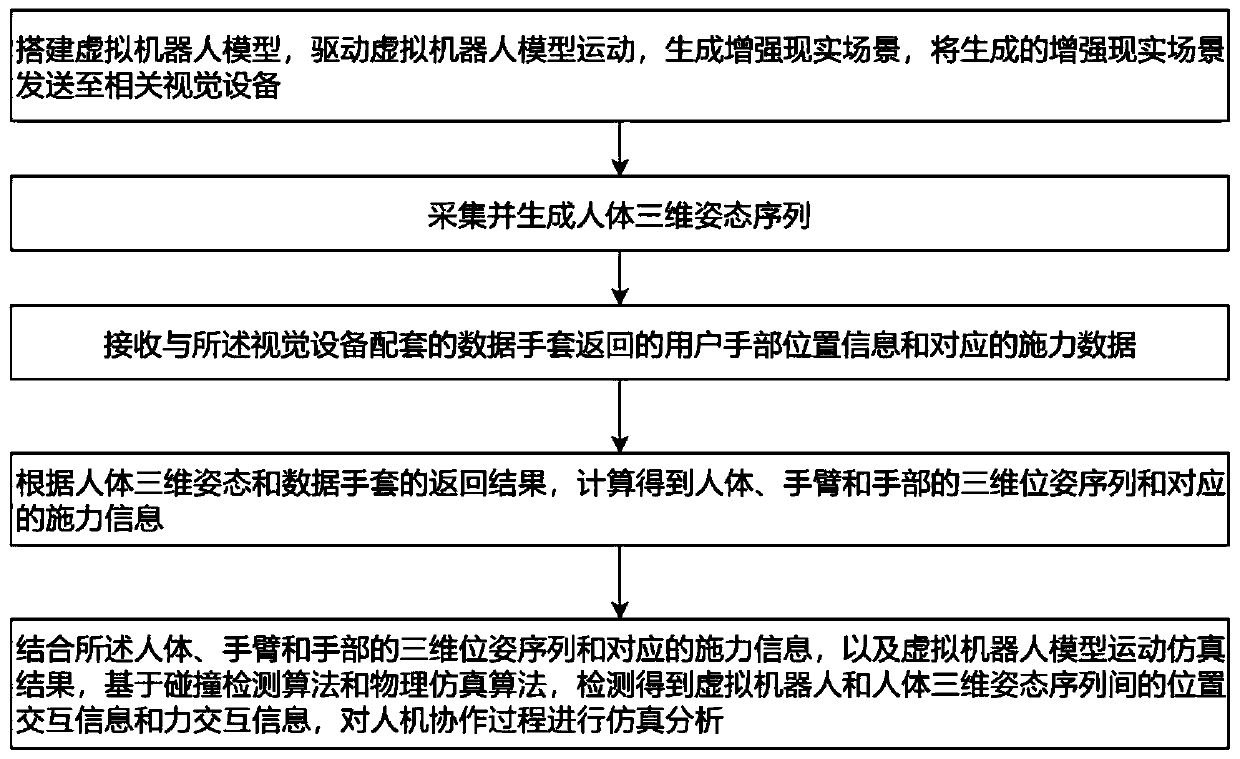

[0061] With reference to Figure 1, the present invention proposes a virtual-real fusion human-machine collaborative simulation method, comprising:

[0062] S1. Build a virtual robot model, drive the motion of the virtual robot model, generate an augmented reality scene, and send the generated scene to the associated vision device.

[0063] S2. Collect and generate a three-dimensional pose sequence of the human body.

[0064] S3. Receive the user's hand position information and the corresponding applied-force data returned by the data glove paired with the vision device.

[0065] S4. From the three-dimensional pose sequence of the human body and the data returned by the data glove, calculate the three-dimensional pose sequence of the human body, arm, and hand, together with the corresponding force information.

[0066] S5. Combine the three-dimensional pose sequence of the human body, arm, and hand and the corresponding force information with the motion simulation results of the virtual...
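Step S4 can be illustrated with a minimal Python sketch. The text does not say how the body pose sequence and the glove data are aligned, so the nearest-timestamp pairing used here is an assumption, and `BodyFrame`, `GloveSample`, `FusedFrame`, `fuse`, and `max_skew` are hypothetical names chosen for illustration only.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class BodyFrame:
    """Hypothetical: one sample of the body pose sequence from S2."""
    t: float                    # timestamp in seconds
    joints: Dict[str, Vec3]     # joint name -> 3D position


@dataclass
class GloveSample:
    """Hypothetical: one sample returned by the data glove in S3."""
    t: float
    hand_position: Vec3
    force_newtons: float


@dataclass
class FusedFrame:
    """Body, arm, and hand pose plus the matching force value (S4 output)."""
    t: float
    joints: Dict[str, Vec3]
    hand_position: Vec3
    force_newtons: float


def fuse(body: Sequence[BodyFrame],
         glove: Sequence[GloveSample],
         max_skew: float = 0.02) -> List[FusedFrame]:
    """Pair each body frame with the nearest-in-time glove sample.

    Assumes `glove` is sorted by timestamp. Frames with no glove sample
    within `max_skew` seconds are dropped (an assumed policy).
    """
    times = [g.t for g in glove]
    fused: List[FusedFrame] = []
    for frame in body:
        i = bisect_left(times, frame.t)
        # The nearest sample is either just before or just after index i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(glove)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(times[k] - frame.t))
        if abs(times[j] - frame.t) > max_skew:
            continue
        g = glove[j]
        fused.append(FusedFrame(frame.t, dict(frame.joints),
                                g.hand_position, g.force_newtons))
    return fused
```

A real system would likely interpolate between glove samples and transform both data streams into a common coordinate frame; the sketch only shows the pairing idea.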

Specific Embodiment 2

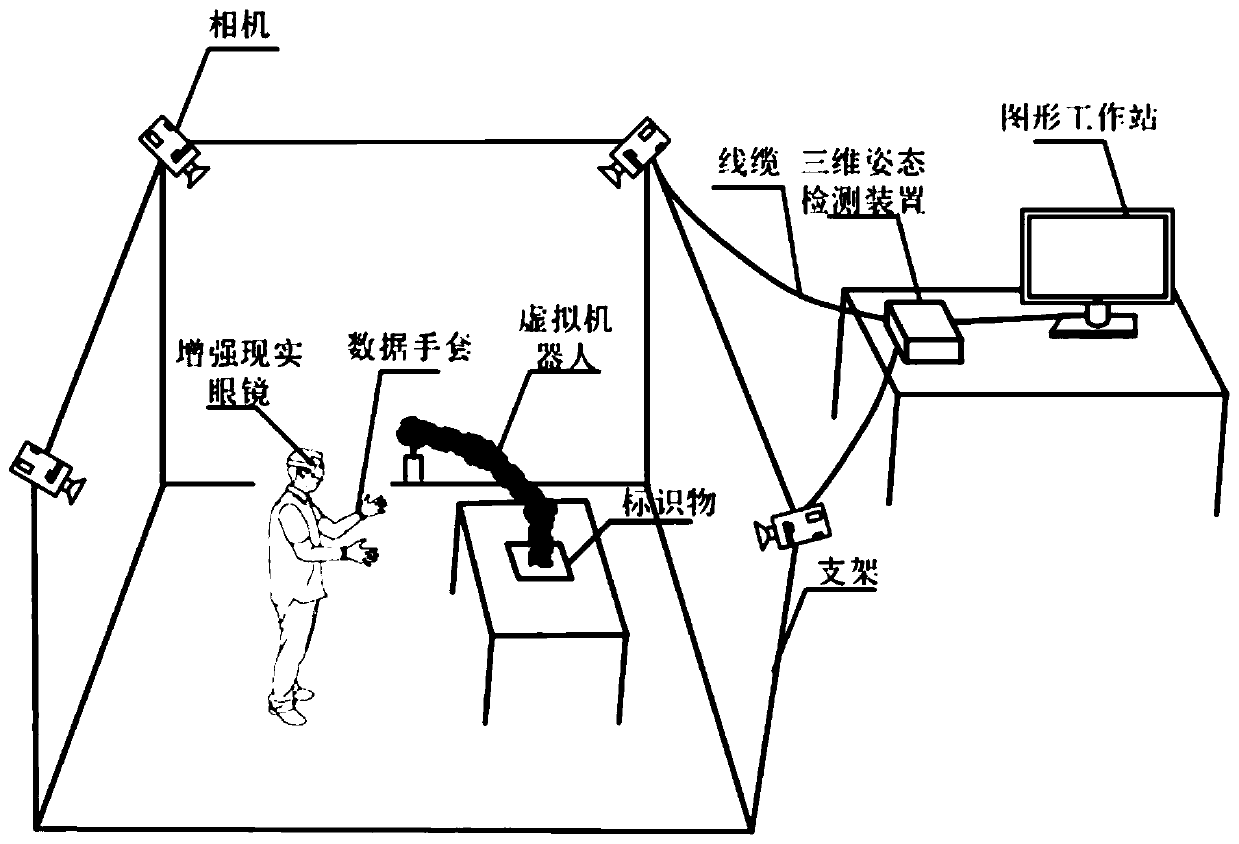

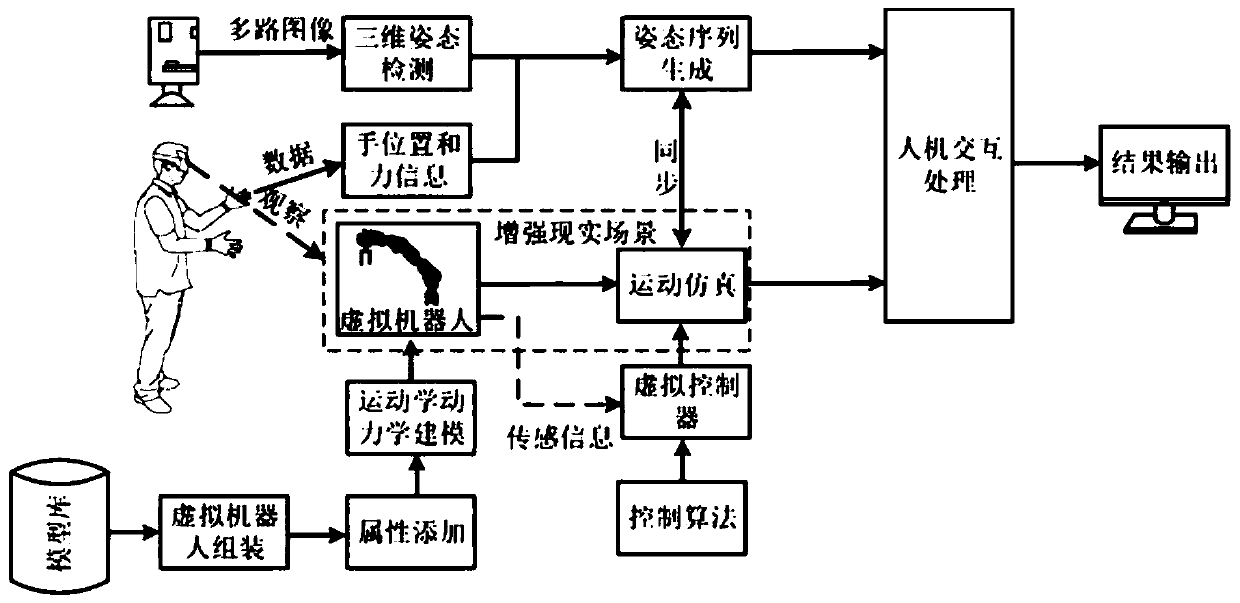

[0104] With reference to Figure 2, and based on the foregoing method, the present invention also provides a virtual-real fusion human-machine collaborative simulation system, which includes a human body three-dimensional pose acquisition device, a vision device (such as augmented reality glasses), a data glove, and a graphics workstation.

[0105] The human body three-dimensional pose acquisition device collects and generates a three-dimensional pose sequence of the human body and sends it to the graphics workstation.

[0106] The graphics workstation builds a virtual robot model, drives its motion, generates an augmented reality scene, and sends the generated scene to the vision device.

[0107] The vision device and the data glove are worn by the user and connected to the graphics workstation. The vision device displays the augmented reality scene, including the virtual robot model, sent by the graphics workstation ...
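The data flow between the four components can be sketched in Python as follows. This is only an illustration of who sends what to whom: the class names, the single-joint "robot", and the dictionary-based scene are all hypothetical placeholders, not the interfaces of the described system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class PoseCaptureDevice:
    """Hypothetical stand-in for the body pose acquisition device."""
    frames: List[Dict[str, Vec3]]

    def next_frame(self) -> Dict[str, Vec3]:
        return self.frames.pop(0)


@dataclass
class DataGlove:
    """Hypothetical stand-in: reports hand position and applied force."""
    hand_position: Vec3 = (0.0, 0.0, 0.0)
    force_newtons: float = 0.0

    def read(self) -> Tuple[Vec3, float]:
        return self.hand_position, self.force_newtons


@dataclass
class VisionDevice:
    """Hypothetical stand-in for AR glasses: records the scenes it is sent."""
    shown: List[dict] = field(default_factory=list)

    def display(self, scene: dict) -> None:
        self.shown.append(scene)


@dataclass
class GraphicsWorkstation:
    capture: PoseCaptureDevice
    glove: DataGlove
    vision: VisionDevice
    robot_joint_angle: float = 0.0  # placeholder for the virtual robot model state

    def step(self, dt: float, joint_speed: float = 0.5):
        # Drive the virtual robot model (here: a single joint at constant speed).
        self.robot_joint_angle += joint_speed * dt
        # Generate the augmented reality scene and send it to the vision device.
        scene = {"robot_joint_angle": self.robot_joint_angle}
        self.vision.display(scene)
        # Gather the human-side inputs received by the workstation.
        body = self.capture.next_frame()
        hand, force = self.glove.read()
        return scene, body, hand, force
```

Calling `step` once per rendered frame mirrors the loop implied by the text: the workstation advances the robot model, pushes a scene to the glasses, and reads back pose and glove data.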

Specific Embodiment 3

[0128] The present invention can also simulate interaction between a virtual human and a physical robot. Instead of collecting the three-dimensional pose and force information of the human, the system collects the three-dimensional pose and force information of the physical robot and combines it with the motion model of a created virtual human, using a method similar to the one described above to carry out virtual human-physical robot collaborative simulation. The pose and force information of the physical robot can be collected by the aforementioned methods, or computed from the data of the sensors and controllers installed on the physical robot. The motion model of the virtual human must be given physical attributes and motion attributes, in the same way as the aforementioned virtual robot model.
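The role swap in this embodiment can be pictured as changing only the source of the physical-side data while the simulation step stays the same. The Python sketch below illustrates that idea under assumed names: `PhysicalSource`, `HumanCapture`, `RobotTelemetry`, and `simulate_step` are hypothetical, and the returned values are made-up sample data.

```python
from typing import Dict, Protocol, Tuple

Vec3 = Tuple[float, float, float]
Sample = Tuple[Dict[str, Vec3], float]  # (3D pose, force in newtons)


class PhysicalSource(Protocol):
    """Anything that can report a 3D pose and a force for the physical side."""
    def sample(self) -> Sample: ...


class HumanCapture:
    """Hypothetical: pose capture plus data glove, as in the earlier embodiments."""
    def sample(self) -> Sample:
        return {"hand": (0.4, 0.0, 1.1)}, 3.0


class RobotTelemetry:
    """Hypothetical: pose and force computed from the robot's own sensors."""
    def sample(self) -> Sample:
        return {"end_effector": (0.6, 0.1, 0.9)}, 12.0


def simulate_step(physical: PhysicalSource, virtual_model: str) -> dict:
    """Combine one physical-side sample with the named virtual model."""
    pose, force = physical.sample()
    return {"virtual": virtual_model,
            "physical_pose": pose,
            "physical_force": force}


# Human with a virtual robot, then a physical robot with a virtual human.
first = simulate_step(HumanCapture(), "virtual_robot")
second = simulate_step(RobotTelemetry(), "virtual_human")
```

Using a structural `Protocol` keeps `simulate_step` unaware of which side is physical, which matches the text's point that the same method is reused with the roles exchanged.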

[0129] Aspects of the invention ...
