Bionic vision self-motion perception map drawing method, storage medium and equipment

A map-drawing and visual-perception technology, applied in fields such as non-electric variable control, surveying and navigation, and control/regulation systems. It addresses distortion of the two-dimensional position estimation map, position coordinates that cannot be updated in real time, and poor image-matching and positioning accuracy.


AI Technical Summary

Problems solved by technology

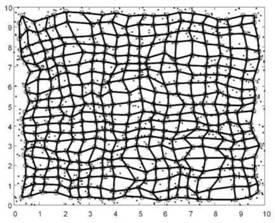

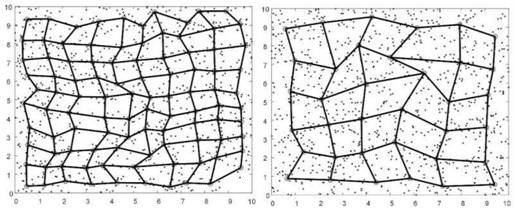

Method used

Image

Examples

Embodiment 2

[0132] Corresponding to Embodiment 1 of the present invention, Embodiment 2 of the present invention provides a computer-readable storage medium on which a computer program is stored, and when the program is executed by a processor, the following steps are implemented:

[0133] Step S1, according to the environmental information collected by the bionic visual perception system, construct an episodic memory unit representing a spatial position and an environmental landmark template, and generate a corresponding episodic memory library;

[0134] Step S2, according to the self-motion information collected by the gyroscope and the accelerometer, perform direction and displacement encoding, and update the pose perception information;

[0135] Step S3, using the episodic memory and the environmental landmark template to correct the self-motion information;

[0136] Step S4, drawing and correcting the bionic self-motion perception two-dimensional environment position estimation map ac...
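The excerpt does not say how the direction and displacement encoding of Step S2 is carried out. As a rough illustration only, the sketch below assumes plain planar dead reckoning: the gyroscope yaw rate is integrated into a heading, and the accelerometer's forward acceleration is integrated into a speed and then a displacement. The names `Pose` and `update_pose`, and the choice of units, are hypothetical and do not come from the patent text.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical planar pose; not a structure named in the patent."""
    x: float = 0.0        # metres
    y: float = 0.0        # metres
    heading: float = 0.0  # radians, counter-clockwise from the x axis
    speed: float = 0.0    # metres per second along the heading


def update_pose(pose: Pose, yaw_rate: float, forward_accel: float, dt: float) -> Pose:
    """One dead-reckoning step (assumed stand-in for Step S2).

    yaw_rate      -- gyroscope reading in rad/s
    forward_accel -- accelerometer reading along the heading in m/s^2
    dt            -- time step in seconds
    """
    # Direction: integrate the yaw rate and wrap into [0, 2*pi).
    heading = (pose.heading + yaw_rate * dt) % (2 * math.pi)
    # Displacement: integrate acceleration to speed, then speed to position.
    speed = pose.speed + forward_accel * dt
    x = pose.x + speed * math.cos(heading) * dt
    y = pose.y + speed * math.sin(heading) * dt
    return Pose(x, y, heading, speed)


if __name__ == "__main__":
    pose = Pose(speed=1.0)
    for _ in range(10):
        pose = update_pose(pose, yaw_rate=0.1, forward_accel=0.0, dt=0.1)
    print(pose)
```

Integrating raw inertial readings in this way accumulates drift over time, which is consistent with the method following Step S2 with a correction step based on stored landmarks.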

Embodiment 3

[0140] Corresponding to Embodiment 1 of the present invention, Embodiment 3 of the present invention provides a computer device, including a memory, a processor, and a computer program stored in the memory and operable on the processor. When the processor executes the program, the following steps are implemented:

[0141] Step S1, according to the environmental information collected by the bionic visual perception system, construct an episodic memory unit representing a spatial position and an environmental landmark template, and generate a corresponding episodic memory library;

[0142] Step S2, according to the self-motion information collected by the gyroscope and the accelerometer, perform direction and displacement encoding, and update the pose perception information;

[0143] Step S3, using the episodic memory and the environmental landmark template to correct the self-motion information;

[0144] Step S4, drawing and correcting the bionic self-motion perception two-dimensional envi...
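The interplay between the episodic memory library of Step S1 and the correction of Step S3 can be pictured with a small sketch. Everything concrete here is an assumption made for illustration: the landmark template is modelled as a short list of intensity values, matching uses a mean absolute difference with a fixed threshold, and the correction simply pulls the position estimate part of the way toward the stored position. The names `EpisodicMemoryUnit`, `recall`, and `correct_position` are hypothetical, and the patent's actual matching and correction rules are not given in this excerpt.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class EpisodicMemoryUnit:
    """Hypothetical pairing of a landmark template with a spatial position."""
    template: List[float]
    position: Tuple[float, float]


def mean_abs_diff(a: List[float], b: List[float]) -> float:
    """Assumed similarity measure: mean absolute difference of two templates."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def recall(library: List[EpisodicMemoryUnit], view: List[float],
           threshold: float) -> Optional[EpisodicMemoryUnit]:
    """Return the best-matching unit, or None if nothing is close enough."""
    best = min(library, key=lambda u: mean_abs_diff(u.template, view), default=None)
    if best is not None and mean_abs_diff(best.template, view) <= threshold:
        return best
    return None


def correct_position(estimate: Tuple[float, float],
                     library: List[EpisodicMemoryUnit],
                     view: List[float],
                     threshold: float = 0.1,
                     gain: float = 0.5) -> Tuple[float, float]:
    """Correct a drifting position estimate against remembered landmarks.

    If the current view matches a stored template, move the estimate toward
    the remembered position; otherwise store a new unit and keep the estimate.
    """
    unit = recall(library, view, threshold)
    if unit is None:
        library.append(EpisodicMemoryUnit(list(view), estimate))
        return estimate
    ex, ey = estimate
    mx, my = unit.position
    return (ex + gain * (mx - ex), ey + gain * (my - ey))
```

In this sketch an unfamiliar view grows the library, while a familiar one triggers a correction. Under these assumptions, the `gain` parameter controls how strongly a recognised landmark overrides the inertial estimate.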
