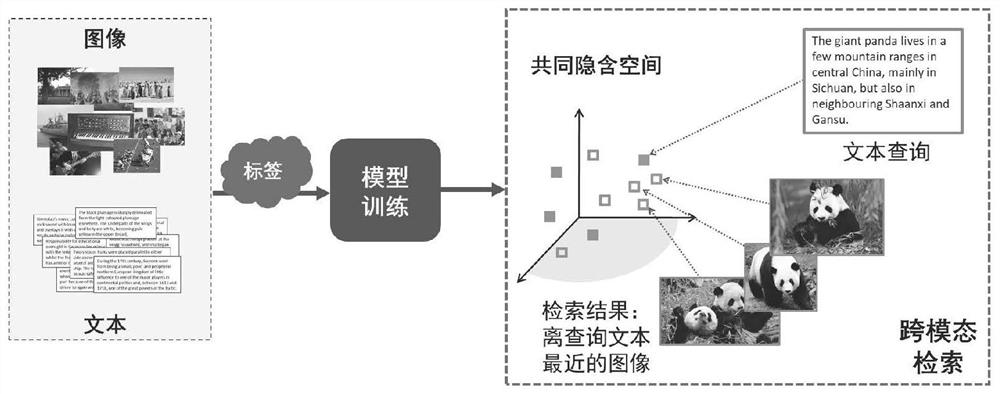

Cross-modal retrieval method and system based on semantic condition association learning

A cross-modal retrieval technology based on semantic condition association, applied in the multimedia field, addresses the lack of discriminative power in cross-modal implicit-space representations learned from noisy labels.


Embodiment Construction

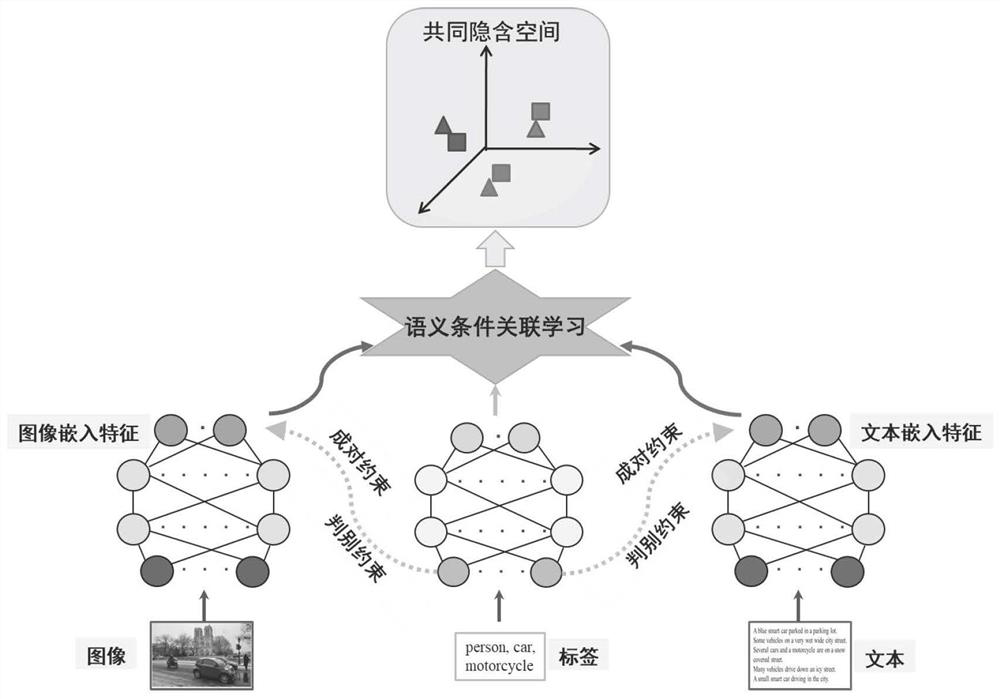

[0057] The present invention comprises the following two key points:

[0058] Key point 1: Use label information to guide deep feature learning for each modality's data. The technical effect is that each modality's feature representation preserves multi-label semantic similarity, which keeps the representations semantically discriminative and improves cross-media retrieval.
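One way to picture "preserving multi-label semantic similarity" is a loss that penalizes the gap between how similar two samples' features are and how similar their label vectors are. The sketch below is an illustration only: the excerpt does not give the patent's loss function, so the cosine-based target, the squared-error penalty, the function names `cosine` and `similarity_preserving_loss`, and the toy data are all assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def similarity_preserving_loss(features, labels):
    """Mean squared gap between feature similarity and label similarity.

    Assumed formulation (not taken from the patent): for every pair i < j,
    the cosine similarity of the multi-label vectors is the target that the
    cosine similarity of the learned features should match.
    """
    n = len(features)
    total, pairs = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            target = cosine(labels[i], labels[j])
            actual = cosine(features[i], features[j])
            total += (actual - target) ** 2
            pairs += 1
    return total / pairs if pairs else 0.0


# Hypothetical toy data: samples 0 and 1 share both labels, sample 2 shares none.
labels = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]
aligned = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]     # features agree with the labels
misaligned = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]  # features contradict the labels

loss_aligned = similarity_preserving_loss(aligned, labels)
loss_misaligned = similarity_preserving_loss(misaligned, labels)
```

In this toy setup the aligned features give a loss near zero and the misaligned ones a clearly larger value. In a real system the same kind of penalty would be applied to the output of each modality's network during training.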

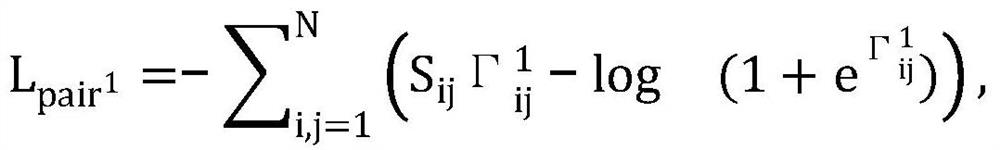

[0059] Key point 2: Establish conditional correlations between the modal feature representations and high-level semantic information. The technical effect is to mine high-level semantic correlations between different modalities effectively, reduce the impact of noisy labels on the cross-modal implicit representations, and improve the accuracy of cross-modal retrieval.
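For context on what retrieval accuracy measures, the sketch below shows the generic retrieval step once both modalities are embedded in a shared implicit space: candidates from one modality are ranked by cosine similarity to a query from the other. It does not implement the conditional association learning itself, which this excerpt does not specify; the function names and the two-dimensional embeddings are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_candidates(query, candidates):
    """Return candidate indices ordered from most to least similar to the query."""
    scores = [cosine(query, c) for c in candidates]
    return sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)


# Hypothetical embeddings: one image query and three text candidates,
# all assumed to live in the same learned space.
image_query = [0.9, 0.1]
text_candidates = [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]]

ranking = rank_candidates(image_query, text_candidates)
```

Here the second text candidate ranks first because its embedding points in nearly the same direction as the image query. The quality of such a ranking depends entirely on how well the shared space reflects semantics, which is what the two key points aim to improve.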

[0060] To make the above-mentioned features and effects of the present invention clearer and easier to understand, the following specific examples are given together with the accompanying drawings for detailed description as fol...
