Entity and relationship extraction method and system, device and medium

A technology for entity and relationship extraction, applied in neural learning methods, instruments, unstructured text data retrieval, etc. It can address problems such as low efficiency, with the effect of improving efficiency and avoiding poor model performance.

Examples

Embodiment 1

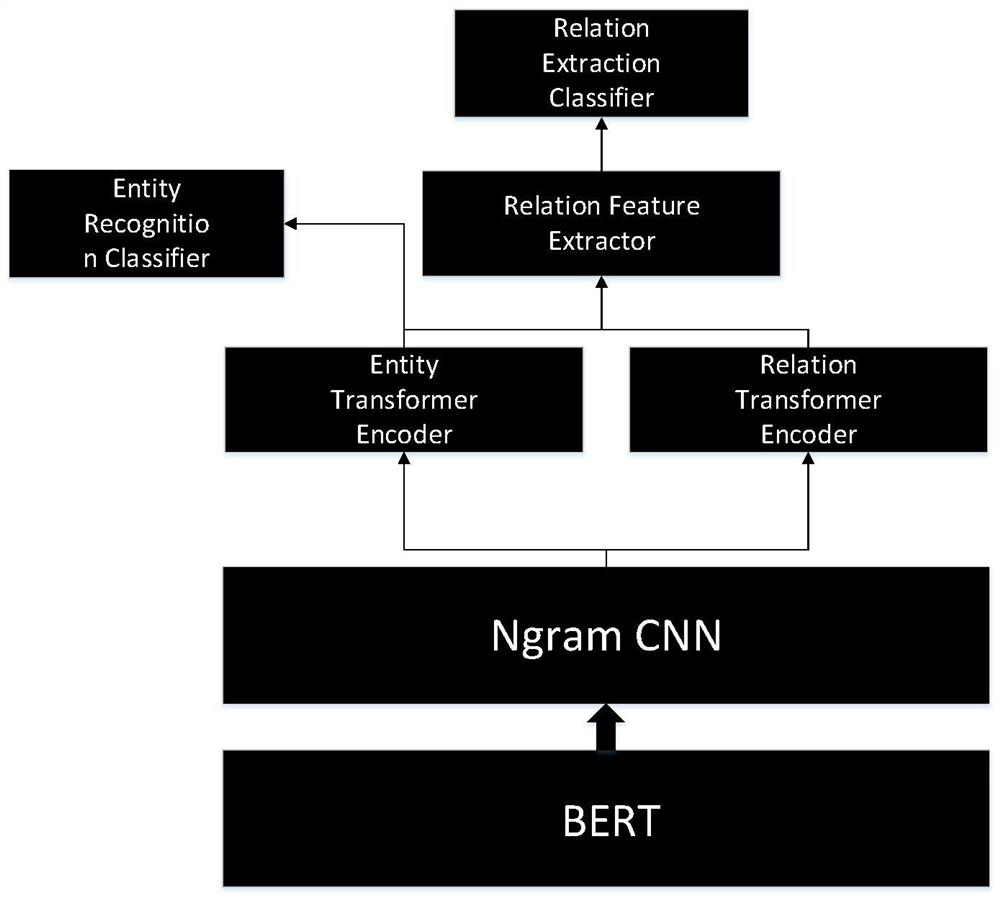

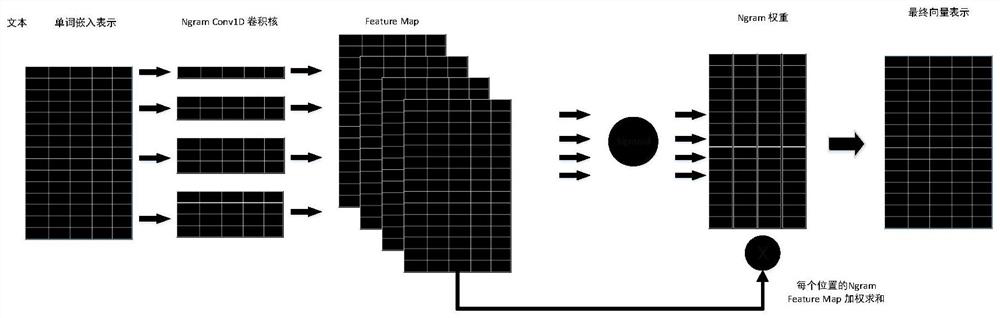

[0084] Please refer to Figures 1 and 2. Figure 1 is a schematic diagram of the principle of the entity and relationship extraction method, and Figure 2 is a schematic diagram of the Ngram CNN architecture. The method specifically includes:

[0085] Word vector representation learning:

[0086] For an input document $D = \{w_1, w_2, \ldots, w_n\}$, each word of $D$ comes from the vocabulary, $w_i \in W_v$, $i = 1, \ldots, n$, where $n$ is the number of words in the document, $v$ is the size of the vocabulary, and $W$ denotes the vocabulary space. The BERT pre-trained language model then produces the vector representation sequence of the document's word sequence, $X = \{x_1, x_2, \ldots, x_n\}$, $x_i \in \mathbb{R}^d$, $i = 1, \ldots, n$, where $x_i$ is the $d$-dimensional real-valued vector representation of the $i$-th word and $\mathbb{R}$ denotes the real number space.
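To make the notation concrete, here is a minimal sketch of the data flow from a document $D$ to the matrix $X$: words are mapped into a vocabulary $W$ of size $v$, and each word becomes a $d$-dimensional vector $x_i$. It is only an illustration under a loud assumption: a seeded random lookup table (the hypothetical `embed_document`) stands in for BERT, so unlike BERT the vectors are not contextual.

```python
import random

def build_vocab(tokens):
    """Map each distinct token to an integer id (the vocabulary W, of size v)."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def embed_document(tokens, d=8, seed=0):
    """Return X = [x_1, ..., x_n], each x_i a list of d floats.

    ASSUMPTION: a seeded random lookup table replaces BERT here, so the same
    token always receives the same vector regardless of its context.
    """
    vocab = build_vocab(tokens)
    rng = random.Random(seed)
    table = [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(len(vocab))]
    return [table[vocab[tok]] for tok in tokens]

doc = ["Mr.", "K", "was", "born", "in", "place", "D"]
X = embed_document(doc, d=8)
print(len(X), len(X[0]))  # 7 8  (n words, d dimensions)
```

Swapping the lookup table for a real pre-trained encoder changes only how each $x_i$ is computed; the shape of $X$ ($n$ vectors of dimension $d$) stays the same.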

[0087] Ngram encoding with a CNN:

[0088] For the word embedding representation matrix ...

Embodiment 2

[0124] In the second embodiment, the entity and relation extraction method of the present invention is described in detail.

[0125] For the sentence "Mr. K was born in place D, and he led a party to establish country A on a certain day in a certain year.":

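[0126] The vector representation of each token in the sentence ["K", "Xian", "Sheng", ..., "Country", "."] is obtained through the BERT model (the original sentence is Chinese and is tokenized character by character; "Xian" and "Sheng" are the two characters of "Mr.");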

[0127] The Ngram CNN encoder extracts the vector representation of each Ngram text segment headed by the current word in the sentence. For example, the vector representation of "Mr. K" is [0.3, 0.4, 0.44, ..., 0.234];
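The idea of encoding every Ngram segment headed by word $i$ can be sketched as follows. The convolution details in [0088] are truncated, so everything here is an assumption: one kernel per segment length $k = 1, \ldots,$ `max_n` (a hypothetical parameter), random weights, and a `tanh` activation. For a segment of exactly $k$ words, a width-$k$ 1-D convolution evaluated at position $i$ yields one vector, $\tanh\big(\sum_{j=0}^{k-1} W^{(k)}_j x_{i+j} + b^{(k)}\big)$.

```python
import math
import random

def make_filters(max_n, d_in, d_out, seed=0):
    """One hypothetical kernel per Ngram length k = 1..max_n.

    filters[k] = (W, b): W[j] is a d_out x d_in matrix applied to the j-th word
    of the window, b is a bias of length d_out.
    """
    rng = random.Random(seed)
    filters = {}
    for k in range(1, max_n + 1):
        W = [[[rng.uniform(-0.5, 0.5) for _ in range(d_in)] for _ in range(d_out)]
             for _ in range(k)]
        filters[k] = (W, [0.0] * d_out)
    return filters

def conv_at(X, i, W, b):
    """Width-k 1-D convolution at position i: tanh(sum_j W[j] @ x_{i+j} + b)."""
    out = list(b)
    for j, Wj in enumerate(W):
        x = X[i + j]
        for r, row in enumerate(Wj):
            out[r] += sum(w * v for w, v in zip(row, x))
    return [math.tanh(v) for v in out]

def ngram_segments(X, i, filters):
    """Vectors of the segments headed by word i, for lengths 1..max_n, cut at sequence end."""
    segs = []
    for k in sorted(filters):
        if i + k > len(X):
            break  # a segment of length k would run past the last word
        W, b = filters[k]
        segs.append(conv_at(X, i, W, b))
    return segs

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]          # toy 3-word sentence, d_in = 2
filters = make_filters(max_n=4, d_in=2, d_out=3)
print(len(ngram_segments(X, 0, filters)))  # 3  (lengths 1, 2, 3; length 4 does not fit)
print(len(ngram_segments(X, 2, filters)))  # 1  (only the unigram fits at the last word)
```

Using a separate kernel per length keeps each segment vector the same size (`d_out`) whatever the segment length, which is what the attention step over segments needs.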

[0128] The attention mechanism assigns an attention weight to each Ngram text segment headed by each character. For example, the Ngram text segments headed by the character "K" in the first position of the sentence are "K", "K Xian", "K Xian Sheng" ("Mr. K") and "K Xian Sheng Chu" (adding the first character of "born"), with attention weights of 0.1, 0.2, 0.5 and 0.2, respectively. Then calculate the vector repr...
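The paragraph is cut off before it says how the weights are used, so the following is a sketch under an assumption: the character's vector is the attention-weighted sum of its segment vectors, with weights from a softmax over dot-product scores against a hypothetical `query` vector. The scoring function is not given in the text; dot-product scoring is a swapped-in choice. The usage lines reuse the example weights 0.1, 0.2, 0.5, 0.2 on toy 2-dimensional segment vectors.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_sum(segments, weights):
    """Combine segment vectors as sum_k weights[k] * segments[k]."""
    d = len(segments[0])
    return [sum(w * seg[r] for w, seg in zip(weights, segments)) for r in range(d)]

def attention_pool(segments, query):
    """ASSUMPTION: dot-product scores against a query vector, softmax, weighted sum."""
    scores = [sum(q * v for q, v in zip(query, seg)) for seg in segments]
    weights = softmax(scores)
    return weighted_sum(segments, weights), weights

# Toy vectors for the four segments headed by "K"; weights taken from the example.
segments = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 0.0]]
pooled = weighted_sum(segments, [0.1, 0.2, 0.5, 0.2])
print([round(v, 6) for v in pooled])  # [1.0, 0.7]
```

With a zero query every score is equal, so `attention_pool` falls back to a plain average; a learned query lets the model favor the segment that most likely forms an entity, as "Mr. K" does with weight 0.5 in the example.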

Embodiment 3

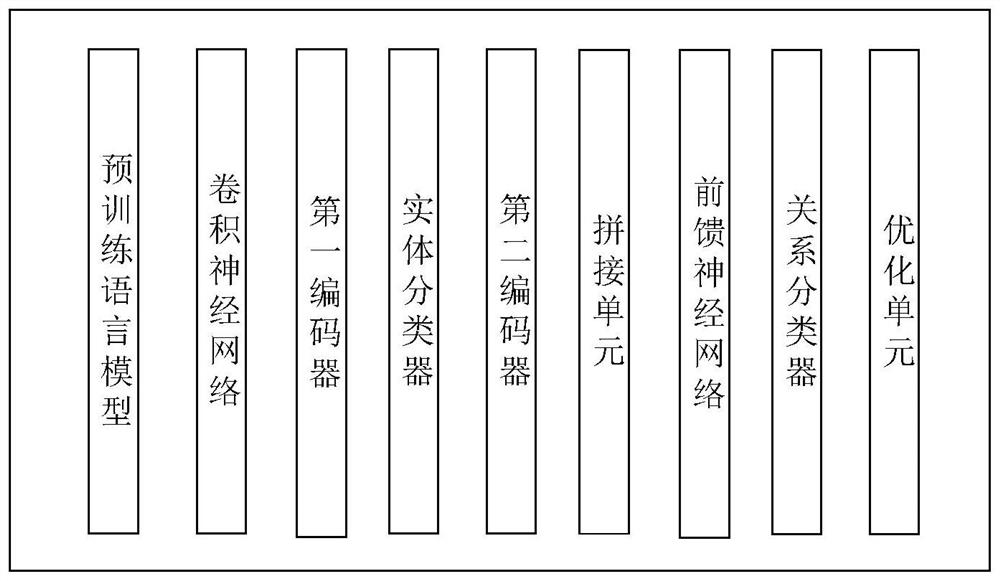

[0135] Please refer to Figure 3, which is a schematic diagram of the composition of an entity and relationship extraction system. The third embodiment of the present invention provides an entity and relationship extraction system, which includes:

[0136] a pre-trained language model, configured to process the document input to it and obtain the vector representation sequence of the document's word sequence;

[0137] a convolutional neural network, configured to process the vector representation sequence input to it and to encode the embedding representation of each word with the attention mechanism, obtaining the sequence embedding representation;

[0138] a first encoder, configured to process the sequence embedding representation input to the first encoder to obtain entity feature embedding representation information;

[0139] an entity classifier, configured to perform entity classification by embedding the entity f...
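The order in which the listed modules pass data to one another can be sketched as a simple pipeline. This is an illustration only: the class name `EntityExtractionPipeline` and its callable interfaces are hypothetical, and the relation-extraction components are not modeled because the description above is cut off after the entity classifier.

```python
from dataclasses import dataclass
from typing import Any, Callable, List

@dataclass
class EntityExtractionPipeline:
    """Hypothetical wiring of the Embodiment 3 modules, in the order listed."""
    language_model: Callable[[List[str]], Any]   # document -> vector representation sequence
    ngram_cnn: Callable[[Any], Any]              # vectors -> sequence embedding (CNN + attention)
    first_encoder: Callable[[Any], Any]          # sequence embedding -> entity feature embeddings
    entity_classifier: Callable[[Any], Any]      # entity feature embeddings -> entity labels

    def run(self, document: List[str]) -> Any:
        X = self.language_model(document)
        H = self.ngram_cnn(X)
        E = self.first_encoder(H)
        return self.entity_classifier(E)

# Toy stand-ins for each module, just to exercise the data flow.
pipeline = EntityExtractionPipeline(
    language_model=lambda doc: [[float(len(tok))] for tok in doc],
    ngram_cnn=lambda X: X,
    first_encoder=lambda H: [h[0] for h in H],
    entity_classifier=lambda E: ["ENT" if e > 1 else "O" for e in E],
)
print(pipeline.run(["Mr.", "K"]))  # ['ENT', 'O']
```

Keeping each module behind a plain callable makes it possible to swap, for example, the pre-trained language model without touching the downstream encoder or classifier.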
