Lip language recognition-based method for improving speech comprehension degree of patient with severe hearing impairment

A lip-reading technology for patients, applied in the field of speech comprehension, that addresses the limited functionality available to hearing-impaired patients and achieves the effects of improving speech comprehension, avoiding semantic loss, and improving language comprehension.


Embodiment Construction

[0053]The present invention will be further explained below in conjunction with the drawings.

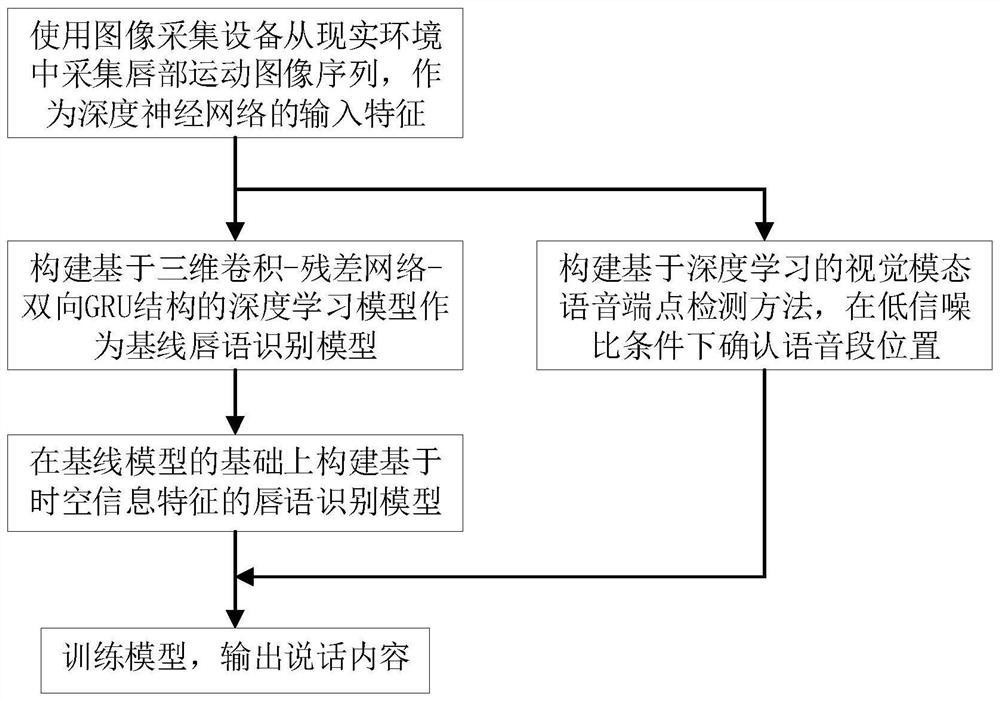

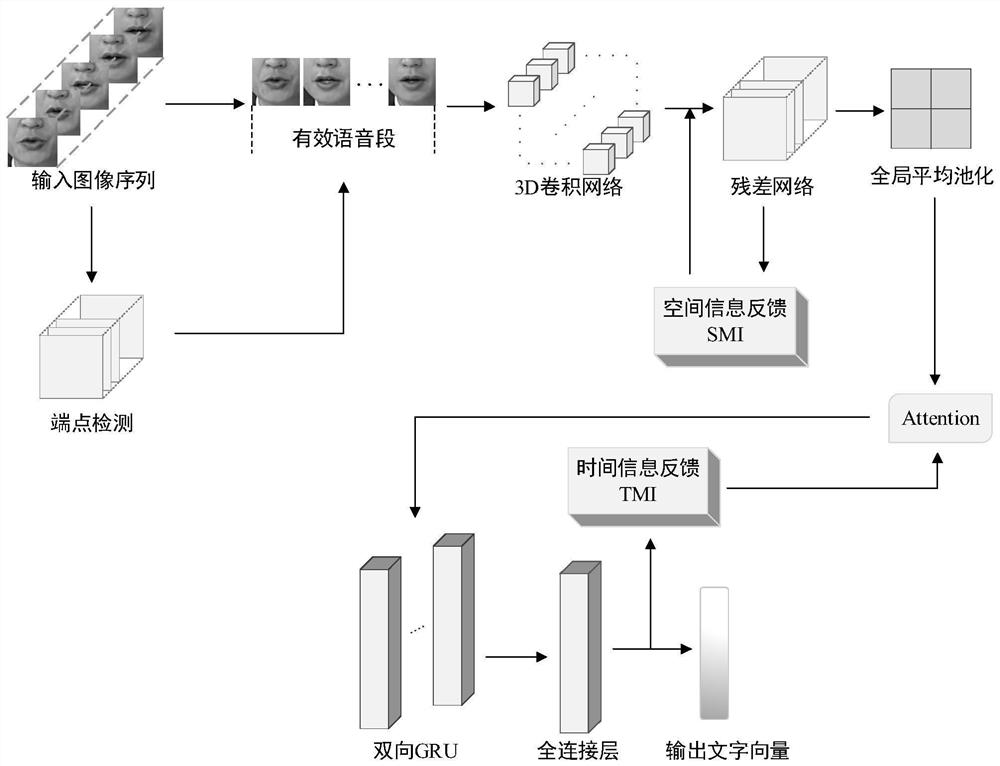

[0054]As shown in Figure 1, the invention discloses a method for improving the speech comprehension of patients with severe hearing impairment based on lip recognition, comprising the following steps:

[0055]Step (A): Use an image acquisition device to capture a sequence of lip-motion images from the real environment as the input features of the deep neural network.
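Step (A) does not specify how the captured frames are prepared for the network. The following is a minimal sketch of one way to do it. It assumes the lip region has already been cropped from each grayscale frame. The function name `frames_to_input`, the 32 x 32 target size, nearest-neighbour resizing, and per-sequence standardization are all hypothetical choices, not details from the source.

```python
import numpy as np


def frames_to_input(frames, size=(32, 32)):
    """Stack cropped lip-region frames into a normalized (T, H, W) array.

    Hypothetical preprocessing: each frame is assumed to be a 2-D grayscale
    lip crop; the source does not describe this step.
    """
    out = []
    for f in frames:
        f = np.asarray(f, dtype=np.float32)
        # nearest-neighbour resize by sampling row/column indices
        rows = (np.arange(size[0]) * f.shape[0] / size[0]).astype(int)
        cols = (np.arange(size[1]) * f.shape[1] / size[1]).astype(int)
        out.append(f[np.ix_(rows, cols)])
    seq = np.stack(out)  # shape (T, H, W): time, height, width
    # zero-mean, unit-variance over the whole sequence (guard against std == 0)
    std = seq.std()
    return (seq - seq.mean()) / (std if std > 0 else 1.0)
```

Normalizing over the whole sequence, rather than frame by frame, keeps the relative brightness changes between frames. Those changes carry part of the lip-motion signal.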

[0056]Step (B): Construct a deep-learning-based visual-modality speech endpoint detection method to locate speech segments under low signal-to-noise-ratio conditions. The method uses key-point detection to estimate the motion state and relative position of the lips, and builds a model on this basis to determine whether a given segment is speech, as follows:

[0057]Step (B1): Construct a multi-scale neural network model based on depthwise separable convolution as the key-point detection model, the...
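Step (B1) names depthwise separable convolution and a multi-scale design but gives no layer details. The following NumPy sketch shows only the generic building block, not the patented architecture:

- A per-channel (depthwise) k x k filter.
- A 1x1 (pointwise) channel mix.
- Parallel branches with different kernel sizes, concatenated to form a multi-scale block.

The function names, the ReLU activation, the 'same' padding, and the branch layout are assumptions.

```python
import numpy as np


def depthwise_conv2d(x, kernels):
    """Per-channel 2-D cross-correlation (as in DL frameworks).

    x: (C, H, W) feature map; kernels: (C, k, k), one filter per channel.
    Uses 'same' zero padding and stride 1; k is assumed odd.
    """
    C, H, W = x.shape
    k = kernels.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding on H and W only
    out = np.zeros((C, H, W))
    for i in range(k):
        for j in range(k):
            # each channel is filtered only by its own kernel
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + H, j:j + W]
    return out


def pointwise_conv2d(x, weights):
    """1x1 convolution that mixes channels; weights: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weights, x)


def ds_conv(x, dw_kernels, pw_weights):
    """Depthwise separable convolution followed by ReLU (assumed activation)."""
    return np.maximum(pointwise_conv2d(depthwise_conv2d(x, dw_kernels), pw_weights), 0.0)


def multiscale_block(x, branches):
    """Apply parallel DS-conv branches (e.g. 3x3 and 5x5) to the same input
    and concatenate their outputs along the channel axis."""
    return np.concatenate([ds_conv(x, dw, pw) for dw, pw in branches], axis=0)


def param_counts(c_in, c_out, k):
    """Weights (biases ignored) of a standard k x k conv vs. a DS conv."""
    standard = c_in * c_out * k * k
    separable = c_in * k * k + c_in * c_out
    return standard, separable
```

The usual motivation for this factorization is cost. For 16 input channels, 32 output channels and a 3x3 kernel, `param_counts(16, 32, 3)` returns `(4608, 656)`, so the separable form uses roughly one seventh of the weights. That saving matters for a model meant to run on lip video in real time.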
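Step (B) describes the endpoint decision only at a high level: key points give the lips' motion state and relative position, and a model then decides speech versus non-speech. The following is a hedged illustration that swaps the learned model for a simple threshold heuristic. It assumes four lip key points per frame, in a hypothetical order, as a key-point detector would supply them. The sketch works in four stages:

1. Compute a mouth-opening ratio per frame: lip height divided by mouth width, which makes it insensitive to face scale.
2. Mark frames where that ratio changes by more than a threshold.
3. Merge active runs separated by short gaps.
4. Drop runs too short to be speech.

All names and threshold values are illustrative assumptions.

```python
import numpy as np


def mouth_opening(kps):
    """kps: (T, 4, 2) array with an assumed key-point order per frame:
    [left corner, right corner, upper-lip centre, lower-lip centre]."""
    kps = np.asarray(kps, dtype=float)
    width = np.linalg.norm(kps[:, 1] - kps[:, 0], axis=1)
    height = np.linalg.norm(kps[:, 3] - kps[:, 2], axis=1)
    return height / np.maximum(width, 1e-6)  # scale-invariant opening ratio


def detect_speech_segments(kps, motion_thr=0.02, min_len=3, max_gap=2):
    """Return speech segments as (start, end) frame indices, end exclusive.

    Threshold heuristic standing in for the learned model of Step (B).
    """
    ratio = mouth_opening(kps)
    # frame-to-frame change in mouth opening; the first frame gets 0
    motion = np.abs(np.diff(ratio, prepend=ratio[0]))
    active = motion > motion_thr

    # collect runs of active frames as [start, end) intervals
    segs, start = [], None
    for t, a in enumerate(active):
        if a and start is None:
            start = t
        elif not a and start is not None:
            segs.append([start, t])
            start = None
    if start is not None:
        segs.append([start, len(active)])

    # merge runs separated by at most max_gap inactive frames
    merged = []
    for s, e in segs:
        if merged and s - merged[-1][1] <= max_gap:
            merged[-1][1] = e
        else:
            merged.append([s, e])

    # drop runs too short to be speech
    return [(s, e) for s, e in merged if e - s >= min_len]
```

Consider 30 frames in which the mouth opens and closes on alternate frames from frame 10 to frame 19. For that input, `detect_speech_segments` returns `[(10, 20)]`. A still mouth yields no segments. A trained classifier over the same key-point features, as the method describes, would replace the fixed `motion_thr` rule and could cope better with non-speech lip movement.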


© 2025 PatSnap. All rights reserved.