36 results about "Speech comprehension" patented technology

Speech comprehension starts with identifying the speech signal against an auditory background and transforming it into an abstract representation, a step also called decoding. Speech sounds are perceived as phonemes, the smallest units of sound that distinguish one word from another.

Semantic object synchronous understanding implemented with speech application language tags

Active · US7200559B2 · Tags: Sound input/output, Speech recognition, Speech comprehension, Application programming interface

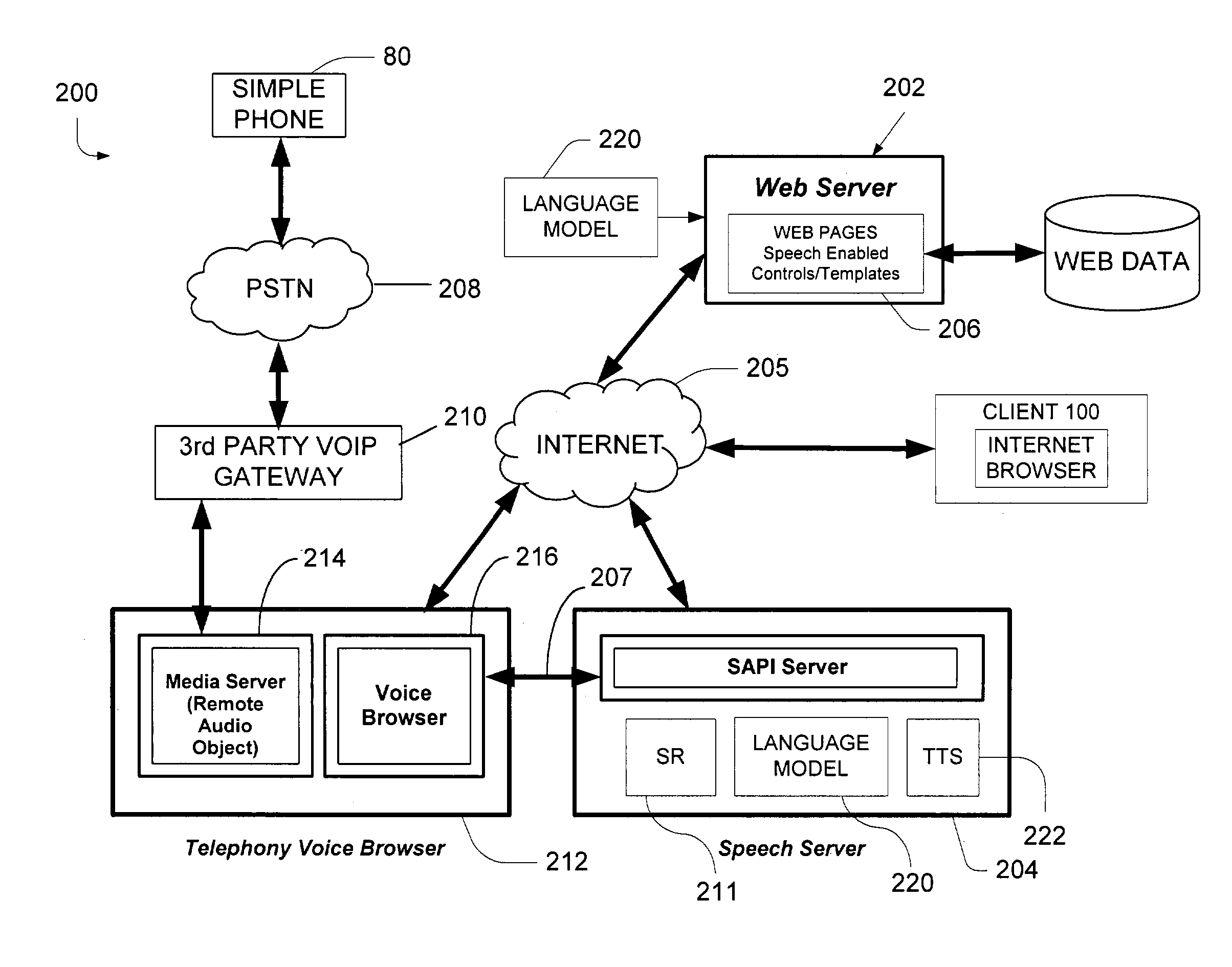

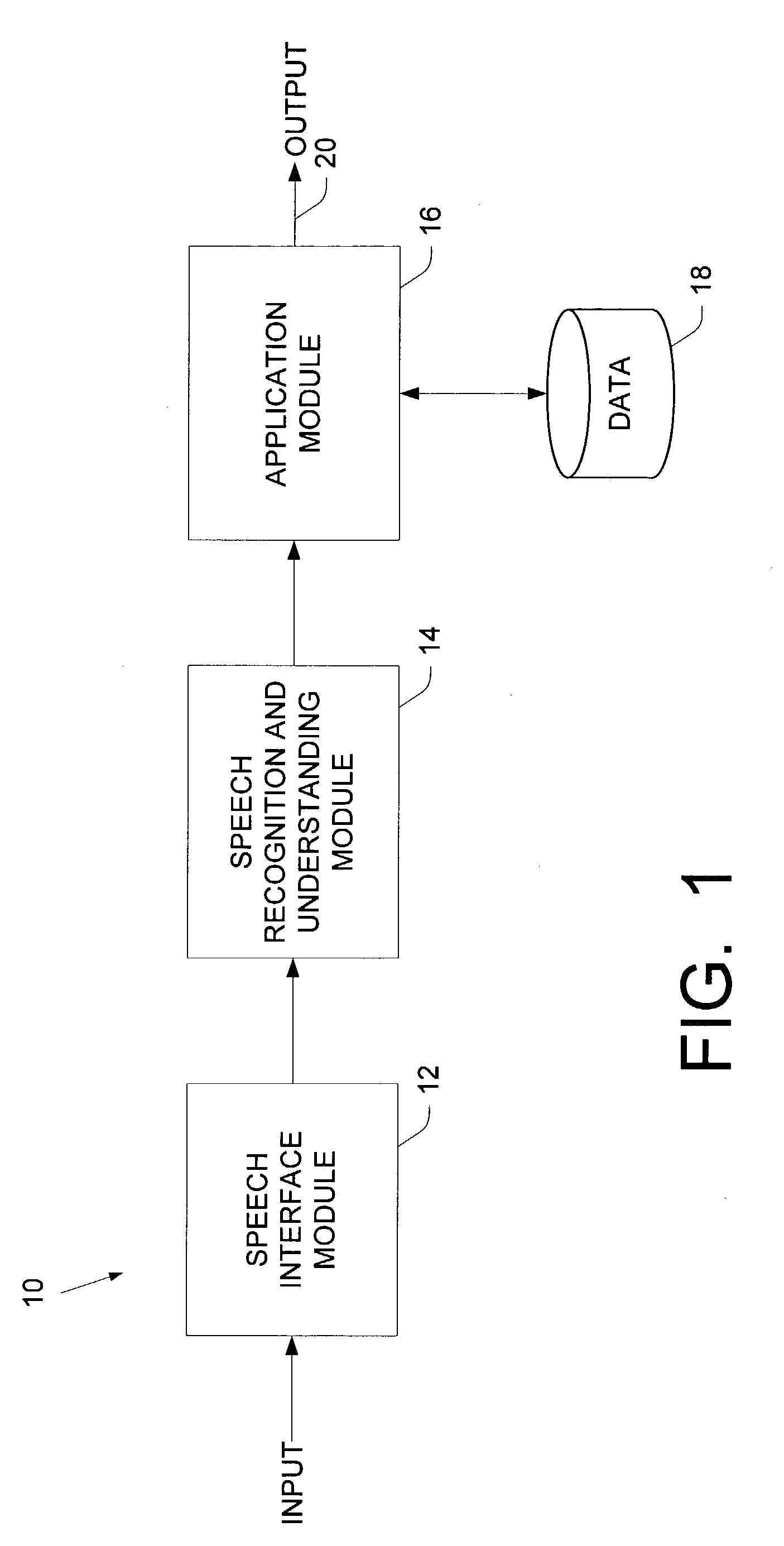

A speech understanding system includes a language model comprising a combination of an N-gram language model and a context-free grammar language model. The language model stores information related to words and semantic information to be recognized. A module is adapted to receive input from a user and capture the input for processing. The module is further adapted to receive SALT application program interfaces pertaining to recognition of the input. The module is configured to process the SALT application program interfaces and the input to ascertain semantic information pertaining to a first portion of the input and output a semantic object comprising text and semantic information for the first portion by accessing the language model, wherein performing recognition and outputting the semantic object are performed while capturing continues for subsequent portions of the input.

Owner:MICROSOFT TECH LICENSING LLC
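
The key behaviour in this abstract, emitting a semantic object for the first portion of the input while capture of later portions continues, can be sketched with a Python generator. This is a minimal sketch under loud assumptions: `SLOT_GRAMMAR` is a hypothetical stand-in for the context-free grammar, the N-gram model and the SALT interfaces are omitted entirely, and words are assumed to arrive one at a time, already recognized.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical slot grammar standing in for the CFG part of the language model:
# maps a recognized word to a (slot name, normalized value) pair.
SLOT_GRAMMAR: Dict[str, Tuple[str, str]] = {
    "seattle": ("city", "Seattle"),
    "boston": ("city", "Boston"),
    "tomorrow": ("date", "tomorrow"),
}


@dataclass
class SemanticObject:
    text: str                                   # words covered by this object
    slots: Dict[str, str] = field(default_factory=dict)


def incremental_understanding(words: Iterable[str]) -> Iterator[SemanticObject]:
    """Yield a semantic object as soon as a slot is matched, without waiting
    for the rest of the input (which may still be being captured)."""
    pending: List[str] = []
    for word in words:
        pending.append(word)
        match = SLOT_GRAMMAR.get(word.lower())
        if match is not None:
            slot, value = match
            yield SemanticObject(" ".join(pending), {slot: value})
            pending = []  # start accumulating the next portion


stream = iter("fly to Seattle tomorrow".split())  # stands in for live capture
for obj in incremental_understanding(stream):
    print(obj)
```

Words after the last matched slot stay pending and are never emitted in this sketch; a fuller version would flush them when capture ends.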

Speech understanding method and system

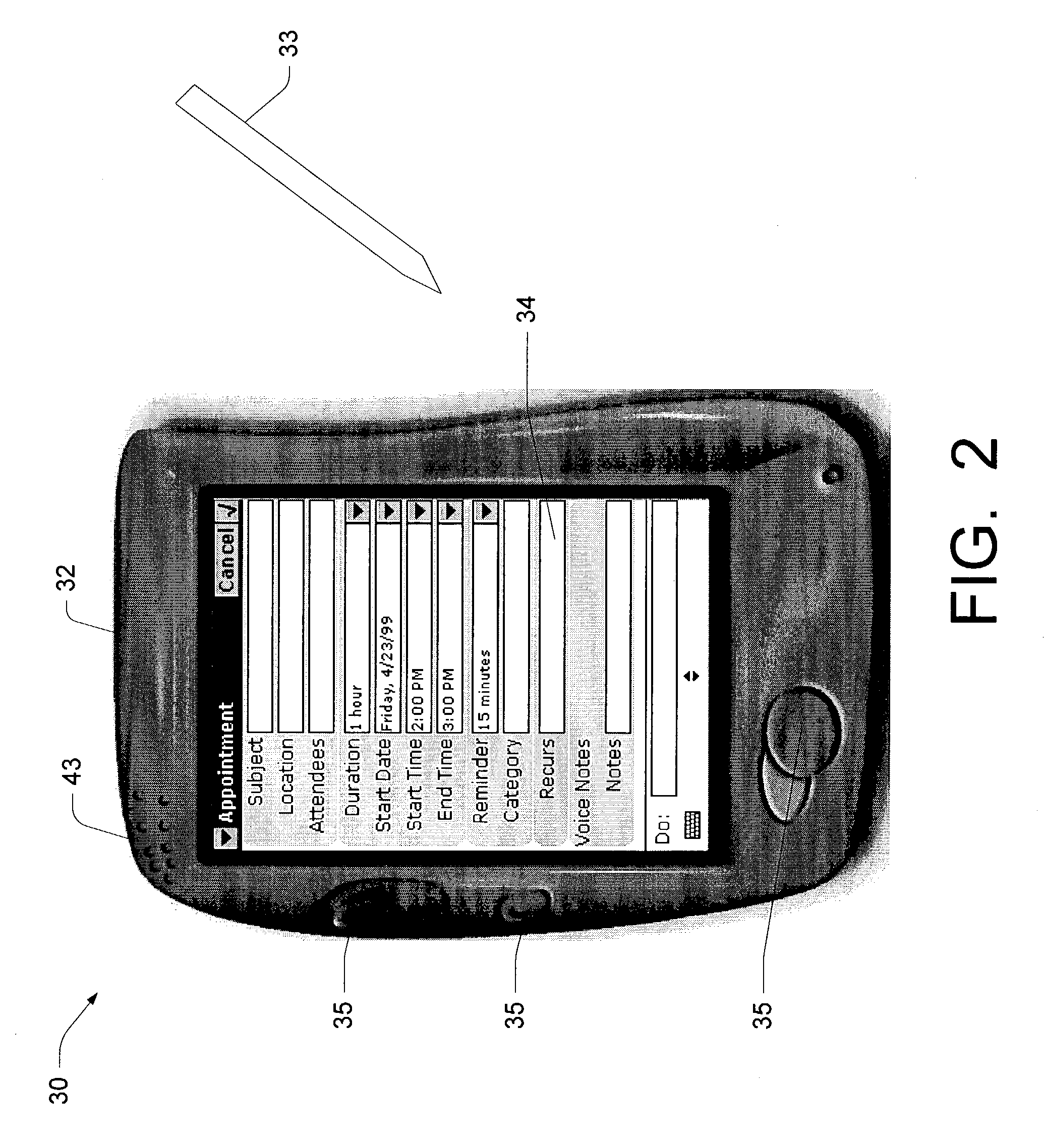

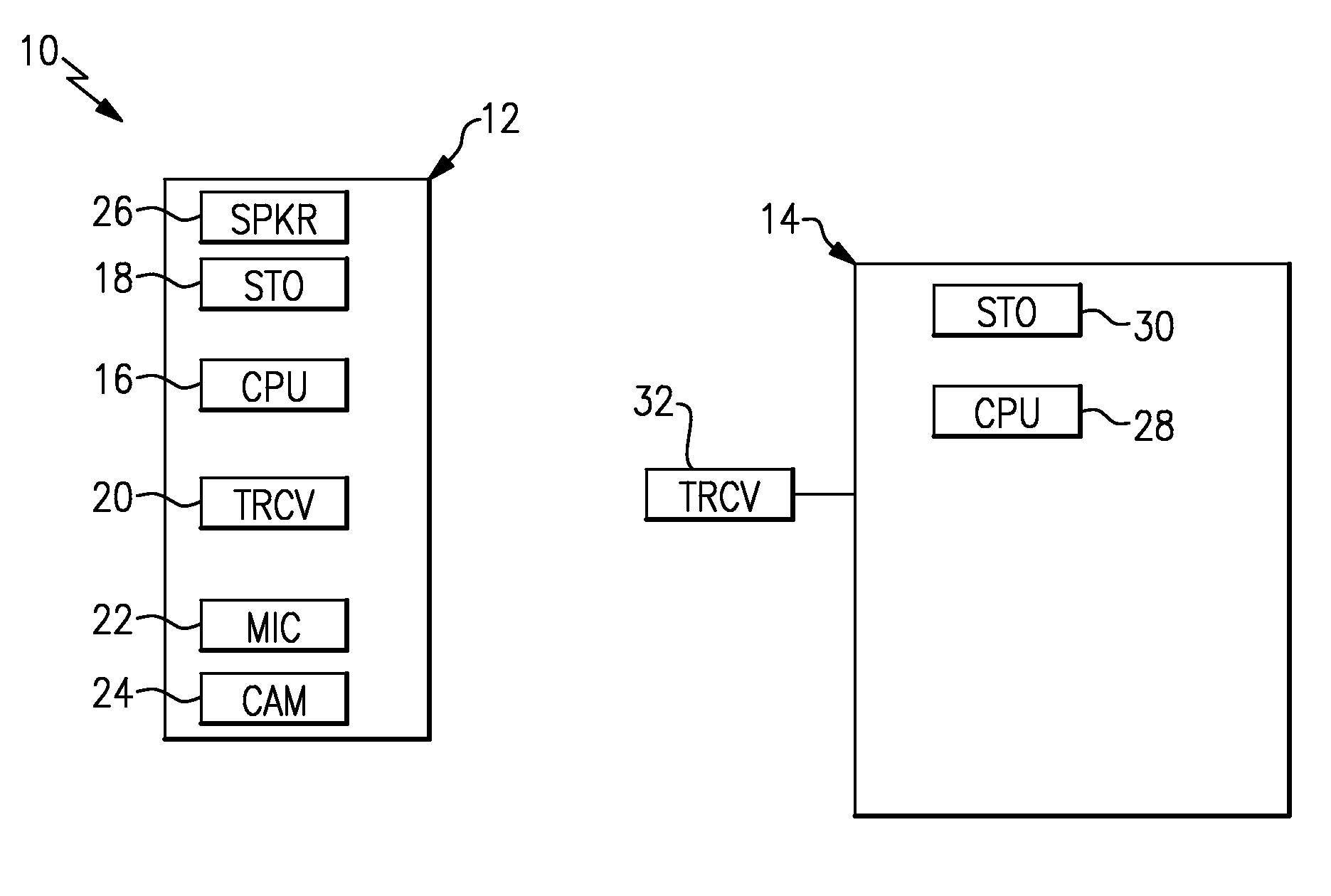

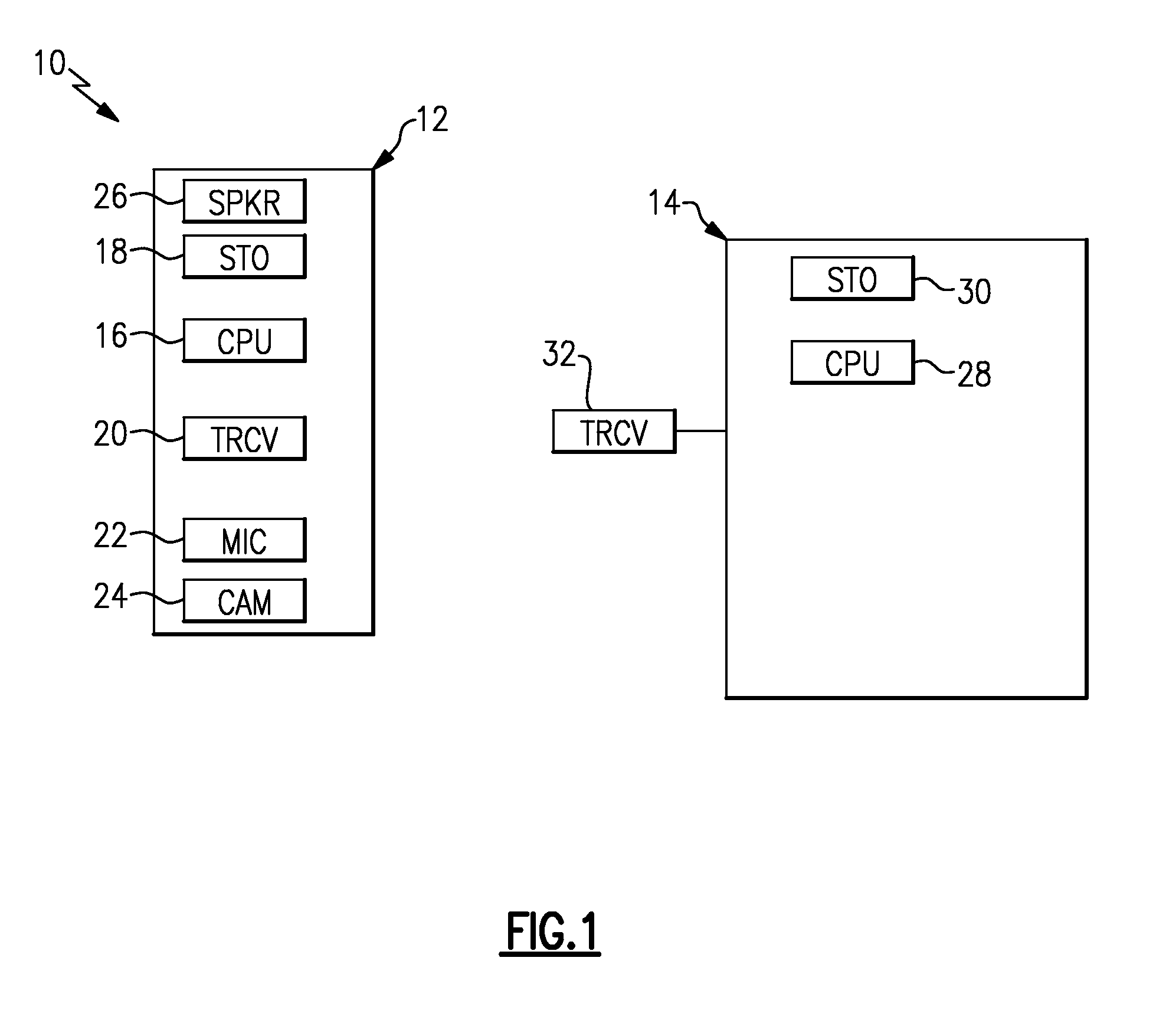

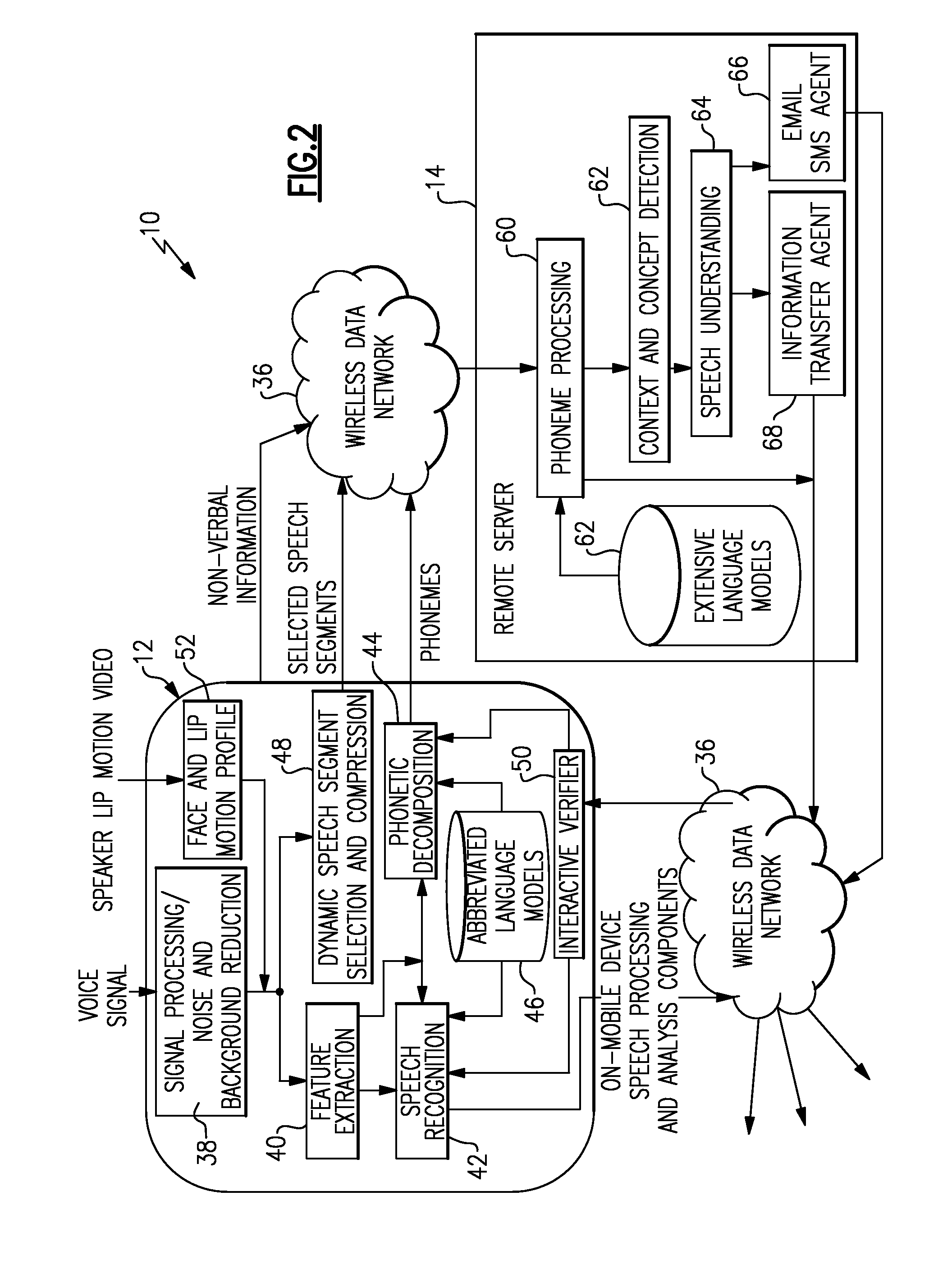

A speech recognition system includes a mobile device and a remote server. The mobile device receives the speech from the user and extracts the features and phonemes from the speech. Selected phonemes and measures of uncertainty are transmitted to the server, which processes the phonemes for speech understanding and transmits a text of the speech (or the context or understanding of the speech) back to the mobile device.

Owner:APPY RISK TECH LTD

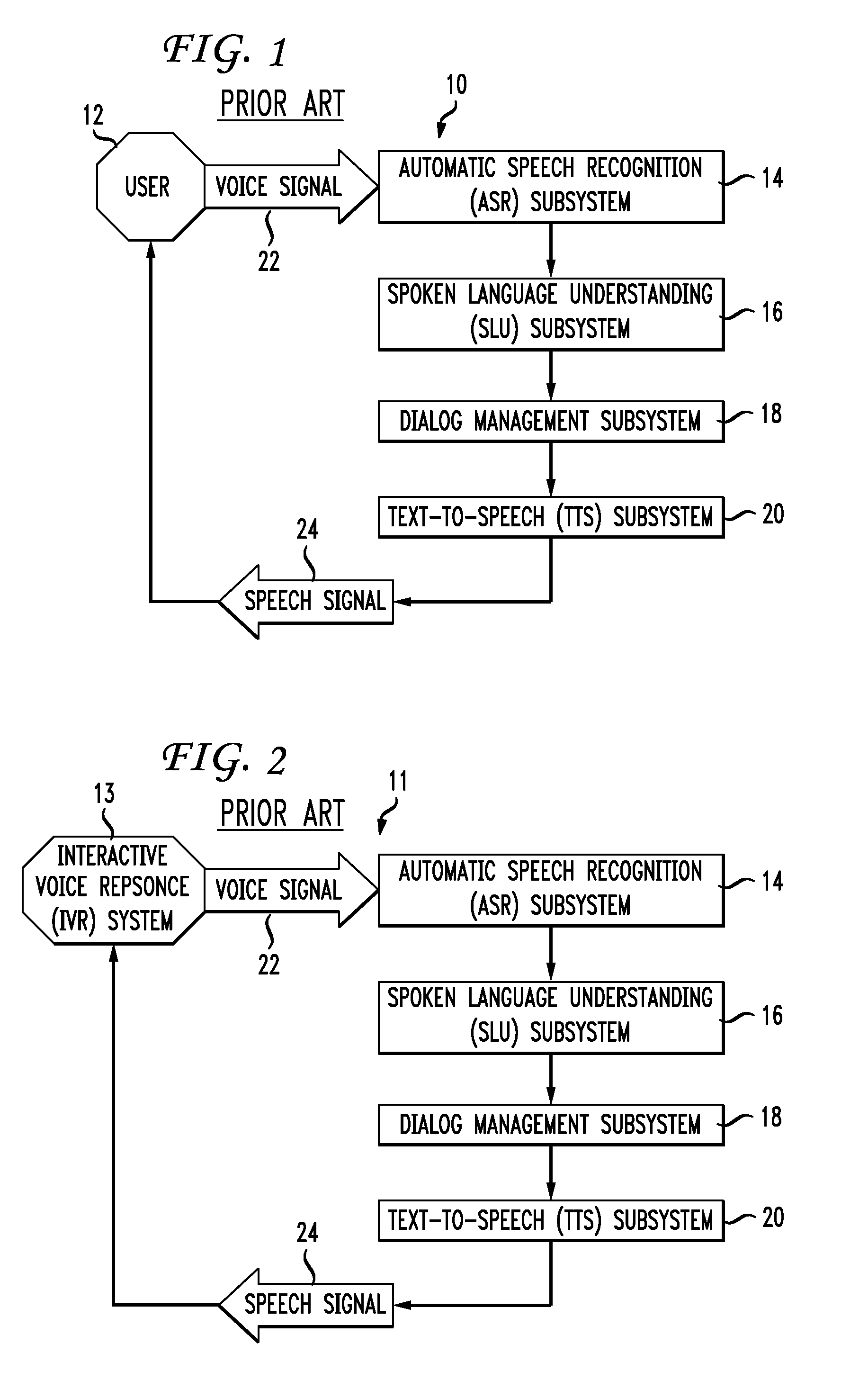

Method and apparatus for preventing speech comprehension by interactive voice response systems

Active · US20060074677A1 · Tags: Reduce the possibility, Signal can be recognized, Secret communication, Television systems, Speech comprehension, Interactive voice response system

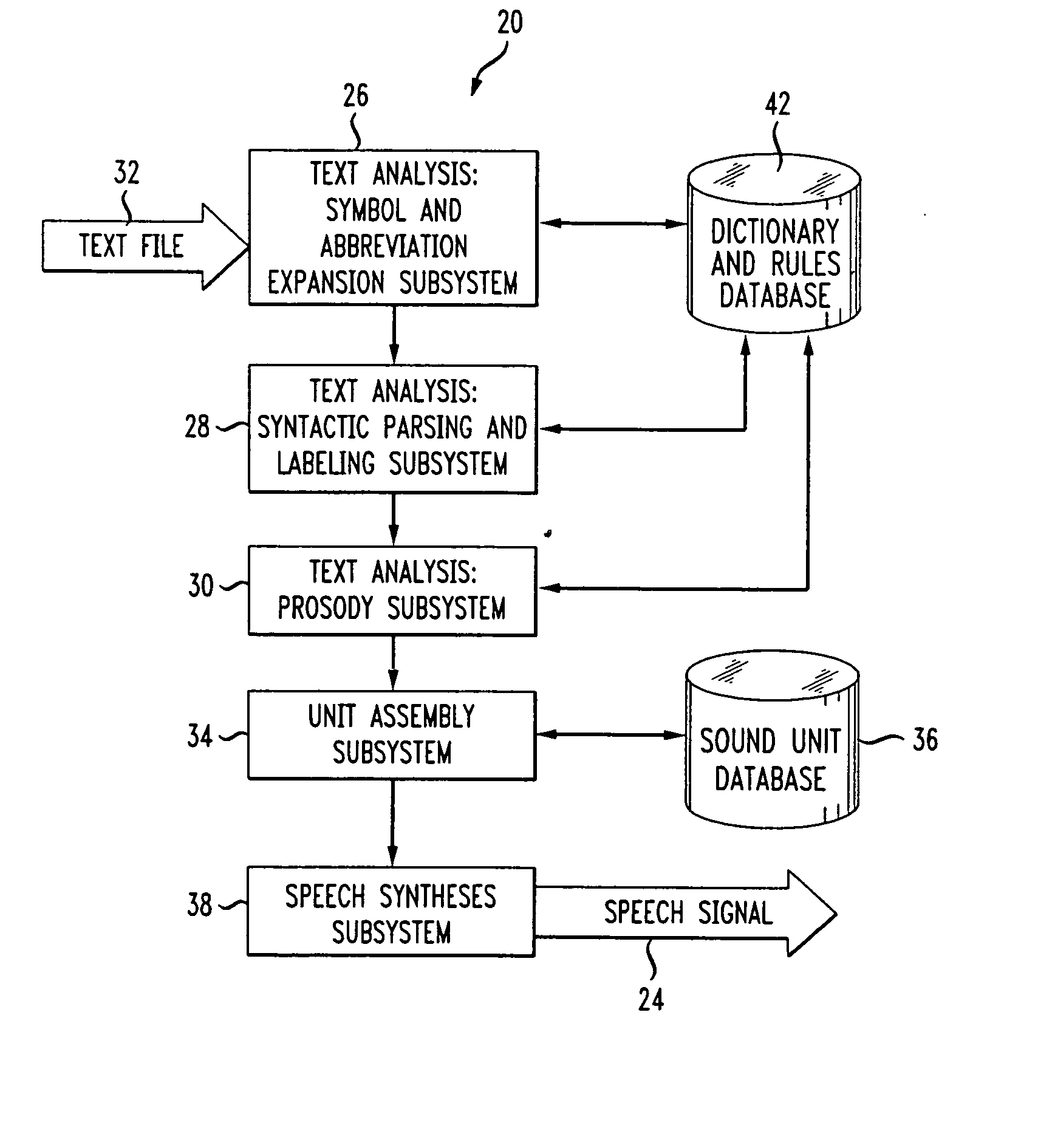

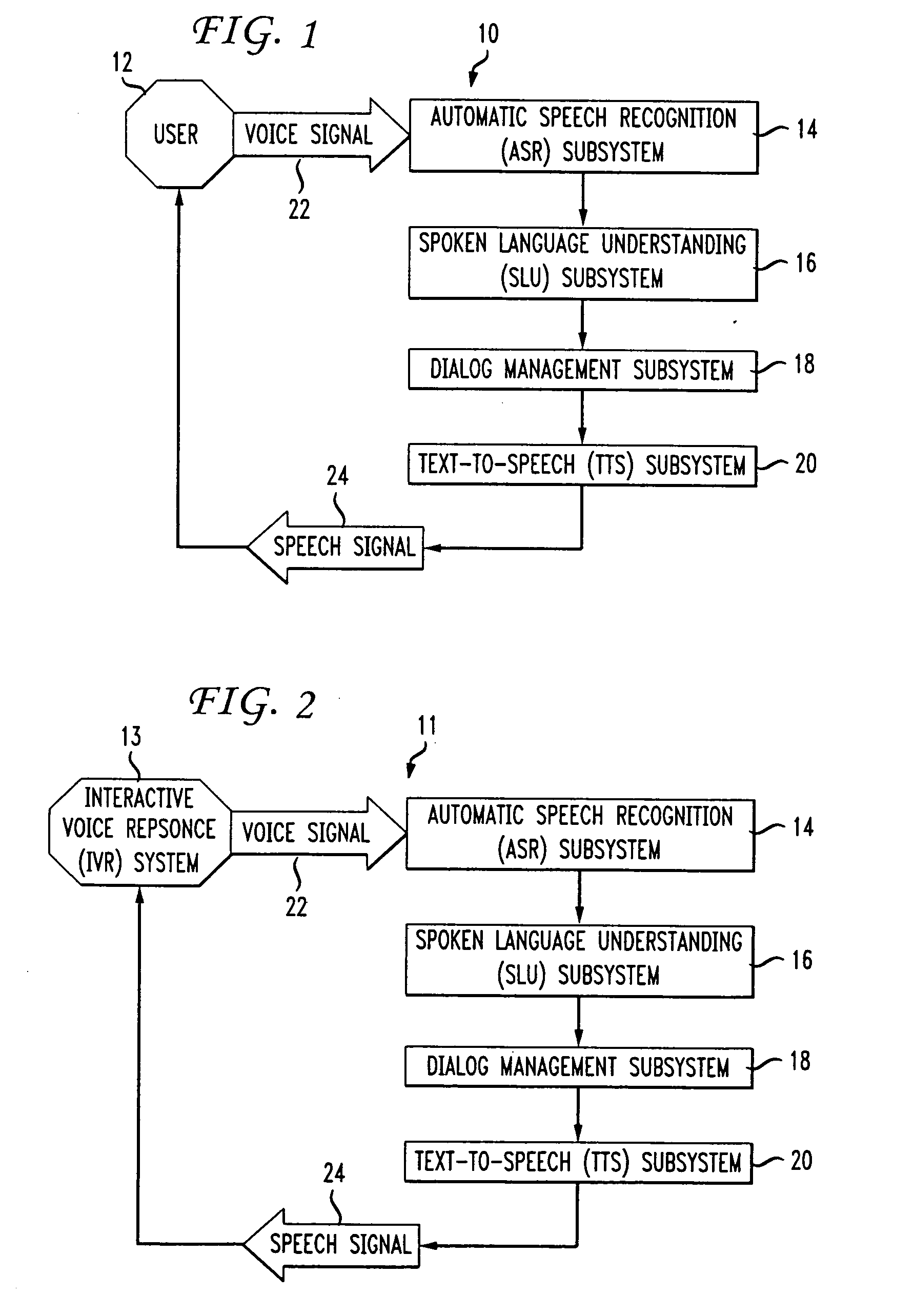

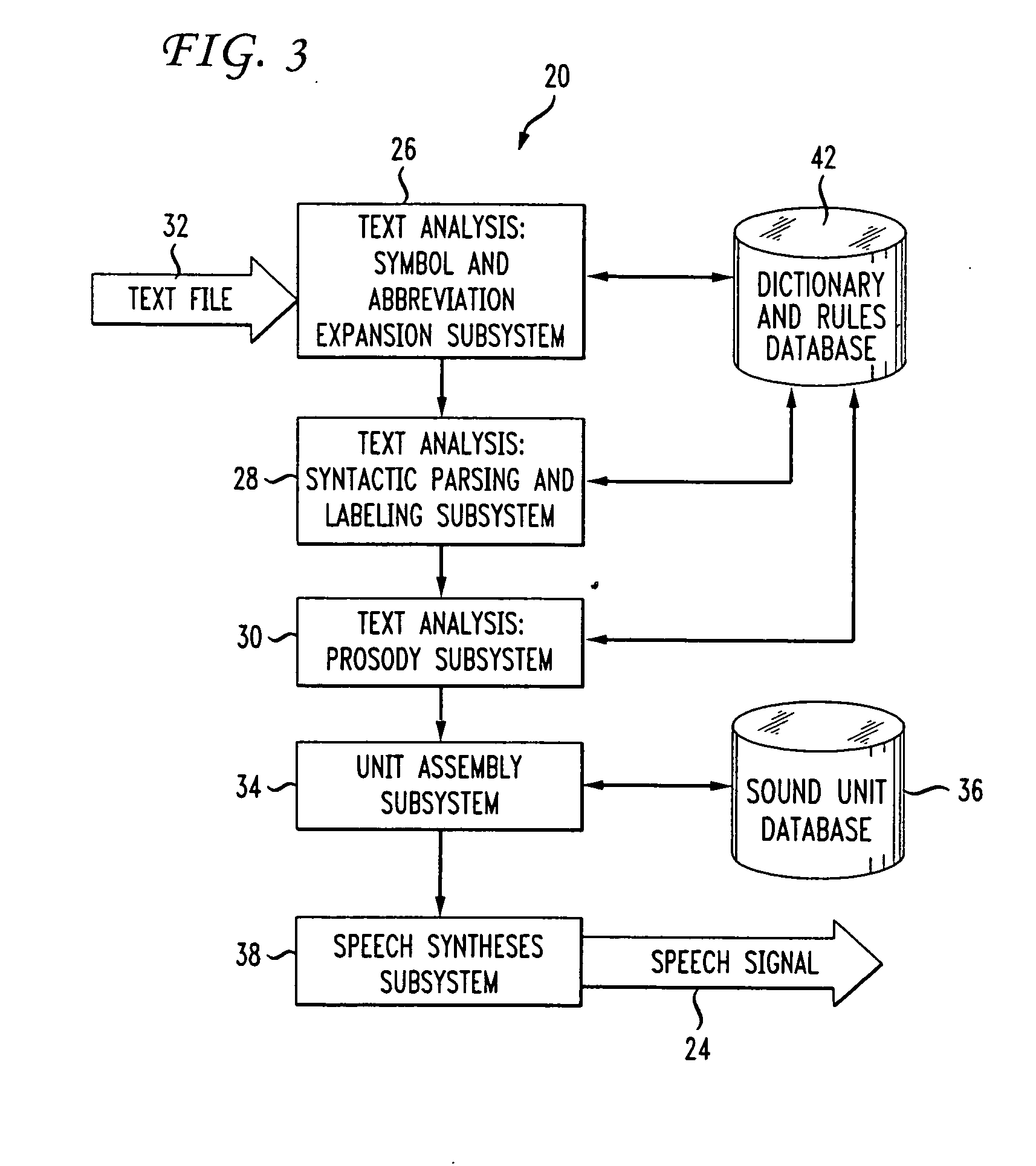

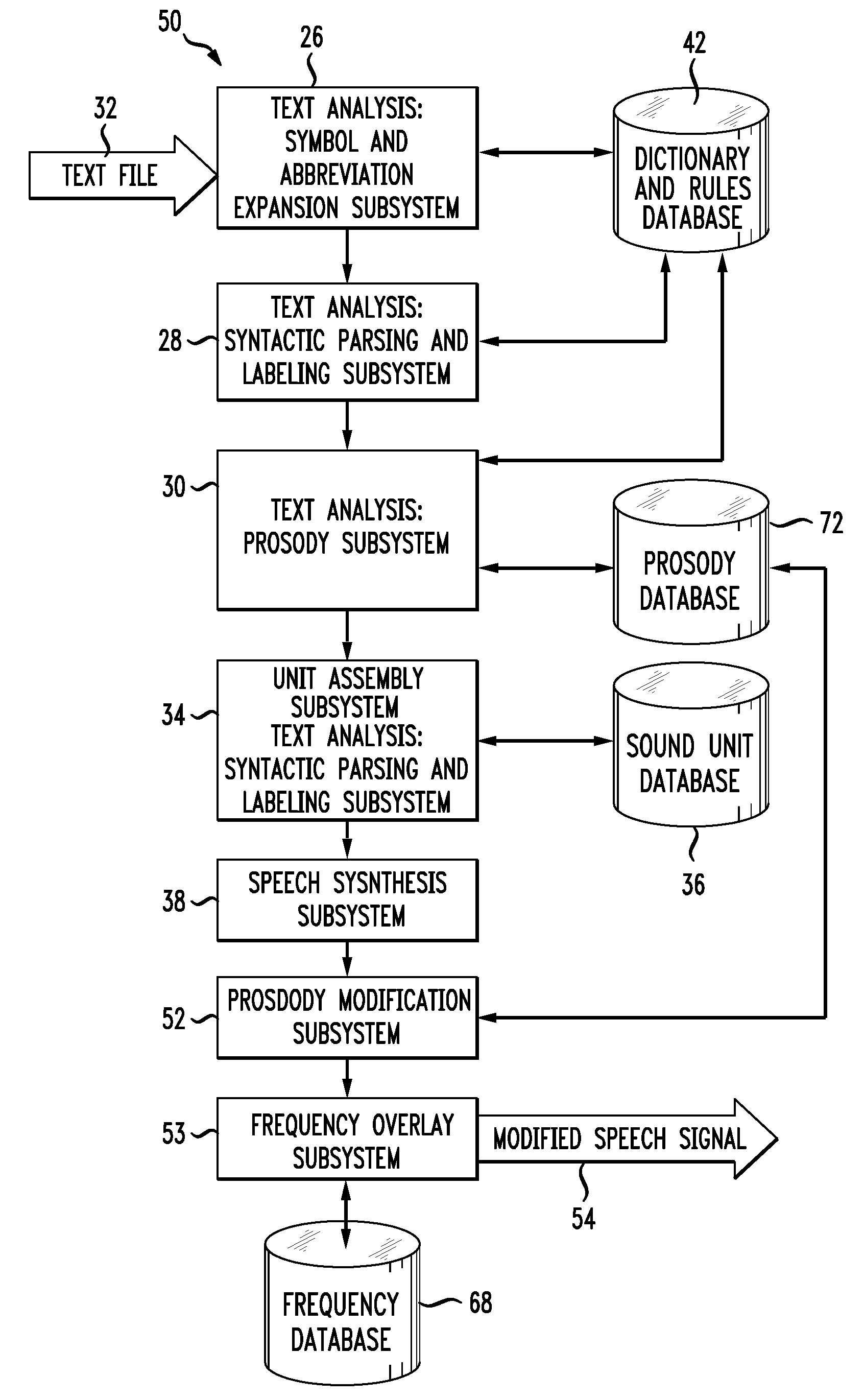

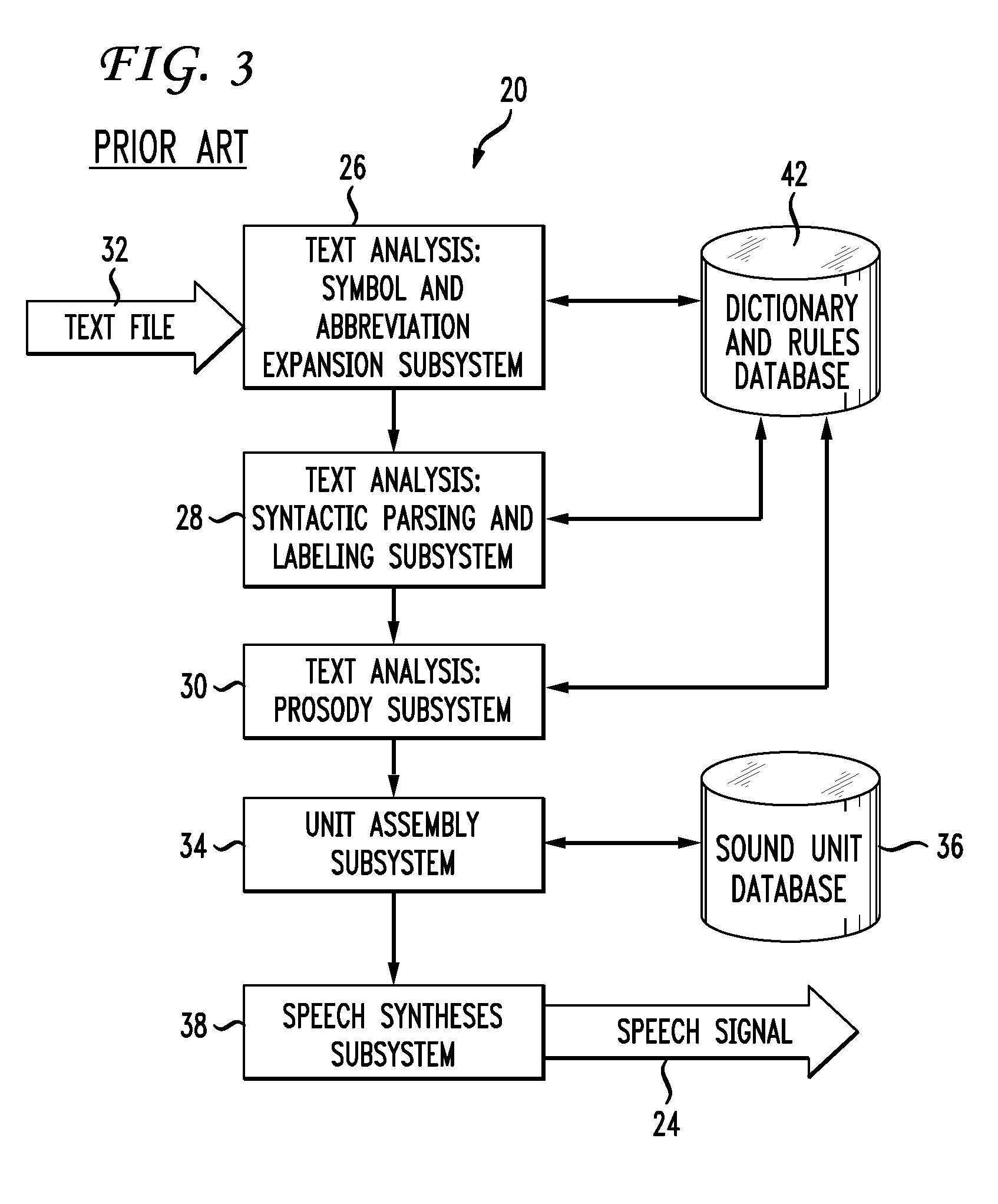

A method and apparatus utilizing prosody modification of a speech signal output by a text-to-speech (TTS) system to substantially prevent an interactive voice response (IVR) system from understanding the speech signal without significantly degrading the speech signal with respect to human understanding. The present invention involves modifying the prosody of the speech output signal by using the prosody of the user's response to a prompt. In addition, a randomly generated overlay frequency is used to modify the speech signal to further prevent an IVR system from recognizing the TTS output. The randomly generated frequency may be periodically changed using an overlay timer that changes the random frequency signal at predetermined intervals.

Owner:NUANCE COMM INC
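
Only the overlay part of this abstract (not the prosody transfer) is sketched below: a sine tone is added to the TTS samples, and its frequency is redrawn at random each time a fixed interval elapses, playing the role of the overlay timer. The frequency range, amplitude, and interval are hypothetical values; the abstract does not give them.

```python
import math
import random
from typing import List, Optional


def overlay_random_tone(
    samples: List[float],
    sample_rate: int,
    interval_s: float,
    f_min: float,
    f_max: float,
    amplitude: float,
    seed: Optional[int] = None,
) -> List[float]:
    """Add a sine tone whose frequency is redrawn at random every interval_s seconds."""
    rng = random.Random(seed)
    samples_per_interval = max(1, int(interval_s * sample_rate))
    freq = rng.uniform(f_min, f_max)
    phase = 0.0
    out: List[float] = []
    for n, x in enumerate(samples):
        if n > 0 and n % samples_per_interval == 0:
            # Overlay "timer" fires: pick a new random frequency.
            freq = rng.uniform(f_min, f_max)
        out.append(x + amplitude * math.sin(phase))
        # Accumulate phase so frequency changes do not cause discontinuities.
        phase += 2.0 * math.pi * freq / sample_rate
    return out


# 20 ms of silence at 8 kHz, with the overlay frequency changing every 5 ms.
tts_output = [0.0] * 160
masked = overlay_random_tone(tts_output, 8000, 0.005, 300.0, 3000.0, 0.05, seed=42)
```

Accumulating phase, rather than restarting the sine at each change, is a choice of this sketch that avoids audible clicks at frequency switches.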

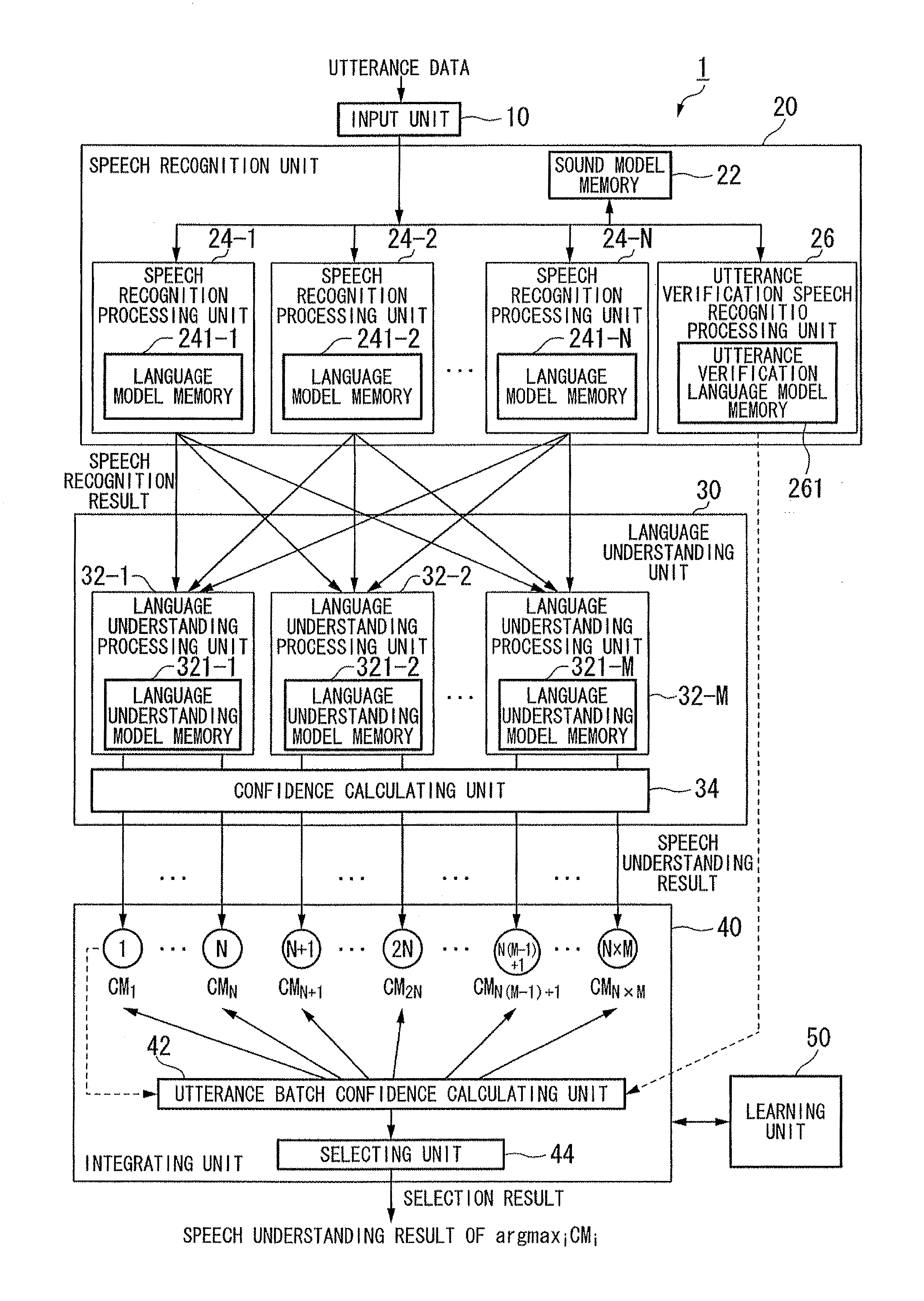

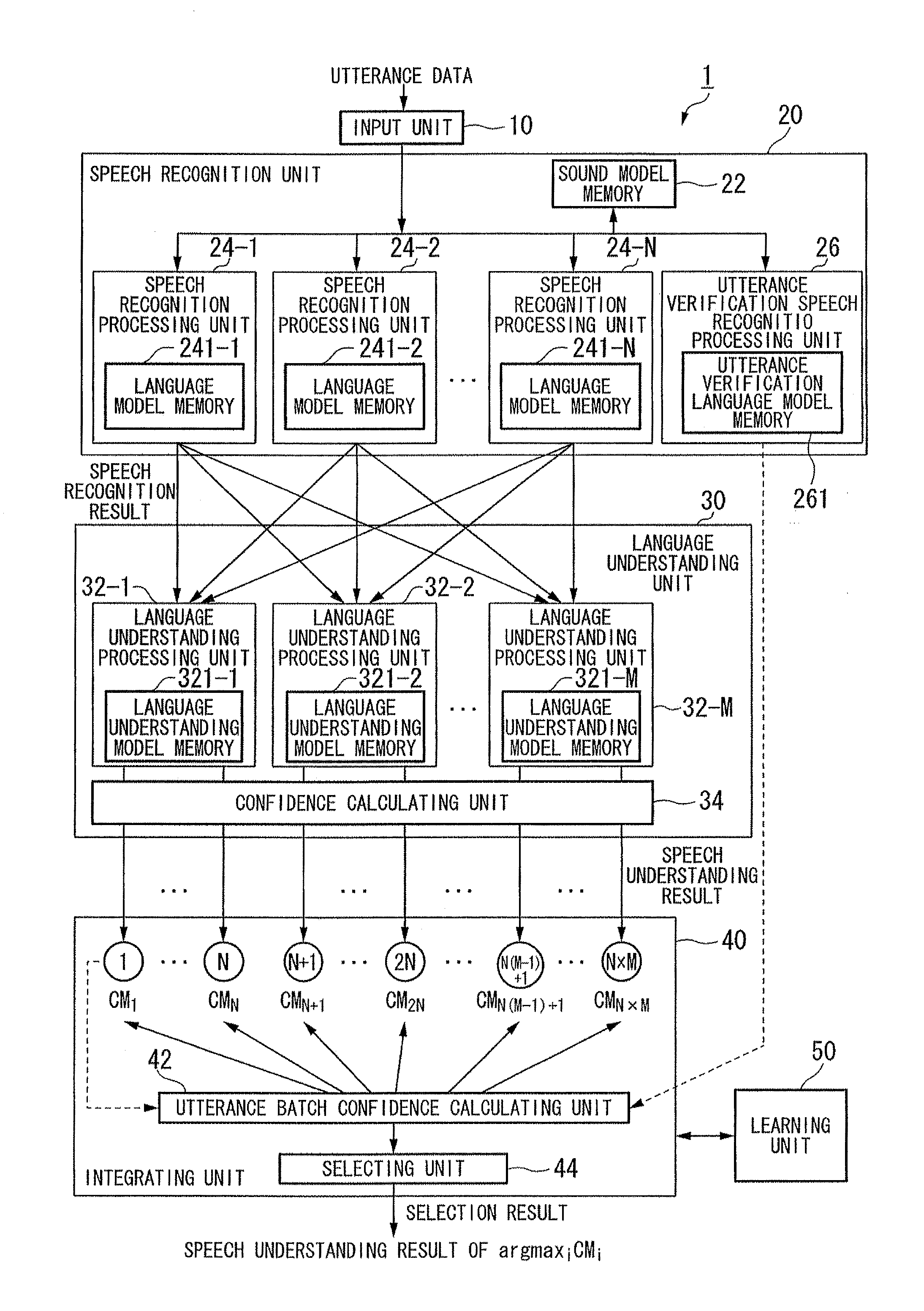

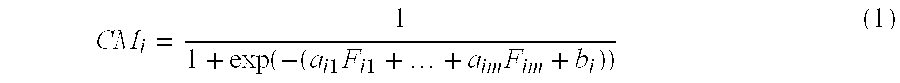

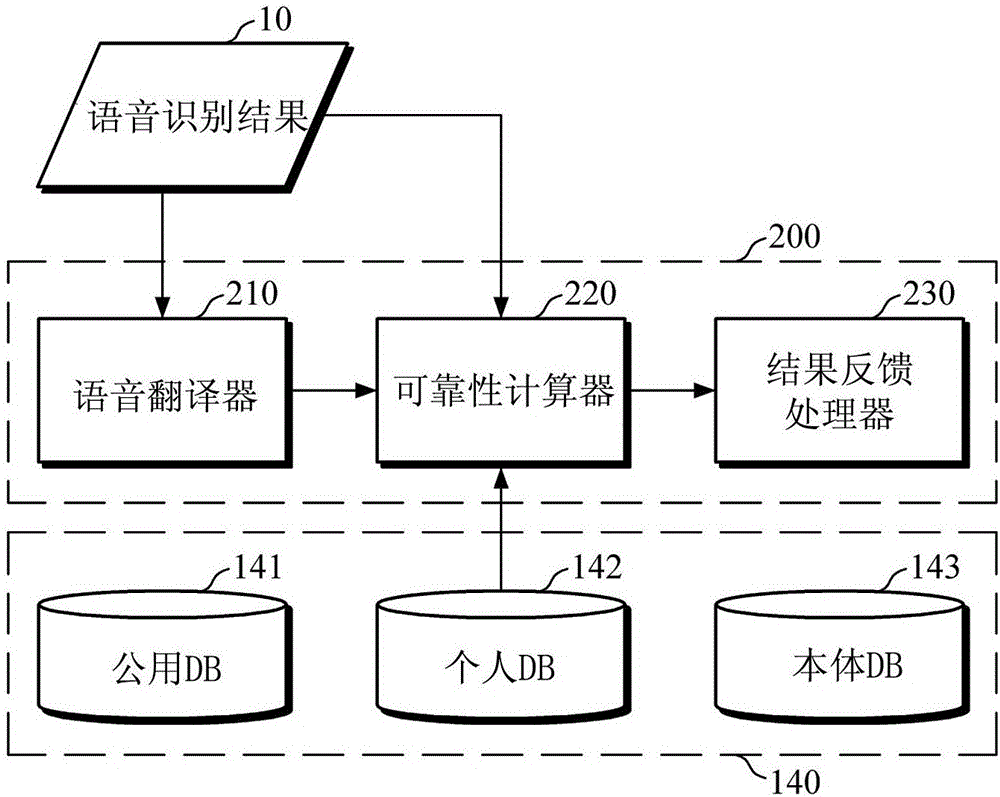

Speech understanding apparatus using multiple language models and multiple language understanding models

A speech understanding apparatus includes a speech recognition unit which performs speech recognition of an utterance using multiple language models, and outputs multiple speech recognition results obtained by the speech recognition, a language understanding unit which uses multiple language understanding models to perform language understanding for each of the multiple speech recognition results output from the speech recognition unit, and outputs multiple speech understanding results obtained from the language understanding, and an integrating unit which calculates, based on values representing features of the speech understanding results, utterance batch confidences that numerically express accuracy of the speech understanding results for each of the multiple speech understanding results output from the language understanding unit, and selects one of the speech understanding results with a highest utterance batch confidence among the calculated utterance batch confidences.

Owner:HONDA MOTOR CO LTD
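
The integrating unit's selection step can be sketched as follows: every recognition result (one per language model) is interpreted by every language-understanding model, each pairing receives an utterance confidence, and the highest-confidence result is kept. The logistic-regression confidence, its three features (ASR score, LU score, number of filled slots), the weights, and the two toy LU models are all hypothetical; the abstract only says the confidence is computed from features of the understanding results.

```python
import math
from itertools import product
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Frame = Dict[str, str]                          # slot -> value
LUModel = Callable[[str], Tuple[Frame, float]]  # hypothetical: text -> (frame, score)


def utterance_confidence(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Logistic regression over result features, giving a value in (0, 1)."""
    z = bias + sum(w * f for w, f in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def select_understanding(
    asr_results: List[Tuple[str, float]],  # one (text, ASR score) per language model
    lu_models: List[LUModel],
    weights: Sequence[float],
    bias: float,
) -> Optional[Tuple[Frame, float]]:
    best: Optional[Tuple[Frame, float]] = None
    # Every recognition result is interpreted by every LU model.
    for (text, asr_score), lu in product(asr_results, lu_models):
        frame, lu_score = lu(text)
        conf = utterance_confidence([asr_score, lu_score, float(len(frame))], weights, bias)
        if best is None or conf > best[1]:
            best = (frame, conf)
    return best


def keyword_lu(text: str) -> Tuple[Frame, float]:
    """Hypothetical rule-based LU model used only for this example."""
    frame: Frame = {}
    if "turn on" in text:
        frame["action"] = "on"
    if "light" in text:
        frame["object"] = "light"
    return frame, (0.8 if "object" in frame else 0.3)


def null_lu(text: str) -> Tuple[Frame, float]:
    """Hypothetical fallback LU model that understands nothing."""
    return {}, 0.1


best = select_understanding(
    [("turn on the light", 0.9), ("turn on the right", 0.4)],
    [keyword_lu, null_lu],
    weights=(1.0, 1.0, 0.5),
    bias=-1.0,
)
print(best)
```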

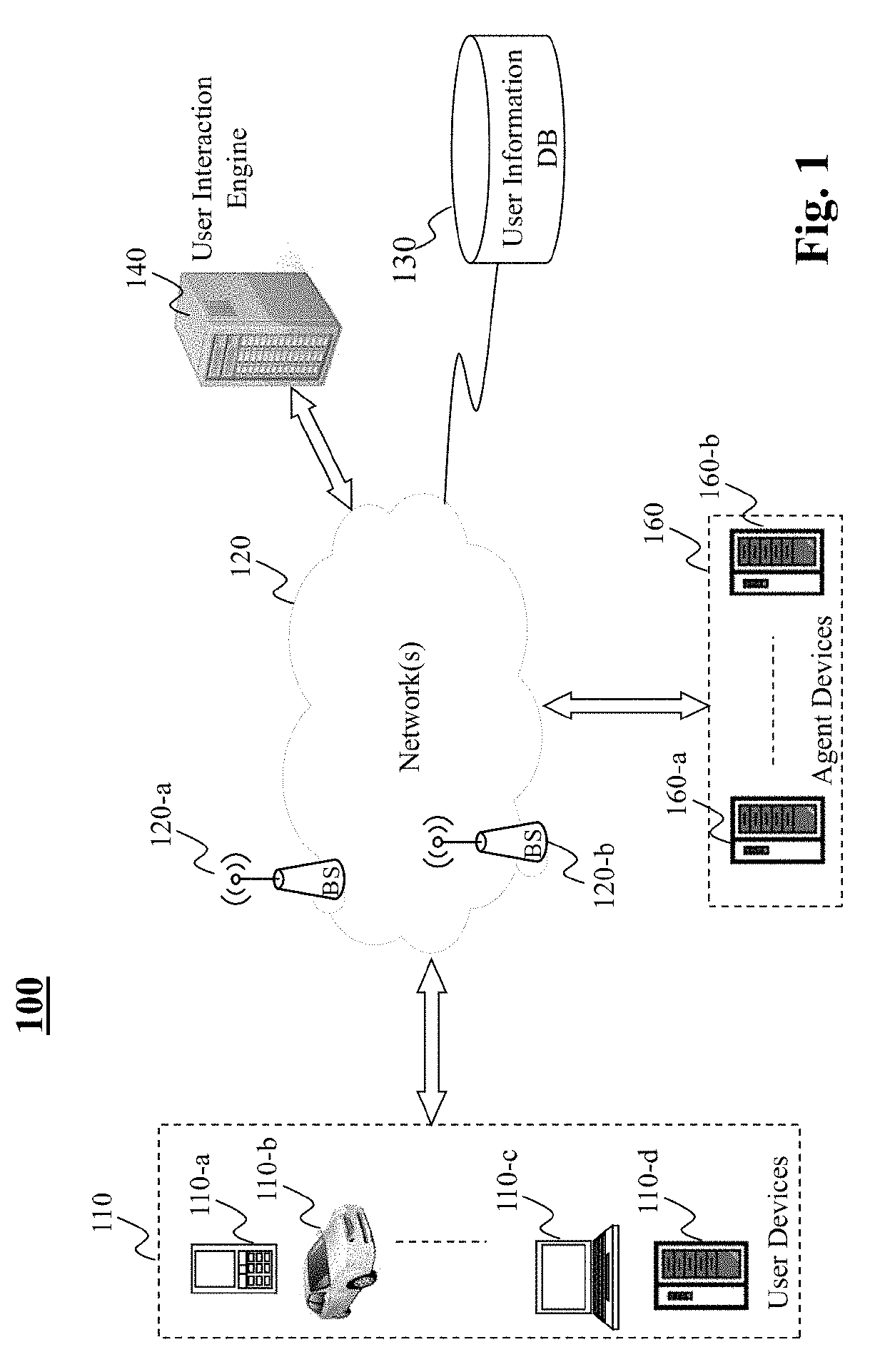

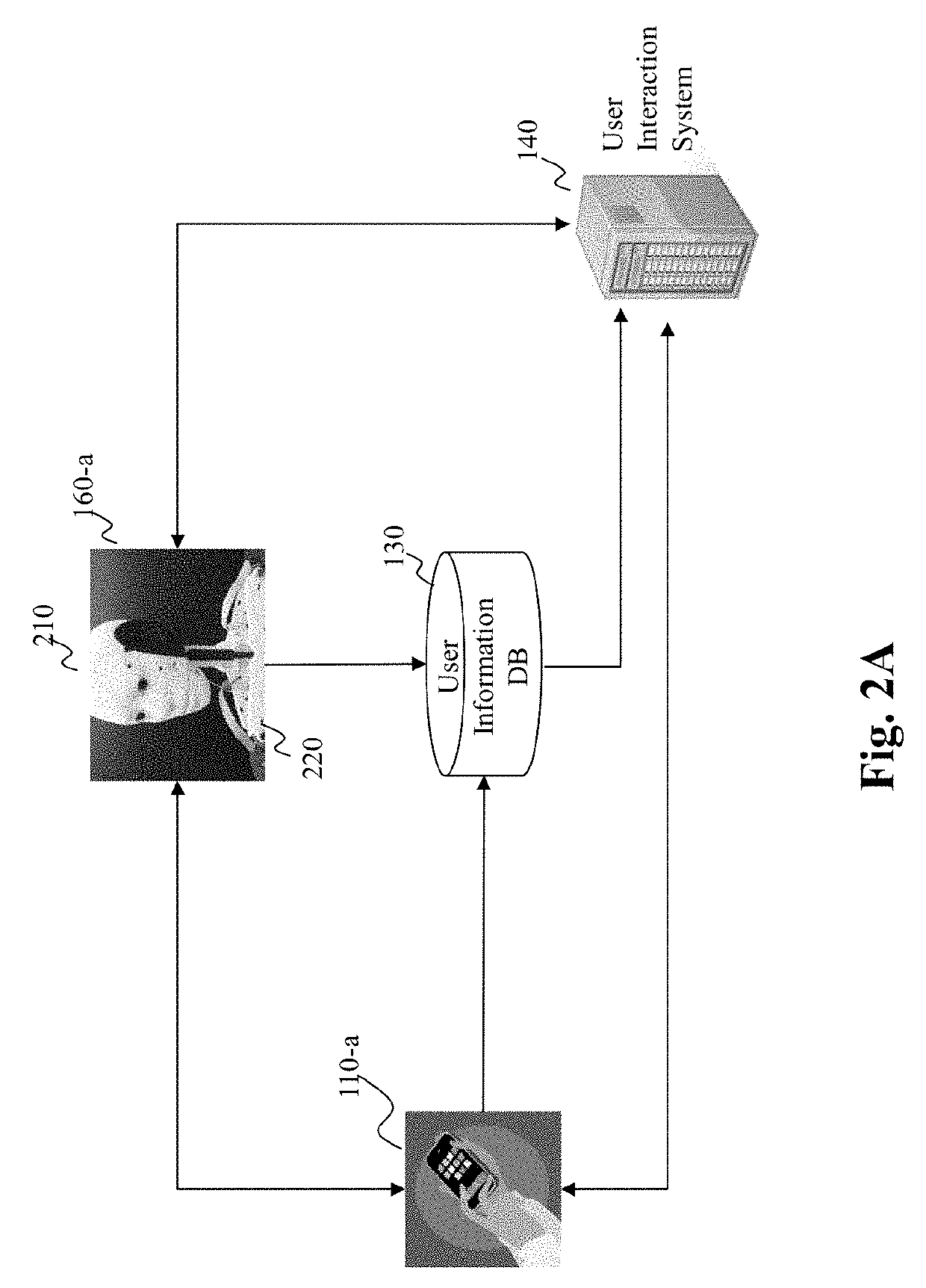

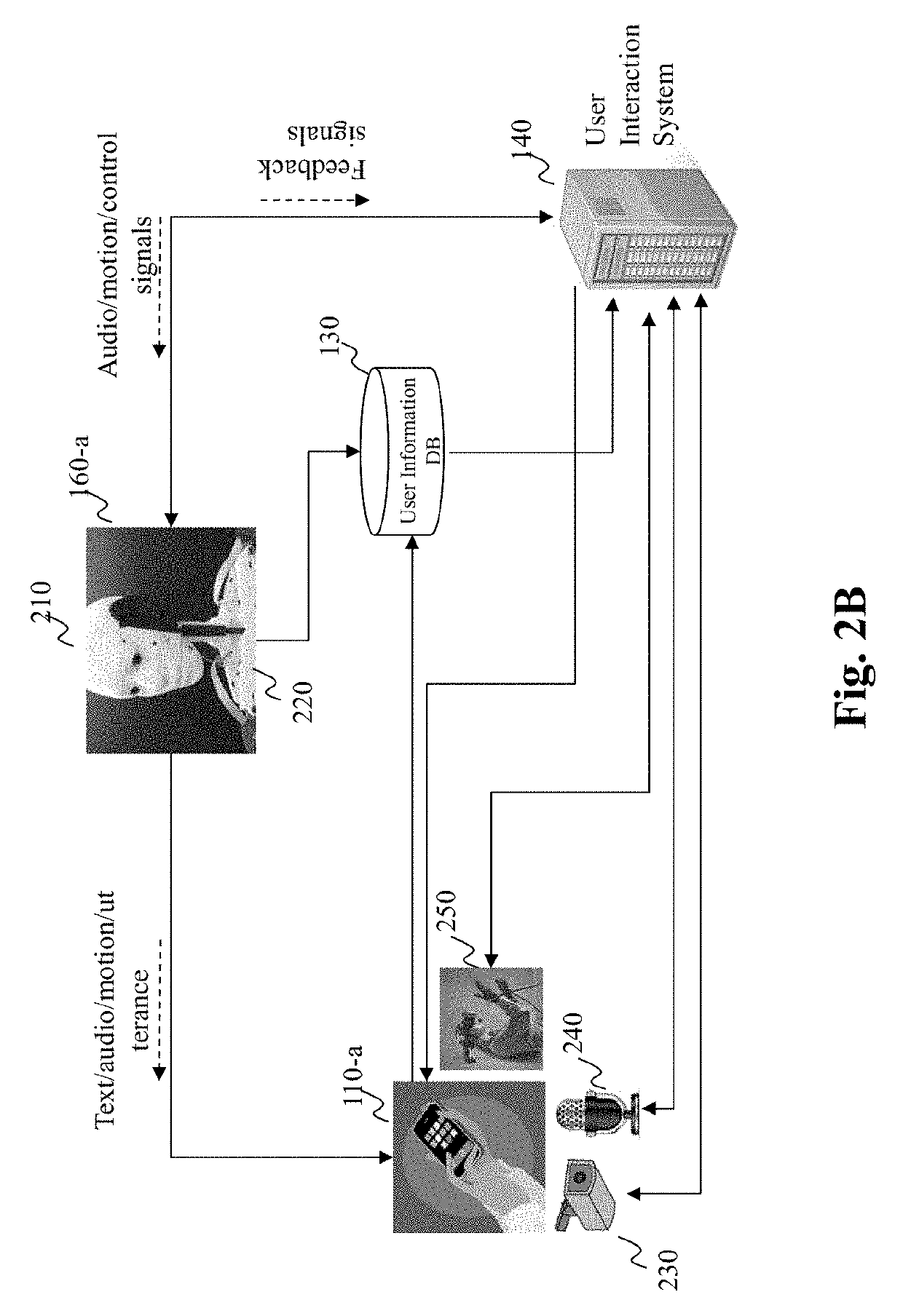

System and method for speech understanding via integrated audio and visual based speech recognition

Active · US20190279642A1 · Tags: Speech recognition, Acquiring/recognising facial features, Speech comprehension, Vision based

The present teaching relates to method, system, medium, and implementations for speech recognition. An audio signal is received that represents a speech of a user engaged in a dialogue. A visual signal is received that captures the user uttering the speech. A first speech recognition result is obtained by performing audio based speech recognition based on the audio signal. Based on the visual signal, lip movement of the user is detected and a second speech recognition result is obtained by performing lip reading based speech recognition. The first and the second speech recognition results are then integrated to generate an integrated speech recognition result.

Owner:DMAI INC
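
The final integration step can be sketched as late fusion of two n-best lists, assuming each recognizer returns a score per hypothesis. The weighted-sum rule and the `audio_weight` parameter are assumptions; the abstract does not say how the two results are combined.

```python
from typing import Dict


def integrate_results(audio: Dict[str, float], visual: Dict[str, float], audio_weight: float) -> str:
    """Late fusion: weighted sum of per-hypothesis scores from the audio recognizer
    and the lip-reading recognizer; the best-scoring hypothesis wins."""
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must be in [0, 1]")

    def fused(hyp: str) -> float:
        return audio_weight * audio.get(hyp, 0.0) + (1.0 - audio_weight) * visual.get(hyp, 0.0)

    # Sorting first makes tie-breaking deterministic.
    return max(sorted(set(audio) | set(visual)), key=fused)


audio_nbest = {"cat": 0.55, "bat": 0.45}   # noisy audio: nearly ambiguous
visual_nbest = {"bat": 0.80, "cat": 0.20}  # lips close at word onset: favours "bat"
print(integrate_results(audio_nbest, visual_nbest, audio_weight=0.5))
```

With `audio_weight=0.9` the same inputs yield "cat", so the weight effectively encodes how much the audio channel is trusted (for example, less in loud environments).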

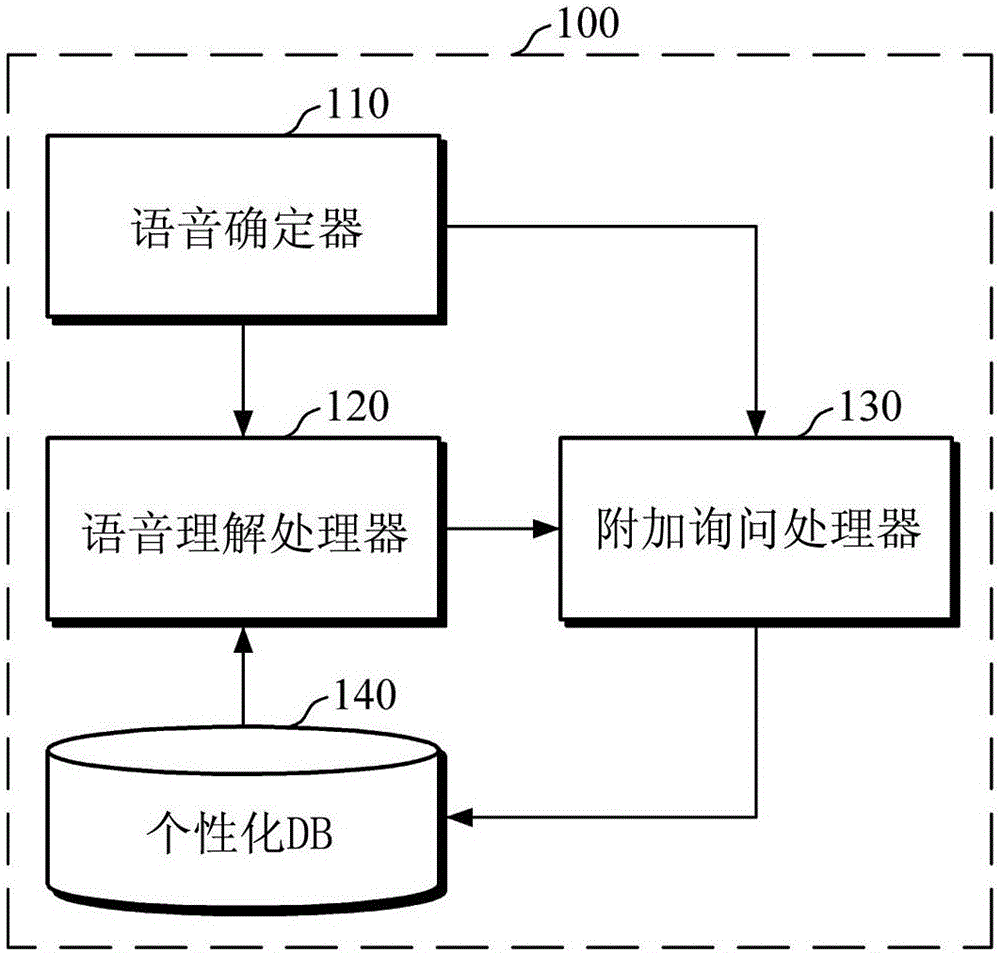

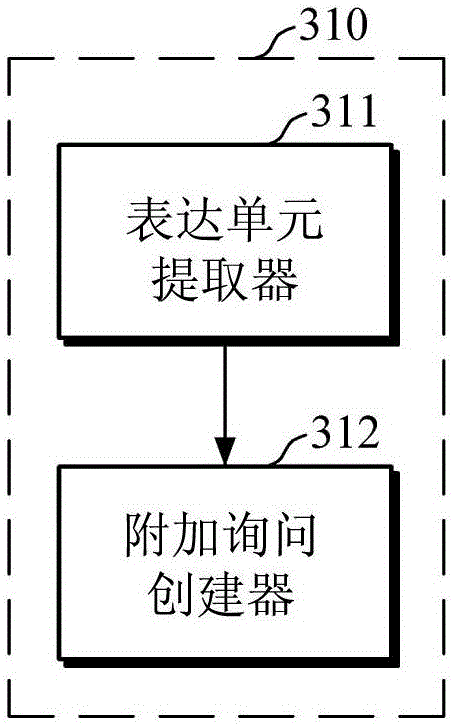

Intelligent dialog management apparatus and method

An intelligent dialog processing apparatus and method. The intelligent dialog processing apparatus includes a speech understanding processor, of one or more processors, configured to perform an understanding of an uttered primary speech of a user using an idiolect of the user based on a personalized database (DB) for the user, and an additional-query processor, of the one or more processors, configured to extract, from the primary speech, a select unit of expression that is not understood by the speech understanding processor, and to provide a clarifying query for the user that is associated with the extracted unit of expression to clarify the extracted unit of expression.

Owner:SAMSUNG ELECTRONICS CO LTD
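
The interplay between the personalized database and the additional-query processor can be sketched at the word level. The lookup order (personal entries before general vocabulary), the data structures, and the wording of the clarifying query are hypothetical illustrations, not Samsung's design.

```python
from typing import Dict, List, Set, Tuple


def understand_with_clarification(
    utterance: str, general_vocab: Set[str], personal_db: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Resolve each word via the user's personalized DB first, then the general
    vocabulary; return the resolved words and clarifying queries for the rest."""
    resolved: List[str] = []
    queries: List[str] = []
    for token in utterance.lower().split():
        if token in personal_db:          # user-specific idiolect entry
            resolved.append(personal_db[token])
        elif token in general_vocab:
            resolved.append(token)
        else:                             # not understood: ask about it
            queries.append(f'What do you mean by "{token}"?')
    return resolved, queries


vocab = {"call", "my", "at", "noon"}
personal = {"nana": "grandmother"}  # hypothetically learned from this user's past dialogs
print(understand_with_clarification("Call nana at zorp", vocab, personal))
```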

Method and System for Preventing Speech Comprehension by Interactive Voice Response Systems

Inactive · US20090228271A1 · Tags: Reduce the possibility, Speech recognition, Speech synthesis, Speech comprehension, Interactive voice response system

A method of and system for generating a speech signal with an overlaid random frequency signal, using prosody modification of a speech signal output by a text-to-speech (TTS) system to substantially prevent an interactive voice response (IVR) system from understanding the speech signal without significantly degrading the speech signal with respect to human understanding. The present invention involves modifying a prosody of the speech output signal by using a prosody of the user's response to a prompt. In addition, a randomly generated overlay frequency is used to modify the speech signal to further prevent the IVR system from recognizing the TTS output. The randomly generated frequency may be periodically changed using an overlay timer that changes the random frequency signal at predetermined intervals.

Owner:NUANCE COMM INC

Method and device for voice identification and language comprehension analysis

Inactive · CN1831937A · Tags: Simplify Design Complexity, The need to reduce usage, Natural language data processing, Speech recognition, Language understanding, Speech comprehension

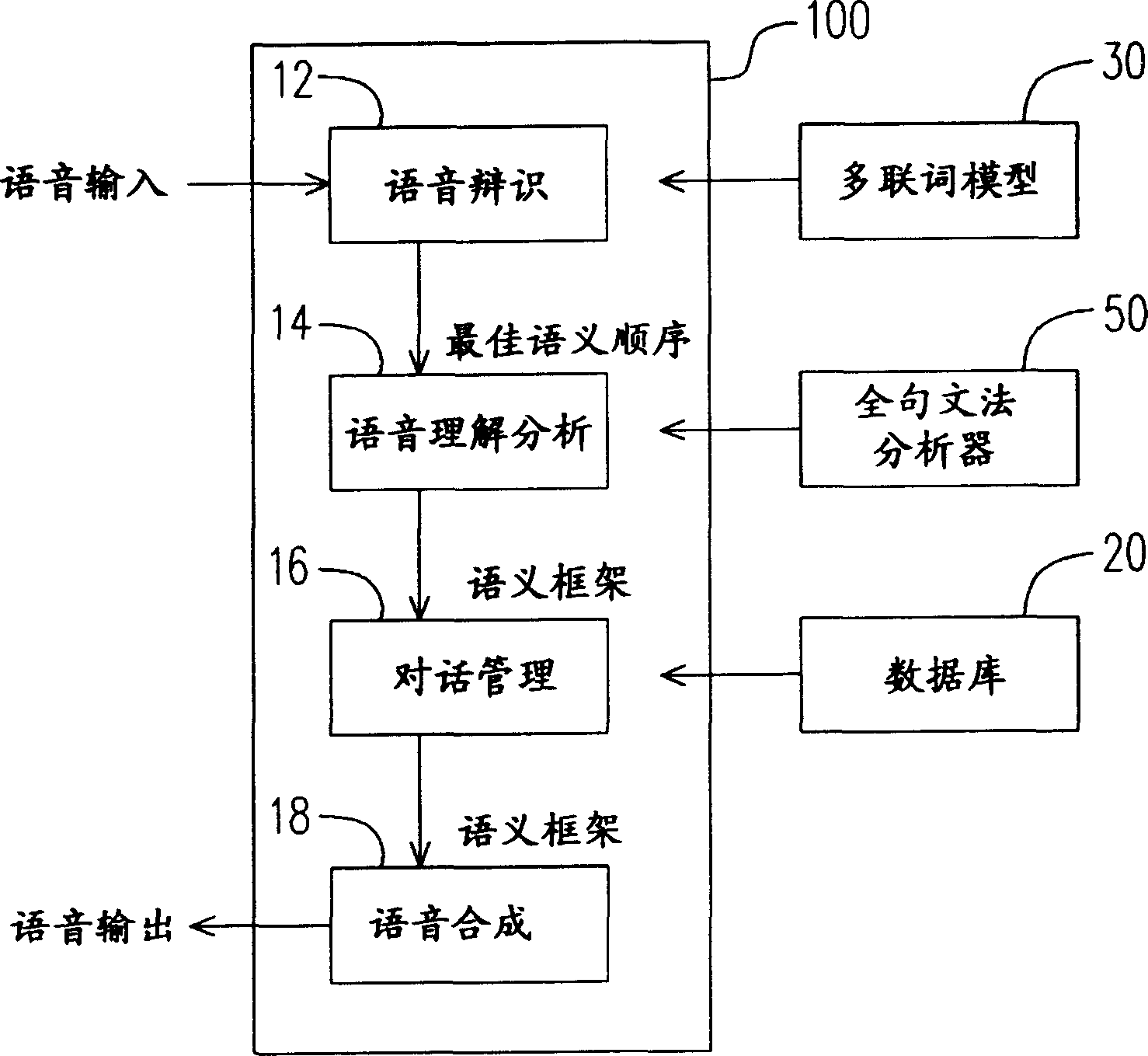

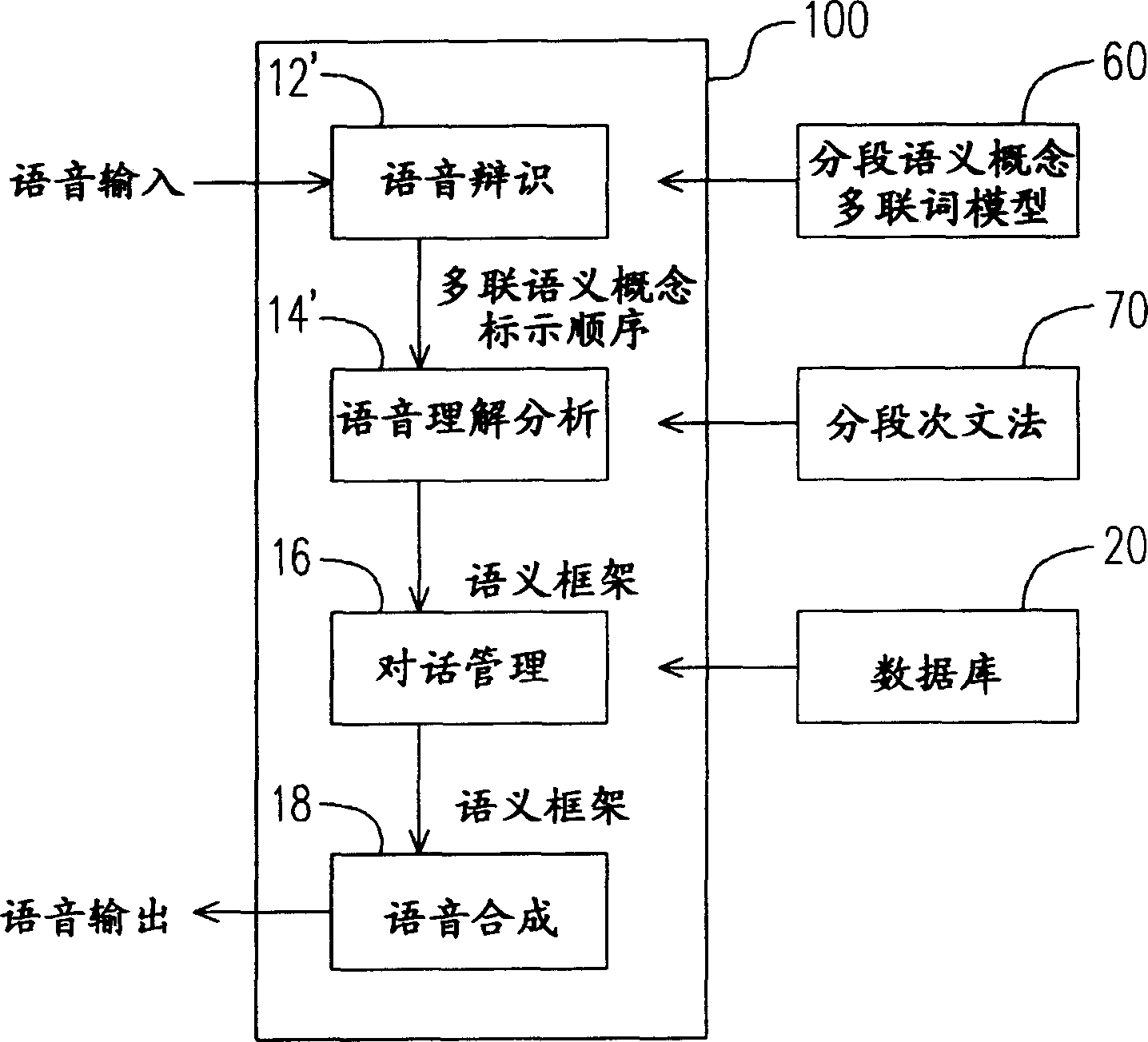

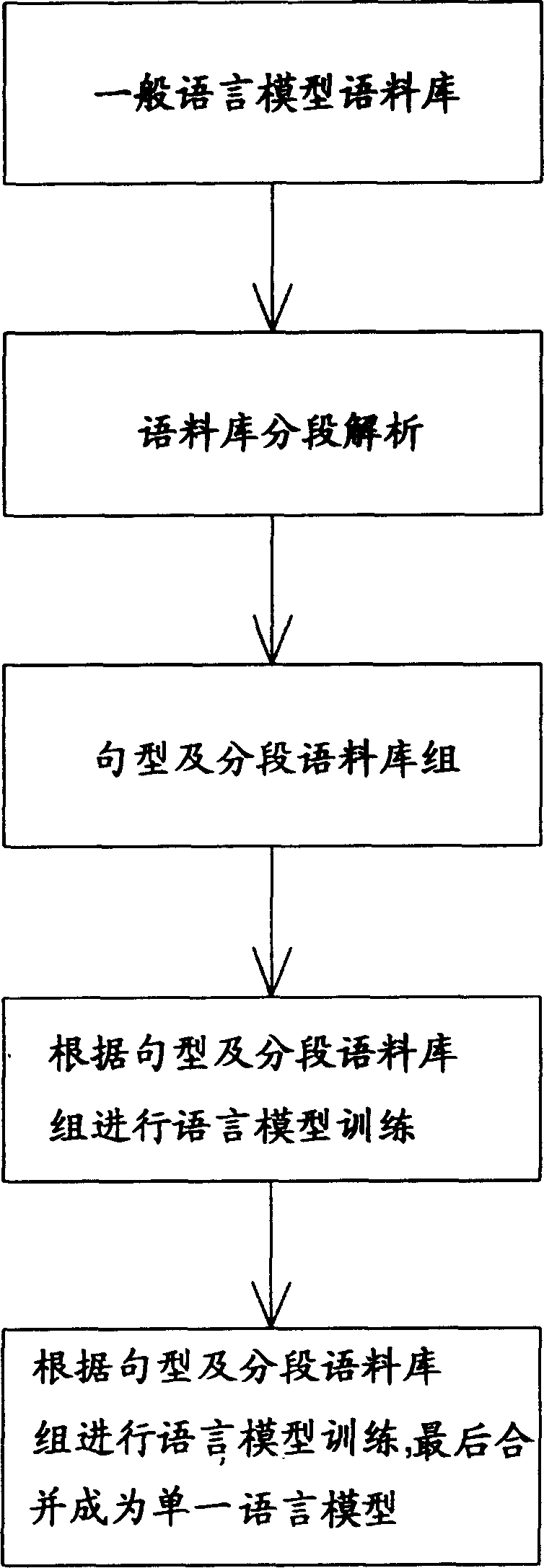

A speech recognition and language understanding analysis device consists of a speech recognition module, which receives a speech input and divides it into multiple sectional semantic units according to a concept multiple word square model of sectional semantics, and a language understanding analysis module, which analyzes those sectional semantic units according to a sectional grammar. A method using the device is also disclosed.

Owner:DELTA ELECTRONICS INC

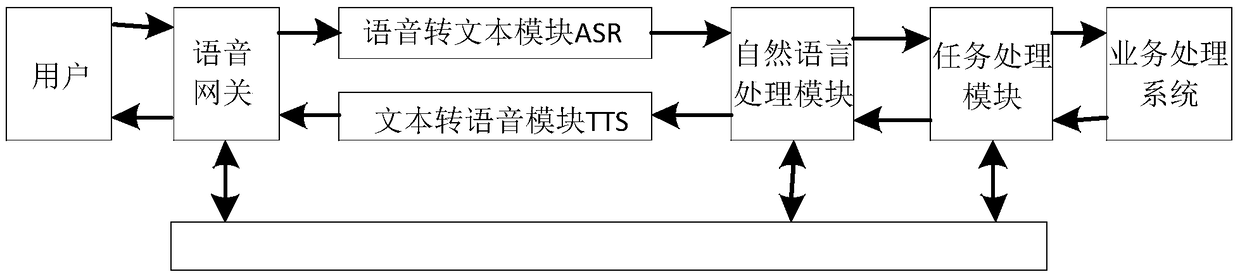

Smart interaction method for speech and text mutual conversion

Inactive · CN109361823A · Tags: Improve experience, Improve service capabilities, Semantic analysis, Automatic exchanges, Speech comprehension, Process module

The embodiment of the invention discloses a smart interaction method for mutual speech-text conversion. The method comprises the following steps: a speech command from a user is sent to a speech gateway through the public switched telephone network (PSTN); the speech gateway sends the speech information to a speech-to-text (ASR) module for speech recognition and conversion to text; the text is transmitted to a natural language processing (NLP) module for semantic comprehension; the semantic comprehension result is transmitted to a task processing module, which matches a logical task with relevant knowledge; a user service processing system performs service processing according to the matching result and outputs a service processing result, which is transmitted back to the task processing module; the processed result data are transmitted to the NLP module for speech comprehension interaction; the resulting text is synthesized into speech by a text-to-speech (TTS) module; and the synthesized speech is used to interact with the user through the speech gateway. The method addresses the small data-processing capacity, high running cost, and poor user experience of existing enterprise hotline service systems.

Owner:深圳市一号互联科技有限公司

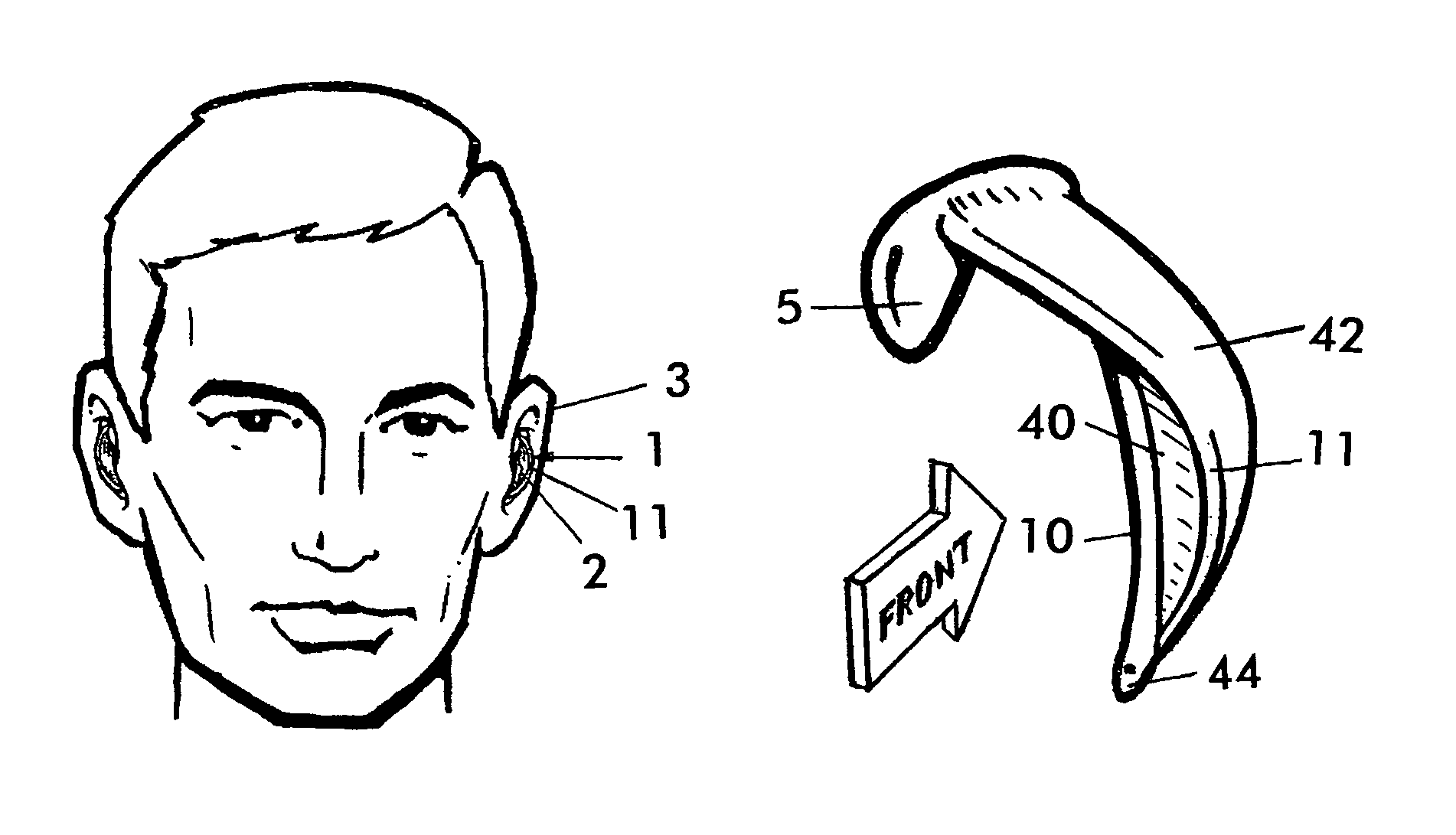

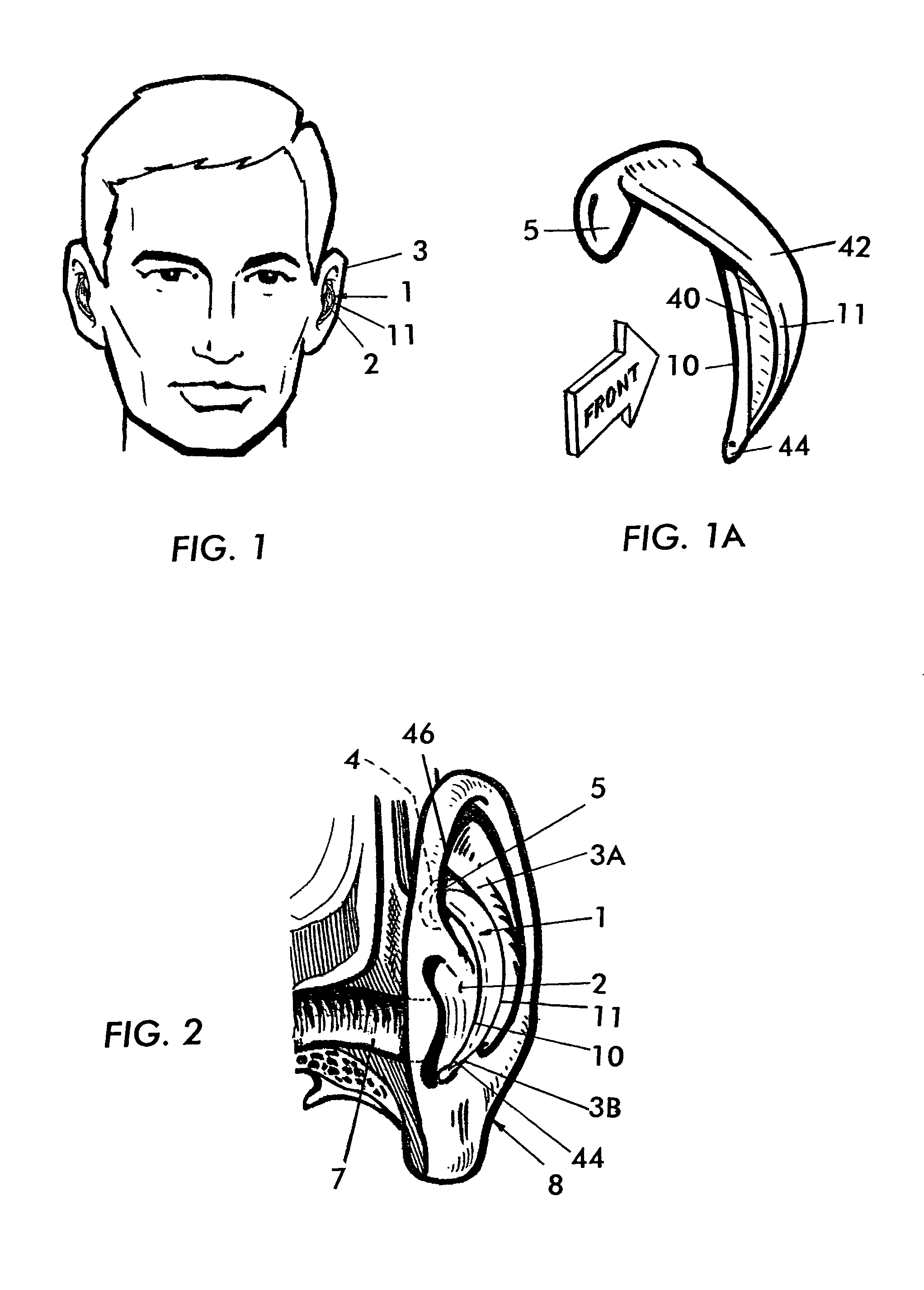

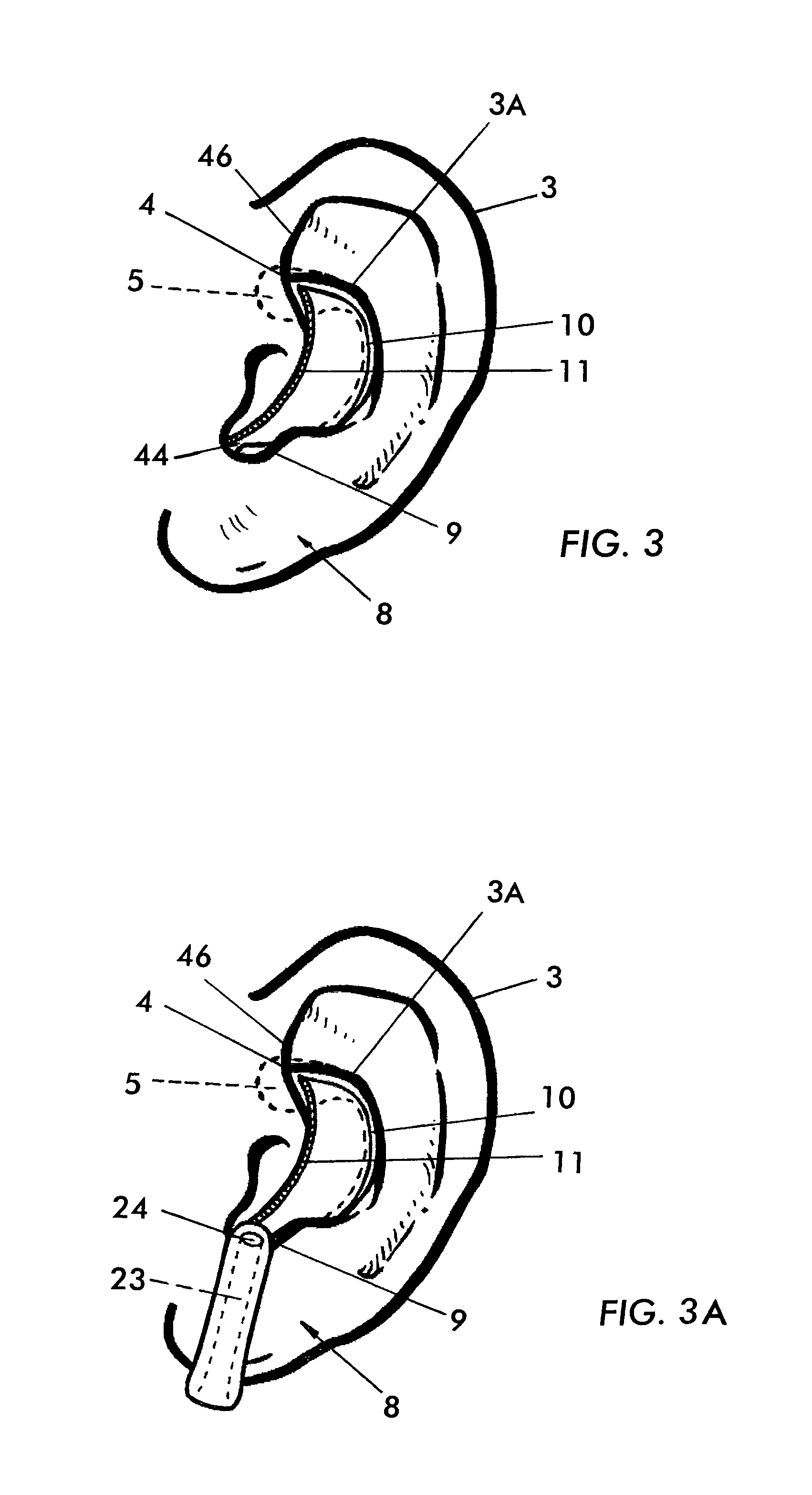

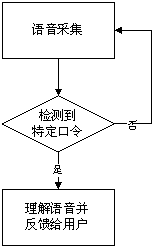

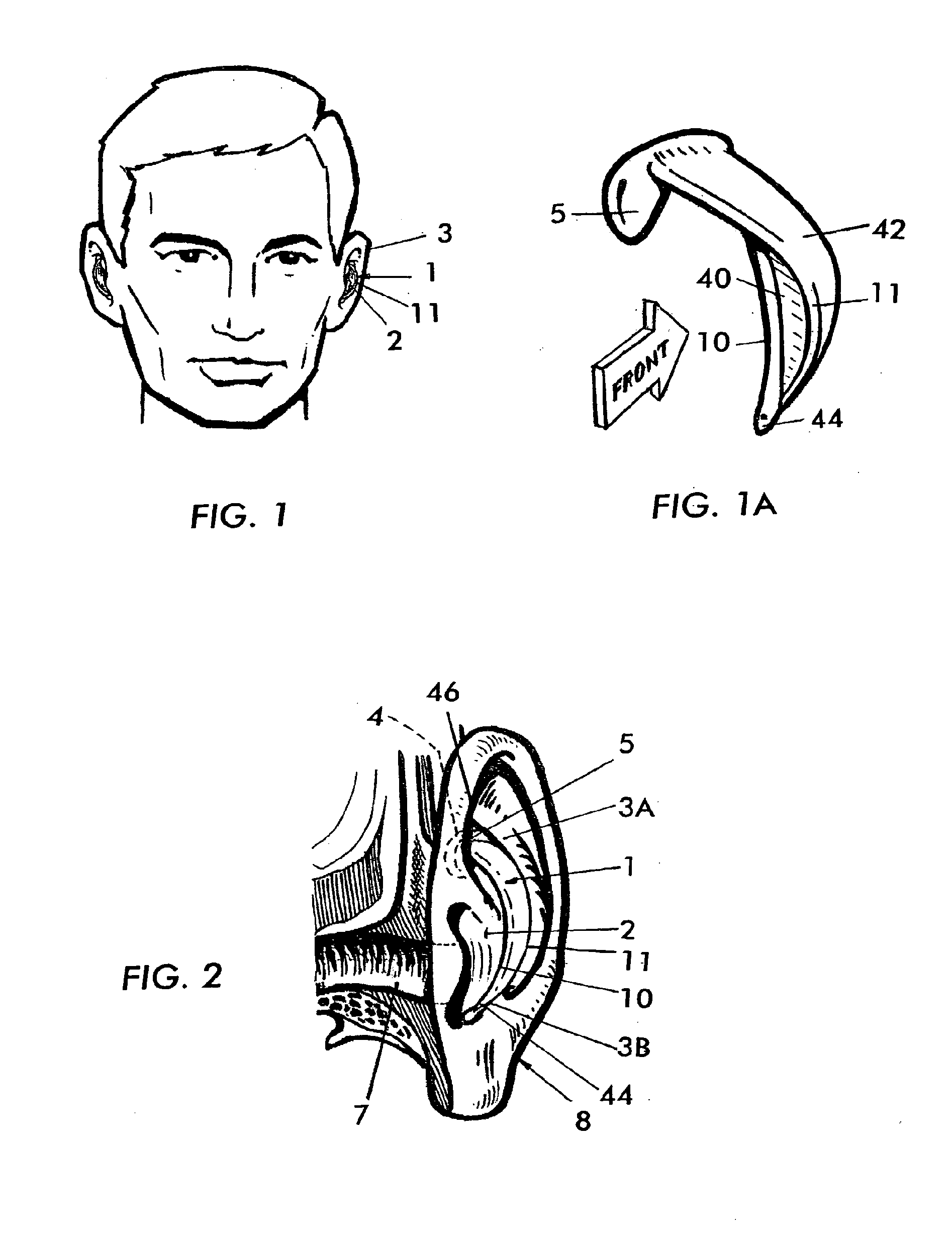

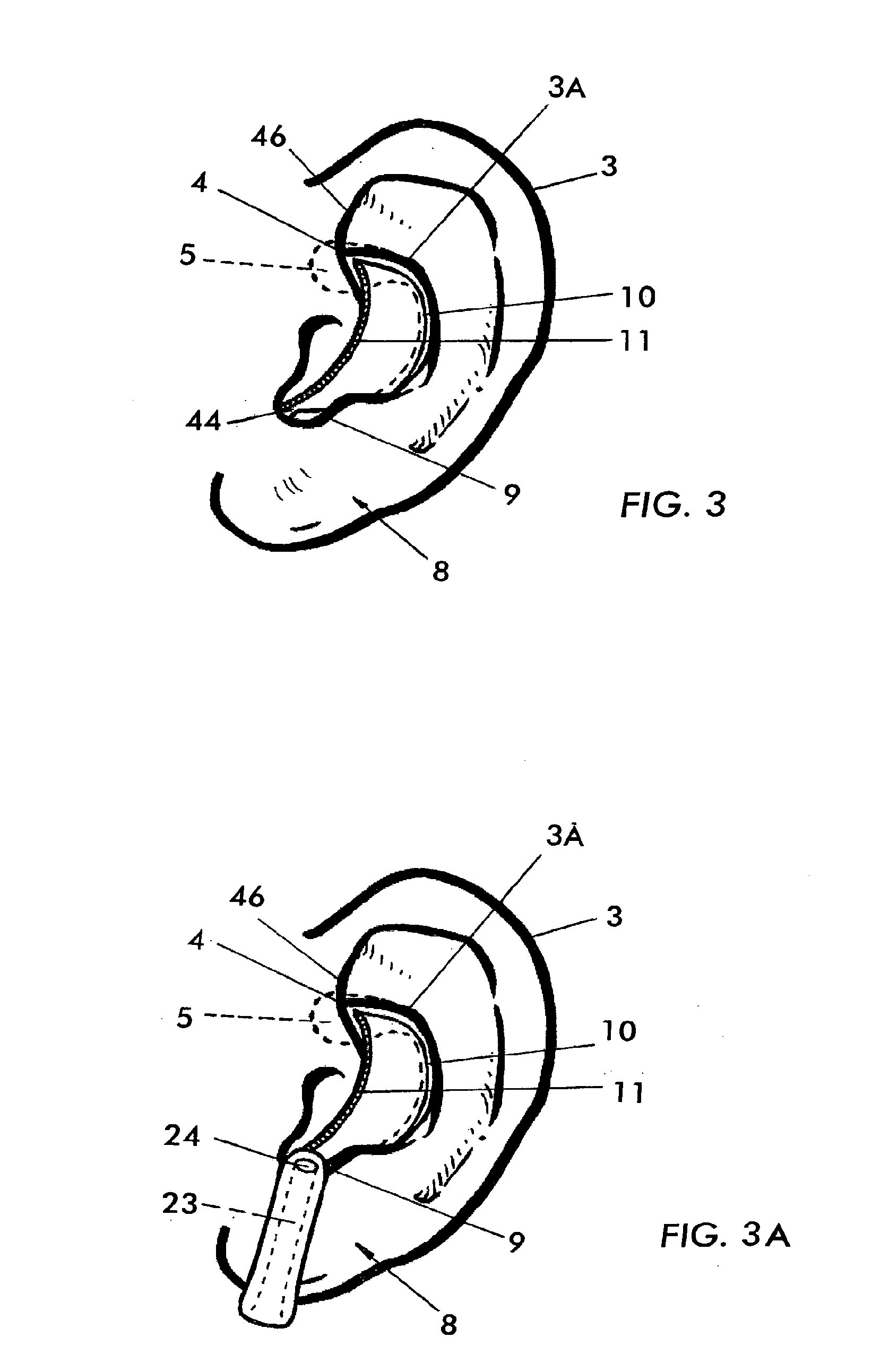

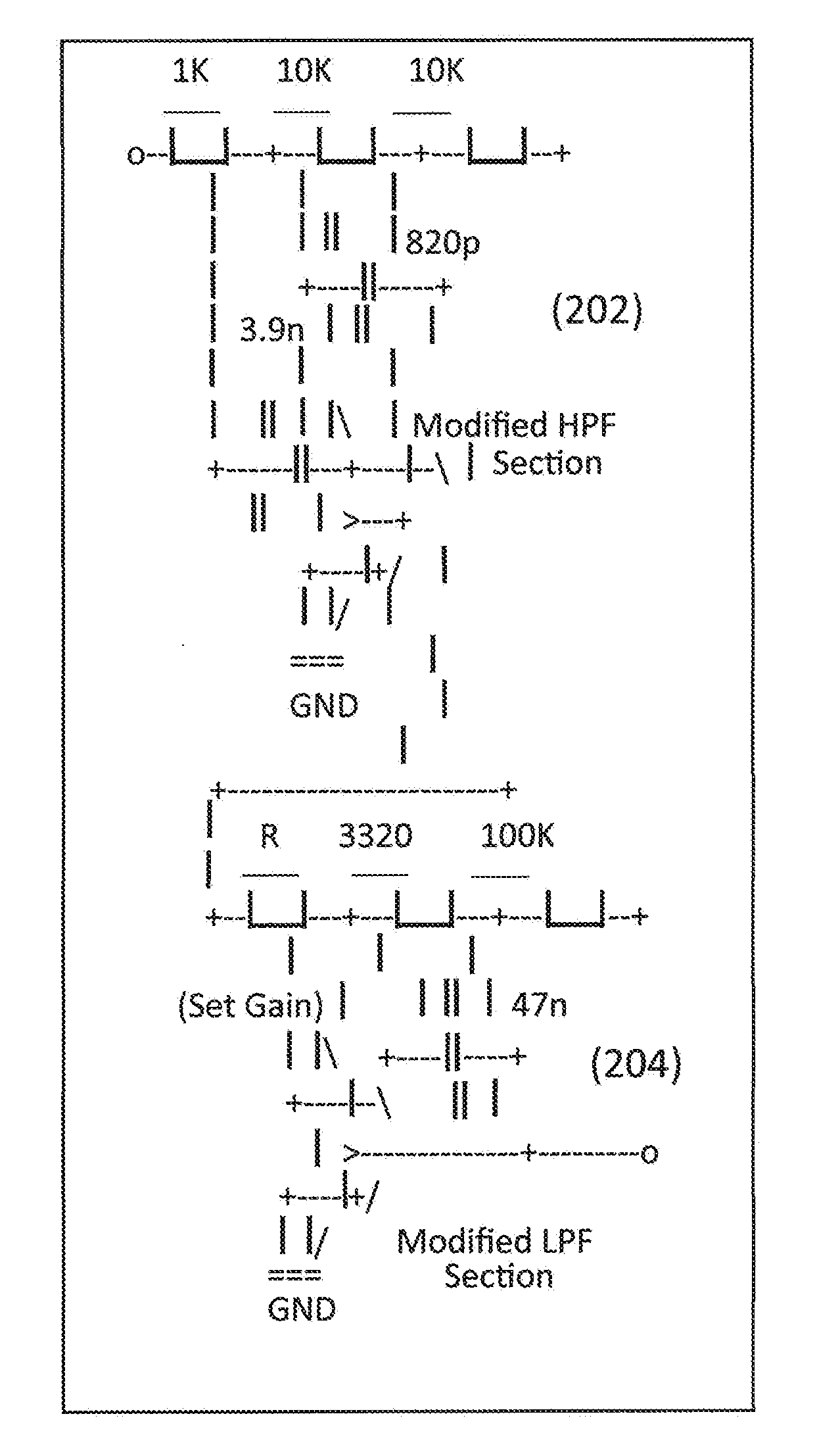

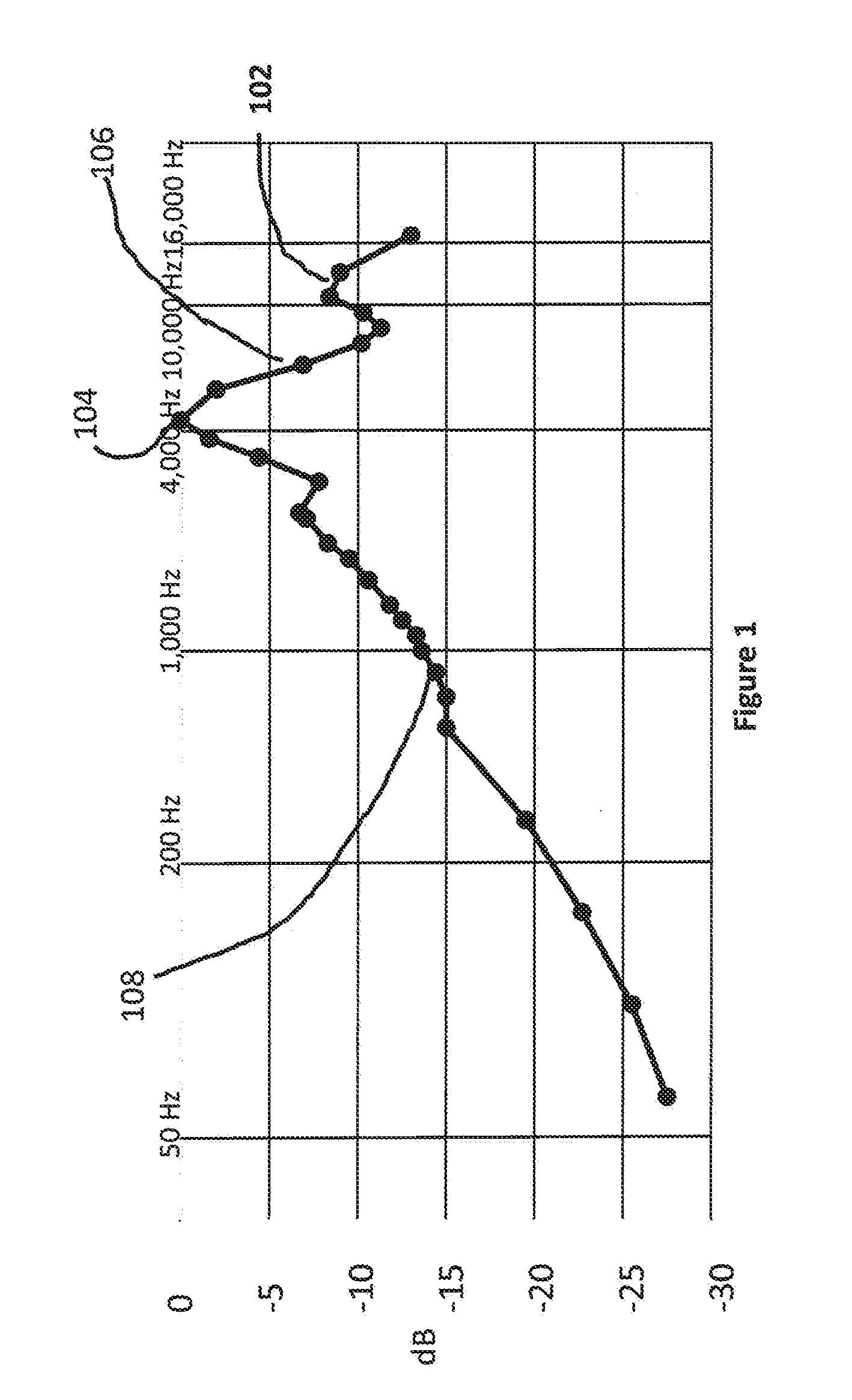

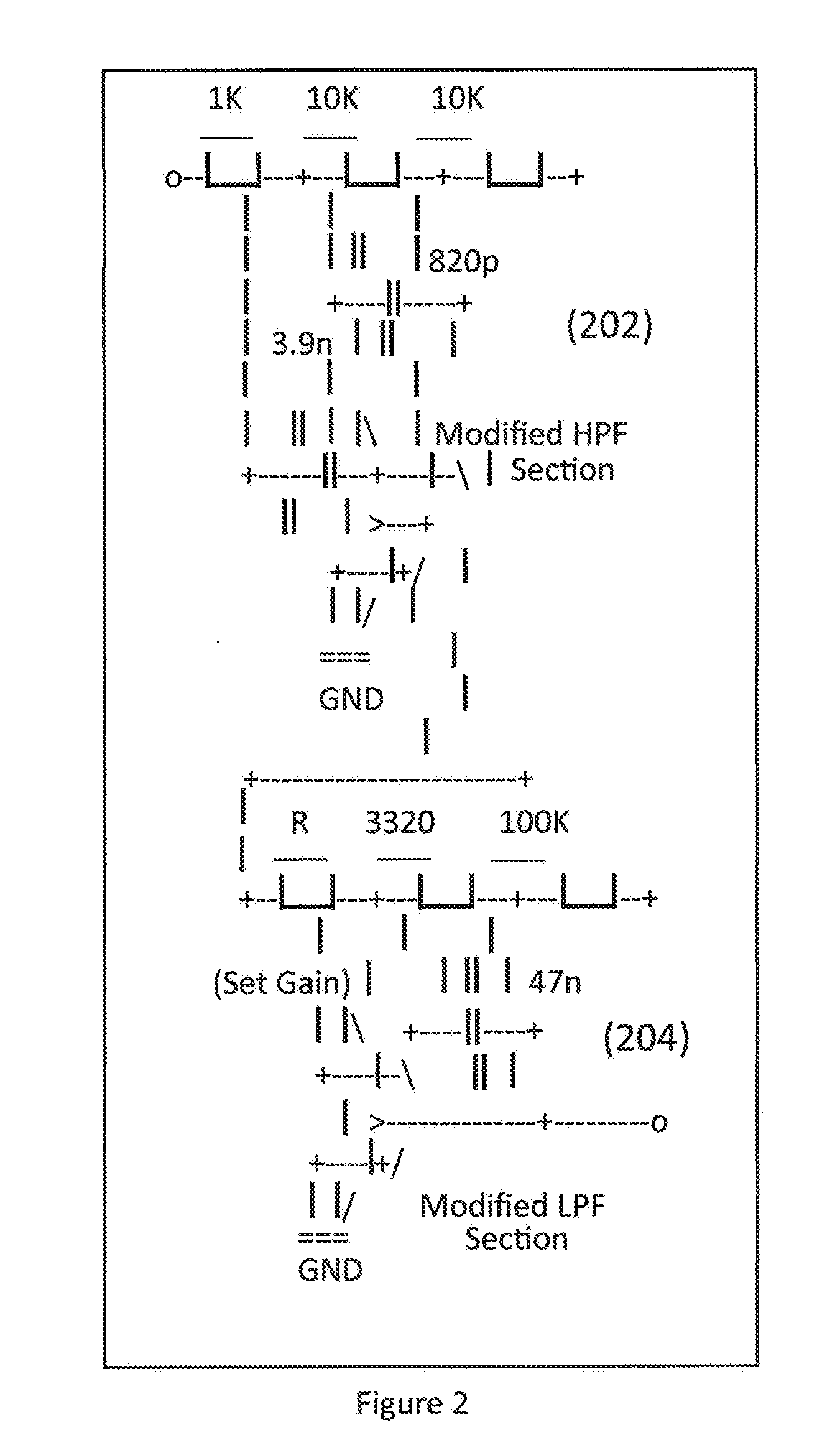

External ear insert for hearing comprehension enhancement

Inactive · US7916884B2 · Tags: Enhanced ear passage gain, Improve speech comprehension, Ear supported sets, In the ear hearing aids, Speech comprehension, Electrophonic hearing

A simple hearing enhancement device that takes the normally adequately loud sound levels and optimizes selective frequency gain of the patient's ear passage to improve speech comprehension.

Owner:KAH JR CARL L C

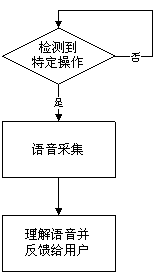

Method for voice interaction with intelligent voice device

Inactive · CN104253902A · Tags: Improve experience, Interactive nature, Input/output for user-computer interaction, Substation equipment, Speech comprehension, Engineering

Owner:宋婉毓

External ear insert for hearing comprehension enhancement

Inactive · US20110150258A1 · Tags: Improve speech comprehension, Need less, Ear supported sets, In the ear hearing aids, Speech comprehension, Hearing perception

A simple hearing enhancement device that takes the normally adequately loud sound levels and optimizes selective frequency gain of the patient's ear passage to improve speech comprehension.

Owner:KAH JR CARL L C

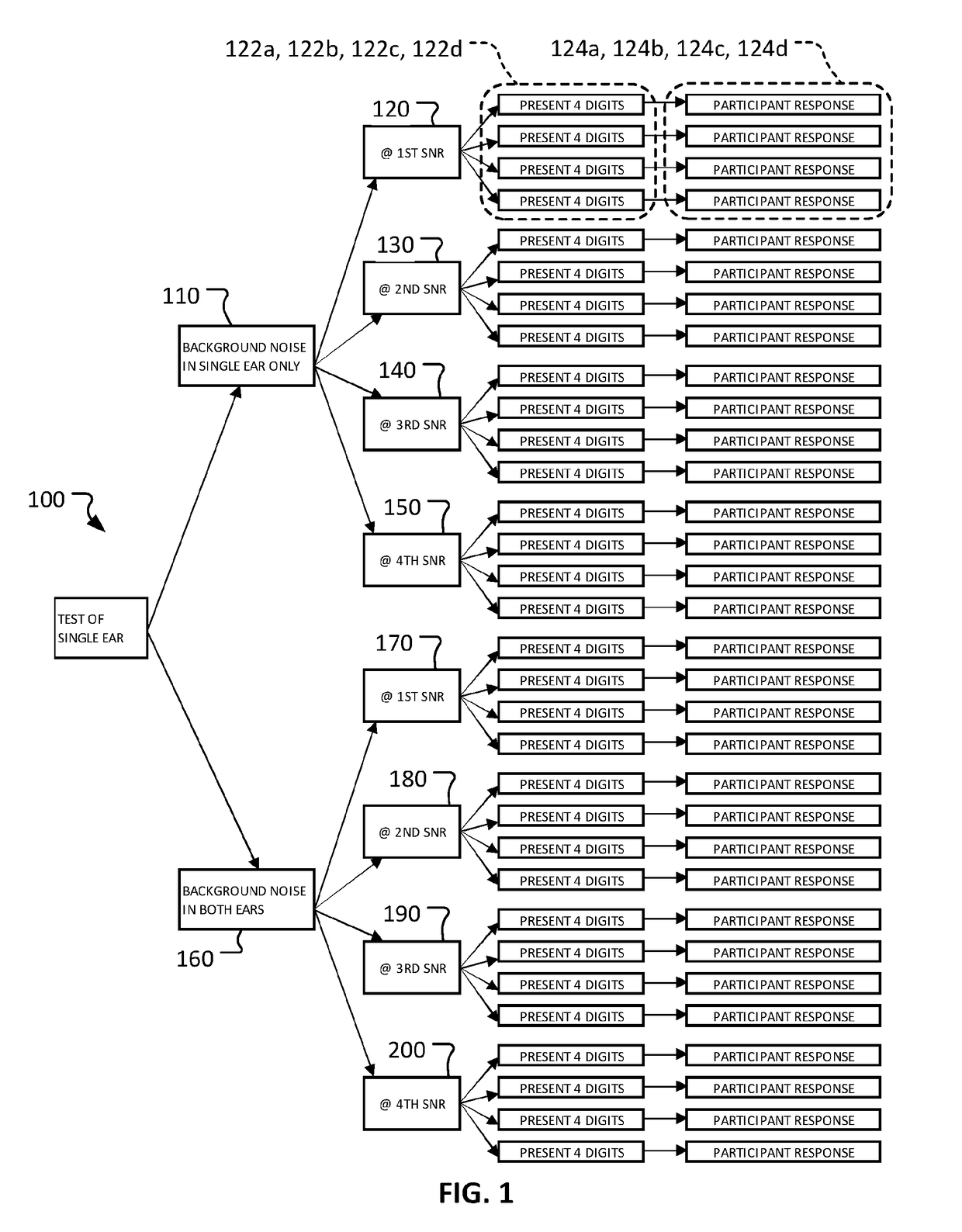

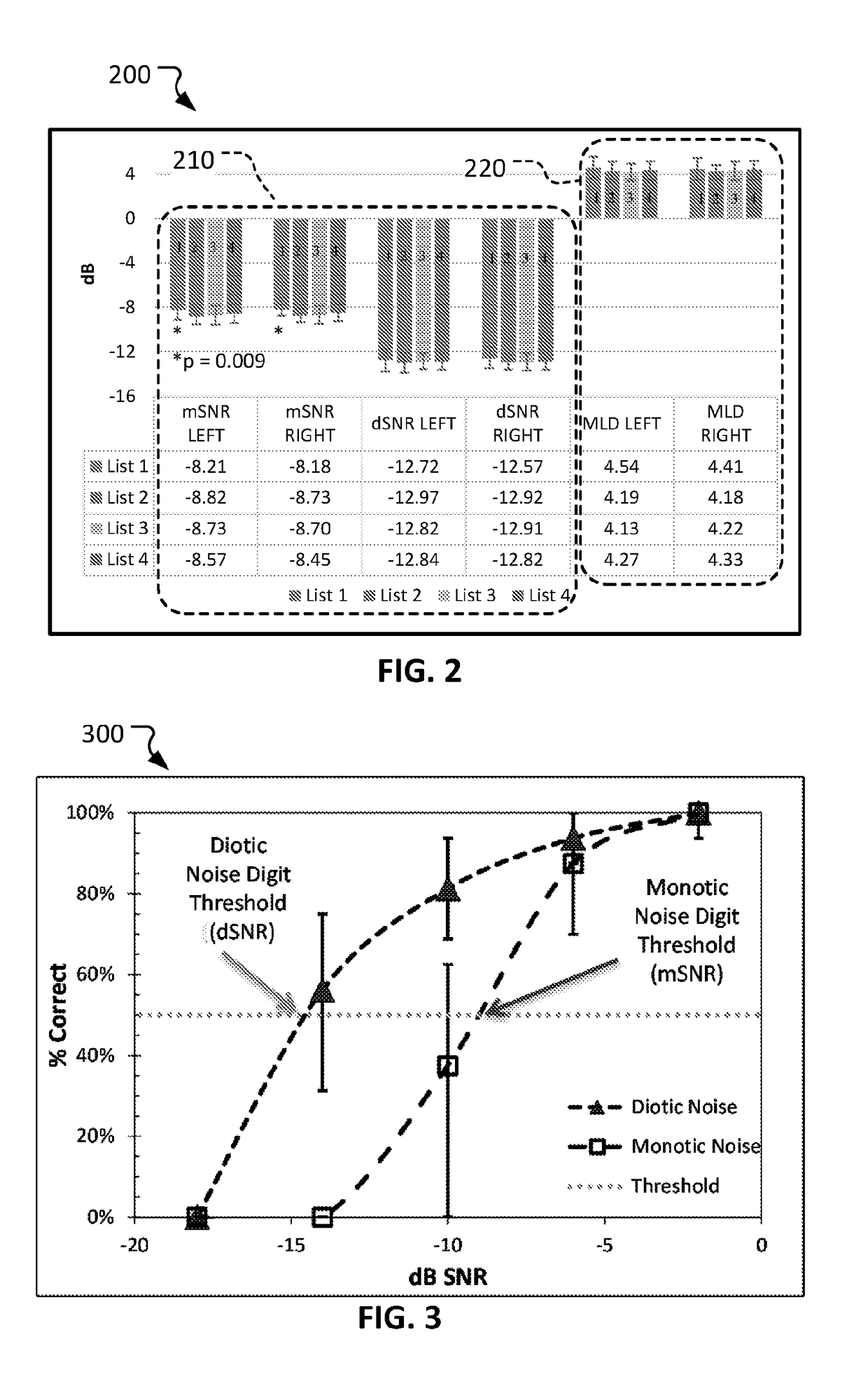

Audiology testing techniques

Active · US20190069811A1 · Tags: Accurate hearing assessment, Function use, Audiometering, Sensors, Speech comprehension, Hearing test

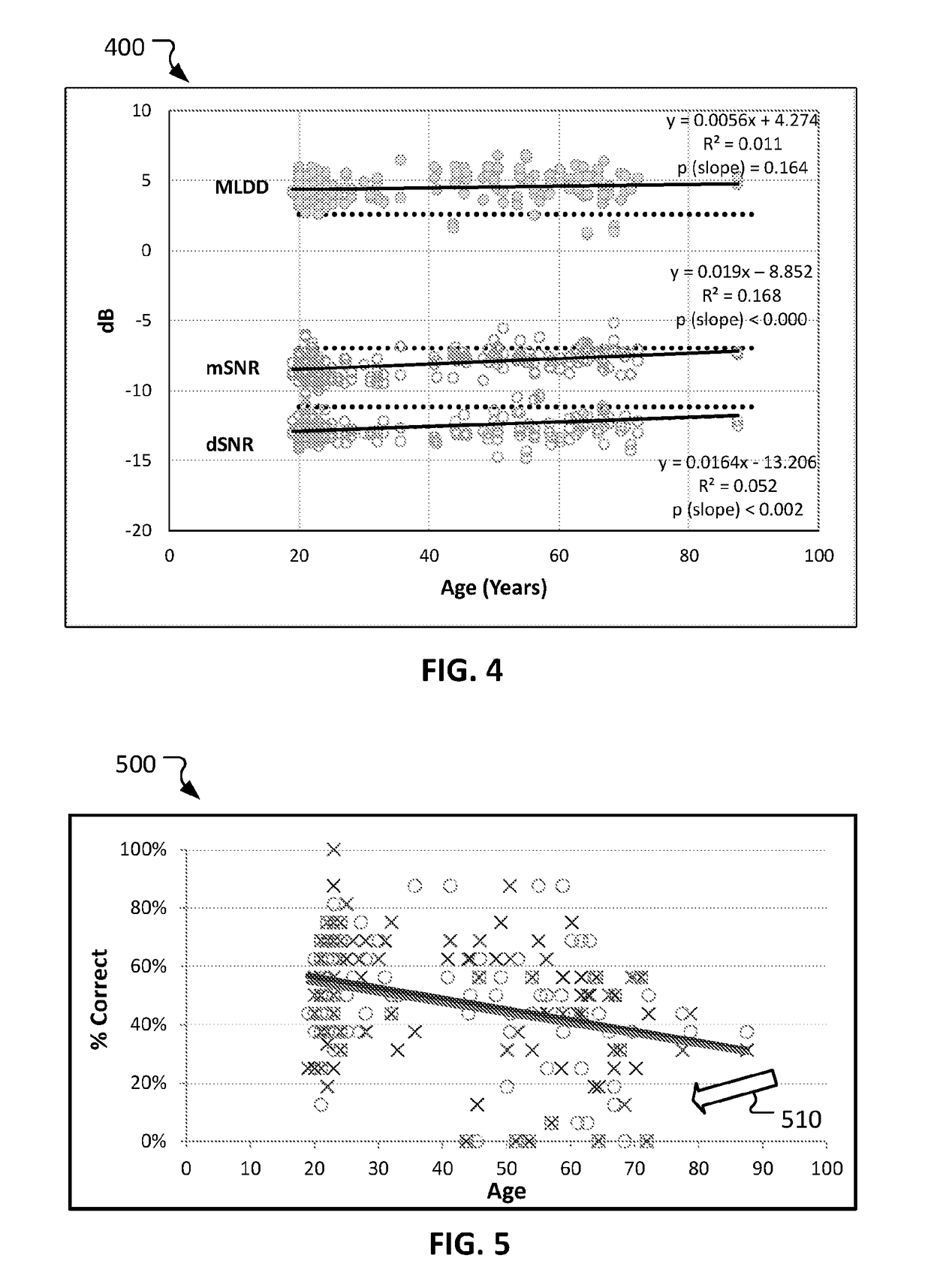

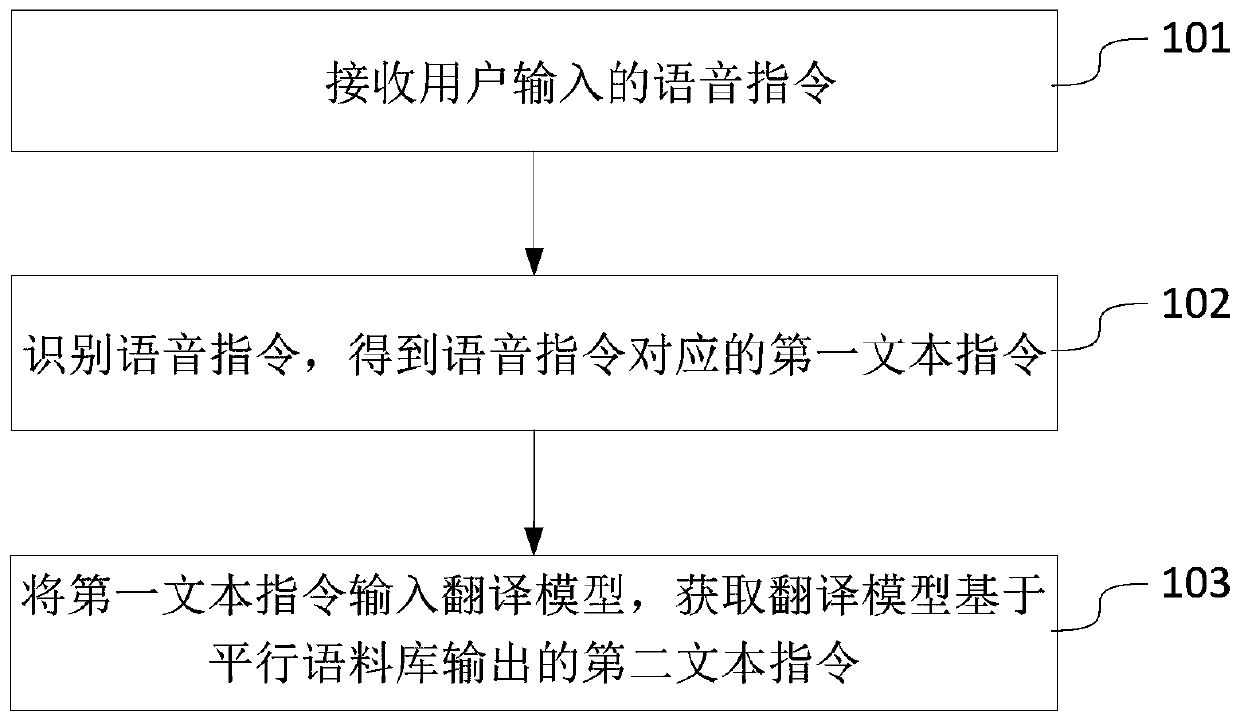

Speech understanding in the presence of background noise can be assessed using novel hearing tests. One such test is a Masking Level Difference with Digits (MLDD) test, which is a clinical tool designed to measure auditory factors that influence the understanding of speech presented within a background of noise. The MLDD test can be used clinically to facilitate a determination of functional hearing. The MLDD test presents background noise using both monotic and diotic conditions.

Owner:MAYO FOUND FOR MEDICAL EDUCATION & RES
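
A masking level difference is conventionally reported as the difference, in dB, between speech-in-noise thresholds measured under two listening conditions. The sketch below estimates each threshold by linear interpolation of proportion-correct scores at a 50% target and subtracts them. The interpolation method, the 50% target, and the example scores are assumptions for illustration, not the Mayo MLDD scoring procedure.

```python
from typing import Sequence


def threshold_snr(snrs_db: Sequence[float], p_correct: Sequence[float], target: float = 0.5) -> float:
    """SNR at which the proportion correct crosses `target`, by linear interpolation."""
    points = sorted(zip(snrs_db, p_correct))
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 != y1 and (y0 - target) * (y1 - target) <= 0:
            return x0 + (target - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("target proportion is not bracketed by the measurements")


def masking_level_difference(
    ref_snrs: Sequence[float], ref_pc: Sequence[float],
    cmp_snrs: Sequence[float], cmp_pc: Sequence[float],
) -> float:
    """Reference-condition threshold minus comparison-condition threshold (dB)."""
    return threshold_snr(ref_snrs, ref_pc) - threshold_snr(cmp_snrs, cmp_pc)


# Hypothetical digit-recognition scores at several SNRs for two conditions.
mld = masking_level_difference([-12, -9, -6, -3], [0.1, 0.3, 0.7, 0.9],
                               [-18, -15, -12], [0.2, 0.4, 0.8])
print(f"MLD = {mld:.2f} dB")
```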

Voice understanding method and device, electronic equipment and medium

Active · CN110428813A · Tags: Accurate operation, Speech recognition, Special data processing applications, Speech comprehension, User input

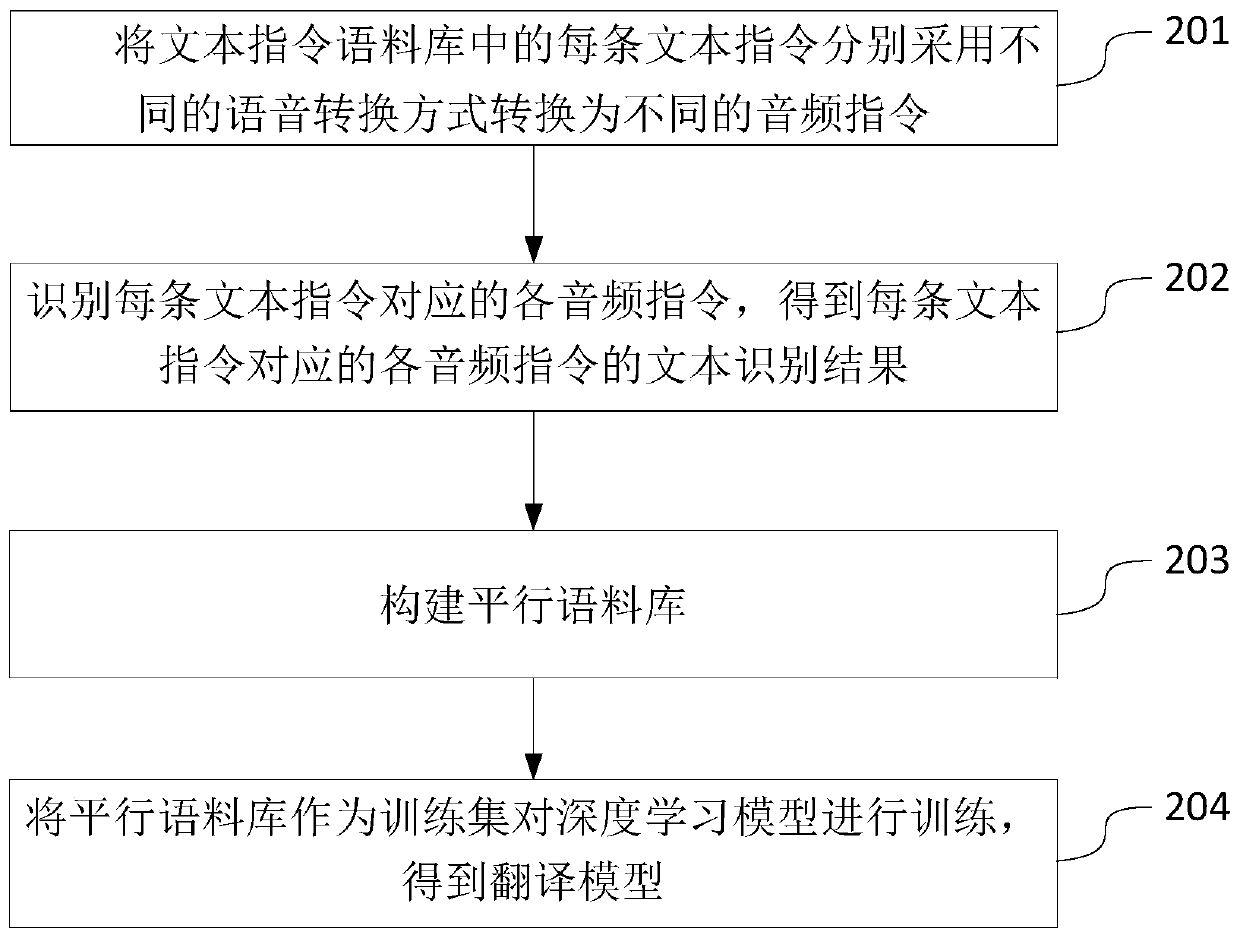

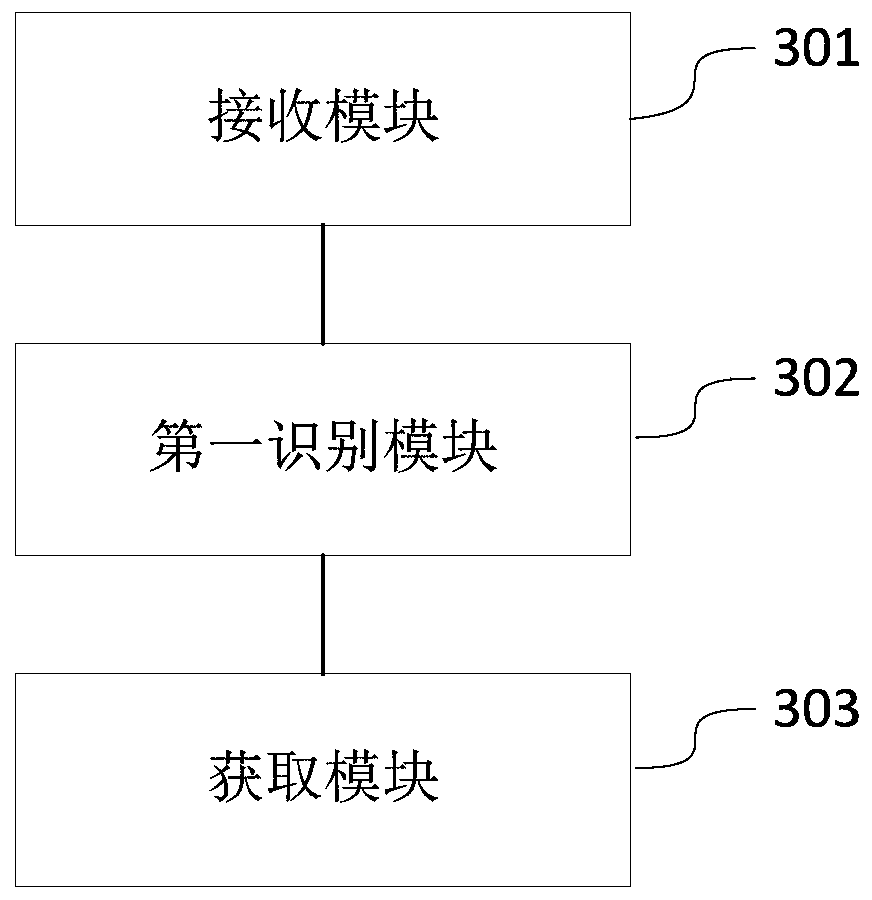

An embodiment of the invention provides a voice understanding method and device, electronic equipment, and a medium, relating to the technical field of multimedia content interaction and used to correctly understand a user's voice instruction. The scheme comprises: receiving the voice instruction input by the user; recognizing the voice instruction to obtain a corresponding first text instruction; and inputting the first text instruction into a translation model to obtain a second text instruction output by the translation model, where the translation model is trained on a parallel corpus.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD

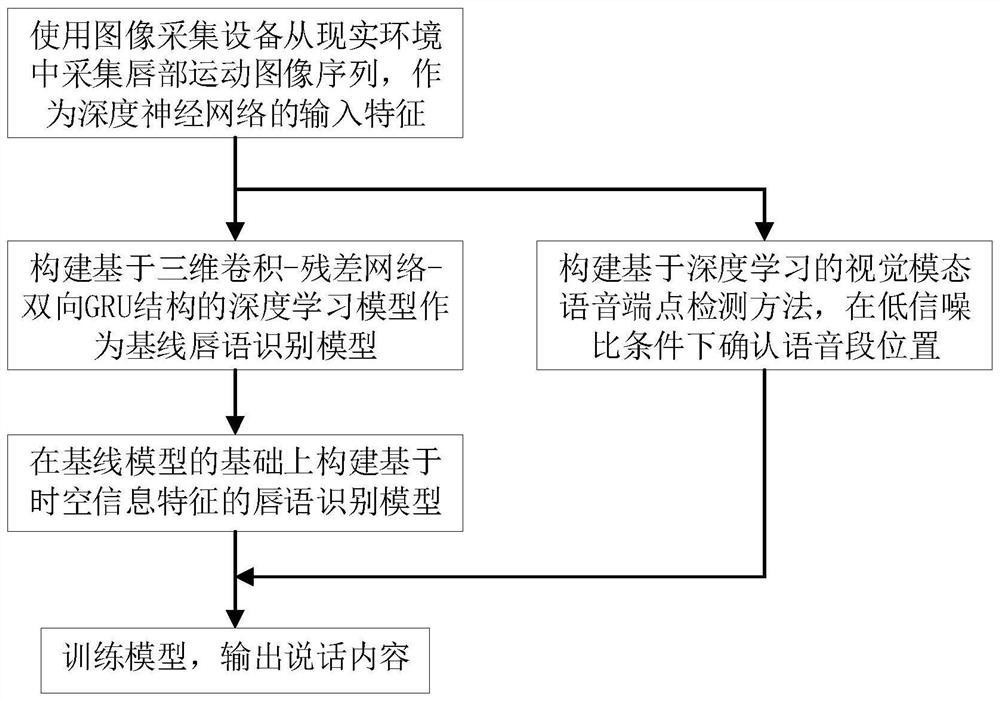

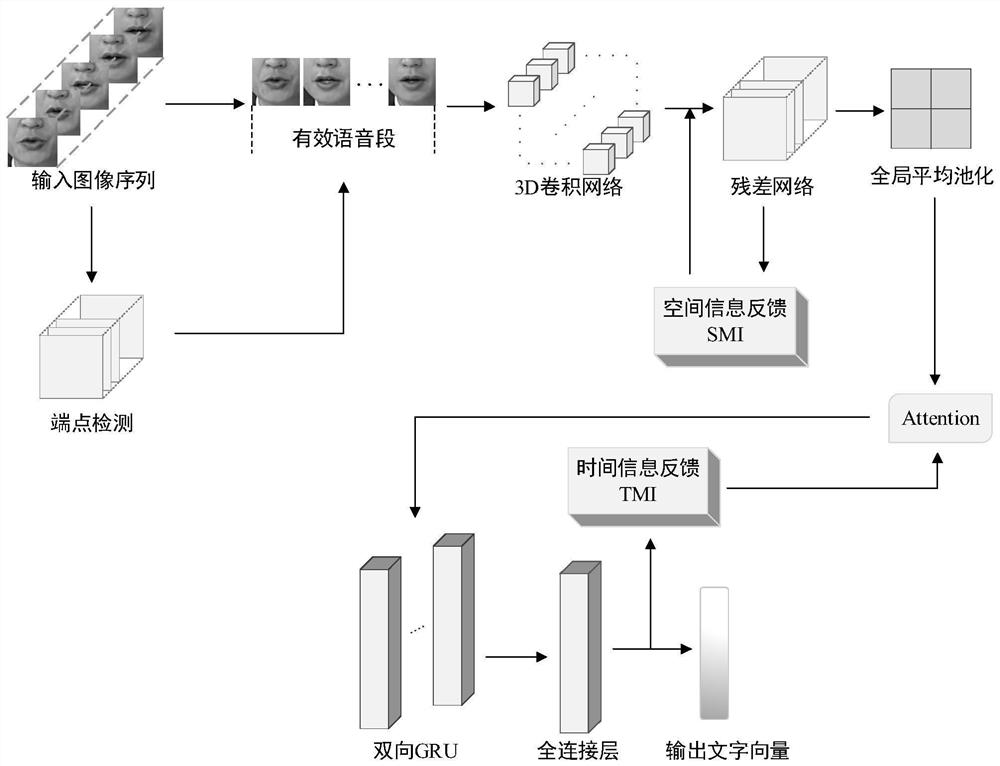

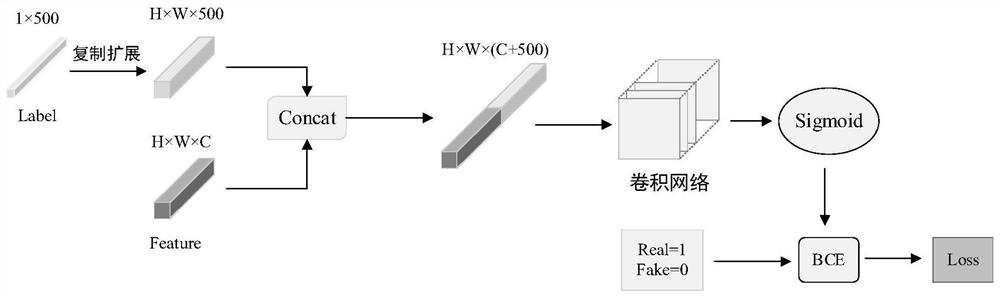

Lip language recognition-based method for improving speech comprehension degree of patient with severe hearing impairment

Pending · CN112330713A · Tags: Improve robustness, Improve accuracy, Image enhancement, Image analysis, Language understanding, Time information

The invention discloses a lip language recognition-based method for improving the speech comprehension of patients with severe hearing impairment. The method comprises the following steps: collecting a lip motion image sequence from a real environment with image collection equipment and using it as the input feature of a deep neural network; constructing a deep-learning-based visual-modality voice endpoint detection method to locate speech segments under low signal-to-noise-ratio conditions; constructing a baseline deep learning model with a three-dimensional convolution, residual network, and bidirectional GRU structure; building, on this baseline, a lip language recognition model based on spatio-temporal information features; and training the network with cross-entropy loss and recognizing the spoken content with the trained lip language recognition model. By capturing fine-grained features and temporal key frames of the lip images through spatio-temporal information feedback, the method improves adaptability to lip features in complex environments, improves lip language recognition performance, and improves the language comprehension of patients with severe hearing impairment, giving it good application prospects.

Owner:NANJING INST OF TECH

Front Enclosed In-Ear Earbuds

Inactive · US20170006380A1 · Tags: Small size, Increase signal level, Microphones, Loudspeakers, Speech comprehension, Proximal point

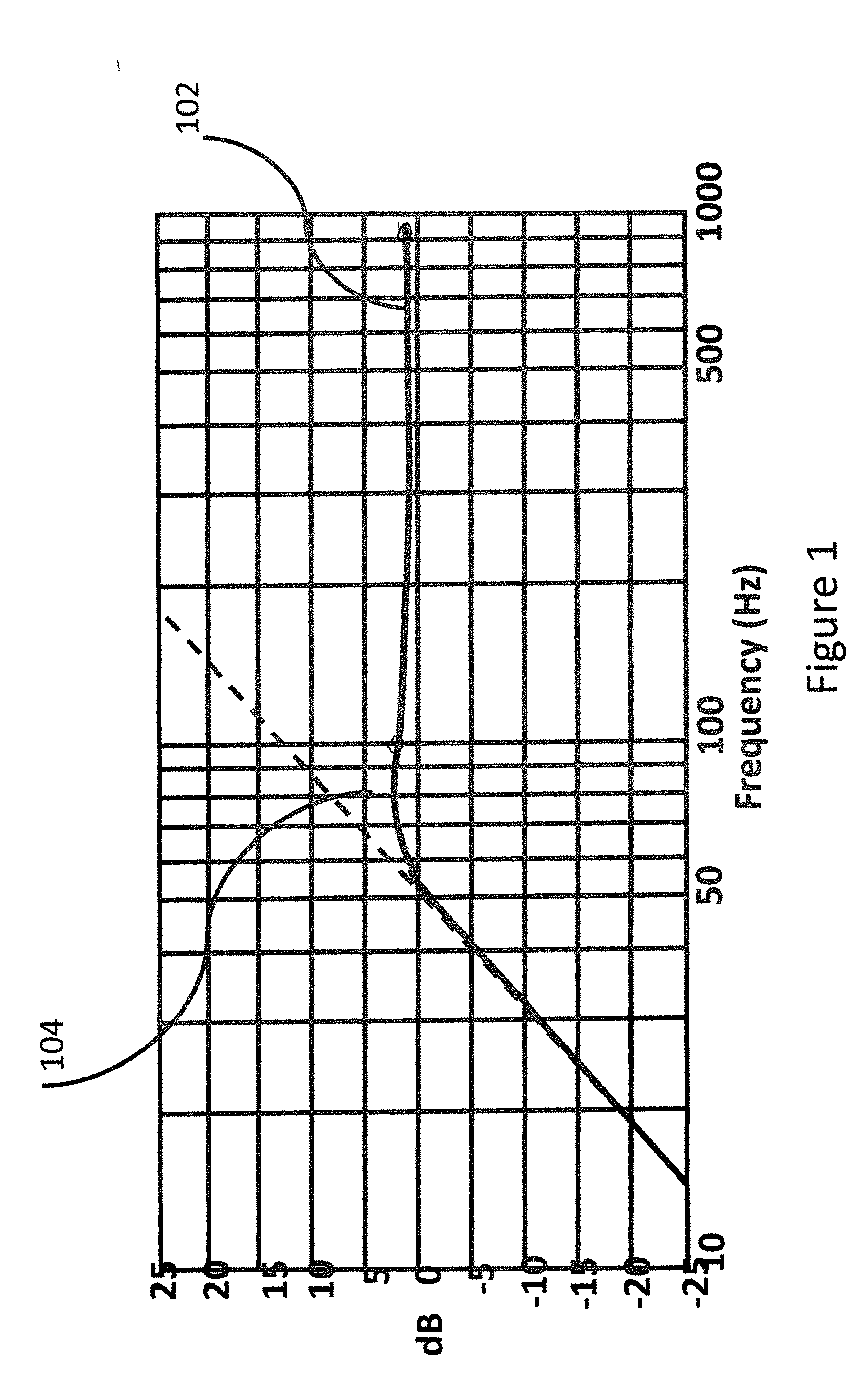

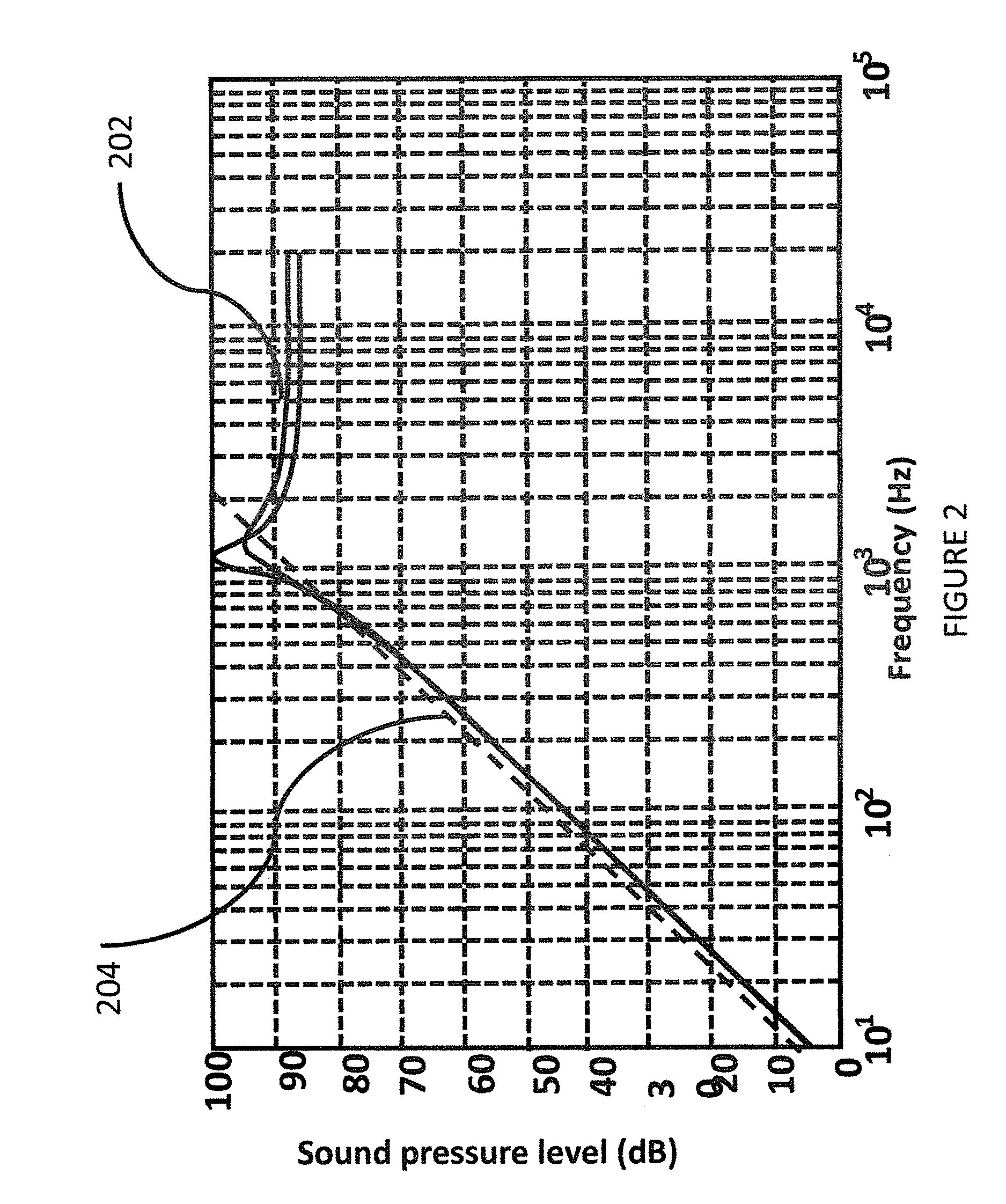

When an in-ear earbud is inserted into the ear canal, a sealed front enclosure is created. The enclosure volume is sealed at the near end by the in-ear earbud and at the far end by the eardrum. The air trapped in the ear canal provides a stiff reactive volume comparable to the sealed rear enclosure volume provided by the earbud housing. An in-ear earbud simulator that closely replicates the ear canal volume and shape permits real-use measurements of the in-ear earbud frequency response. Such measurements lead to a transfer function that provides a near-flat frequency response. The transfer function is implemented with first-order high-pass and first-order low-pass circuits, in either analog or digital form. The approach is also applied to speech comprehension enhancement for cell phone conversations.

Owner:GOBELI GARTH W +2
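
A digital version of the first-order high-pass and low-pass sections mentioned above can be sketched with the standard one-pole RC recurrences. The corner frequencies are hypothetical, and the sketch simply cascades the two sections; the abstract does not say how the sections are combined or tuned to the measured earbud response.

```python
import math
from typing import List, Sequence


def first_order_lowpass(x: Sequence[float], fc_hz: float, fs_hz: float) -> List[float]:
    """One-pole RC low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    rc = 1.0 / (2.0 * math.pi * fc_hz)
    dt = 1.0 / fs_hz
    a = dt / (rc + dt)
    y: List[float] = []
    prev = 0.0
    for s in x:
        prev += a * (s - prev)
        y.append(prev)
    return y


def first_order_highpass(x: Sequence[float], fc_hz: float, fs_hz: float) -> List[float]:
    """One-pole RC high-pass: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    rc = 1.0 / (2.0 * math.pi * fc_hz)
    dt = 1.0 / fs_hz
    alpha = rc / (rc + dt)
    y: List[float] = []
    prev_y, prev_x = 0.0, 0.0
    for s in x:
        prev_y = alpha * (prev_y + s - prev_x)
        prev_x = s
        y.append(prev_y)
    return y


def earbud_correction(x: Sequence[float], fs_hz: float,
                      hp_hz: float = 100.0, lp_hz: float = 8000.0) -> List[float]:
    """Cascade of the two first-order sections (corner frequencies are hypothetical)."""
    return first_order_lowpass(first_order_highpass(x, hp_hz, fs_hz), lp_hz, fs_hz)


corrected = earbud_correction([0.0, 1.0, 0.0, -1.0] * 100, fs_hz=48000.0)
```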

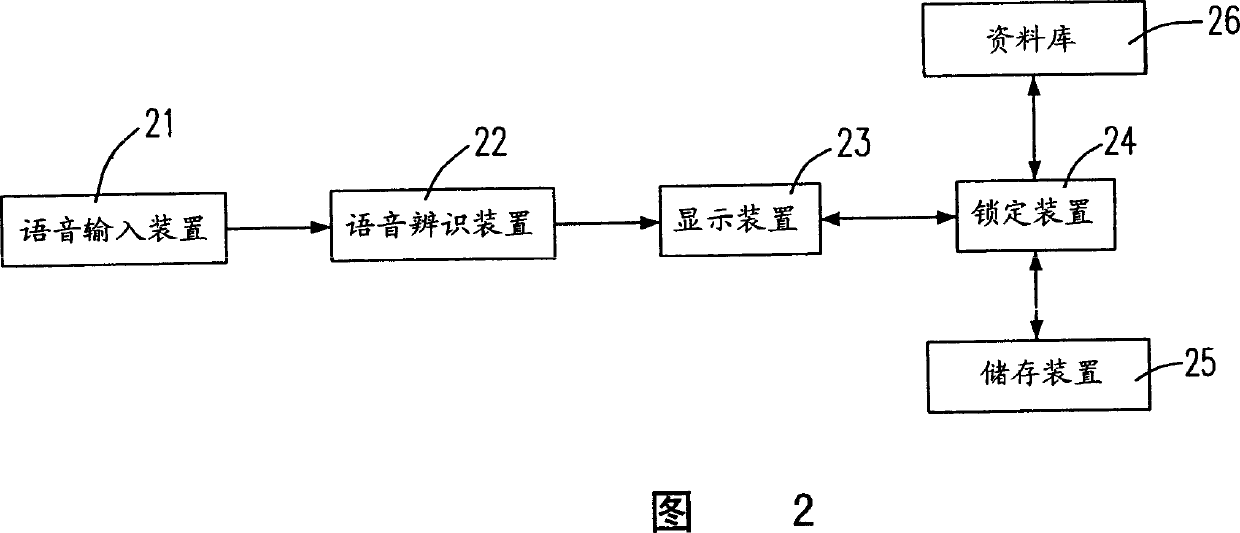

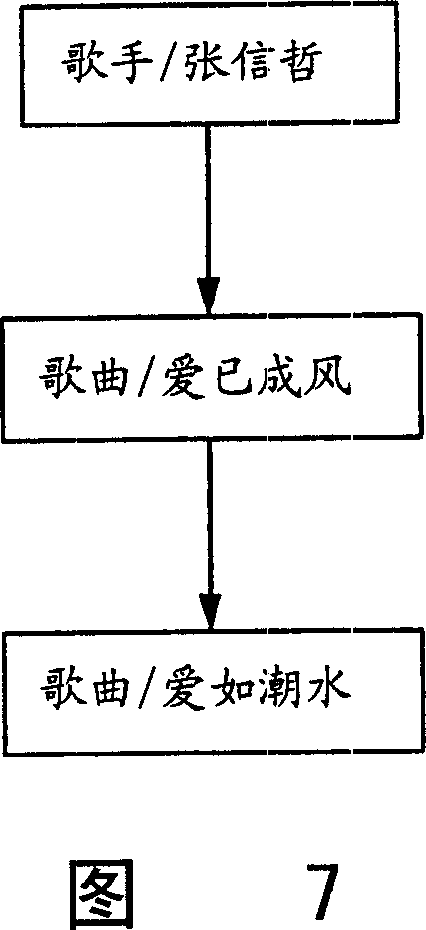

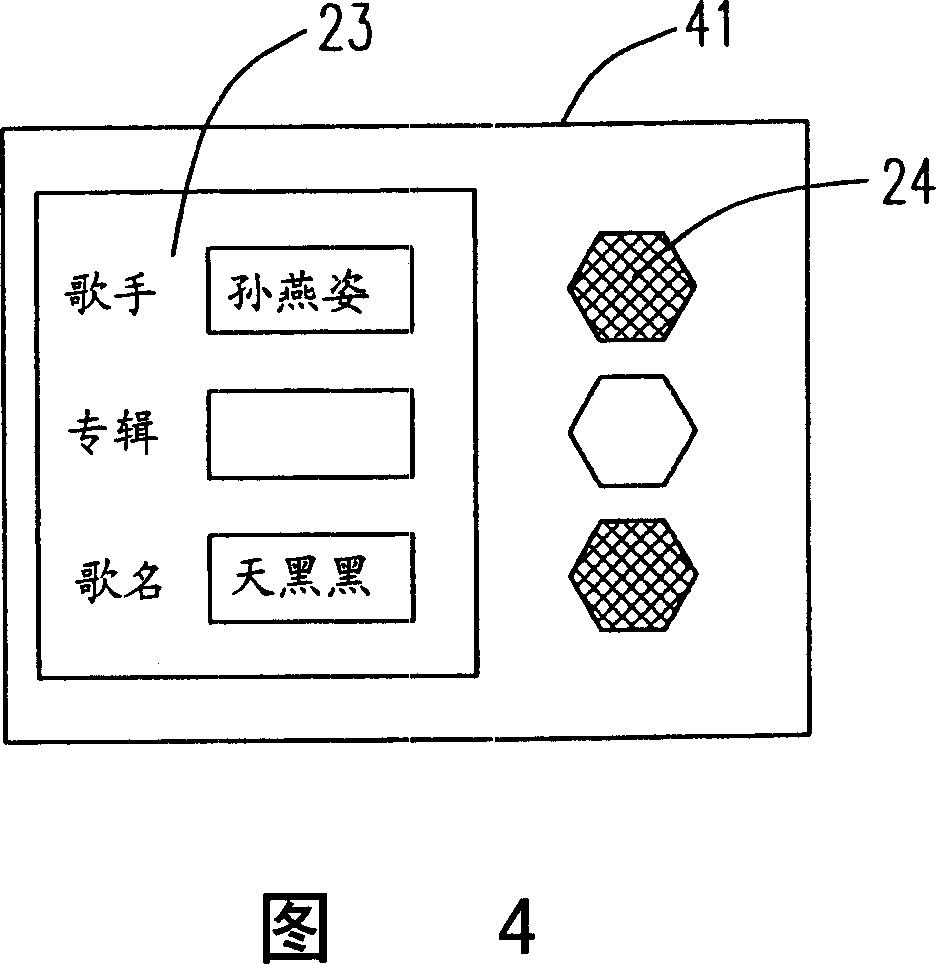

Speech identifying method and system

Inactive · CN1825431B · Tags: Reach search, Will not affect viewing, Natural language data processing, Speech recognition, Digital video, Speech comprehension

The invention is a voice recognition method and system comprising: (a) receiving a user's voice and recognizing it to produce multiple recognition results; (b) displaying these results so the user can select the correct ones; (c) determining whether the selected results are sufficient; (d) if not, storing them as known results, narrowing the recognition range, and repeating steps (a) to (c); (e) if so, searching data according to the selected results. The interactive voice comprehension component of the invention serves as the main human-machine interface and can search large amounts of data quickly and effectively. Its applications include small-screen devices such as compact digital video/audio storage and playback devices, MP3 players, and smartphones.

Owner:DELTA ELECTRONICS INC

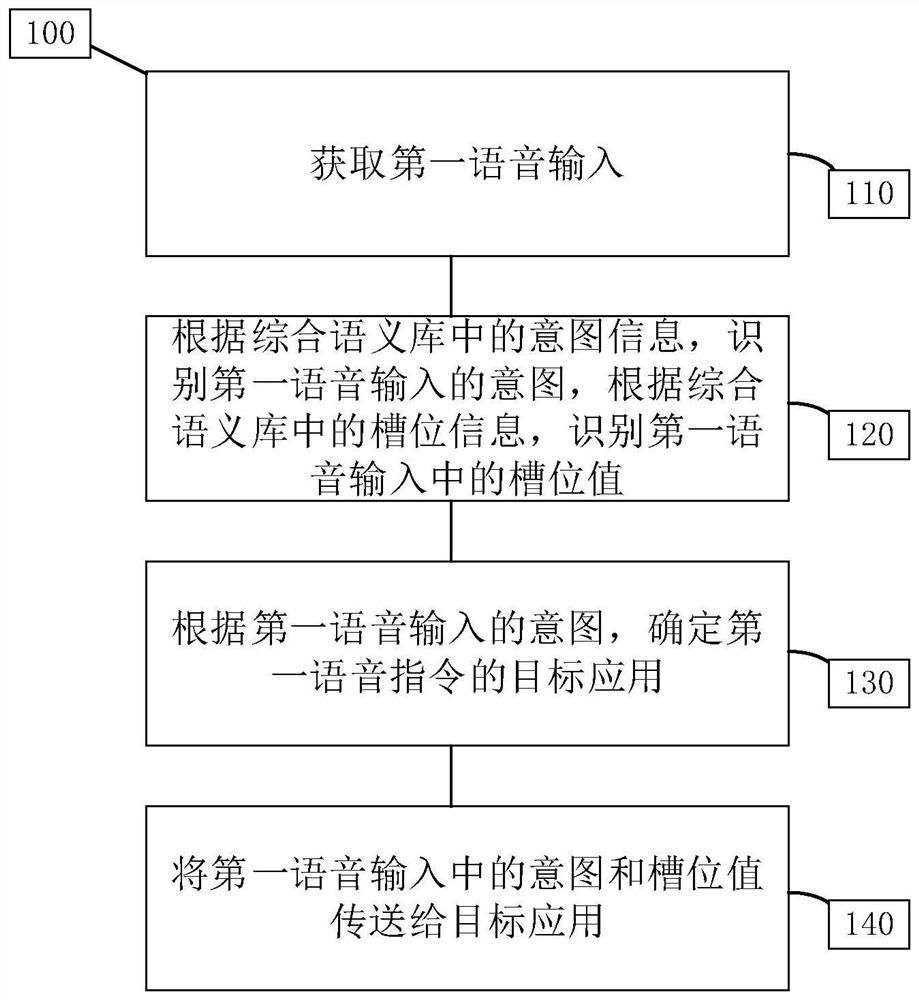

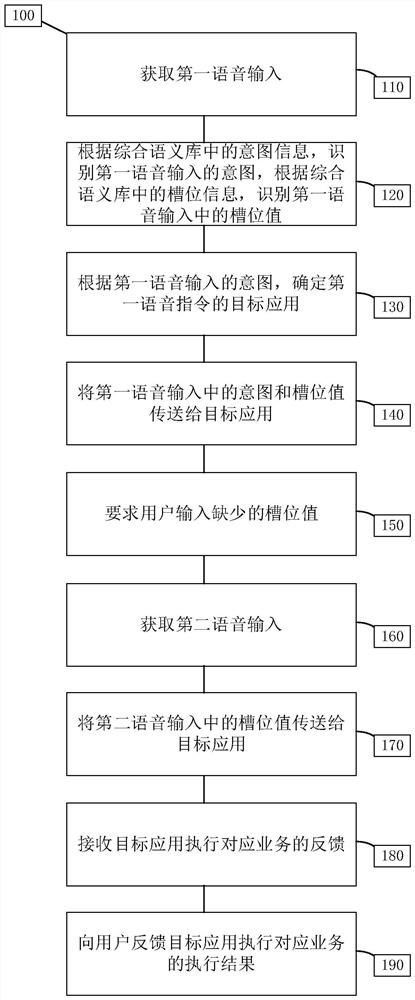

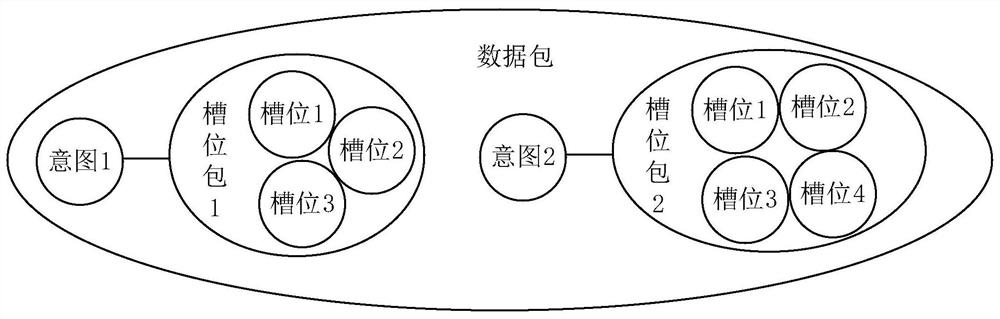

Voice understanding method and device

Active · CN112740323A · Tags: Avoid repetition, Semantic analysis, Speech recognition, Natural language understanding, Speech comprehension

The invention relates to the field of natural language understanding, and provides a voice understanding method in an embodiment, which can be used in voice understanding equipment arranged on a cloud side or an end side to understand voice information of a user and identify a target application corresponding to the intention of the user in the voice information. The voice understanding method comprises the following steps: acquiring voice information of a user, respectively identifying intention of the voice information and slot values in the voice information according to intention information and slot information from a plurality of registered applications stored in a comprehensive voice library, and matching applications corresponding to the voice information according to the intention of the voice information to obtain a voice understanding result; and the intention of the voice information and the slot value in the voice information are transmitted to the corresponding application. The voice understanding method can support flexible expression of a user, can be adapted to all registered applications, does not need to re-collect data, label data and train a model when adapting to a new application, and has the advantages of short adaptation period and low cost.

Owner:HUAWEI TECH CO LTD
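
The matching step can be sketched with a small in-memory registry standing in for the comprehensive voice library. The substring-based matching and the `RegisteredApp` structure are hypothetical simplifications of the intent and slot identification the abstract describes, but they show why a new application can be supported by registering its intents and slots rather than retraining a model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class RegisteredApp:
    name: str
    intents: Dict[str, List[str]]  # intent -> trigger phrases (hypothetical format)
    slots: Dict[str, List[str]]    # slot name -> allowed values


def understand(text: str, library: List[RegisteredApp]) -> Optional[Tuple[str, str, Dict[str, str]]]:
    """Return (app, intent, slot values) for the first registered intent whose
    trigger phrase appears in the text, or None if nothing matches."""
    lowered = text.lower()
    for app in library:
        for intent, triggers in app.intents.items():
            if any(trigger in lowered for trigger in triggers):
                slots = {name: value
                         for name, values in app.slots.items()
                         for value in values if value in lowered}
                return app.name, intent, slots
    return None


library = [
    RegisteredApp("music", {"play_song": ["play"]}, {"artist": ["adele", "queen"]}),
    RegisteredApp("maps", {"navigate": ["navigate to", "directions to"]},
                  {"place": ["airport", "station"]}),
]
print(understand("Navigate to the airport", library))
# Supporting a new app only requires registering its intents and slots.
library.append(RegisteredApp("alarm", {"set_alarm": ["wake me"]}, {"time": ["7 am"]}))
print(understand("Wake me at 7 am", library))
```

Substring matching is deliberately naive (for example, "station" would also match "stationery"); it only stands in for a real intent classifier and slot tagger.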

Corrections for Transducer Deficiencies

We describe a straightforward method / device that provides the “ideal” compensation to small micro-speaker acoustic transducers, such as those in current large-scale use in earbud headphones. This compensation results in an audio transducer that provides an output that precisely reproduces the input sound quality to the listener. The transducer thus has a “flat” reproduction for input sound; i.e. the reproduction is linear and independent of the sound frequency over a designated frequency range such as 20 Hz to 20,000 Hz. Applications for music appreciation and for speech comprehension enhancement for mobile phone communications are discussed.

Owner:GOBELI GARTH W +2

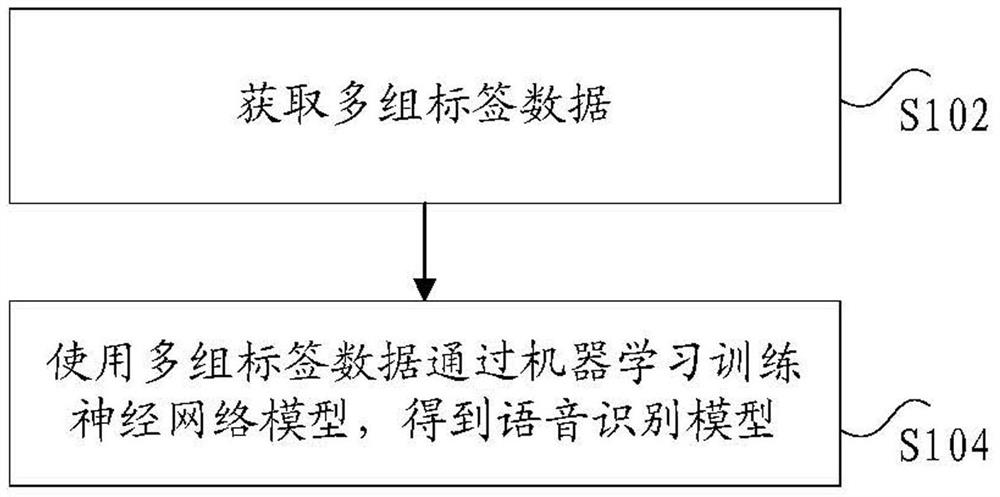

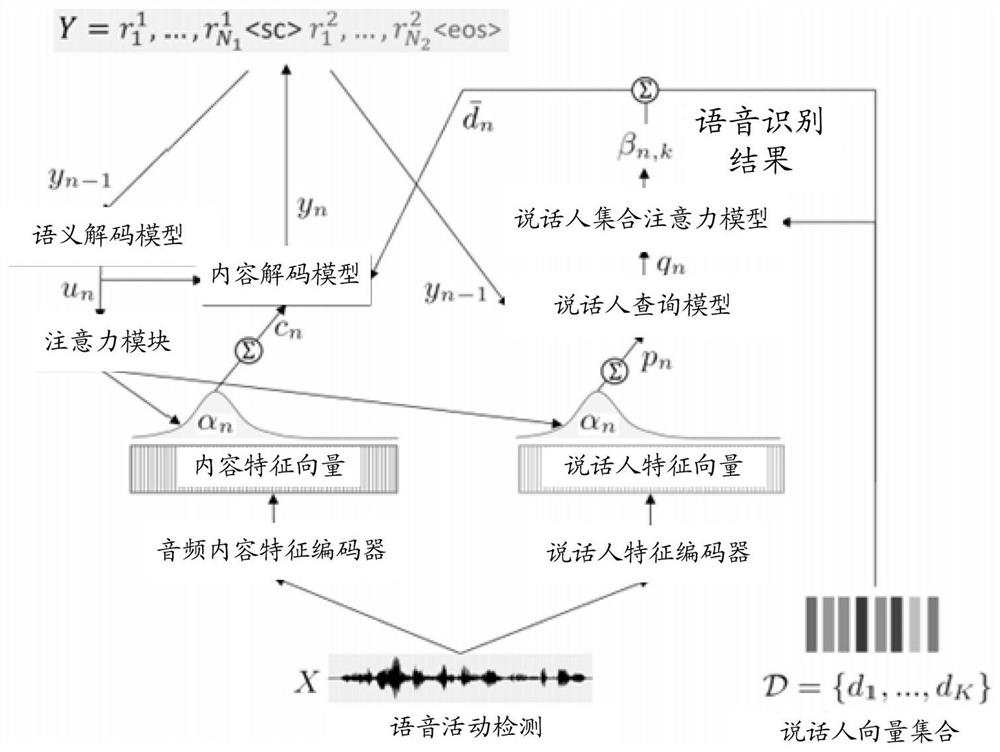

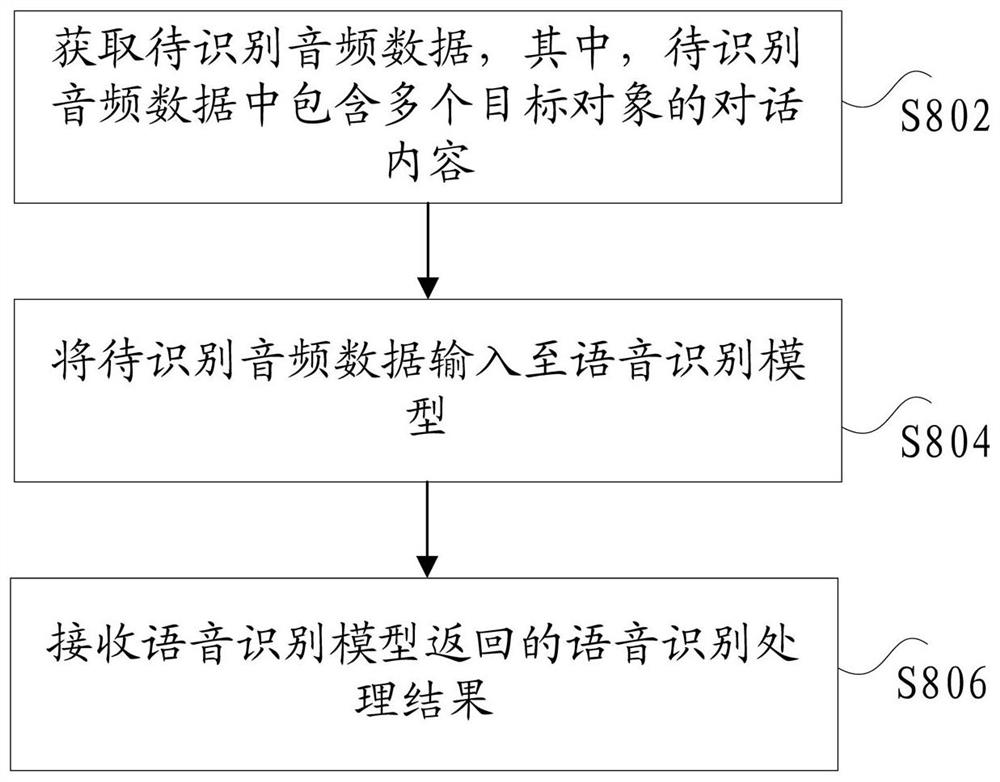

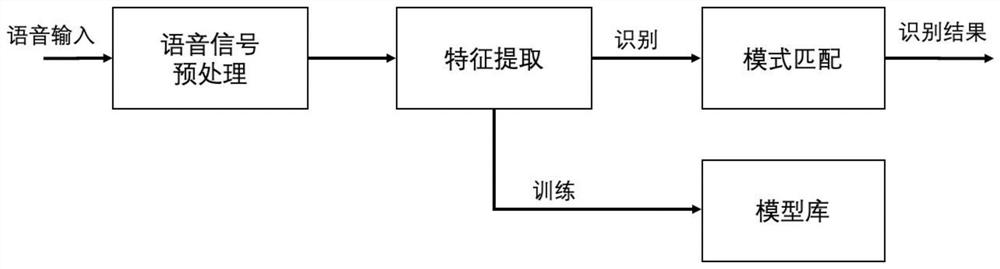

Voice recognition model acquisition method and device, electronic equipment and storage medium

The invention provides a voice recognition model acquisition method and device, electronic equipment, and a storage medium, relating to the fields of natural voice understanding, voice technology, intelligent customer service, and voice transcription. In a specific implementation, multiple sets of labeled data are obtained, where each set comprises audio sample data of sample objects and a sample object set obtained by performing feature vector extraction on the audio sample data, the audio sample data containing dialogue content of the multiple sample objects; a neural network model is then trained through machine learning on these sets of labeled data to obtain a speech recognition model. The invention addresses the poor recognition performance of prior-art speech recognition models.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

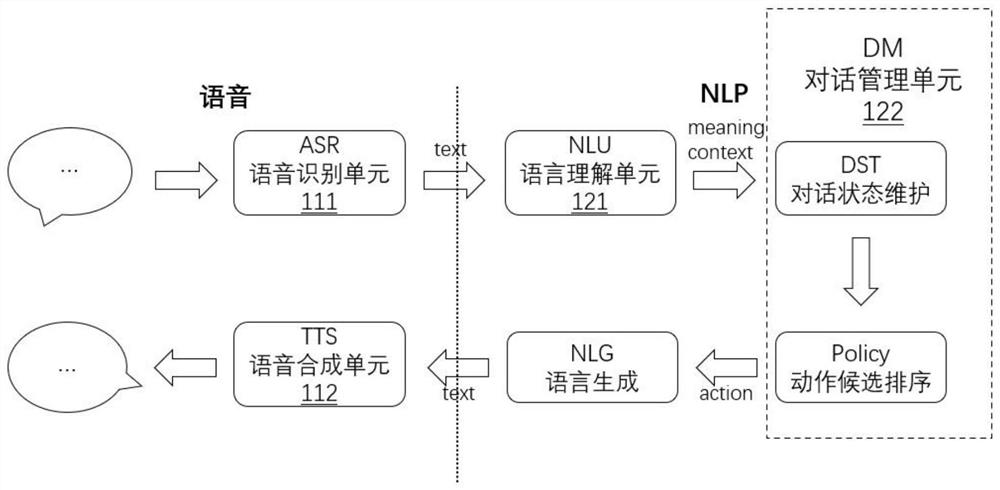

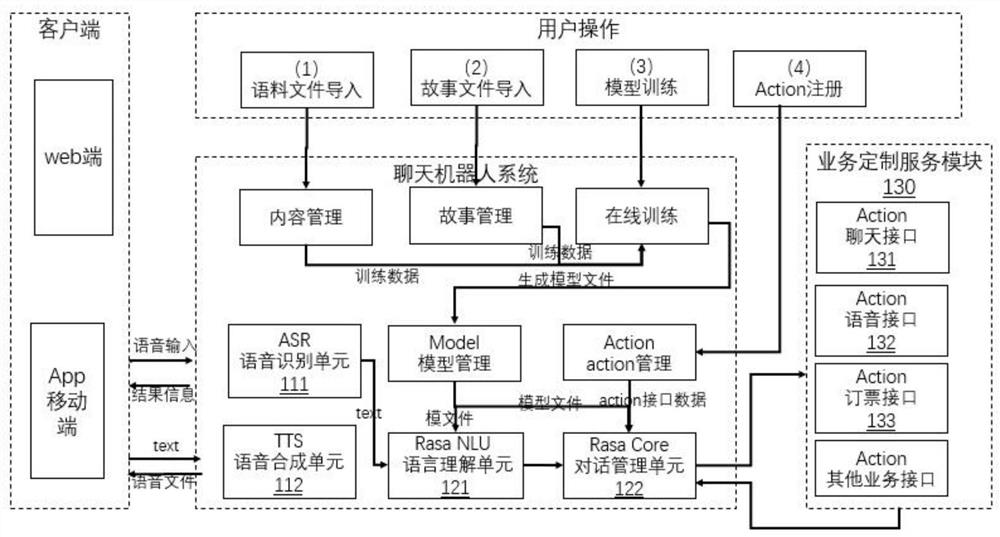

Chat robot system and conversation method based on voice recognition and Rasa framework

Pending · CN114220425A · Tags: Improve experience, Chat conversations are smooth, Natural language data processing, Speech recognition, Language understanding, Speech comprehension

The invention relates to a chat robot system and conversation method based on voice recognition and the Rasa framework. The system comprises a voice service module and an intelligent assistant module. The voice service module comprises a voice recognition unit, which recognizes input voice information and converts it into text, and a voice synthesis unit, which converts received text into voice. The intelligent assistant module comprises a language understanding unit, which performs user intent classification and entity extraction on the text, and a dialogue management unit, which maintains and updates the user's dialogue state, selects actions, and outputs reply text for the user's input according to the result of the language understanding unit. The robot's chat conversations are smoother, and the user experience is improved.

Owner:FUJIAN YIRONG INFORMATION TECH +2

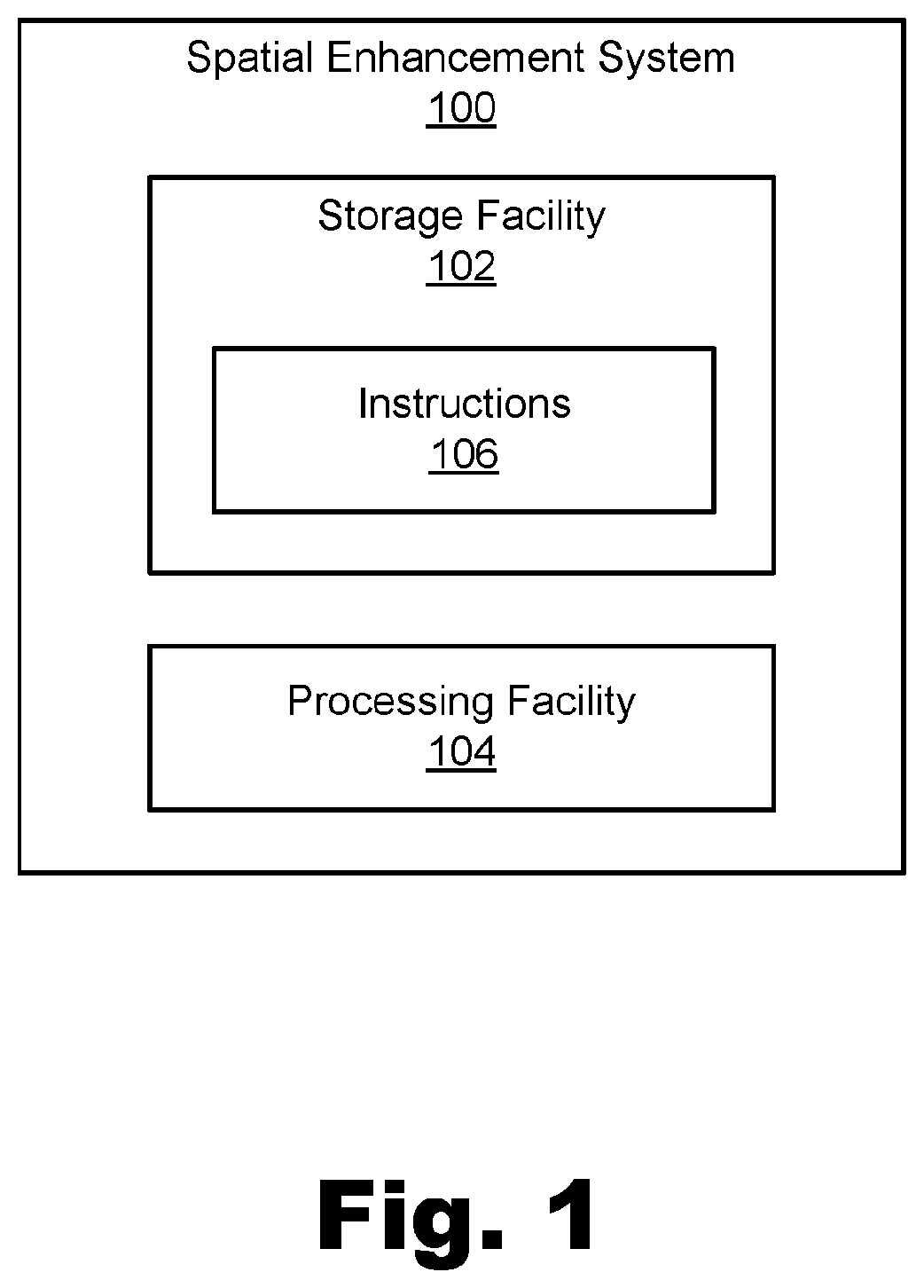

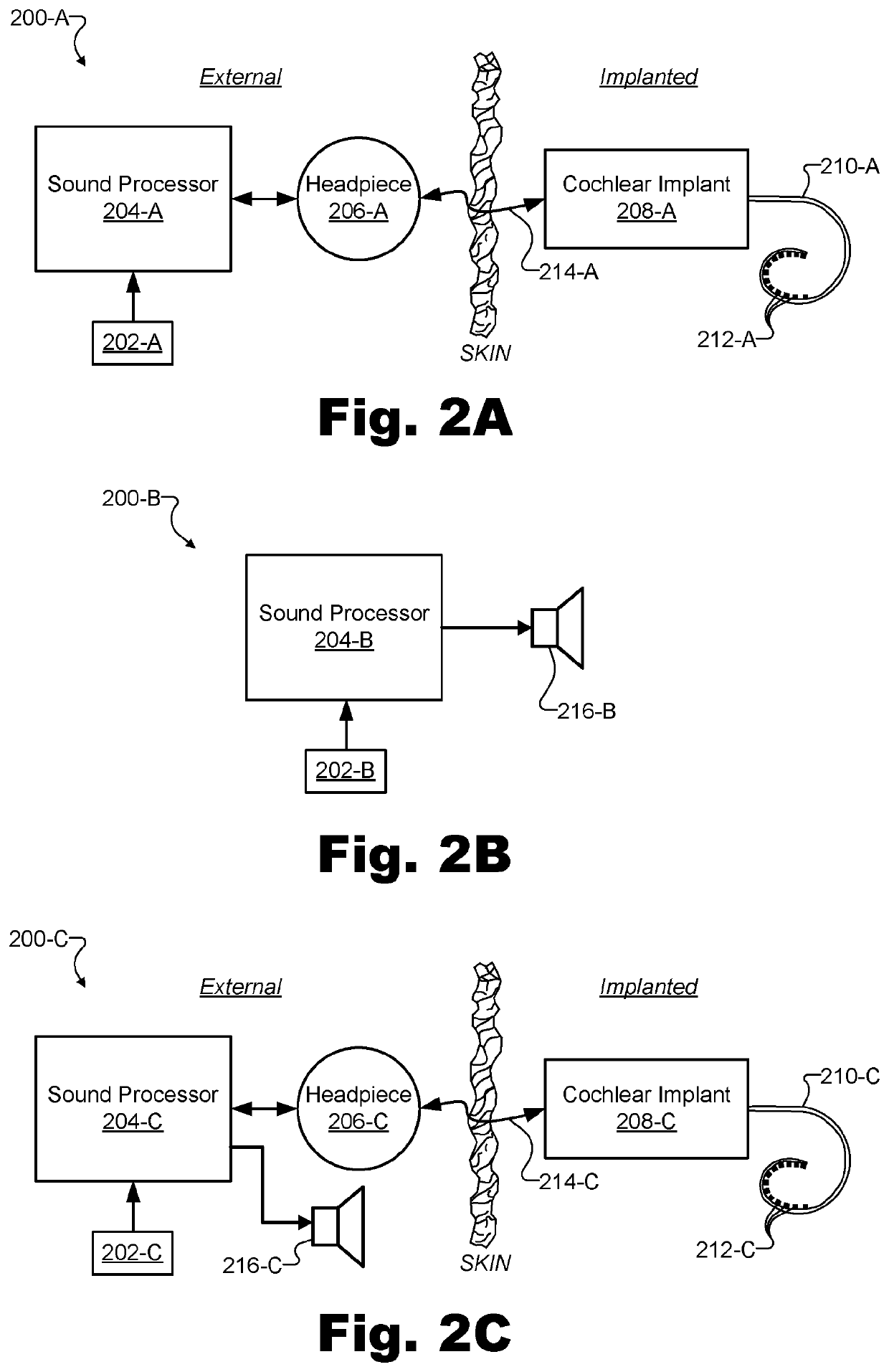

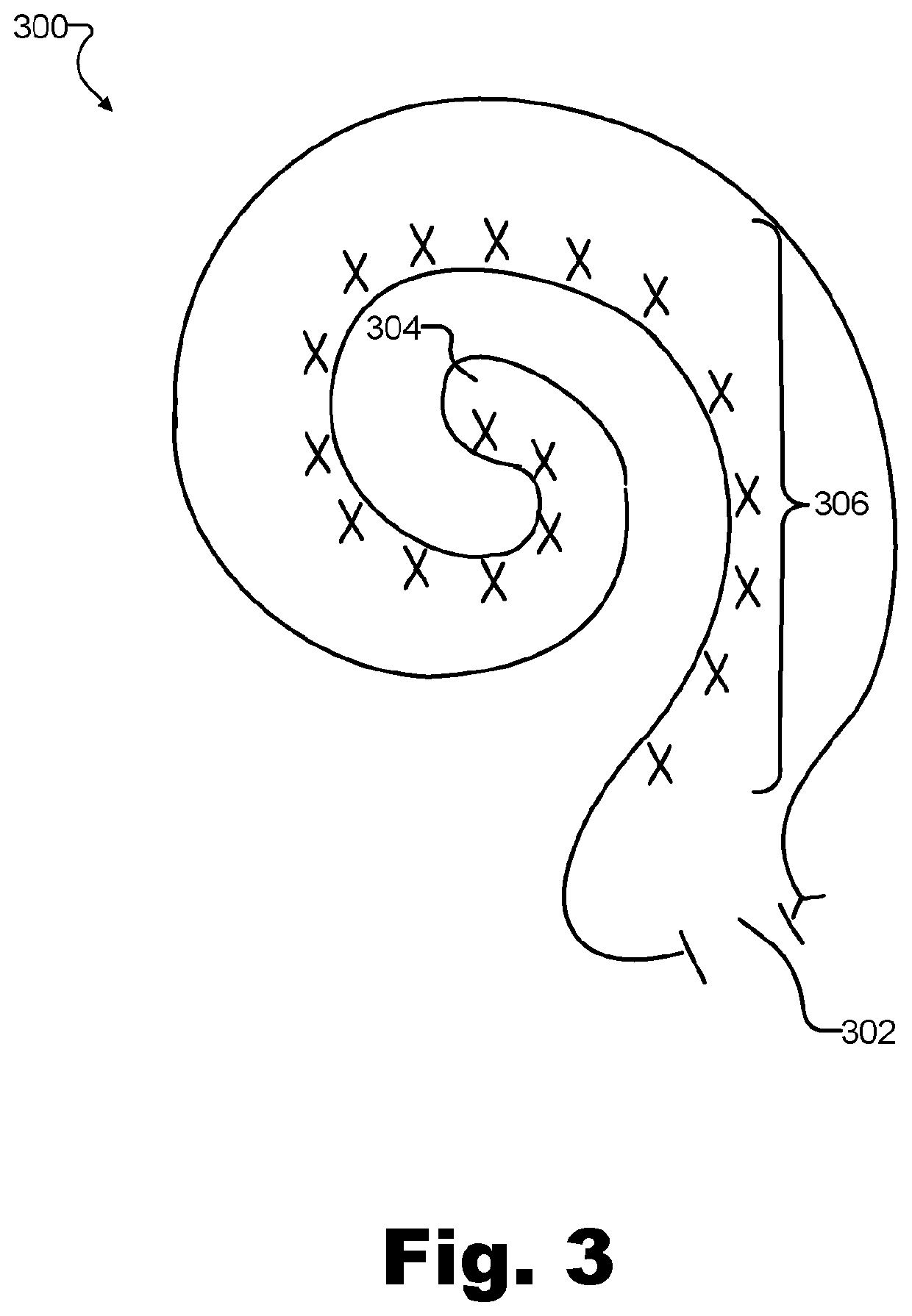

Systems and methods for frequency-specific localization and speech comprehension enhancement

Pending · US20220191627A1 · Tags: Head electrodes, Sets with desired directivity, Speech comprehension, Hearing apparatus

An exemplary spatial enhancement system performs frequency-specific localization and speech comprehension enhancement. Specifically, the system receives an audio signal presented to a recipient of a hearing device, and generates, based on the audio signal, a first frequency signal and a second frequency signal. The first frequency signal includes a portion of the audio signal associated with a first frequency range, and the second frequency signal includes a portion of the audio signal associated with a second frequency range. Based on the first and second frequency signals, the system generates an output frequency signal that is associated with the first and second frequency ranges and that is configured for use by the hearing device in stimulating aural perception by the recipient. This generating of the output frequency signal includes processing the first frequency signal to apply a localization enhancement and processing the second frequency signal to apply a speech comprehension enhancement.

Owner:ADVANCED BIONICS AG

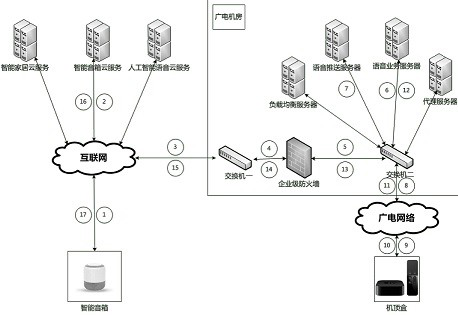

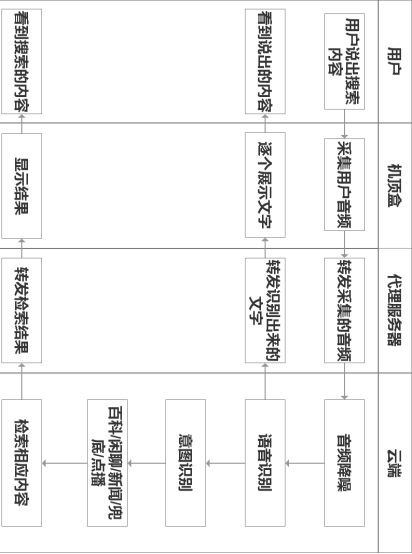

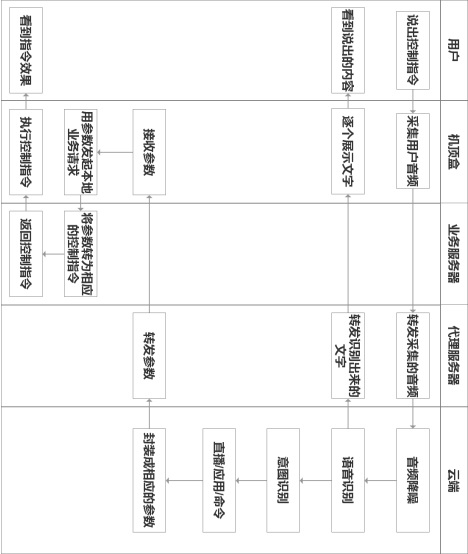

Method for controlling set-top box by intelligent sound box terminal based on broadcasting and TV

Inactive · CN112738584A · Tags: Realize far-field voice control, Improve operational capabilities, Speech recognition, Selective content distribution, Control set, Speech comprehension

The invention discloses a method for controlling a set-top box with an intelligent sound box terminal in a broadcast and television network, comprising the following steps: 1) after the intelligent sound box terminal is powered on, it connects to the network via a physical key and accesses its service backend; 2) the Bluetooth voice remote control presents a pairing code by voice, the set-top box displays a unique identifier (the television pairing code), and two-way binding is completed; 3) in television mode, the sound box cloud service platform switches the instruction transmission interface to the broadcast and TV voice service platform, so that the set-top box can be controlled through the sound box; 4) the intelligent sound box terminal collects the user's voice, performs automatic speech recognition (ASR) and natural language understanding (NLU), and sends the resulting intent through the voice platform proxy server to the voice APK on the user's set-top box for processing; and 5) the voice platform service server receives the set-top box user's intent request and asks the set-top box to execute it. By connecting the set-top box to the intelligent sound box terminal, remote voice control of the set-top box is achieved without modifying its hardware.

Owner:江苏有线技术研究院有限公司

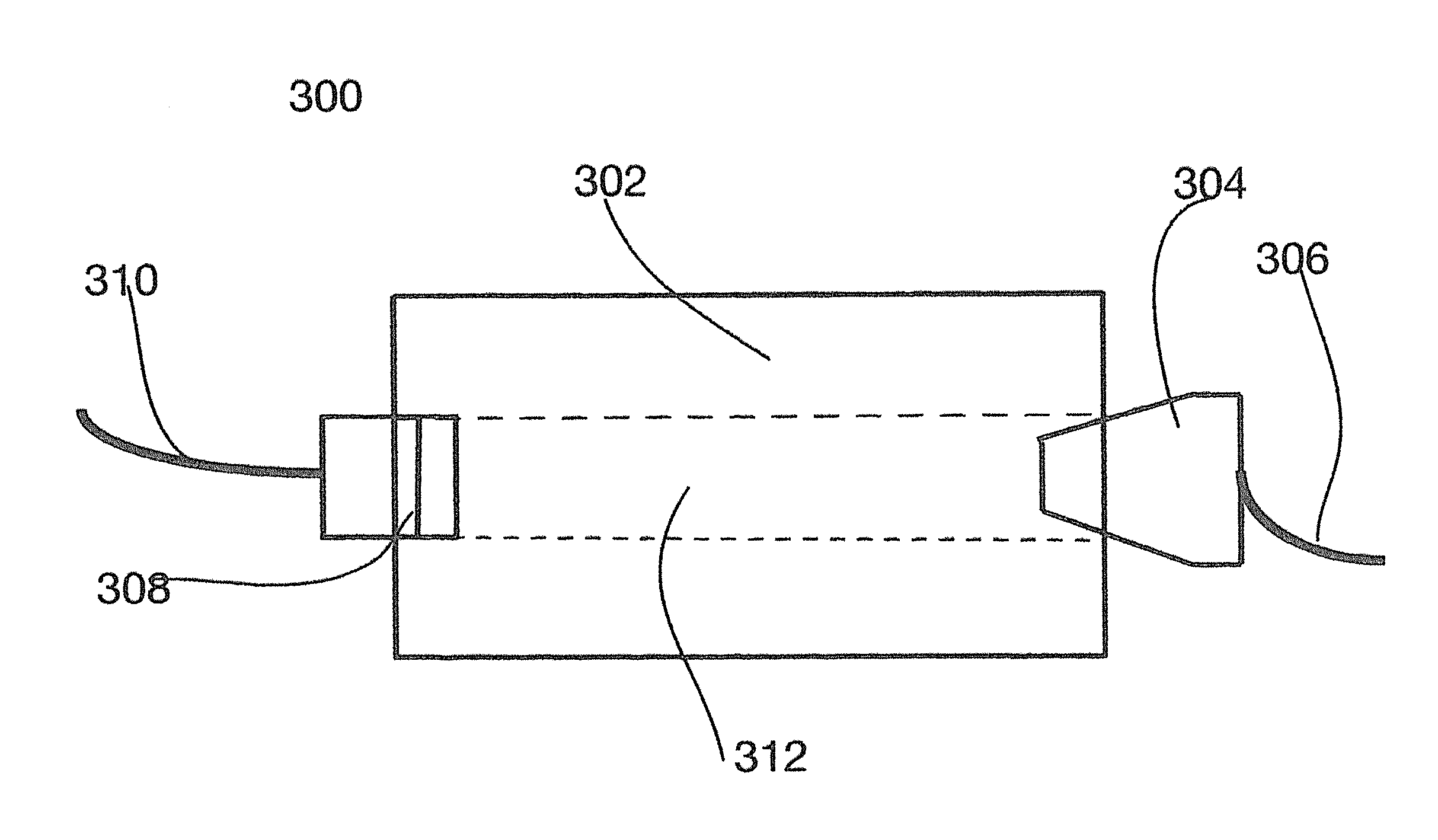

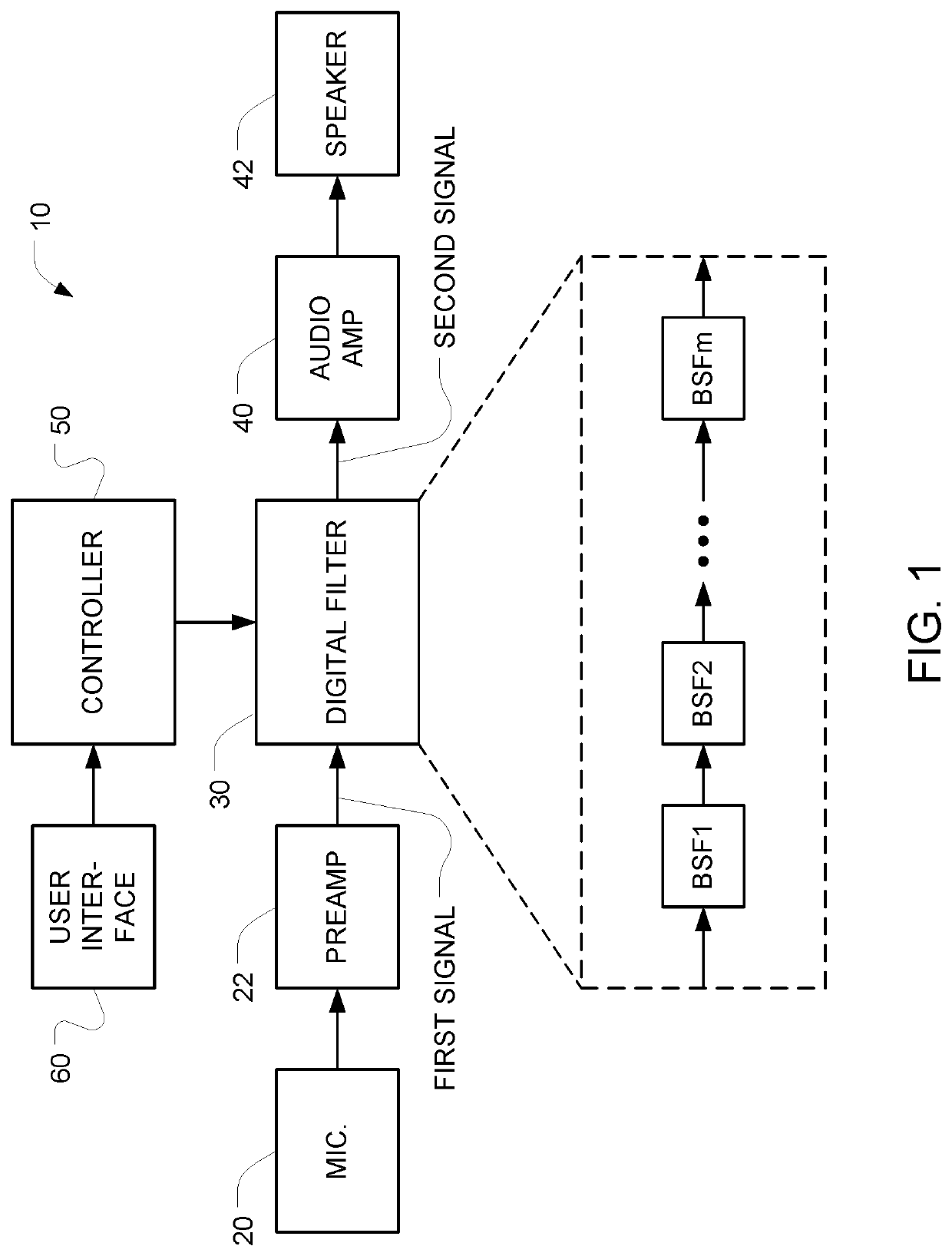

Telecommunication Device that Provides Improved Understanding of Speech in Noisy Environments

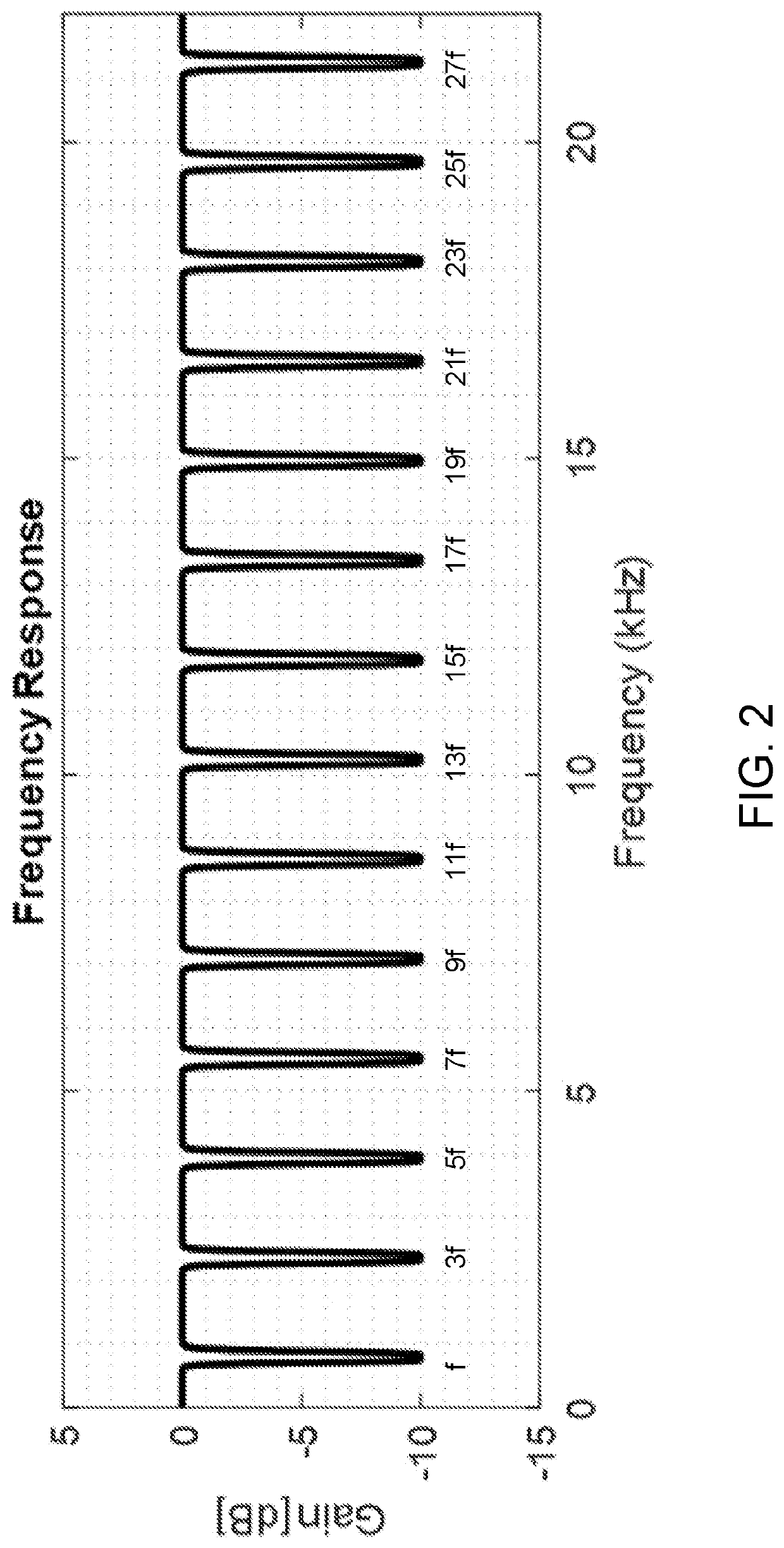

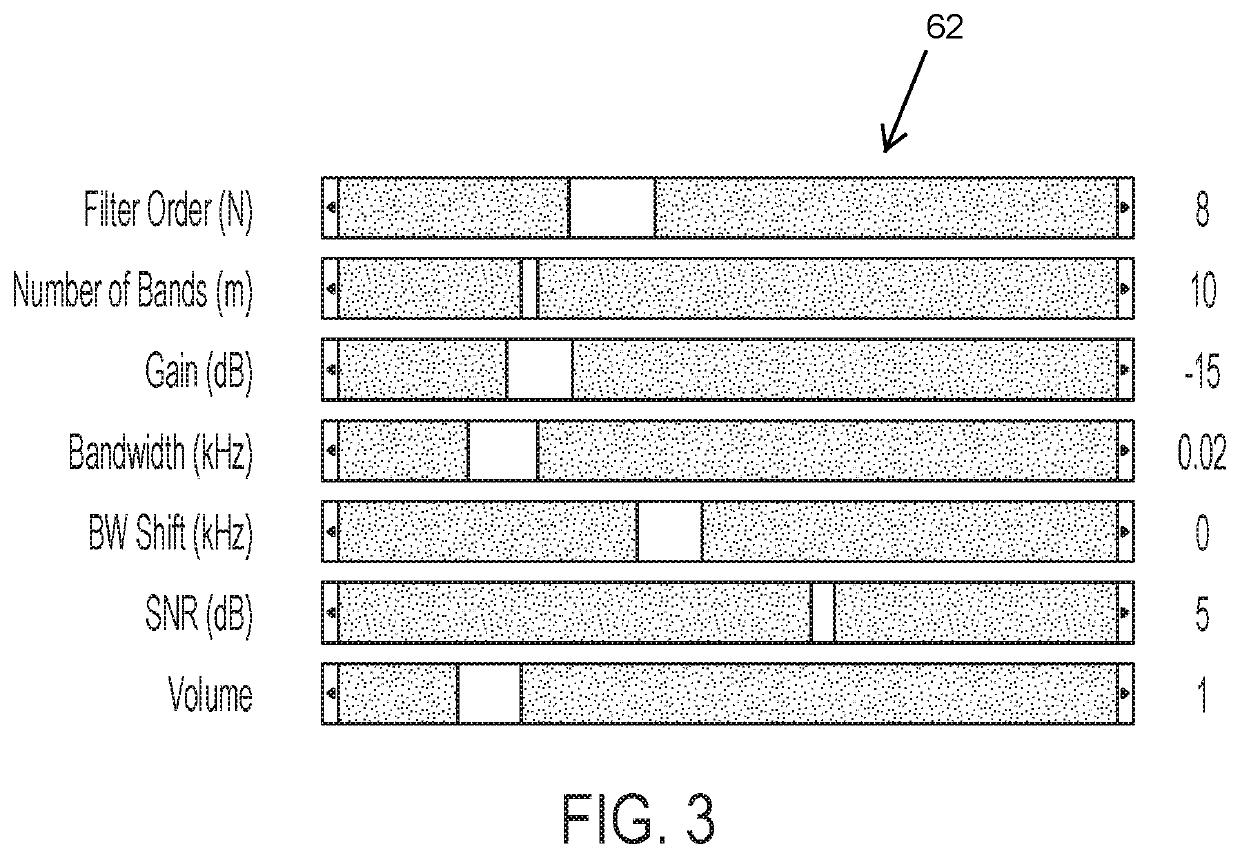

Inactive · US20210027797A1 · Tags: Improve intelligibility, Hearing aids signal processing, Speech recognition, Speech comprehension, Digital filter

A telecommunication apparatus (e.g., a cell phone) can provide improved understanding of speech in noisy environments by processing incoming audio signals using a digital filter that has at least four audio frequency stop bands, with an audio frequency pass band positioned between adjacent stop bands. Each of the stop bands has a respective center frequency and a respective bandwidth, and the center frequencies of all the stop bands are positioned at regular intervals on a linear scale. The filtered signal is amplified to drive a speaker (e.g., a speaker that is built into the cell phone or incorporated within a set of headphones).

Owner:PALTI YORAM +1
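
The stop-band layout described above can be sketched as a design helper that places at least four stop bands at regularly spaced center frequencies on a linear scale and checks that a pass band remains between neighbours. Equal bandwidths, the example frequencies, and the brick-wall `ideal_gain` response are assumptions; an actual filter would realise these bands with, for example, cascaded notch filters.

```python
from typing import List, Tuple

Band = Tuple[float, float]  # (low edge, high edge) in Hz


def design_stop_bands(first_center_hz: float, spacing_hz: float,
                      bandwidth_hz: float, count: int = 4) -> List[Band]:
    if count < 4:
        raise ValueError("the described filter has at least four stop bands")
    if bandwidth_hz >= spacing_hz:
        raise ValueError("bandwidth must be below spacing to leave a pass band between stop bands")
    # Center frequencies at regular intervals on a linear scale.
    centers = [first_center_hz + i * spacing_hz for i in range(count)]
    return [(c - bandwidth_hz / 2, c + bandwidth_hz / 2) for c in centers]


def ideal_gain(freq_hz: float, bands: List[Band]) -> float:
    """Brick-wall magnitude: 0 inside any stop band, 1 elsewhere."""
    return 0.0 if any(lo <= freq_hz <= hi for lo, hi in bands) else 1.0


bands = design_stop_bands(first_center_hz=1000.0, spacing_hz=1000.0, bandwidth_hz=200.0)
print(bands)
```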

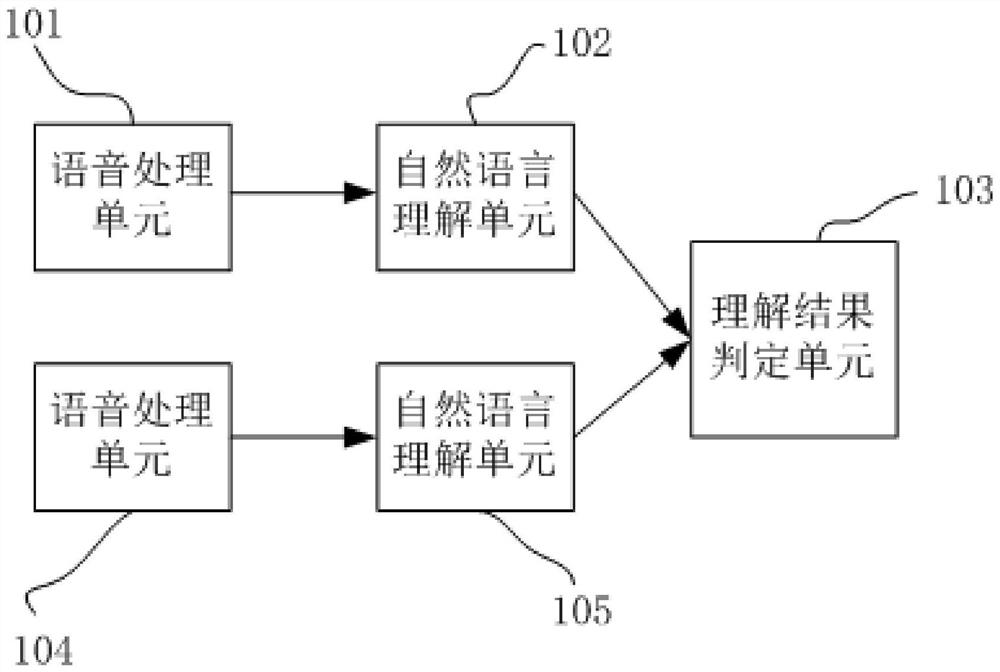

Intelligent voice understanding system with multiple voice understanding engines and intelligent voice interaction method

The invention provides an intelligent voice understanding system with multiple voice understanding engines and an intelligent voice interaction method. The system comprises a first voice understanding engine that processes voice without transcription, a second voice understanding engine that processes voice by transcription, and an understanding result determination unit. In the first engine, a voice processing unit converts the voice into voice data in coded-sequence form, and a natural language understanding unit uses a natural language understanding model to obtain the intention corresponding to the voice from that coded sequence. In the second engine, a voice processing unit transcribes the voice into text, and a natural language understanding unit uses a natural language understanding model to obtain the intention from the text. The understanding result determination unit then determines the intention corresponding to the speech based on the understanding results of both engines.

Owner:水木智库(北京)科技有限公司
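
The understanding result determination unit can be sketched as a simple decision rule over the two engines' outputs. Accepting the intent when both engines agree, and otherwise taking the more confident one, is a hypothetical rule; the abstract does not specify how the results are combined.

```python
from typing import Tuple

Result = Tuple[str, float]  # (intent, confidence) from one engine


def determine_intent(direct: Result, transcribed: Result) -> str:
    """Combine the non-transcribing engine's result with the transcribing
    engine's result: agreement wins outright; otherwise the more confident one
    (ties go to the non-transcribing engine, because max keeps the first)."""
    if direct[0] == transcribed[0]:
        return direct[0]
    return max(direct, transcribed, key=lambda r: r[1])[0]


print(determine_intent(("weather", 0.6), ("weather", 0.4)))
print(determine_intent(("music", 0.3), ("alarm", 0.7)))
```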

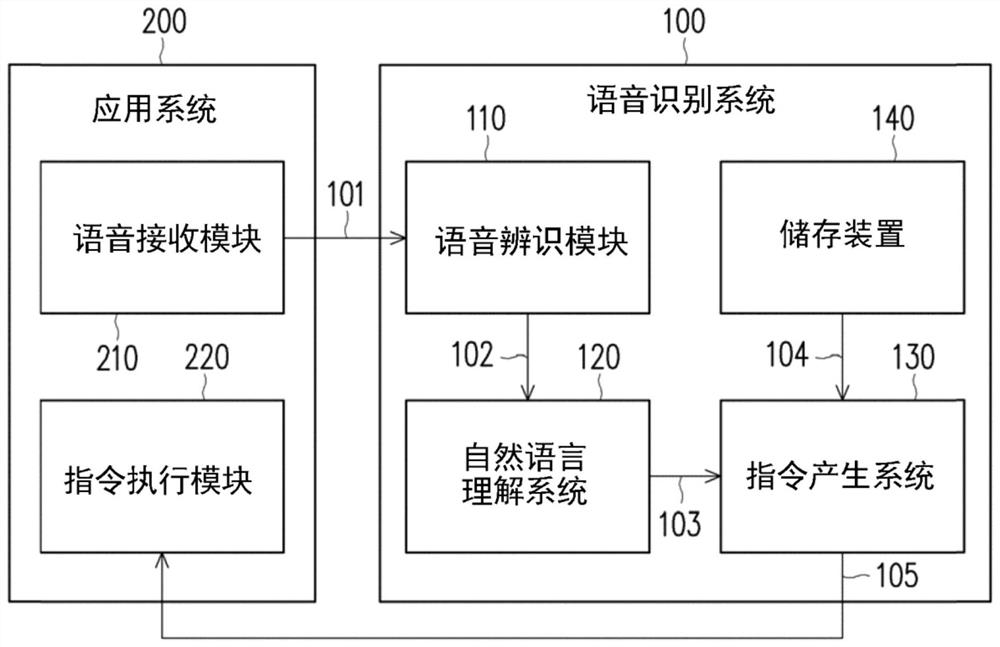

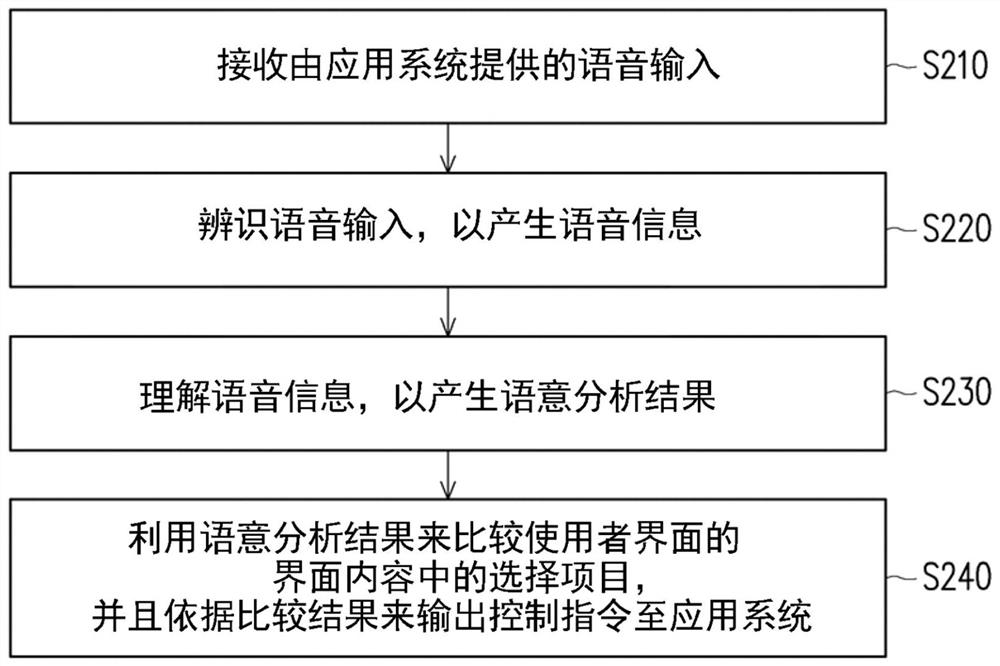

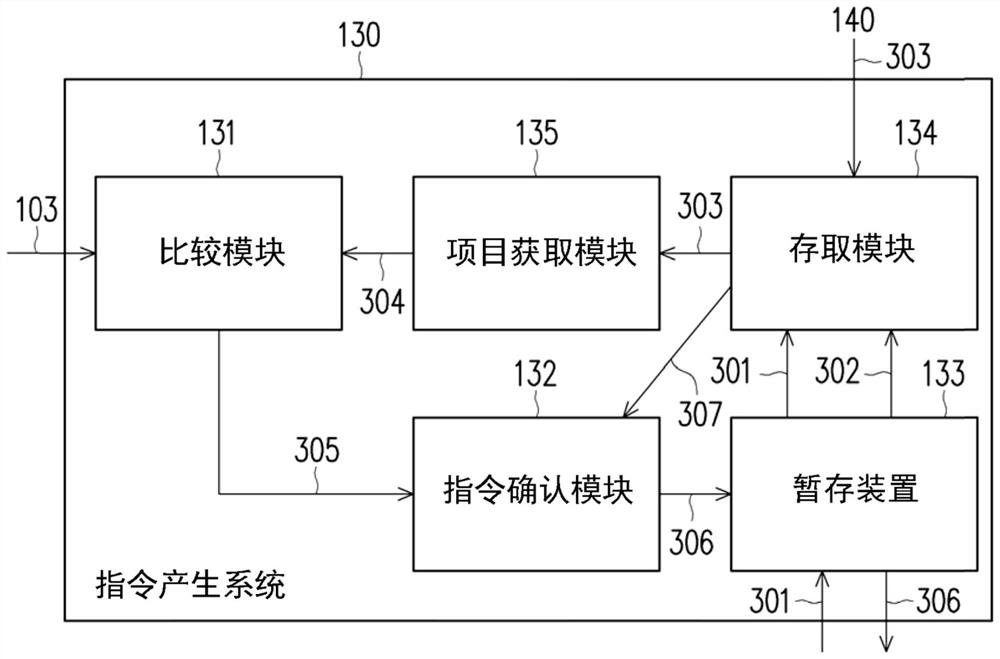

Speech recognition system, instruction generation system and speech recognition method thereof

Pending · CN112216278A · Tags: Convenient voice recognition function, Save system resources, Speech recognition, Speech comprehension, Speech input

The invention provides a speech recognition system, an instruction generation system, and a speech recognition method thereof. The speech recognition system is adapted to communicate with an application system, which receives a voice input. The speech recognition system comprises a speech recognition module, a natural speech understanding system, and an instruction generation system. The speech recognition module receives the speech input provided by the application system and recognizes it to generate speech information. The natural speech understanding system is coupled to the speech recognition module and understands the speech information to generate a semantic analysis result. The instruction generation system is coupled to the natural speech understanding system; it compares the semantic analysis result with the selection items in the interface content of the current user interface and outputs a control instruction to the application system according to the comparison result. A convenient voice recognition function is thereby provided, and the system resources required for voice recognition in the application system are reduced.

Owner:VIA TECH INC
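
The comparison between the semantic analysis result and the selection items of the current user interface can be sketched with fuzzy string matching from Python's standard `difflib` module. The `SELECT <index>` instruction format and the 0.6 similarity cutoff are hypothetical.

```python
import difflib
from typing import List, Optional


def to_control_instruction(semantic_result: str, ui_items: List[str],
                           cutoff: float = 0.6) -> Optional[str]:
    """Match the semantic analysis result against the selection items currently
    on screen and return a control instruction for the closest one."""
    lowered = [item.lower() for item in ui_items]
    matches = difflib.get_close_matches(semantic_result.lower(), lowered, n=1, cutoff=cutoff)
    if not matches:
        return None  # nothing on screen is similar enough
    index = lowered.index(matches[0])
    return f"SELECT {index}"  # hypothetical instruction format


print(to_control_instruction("musik", ["Movies", "Music", "Settings"]))
```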

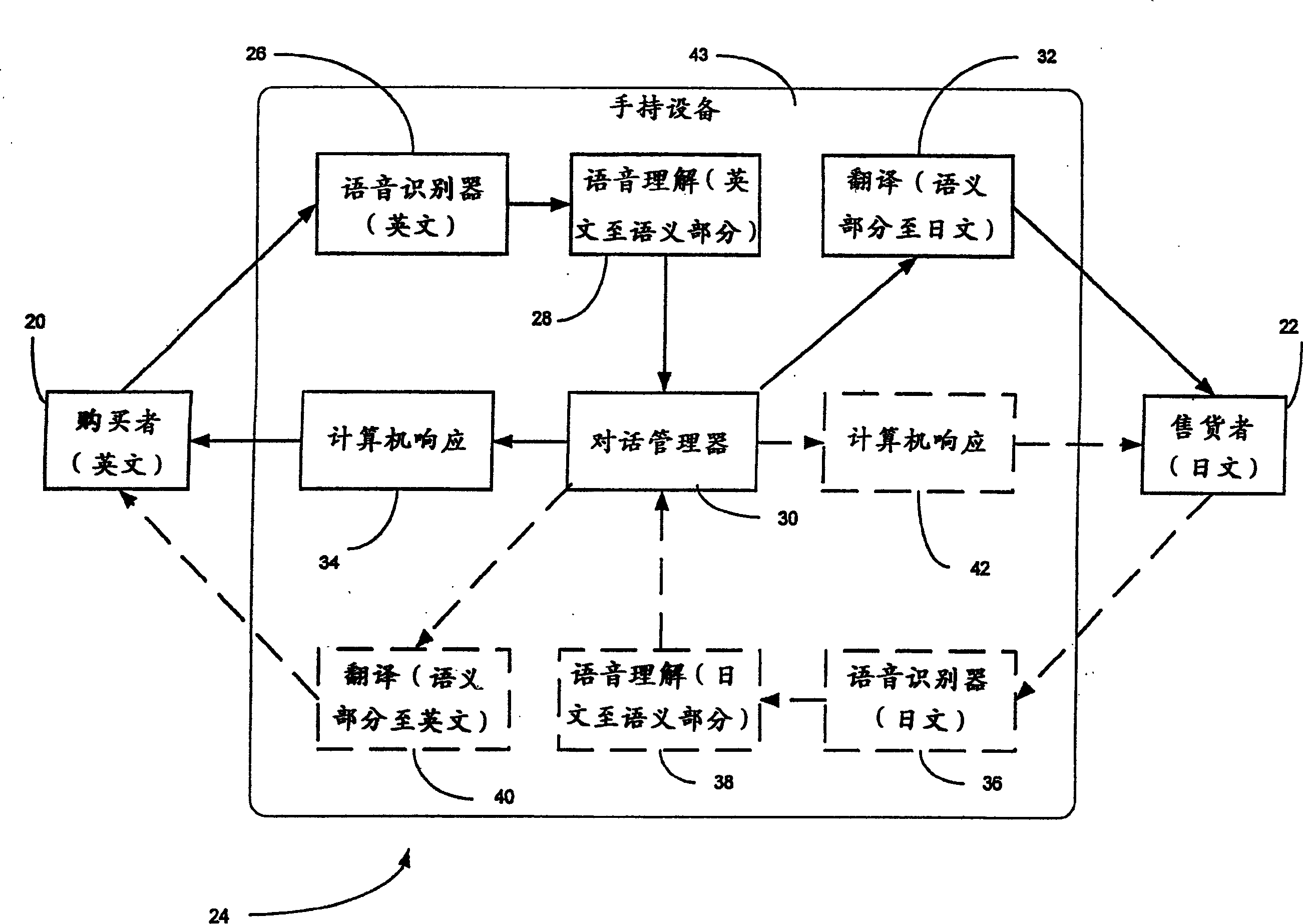

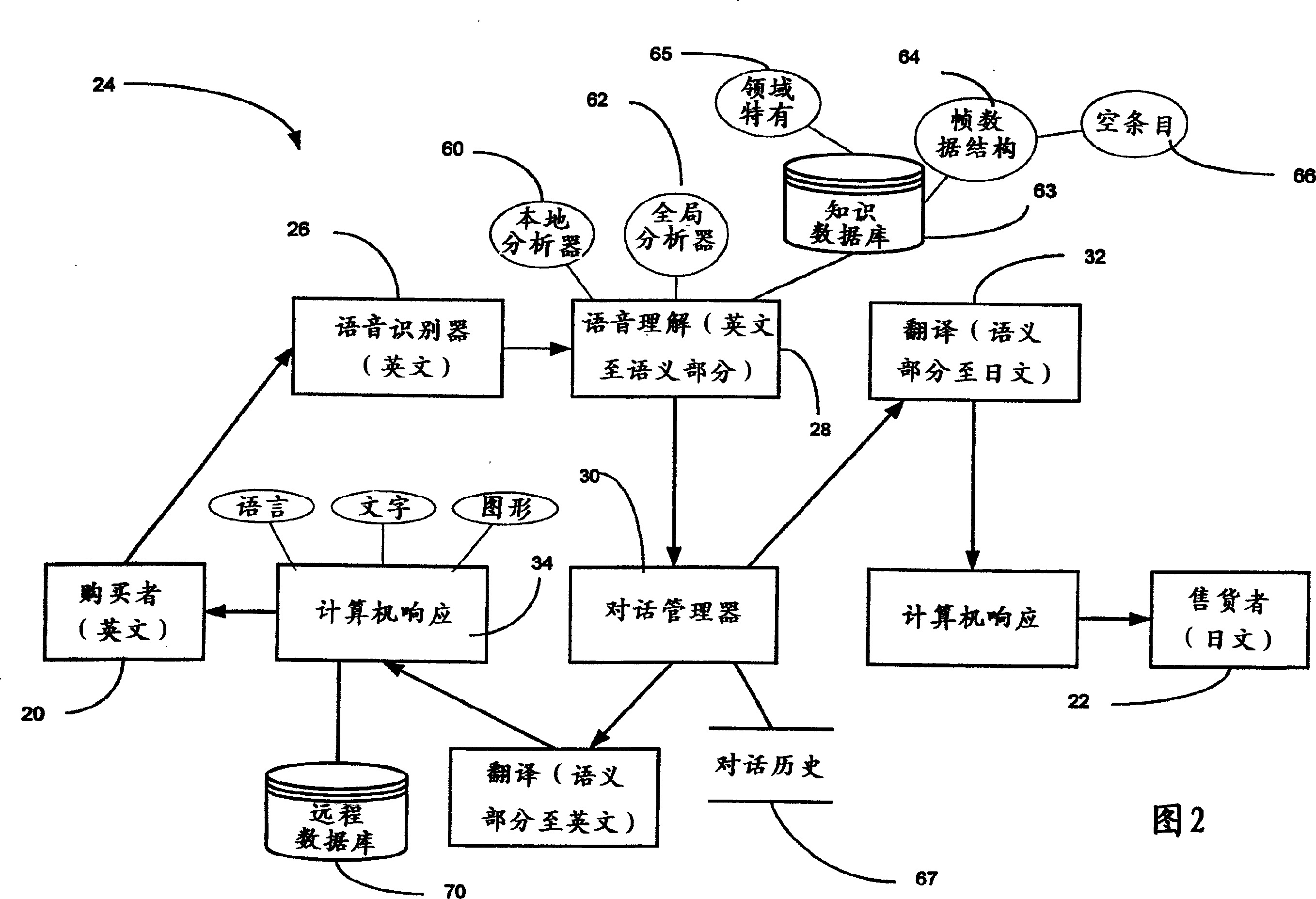

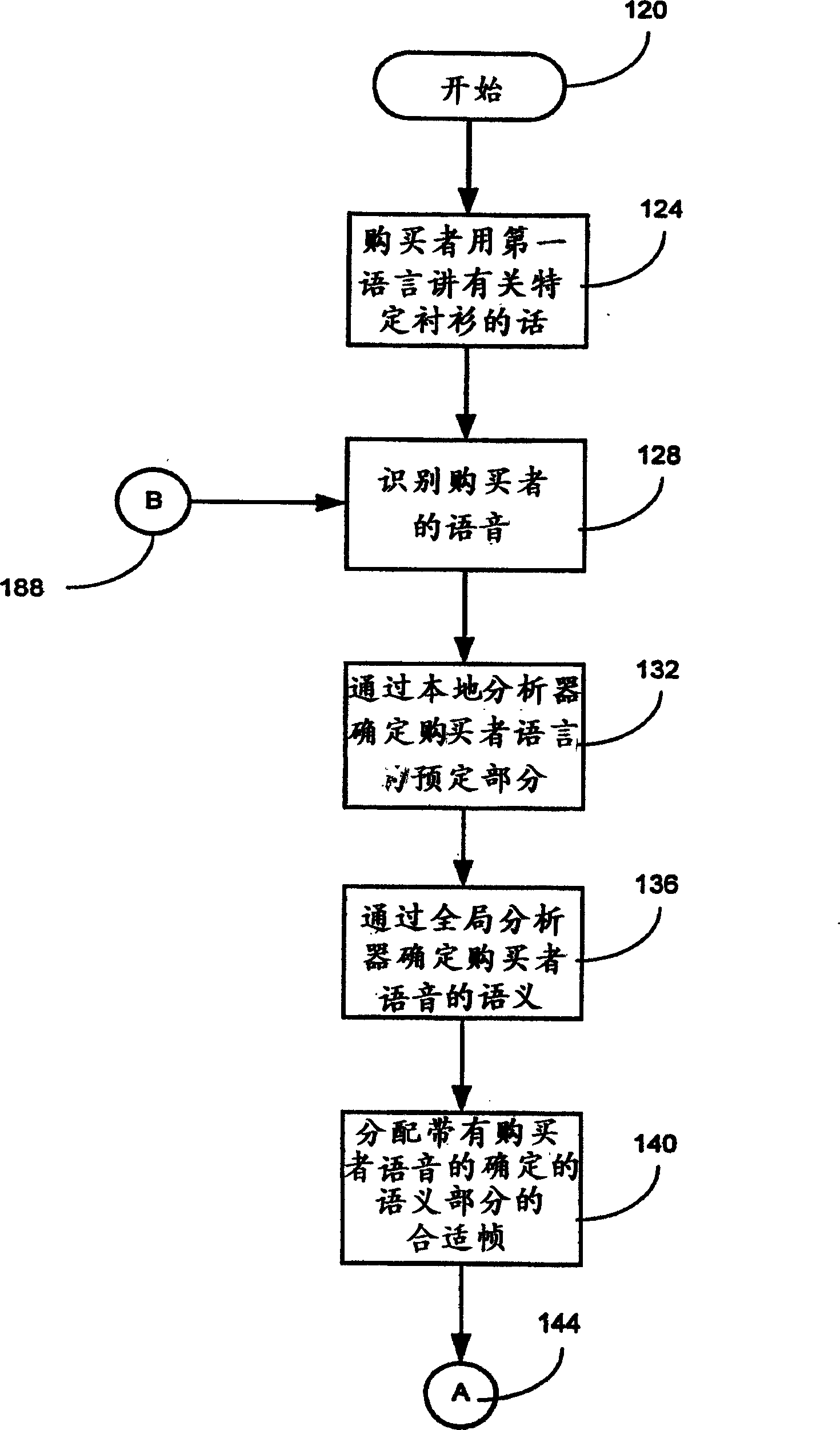

Apparatus and method for implementing language translation between speakers of different languages

Inactive · CN1204513C · Tags: Data processing applications, Speech recognition, Speech comprehension, Speech identification

The present invention discloses an apparatus for performing language translation between speakers of different languages, comprising: a speech recognizer for receiving a spoken utterance in a first language from a first speaker and operable to convert the spoken utterance into digital format; a speech understanding module connected to the speech recognizer for determining the semantic portion of the spoken utterance; a dialog manager connected to the speech understanding module for determining, based on the determined semantic portion, whether the spoken utterance contains insufficient semantic information; a computer response module connected to the dialog manager, operable, when the semantic information in the spoken utterance is insufficient, to provide the first speaker with a response associated with the semantic portion of the utterance; and a language translation module connected to the dialog manager, operable to translate the semantic portion into a second language different from the first language.

Owner:PANASONIC CORP
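
The dialog manager's sufficiency check can be sketched as a required-slot test: if a slot is missing, the computer response module asks the first speaker for it; otherwise the semantic content is rendered in the second language. The slot table and the template-based Spanish output are hypothetical stand-ins; template filling replaces the translation module the abstract describes.

```python
from typing import Dict, Tuple

# Hypothetical required slots per intent and target-language (Spanish) templates.
REQUIRED_SLOTS = {"book_hotel": ["city", "date"]}
TEMPLATES_ES = {"book_hotel": "Quiero reservar un hotel en {city} para {date}."}


def dialog_step(intent: str, slots: Dict[str, str]) -> Tuple[str, str]:
    missing = [name for name in REQUIRED_SLOTS.get(intent, []) if name not in slots]
    if missing:
        # Insufficient semantic information: respond to the first speaker.
        return "ask", f"Please tell me the {missing[0]}."
    # Sufficient: render the semantic content in the second language.
    return "translate", TEMPLATES_ES[intent].format(**slots)


print(dialog_step("book_hotel", {"city": "Osaka"}))
print(dialog_step("book_hotel", {"city": "Osaka", "date": "el lunes"}))
```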

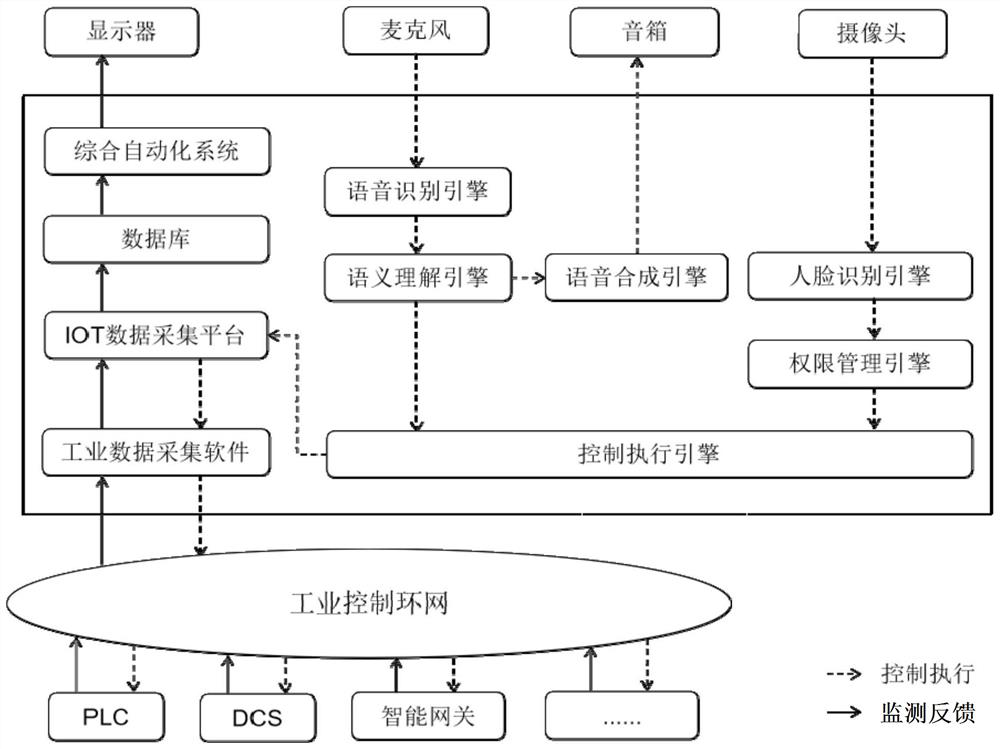

Voice control coal mine integrated automation system and method

Pending · CN114553922A · Tags: Improve control efficiency, Reduce control difficulty, Mining devices, Character and pattern recognition, Informatization, Speech comprehension

The invention discloses a voice control coal mine integrated automation system and method, and belongs to the field of coal mine informatization monitoring control. The system comprises a hardware part including a microphone, a sound box, a camera, a host and a display; and the software part comprises an integrated automation system, industrial data acquisition software, an IOT data acquisition platform, an intelligent voice service engine, a face recognition engine, an authority management engine and a control execution engine. The intelligent voice service engine is used for achieving control instruction voice recognition and instruction voice understanding, the face recognition engine is called to achieve control object identity recognition, and finally a corresponding control command is executed according to a permission judgment result. The artificial intelligence technology is applied to simplify the coal mine comprehensive automation control process, and the control accuracy is improved; the operation authority is strictly checked, and the control safety is improved.

Owner:中煤信息技术(北京)有限公司
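
The permission check that gates command execution can be sketched as follows, assuming the face recognition engine has already produced an identity string. The permission table, identities, and command names are hypothetical.

```python
from typing import Callable, Dict, Set

# Hypothetical permission table: face-recognition identity -> allowed commands.
PERMISSIONS: Dict[str, Set[str]] = {
    "operator_07": {"start_conveyor", "stop_conveyor"},
    "visitor_01": set(),
}


def authorize_and_execute(identity: str, command: str, execute: Callable[[str], None]) -> bool:
    """Run `command` only if the recognized identity is permitted to issue it."""
    if command not in PERMISSIONS.get(identity, set()):
        return False  # unknown identity or insufficient authority
    execute(command)
    return True


log = []
print(authorize_and_execute("operator_07", "stop_conveyor", log.append))
print(authorize_and_execute("visitor_01", "stop_conveyor", log.append))
```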

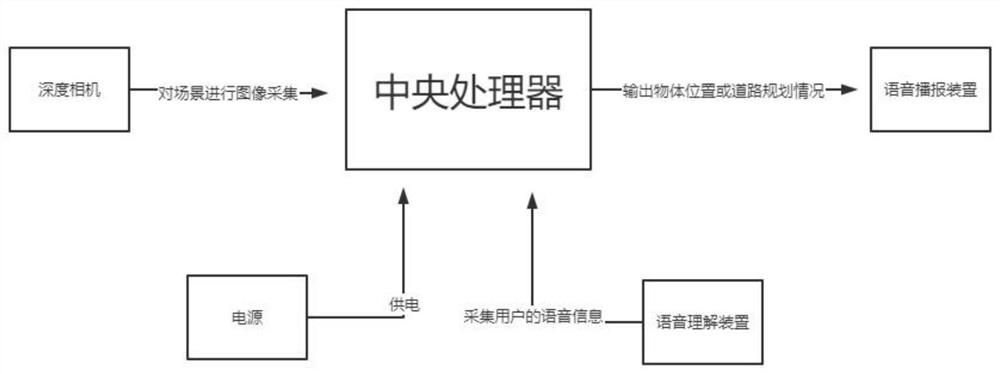

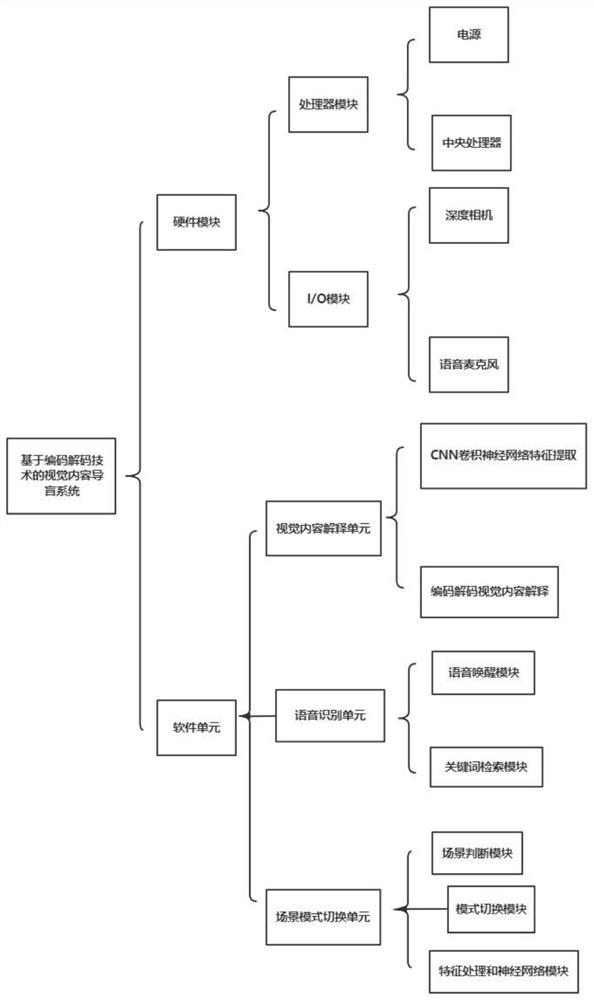

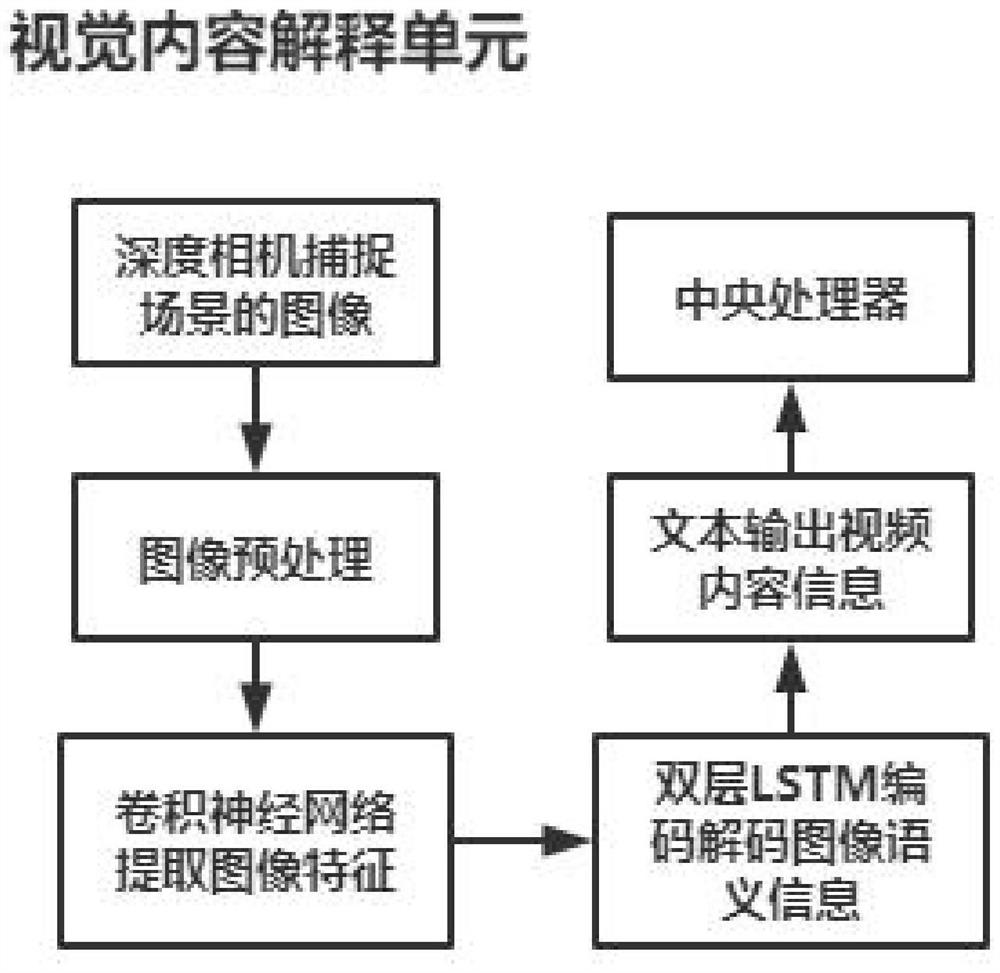

Visual content blind guiding auxiliary system and method based on coding and decoding technology

Active · CN114469661A · Tags: Improve travel safety, Reduce accident rate, Walking aids, Character and pattern recognition, Speech comprehension, Rgb image

The invention discloses a visual content blind guiding auxiliary system and method based on coding and decoding technology, relating to the field of computer vision. The system comprises a central processor module, a depth camera module, a voice broadcast device module, a voice understanding device module, and a power supply module. The central processor controls the system and handles visual data processing and signal transmission; it runs blind-guiding control software comprising a visual content interpretation unit, a voice recognition unit, and a road planning unit. The depth camera captures images of the current scene and generates an RGB image and a depth map. The voice broadcast device receives the voice information output by the central processor and announces object-search or road-planning results. The voice understanding device collects the user's voice information and transmits it to the central processor. The power supply powers the central processor. The system helps blind users in daily life and improves their quality of life.

Owner:SHENYANG LIGONG UNIV

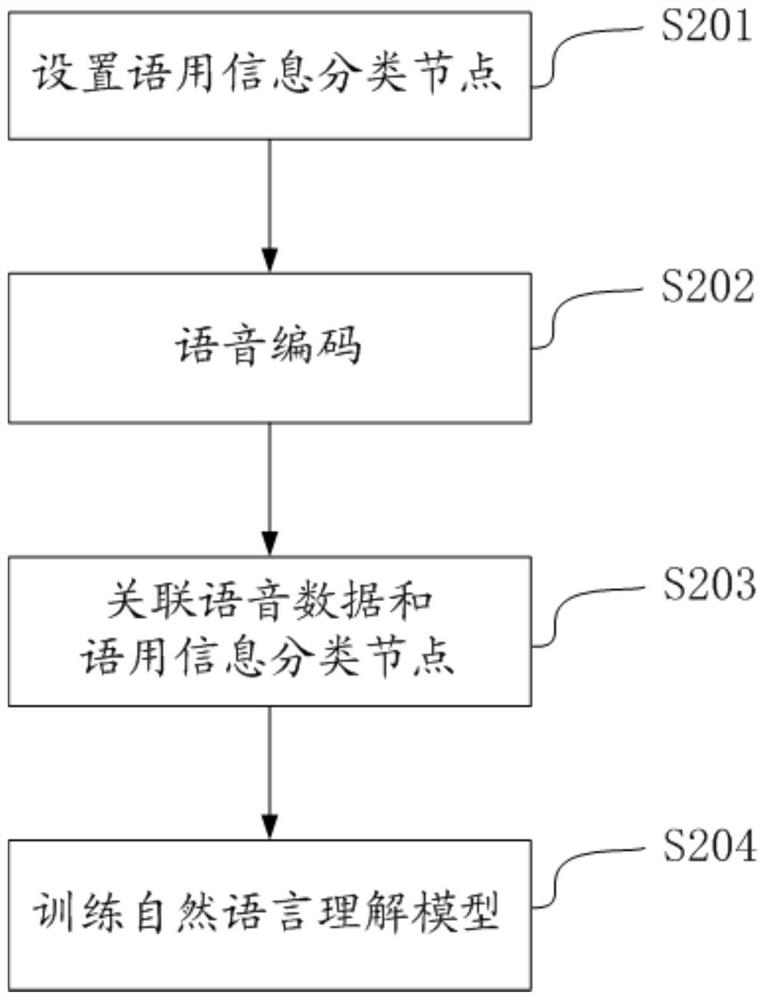

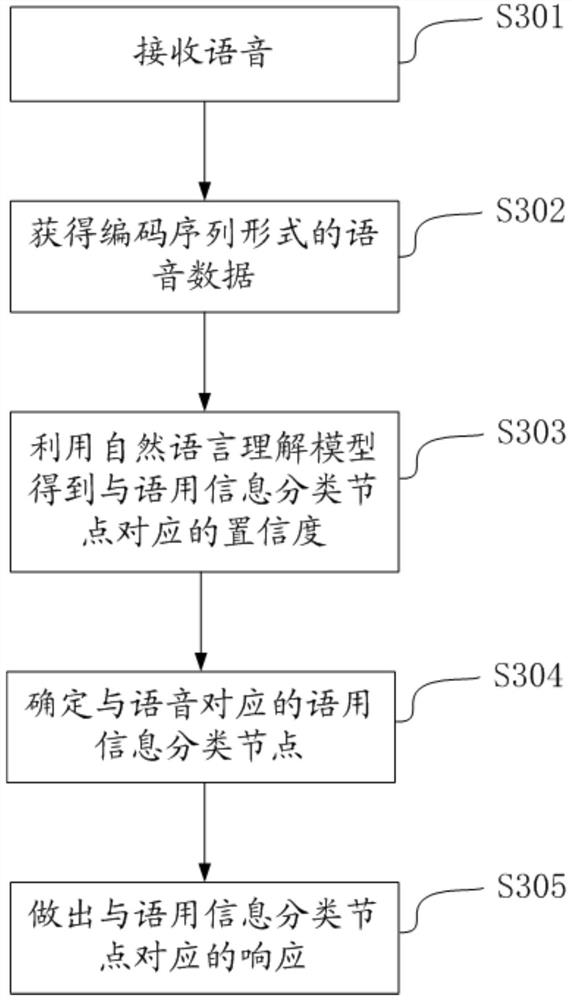

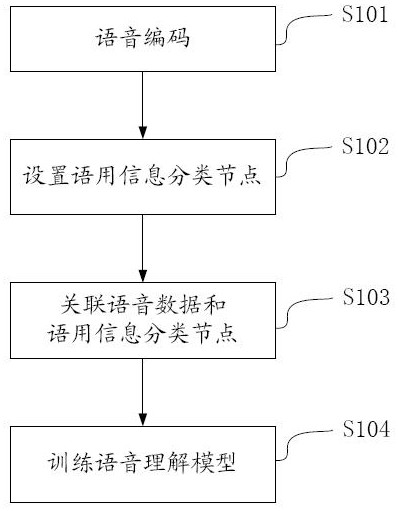

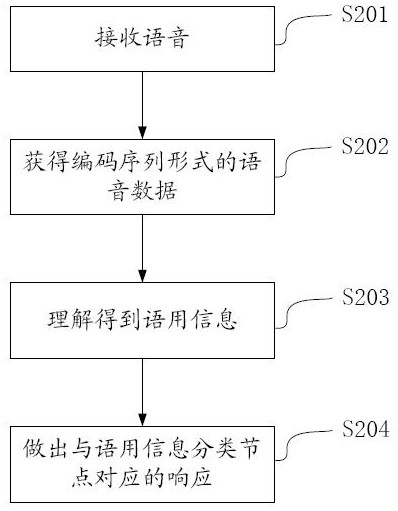

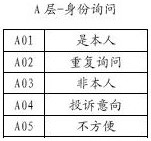

Speech understanding model generation method and intelligent speech interaction method based on pragmatic information

The invention discloses a speech understanding model generation method and an intelligent speech interaction method based on pragmatic information. The model generation method comprises: processing speech to obtain speech data in coded-sequence form; presetting pragmatic information classification nodes; associating the coded-sequence speech data with the pragmatic information classification nodes; and generating a speech understanding model from the paired data of coded-sequence speech and classification nodes. Because pragmatic information is understood directly from speech, the method avoids the information loss caused by transcribing speech into text. It is not tied to a writing system, so a single voice interaction architecture and model can support diverse language settings such as different dialects, minority languages, and mixed languages. Training corpora are collected and the speech understanding model is trained according to the hierarchy of pragmatic information classification nodes, which greatly reduces the amount of training data required. Through simple association operations, speech obtained during voice interaction can be used for rapid iteration of the speech understanding model.

Owner:水木智库(北京)科技有限公司

© 2025 PatSnap. All rights reserved.