Human body posture estimation method based on motion feature constraints

A human body posture estimation technique based on motion features, applied in biometric recognition, computing, and computer components. It addresses problems such as low accuracy, camera shake, and motion blur, and achieves improved accuracy, good posture estimation, and enhanced reasoning ability.

Embodiment Construction

[0042] In order to describe the present invention more specifically, the technical solutions of the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0043] (1) Use a video dataset with multi-person pose annotations and build human spatiotemporal windows on the videos.
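
The text does not define the human spatiotemporal window in this excerpt, so the following is only a minimal sketch under stated assumptions. It assumes a window consists of the frames within a hypothetical `radius` of the current frame `t`, all cropped with the same person box enlarged by a hypothetical `scale` factor. The names `build_window`, `enlarge_box`, `radius`, and `scale` are illustrative and do not come from the patent.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class SpatioTemporalWindow:
    center_frame: int          # the frame whose pose is being estimated
    frame_indices: List[int]   # frames that make up the temporal extent
    box: Box                   # spatial extent shared by all frames in the window


def enlarge_box(box: Box, scale: float, width: int, height: int) -> Box:
    """Scale a box about its centre, then clip it to the image bounds."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    hw, hh = (x2 - x1) * scale / 2, (y2 - y1) * scale / 2
    return (max(0.0, cx - hw), max(0.0, cy - hh),
            min(float(width), cx + hw), min(float(height), cy + hh))


def build_window(t: int, box: Box, num_frames: int, width: int, height: int,
                 radius: int = 2, scale: float = 1.25) -> SpatioTemporalWindow:
    """Hypothetical window: frames t-radius..t+radius around one person box.

    Frame indices past either end of the video are clamped, so edge frames
    are repeated and every window has the same length (2 * radius + 1).
    """
    if not 0 <= t < num_frames:
        raise ValueError("frame index out of range")
    frames = [min(max(t + d, 0), num_frames - 1) for d in range(-radius, radius + 1)]
    return SpatioTemporalWindow(t, frames, enlarge_box(box, scale, width, height))
```

For example, `build_window(0, (100.0, 50.0, 200.0, 250.0), num_frames=10, width=640, height=480)` yields frames `[0, 0, 0, 1, 2]` and the enlarged box `(87.5, 25.0, 212.5, 275.0)`. Clamping, rather than dropping frames at the video boundary, is one possible design choice: it keeps the window length fixed for a network that expects a constant number of input frames.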

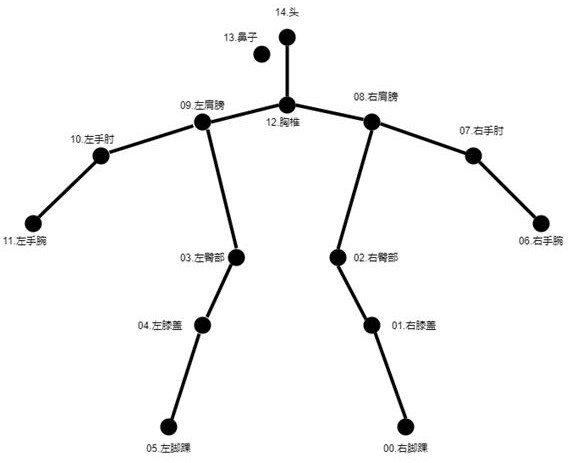

[0044] This embodiment uses PoseTrack as the dataset. PoseTrack is a large-scale video dataset for multi-person pose estimation and multi-person pose tracking, containing more than 1356 video sequences and more than 276K human pose annotations. The human keypoints in this dataset and their index numbers are shown in Figure 1: right ankle, right knee, right hip, left hip, left knee, left ankle, right shoulder, right elbow, right wrist, left shoulder, left elbow, left wrist, thoracic spine, head, and nose, for 15 keypoints in total.
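
As a rough illustration, the 15 keypoints can be held as an indexed table. This sketch assumes, without confirmation from Figure 1, that the index numbers follow the order in which the keypoints are listed above, and that an annotation arrives as a hypothetical flat `[x0, y0, v0, x1, y1, v1, ...]` list with one visibility value per point. The official PoseTrack annotation files may use a different order and point count, so the mapping should be checked against Figure 1 and the files actually used.

```python
from typing import Dict, List, Sequence, Tuple

# Assumed index order: the order in which the keypoints are listed in the text.
KEYPOINT_NAMES: List[str] = [
    "right_ankle", "right_knee", "right_hip",
    "left_hip", "left_knee", "left_ankle",
    "right_shoulder", "right_elbow", "right_wrist",
    "left_shoulder", "left_elbow", "left_wrist",
    "thoracic_spine", "head", "nose",
]
NUM_KEYPOINTS = len(KEYPOINT_NAMES)  # 15
KEYPOINT_INDEX: Dict[str, int] = {name: i for i, name in enumerate(KEYPOINT_NAMES)}


def parse_keypoints(flat: Sequence[float]) -> Dict[str, Tuple[float, float, float]]:
    """Split a hypothetical flat (x, y, visibility) list into named triples."""
    if len(flat) != 3 * NUM_KEYPOINTS:
        raise ValueError(f"expected {3 * NUM_KEYPOINTS} values, got {len(flat)}")
    return {
        name: (flat[3 * i], flat[3 * i + 1], flat[3 * i + 2])
        for i, name in enumerate(KEYPOINT_NAMES)
    }
```

With this layout, `KEYPOINT_INDEX["nose"]` is 14, and `parse_keypoints` rejects any list whose length is not 45.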

[0045] The present invention bel...
