Face feature extraction method, system, device and medium based on feature recalibration

A face feature extraction technology, applied in the fields of deep learning and image processing, which addresses the problems of high time consumption, poor robustness and limited feature extraction ability, and achieves improved feature extraction ability, high feature extraction speed and a good feature extraction effect.


Examples

Embodiment 1

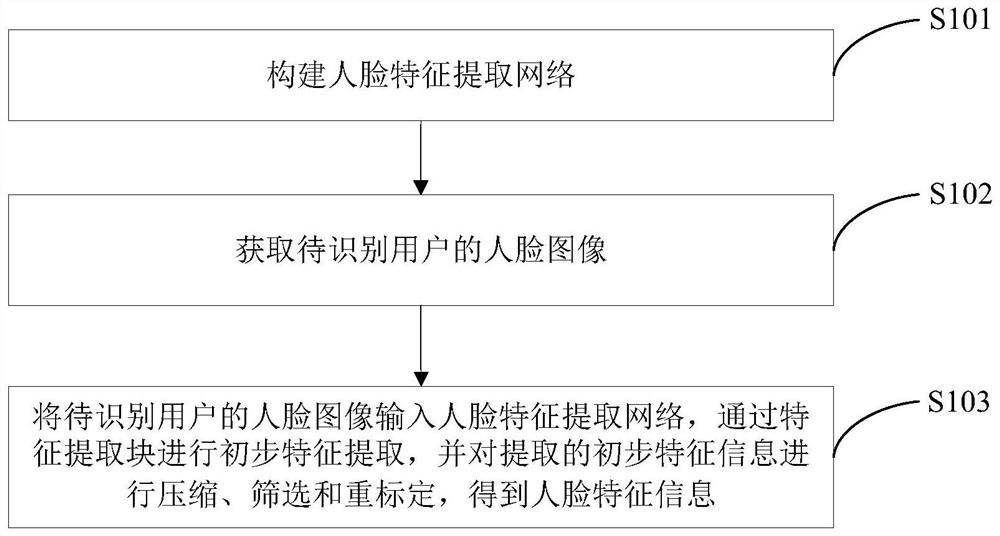

[0057] As shown in Figure 1, this embodiment provides a method for extracting facial features based on feature recalibration, comprising the following steps:

[0058] S101. Construct a face feature extraction network.

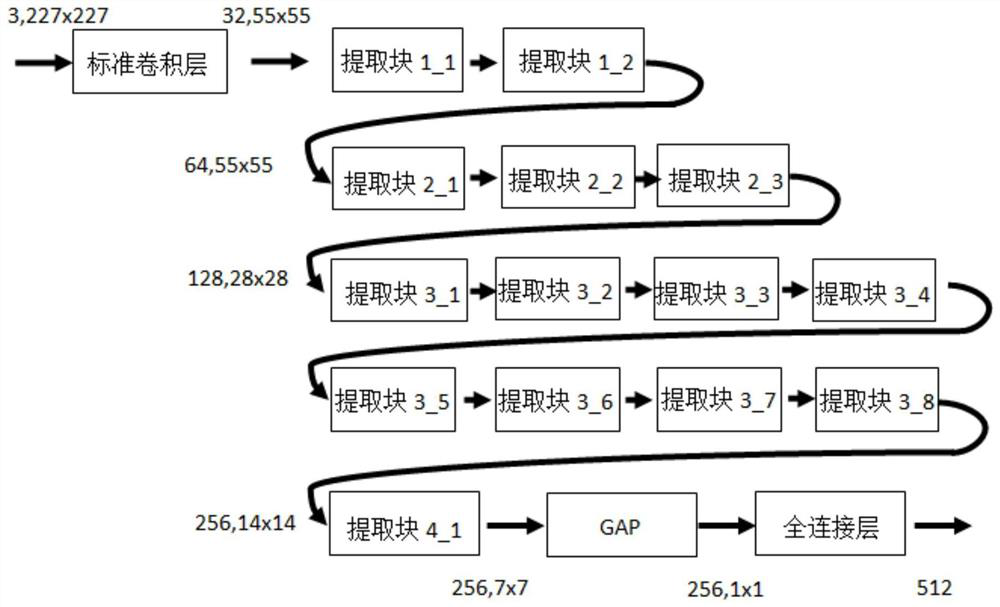

[0059] The face feature extraction network is mainly composed of multiple stacked feature extraction blocks. Its specific structure is shown in Figure 2: a standard convolutional layer, a first feature extraction block, a second feature extraction block, a third feature extraction block, a fourth feature extraction block, a global average pooling (GAP) layer and a fully connected (FC) layer, connected in sequence. In Figure 2, every "extraction block" refers to the feature extraction block of this embodiment, and labels such as 227x227, 55x55 and 28x28 denote image resolutions.
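The resolution labels in Figure 2 follow the usual convolution output-size rule, out = floor((in + 2p - k) / s) + 1. The patent text here does not give kernel sizes, strides or padding, so the values below are hypothetical, picked only because they reproduce the 227x227 to 55x55 to 28x28 progression; the function and stage names are illustrative as well.

```python
def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer (floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


# Hypothetical (kernel, stride, padding) settings: NOT stated in the patent,
# chosen only so the sizes match the 227 -> 55 -> 28 labels in Figure 2.
HYPOTHETICAL_STAGES = [
    ("standard conv (k=11, s=4, p=0)", 11, 4, 0),
    ("stride-2 stage (k=3, s=2, p=1)", 3, 2, 1),
]


def trace_resolutions(input_size: int, stages) -> list[int]:
    """Return the square resolution before the first stage and after each stage."""
    sizes = [input_size]
    for _name, kernel, stride, padding in stages:
        sizes.append(conv_output_size(sizes[-1], kernel, stride, padding))
    return sizes


if __name__ == "__main__":
    sizes = trace_resolutions(227, HYPOTHETICAL_STAGES)
    for (name, *_), size in zip(HYPOTHETICAL_STAGES, sizes[1:]):
        print(f"{name}: {size}x{size}")
    # The GAP layer at the end collapses any remaining H x W map to 1x1 per channel,
    # so the FC layer sees a vector whose length equals the channel count.
```

For example, (227 - 11) // 4 + 1 = 55 and (55 + 2 - 3) // 2 + 1 = 28. Other kernel/stride combinations could give the same sizes; the point is only how the labelled resolutions relate to layer hyperparameters.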

[0060] The first feature extraction block consist...

Embodiment 2

[0099] As shown in Figure 6, this embodiment provides a facial feature extraction system based on feature recalibration. The system includes a facial feature extraction network construction unit 601, an image acquisition unit 602 and a feature extraction unit 603, whose functions are as follows:

[0100] The facial feature extraction network construction unit 601 is configured to construct a facial feature extraction network; wherein, the facial feature extraction network includes multiple feature extraction blocks.

[0101] The image acquisition unit 602 is configured to acquire a face image of the user to be identified.

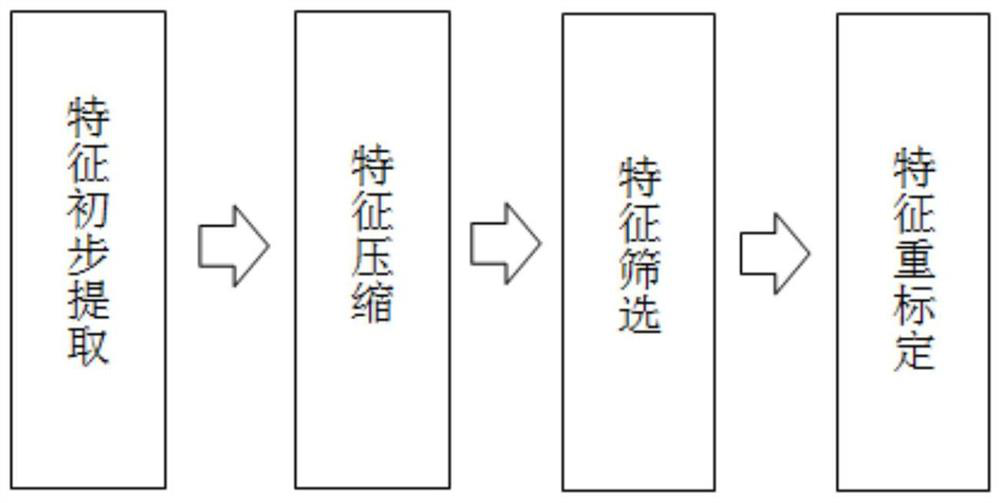

[0102] The feature extraction unit 603 is configured to input the face image of the user to be identified into the face feature extraction network, perform preliminary feature extraction through the feature extraction blocks, and compress, screen and recalibrate the extracted preliminary feature information to obtain the face featu...
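The "compress, screen and recalibrate" sequence resembles squeeze-and-excitation (SE) style channel attention. Because the block details from [0060] onward are truncated here, the following is only a minimal pure-Python sketch under that assumption: global average pooling compresses each channel to one value, a bottleneck of two fully connected layers (ReLU, then sigmoid) produces per-channel weights, and each channel is rescaled by its weight. All function names, the reduction ratio and the random weights are hypothetical and not taken from the patent.

```python
import math
import random


def squeeze(feature_map):
    """'Compress': global average pooling, one scalar per channel.
    feature_map is a list of channels, each an H x W list of lists."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]


def dense(vec, weights, bias):
    """Fully connected layer; weights has shape out_dim x in_dim."""
    return [sum(w * x for w, x in zip(row, vec)) + b for row, b in zip(weights, bias)]


def _sigmoid(a):
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def excite(z, w1, b1, w2, b2):
    """'Screen': FC -> ReLU -> FC -> sigmoid gives a weight in (0, 1) per channel."""
    hidden = [max(0.0, h) for h in dense(z, w1, b1)]
    return [_sigmoid(a) for a in dense(hidden, w2, b2)]


def recalibrate(feature_map, w1, b1, w2, b2):
    """'Recalibrate': multiply every value in a channel by that channel's weight."""
    scales = excite(squeeze(feature_map), w1, b1, w2, b2)
    out = [[[v * s for v in row] for row in ch] for ch, s in zip(feature_map, scales)]
    return out, scales


if __name__ == "__main__":
    rng = random.Random(0)
    channels, reduction = 4, 2  # hypothetical channel count and reduction ratio
    hidden = channels // reduction
    fmap = [[[rng.random() for _ in range(3)] for _ in range(3)] for _ in range(channels)]
    w1 = [[rng.gauss(0, 0.5) for _ in range(channels)] for _ in range(hidden)]
    w2 = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(channels)]
    _, scales = recalibrate(fmap, w1, [0.0] * hidden, w2, [0.0] * channels)
    print("per-channel weights:", [round(s, 3) for s in scales])
```

In a real network the feature map would be a tensor and both FC layers would be learned during training; nested lists are used only to keep the sketch dependency-free. The output keeps the input shape, so such a step can be inserted after any convolutional stage.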

Embodiment 3

[0105] This embodiment provides a computer device, which may be a computer. As shown in Figure 7, a processor 702, a memory, an input device 703, a display 704 and a network interface 705 are connected through a system bus 701. The processor provides computing and control capabilities. The memory includes a non-volatile storage medium 706 and an internal memory 707; the non-volatile storage medium 706 stores an operating system, a computer program and a database, and the internal memory 707 provides an environment for running the operating system and the computer program stored in the non-volatile storage medium. When the processor 702 executes the computer program, it implements the face feature extraction method of Embodiment 1 above, as follows:

[0106] Construct a face feature extraction network, wherein the face feature extraction network comprises multiple feature extraction blocks;

[0107] Obtain the face image of the u...


© 2025 PatSnap. All rights reserved.