Reading comprehension method based on ELMo embedding and gated self-attention mechanism

A reading comprehension method based on ELMo embedding and a gated self-attention mechanism, applied in the computer field. It addresses problems such as ignoring the polysemy of words, failing to capture long-range contextual information, and not considering context dependencies in long texts, thereby improving the accuracy and performance of the model.


Embodiment Construction

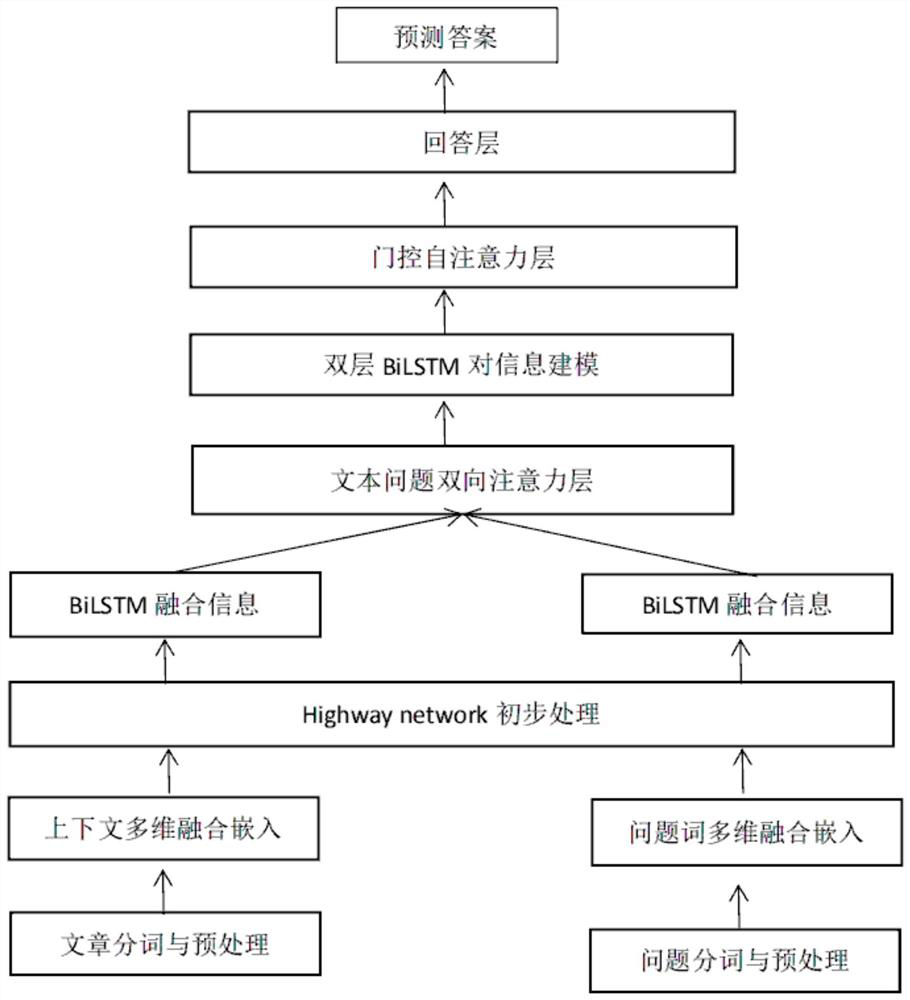

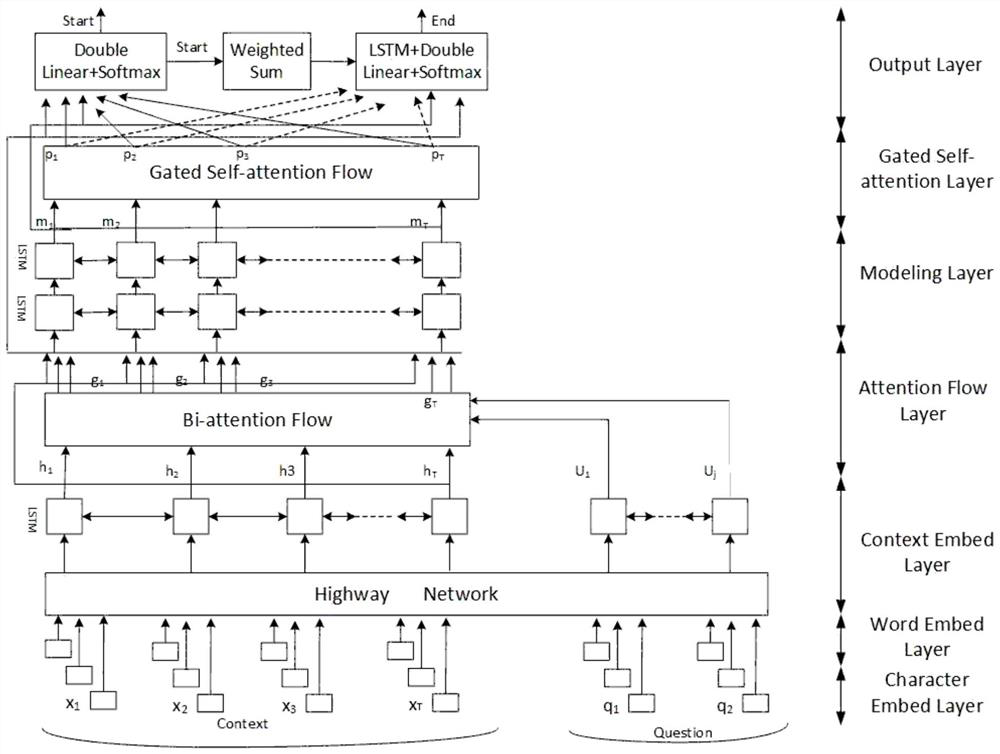

[0053] As shown in Figure 1, a reading comprehension method based on ELMo embedding and a gated self-attention mechanism includes the following steps:

[0054] S1: Tokenize and preprocess the article and the question separately, then build a GloVe word vocabulary and a character table from the words that appear in the tokenized article and question;

[0055] S2: Feed each word into the pre-trained ELMo encoder to obtain its ELMo embedding, which carries contextual information;

[0056] S3: Map each word to its corresponding word vector in the GloVe word vocabulary to obtain its word-level representation;

[0057] S4: For each character of a word, look up its vector in the character table and use the character vectors as the input of a convolutional neural network. The output of the convolutional layer is max-pooled to obtain a fixed-length character embedding representation for each word ...
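Steps S1 and S4 can be illustrated with a minimal sketch in plain Python. This is not the patent's implementation: whitespace splitting stands in for the tokenizer, the character vectors and convolution filters are random rather than learned, bias terms and nonlinearities are omitted, and the names `build_vocabs`, `char_cnn_embed`, `CHAR_DIM`, `WIDTH`, and `NUM_FILTERS` are hypothetical. The ELMo and GloVe lookups of S2 and S3 are not shown because they depend on pre-trained resources.

```python
import random


def build_vocabs(article_tokens, question_tokens):
    """S1 (sketch): collect the words and characters seen in article and question."""
    words = sorted(set(article_tokens) | set(question_tokens))
    chars = sorted({c for w in words for c in w})
    # Index 0 is reserved for padding/unknown entries (an assumption of this sketch).
    word2id = {w: i + 1 for i, w in enumerate(words)}
    char2id = {c: i + 1 for i, c in enumerate(chars)}
    return word2id, char2id


def char_cnn_embed(word, char2id, char_table, filters, width):
    """S4 (sketch): look up character vectors, convolve, max-pool over positions."""
    ids = [char2id.get(c, 0) for c in word]
    # Pad short words so that at least one convolution window exists.
    ids += [0] * max(0, width - len(ids))
    vecs = [char_table[i] for i in ids]
    pooled = []
    for f in filters:  # each filter has shape [width][char_dim]
        responses = []
        for start in range(len(vecs) - width + 1):
            window = vecs[start:start + width]
            responses.append(sum(
                f[k][d] * window[k][d]
                for k in range(width)
                for d in range(len(window[k]))
            ))
        # Max over positions gives one value per filter.
        pooled.append(max(responses))
    return pooled  # length equals the number of filters, whatever the word length


# Illustrative sizes and inputs, all chosen arbitrarily for this sketch.
CHAR_DIM, WIDTH, NUM_FILTERS = 4, 3, 5
rng = random.Random(0)

article = "the bank raised interest rates".split()
question = "what did the bank raise".split()
word2id, char2id = build_vocabs(article, question)

# Row 0 is the all-zero padding/unknown vector; the rest are random stand-ins.
char_table = [[0.0] * CHAR_DIM] + [
    [rng.uniform(-1, 1) for _ in range(CHAR_DIM)] for _ in range(len(char2id))
]
filters = [
    [[rng.uniform(-1, 1) for _ in range(CHAR_DIM)] for _ in range(WIDTH)]
    for _ in range(NUM_FILTERS)
]

short_vec = char_cnn_embed("it", char2id, char_table, filters, WIDTH)
long_vec = char_cnn_embed("interest", char2id, char_table, filters, WIDTH)
print(len(short_vec), len(long_vec))  # both equal NUM_FILTERS
```

Because the maximum is taken over all window positions, a two-letter word and an eight-letter word both yield a vector with one entry per filter, which is what makes the character embedding fixed-length.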
