Manipulator locating, guiding and calibrating method based on machine vision cooperation

A calibration method based on machine vision, applied to manipulators, program-controlled manipulators, manufacturing tools, and similar fields. It addresses the problems of a complex calibration process, the high precision required of calibration blocks, and calibration that is difficult to complete, and it achieves a simple calibration process.


Embodiment Construction

[0020]The present invention will be described in detail below with reference to the drawings.

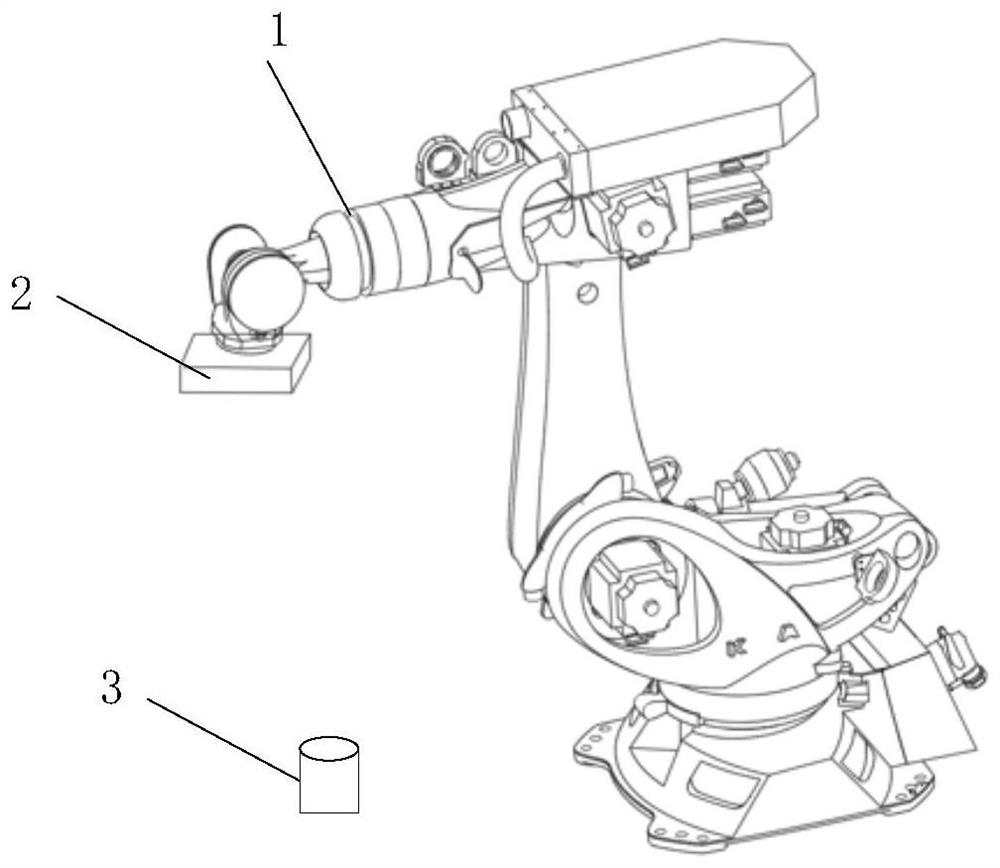

[0021]The present invention relates to a positioning, guidance and calibration method for a vision system. The vision system used includes a controller, a camera with a lens, and a light source.

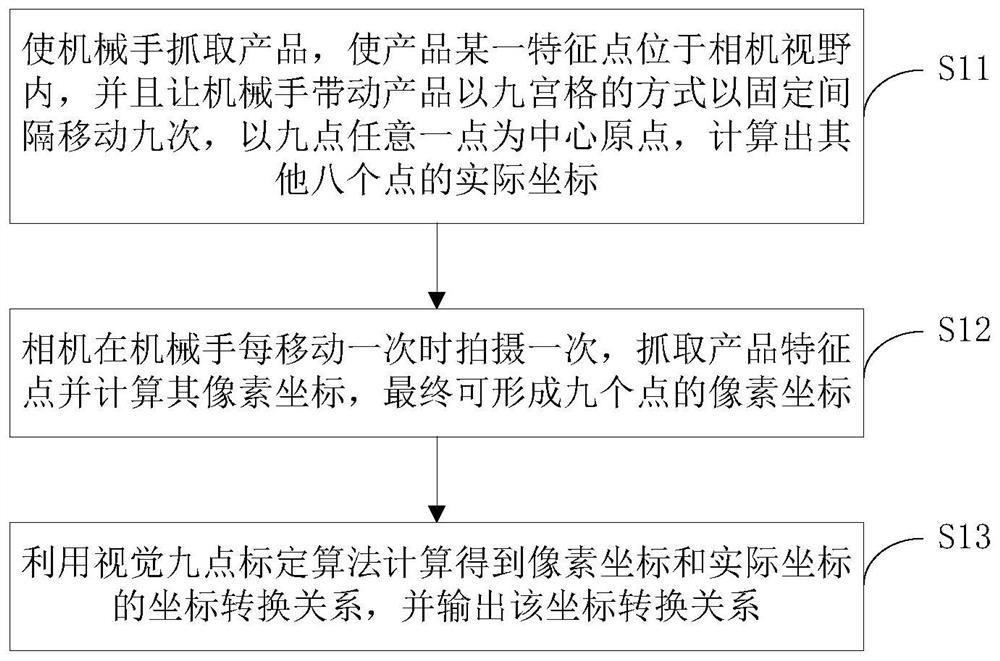

[0022]With reference to Figure 1, the calibration method of the present invention, for a manipulator cooperating with machine vision, includes the following steps:

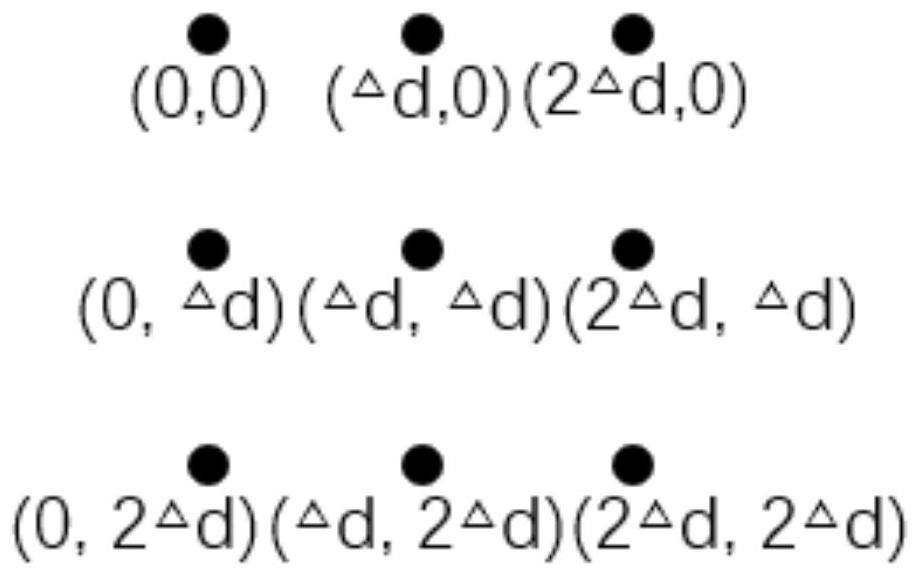

[0023]S11: the robot grips the product so that a feature point of the product lies within the camera's field of view. The robot then moves the product through a nine-point grid pattern with a moving distance of ΔD between points. Taking the center of the nine points as the origin, the actual coordinates of the other eight points are calculated.

[0024]S12: each time the robot moves, the camera captures the product's feature point and the pixel coordinates of that feature point are calculated.
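The grid described in S11 can be sketched in a few lines of Python. This is only an illustration: the function name `nine_point_grid`, the parameter `delta_d` (standing in for ΔD), the row-by-row ordering of the points, and the axis directions are all assumptions, since the source does not specify them.

```python
def nine_point_grid(delta_d):
    """Return nine (x, y) points of a 3x3 grid whose center point is the origin.

    Assumption: points are listed row by row, and neighboring points are
    separated by delta_d along each axis. The source fixes neither the
    ordering nor the axis directions.
    """
    offsets = (-1, 0, 1)
    return [(col * delta_d, row * delta_d) for row in offsets for col in offsets]


if __name__ == "__main__":
    # Example with a hypothetical step of 5.0 (units as used by the robot).
    for point in nine_point_grid(5.0):
        print(point)
```

The center point is the fifth entry of the returned list, and the other eight entries are the actual coordinates that S11 asks to be calculated.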

[0025]S13, using the visual nine-point ...
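The text of S13 is truncated in the source, so the exact computation used by the invention is not shown here. As a hedged illustration only, nine-point calibration is commonly implemented as a least-squares fit of a 2D affine map from the pixel coordinates gathered in S12 to the actual coordinates computed in S11. The sketch below assumes that model; the function names `solve3`, `fit_affine` and `pixel_to_actual` are hypothetical and the code uses only the Python standard library.

```python
def solve3(a, b):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (for example, collinear points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][3] / m[i][i] for i in range(3)]


def fit_affine(pixels, actuals):
    """Least-squares fit of x = a*u + b*v + c and y = d*u + e*v + f.

    pixels:  list of (u, v) pixel coordinates, one per robot move.
    actuals: list of (x, y) actual coordinates in the same order.
    Returns [[a, b, c], [d, e, f]].
    """
    rows = [(u, v, 1.0) for u, v in pixels]
    # Normal equations (A^T A) p = A^T t, solved once for x and once for y.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, actuals)) for i in range(3)]
        coeffs.append(solve3(ata, atb))
    return coeffs


def pixel_to_actual(coeffs, u, v):
    """Map one pixel coordinate to actual coordinates using fitted coefficients."""
    (a, b, c), (d, e, f) = coeffs
    return a * u + b * v + c, d * u + e * v + f
```

Nine point pairs overdetermine the six affine coefficients, which is why a least-squares fit is used rather than an exact solve. An affine model does not correct lens distortion, so this sketch assumes distortion is negligible or handled separately.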
